Photo by Cristian Cristian on Unsplash

In the near future, it may be possible to activate a smart speaker such as the Amazon Echo or Nest Audio, or to query Google Assistant or Siri on Apple devices, without a wake phrase like "Hey Google!" Using AI, researchers in the United States have developed an algorithm that lets smart voice assistants recognize when a person is talking to them.

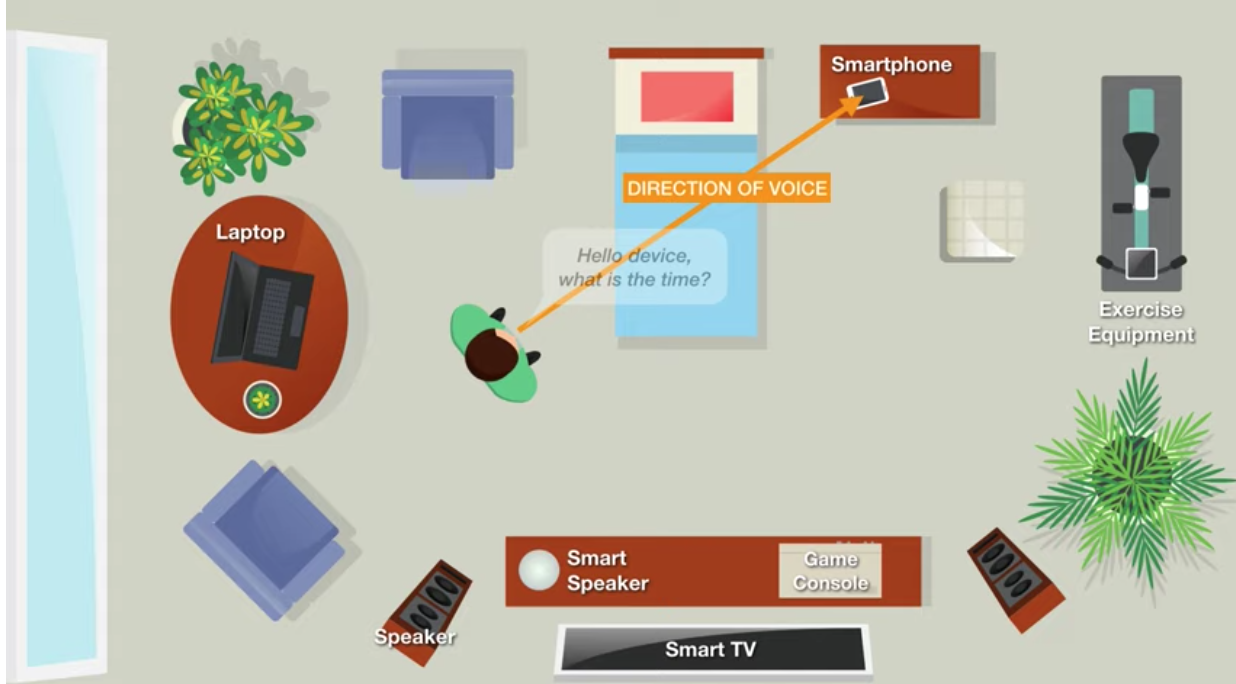

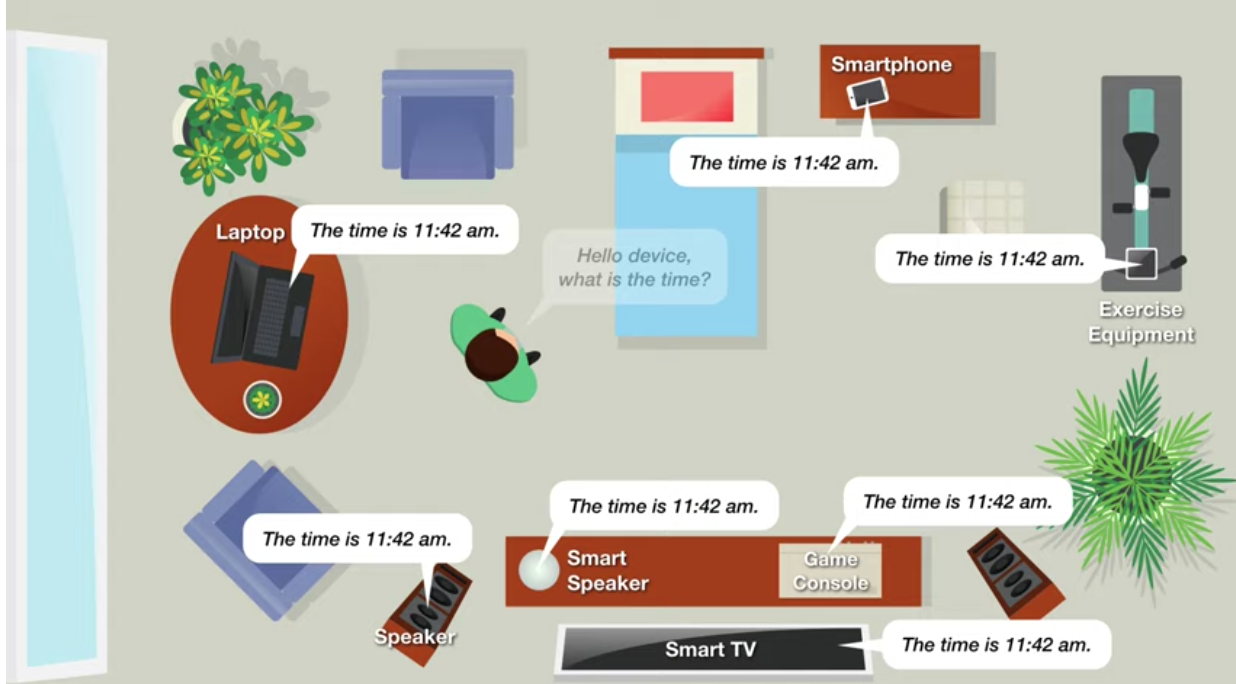

In ordinary conversation, people indicate whom they are addressing simply by looking at that person. Most voice devices, however, are designed to be activated by wake phrases that nobody uses in everyday conversation. If voice assistants understood such non-verbal cues, interacting with them would be easier and more intuitive, especially in homes with several such devices.

According to the Carnegie Mellon University researchers behind the work, their algorithm determines the direction of voice (DoV) using a microphone.

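DoV is not the same as the more familiar direction of arrival (DoA): DoA estimates where a sound source is located relative to the microphone, whereas DoV estimates which way the speaker is facing.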

According to the researchers, DoV makes it possible to address a command to one specific device, much as people make eye contact before starting a conversation, but without using the devices' cameras. This allows natural interaction with several different devices without confusion about which one is being addressed.

The technology could also reduce the number of accidental activations of voice assistants, which are constantly listening on standby.

The approach relies on how speech sound propagates. If the speaker faces the microphone, the captured signal contains both low and high frequencies. If the speaker faces elsewhere, for example toward another device, and the microphone picks up mostly reflected sound, the high frequencies are noticeably weaker relative to the low ones.
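To make the spectral cue concrete, here is a minimal Python sketch that compares the energy above and below a cutoff frequency in a short frame of audio. The function name `band_energy_ratio`, the 2 kHz cutoff, and the naive DFT are illustrative assumptions, not details taken from the study; the sketch only shows why a voice directed away from the microphone would yield a lower high-to-low ratio.

```python
import cmath
import math


def band_energy_ratio(samples, sample_rate, cutoff_hz=2000.0):
    """Return high-band energy divided by low-band energy for one frame.

    Hypothetical feature: cutoff_hz is an illustrative split point, not a value
    from the study. A naive O(n^2) DFT keeps the sketch dependency-free.
    """
    n = len(samples)
    low = high = 0.0
    # For real input, bins 1..n//2 cover the distinct positive frequencies;
    # bin 0 (the DC offset) is skipped.
    for k in range(1, n // 2 + 1):
        freq = k * sample_rate / n
        coeff = sum(
            samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        power = abs(coeff) ** 2
        if freq < cutoff_hz:
            low += power
        else:
            high += power
    return high / low if low > 0 else float("inf")
```

With a synthetic 500 Hz + 4 kHz signal, weakening the 4 kHz component (as reflection off room surfaces might) lowers the ratio, which is the direction of the effect the article describes.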

The algorithm also analyzes how the sound propagates during the first 10 milliseconds. Two scenarios are possible:

- The user is facing the microphone. The signal that reaches the microphone first is clear and stands out from the reflections that arrive later from surfaces in the room.
- The user is facing away from the microphone. The microphone receives mostly reflections, so the sound arrives as several delayed and distorted copies.

The algorithm examines the waveform, finds its intensity peak, compares the peak with the average level, and uses this comparison to decide whether the voice was directed at the microphone.
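The onset comparison can be sketched as a peak-to-average ratio over the first 10 ms of the signal. This sketch assumes `samples` already starts at the speech onset; the function names and the threshold of 3.0 are hypothetical, chosen only to illustrate the idea, and the study's actual features may differ.

```python
def onset_peak_to_mean(samples, sample_rate, window_ms=10.0):
    """Peak absolute amplitude divided by mean absolute amplitude in the first window_ms.

    Assumes samples begin at the speech onset (onset detection is not shown).
    """
    n = max(1, int(sample_rate * window_ms / 1000))
    window = [abs(x) for x in samples[:n]]
    if not window:
        return 0.0
    mean = sum(window) / len(window)
    return max(window) / mean if mean > 0 else 0.0


def voice_directed_at_mic(samples, sample_rate, threshold=3.0):
    """Hypothetical decision rule: a dominant direct-path peak suggests 'facing'.

    The threshold is an illustrative value, not one reported by the researchers.
    """
    return onset_peak_to_mean(samples, sample_rate) >= threshold
```

A single sharp direct-path peak gives a high ratio, while a smeared train of similar-strength reflections keeps the peak close to the average and the ratio near 1.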

By analyzing how the voice propagates, the researchers determined with 93.1% accuracy whether a speaker was facing a particular microphone. They describe this as the best such result to date and an important step toward deploying the solution in existing devices. When the task was instead to identify which of eight angles the person was facing relative to the device, accuracy dropped to 65.4%. For comparison, random guessing would be right 50% of the time in the first task and 12.5% in the second. The eight-angle result is still not good enough for applications built around active interaction with users.

The engineers used Python to collect the data and classified the signals with an Extra-Trees (extremely randomized trees) classifier.
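The article names the classifier but not its configuration. As an illustration only, here is a compact pure-Python sketch of an Extra-Trees ensemble: every tree is trained on the full dataset, split thresholds are drawn at random instead of being optimized, and the ensemble predicts by majority vote. The two input features (a high/low band ratio and an onset peak-to-mean ratio), the toy data, and all parameter values are hypothetical; a real project would more likely use scikit-learn's `ExtraTreesClassifier`.

```python
import math
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def build_tree(X, y, rng, n_candidates):
    """Grow one extremely randomized tree.

    Leaves are class labels (must not be tuples); inner nodes are
    (feature, threshold, left_subtree, right_subtree) tuples.
    """
    if len(set(y)) == 1:
        return y[0]  # pure node -> leaf
    best = None
    for f in rng.sample(range(len(X[0])), n_candidates):
        values = [row[f] for row in X]
        lo, hi = min(values), max(values)
        if lo == hi:
            continue  # feature is constant in this node, cannot split on it
        # Extra-Trees: draw the threshold uniformly at random, no exhaustive search.
        t = rng.uniform(lo, hi)
        left = [i for i, v in enumerate(values) if v < t]
        right = [i for i, v in enumerate(values) if v >= t]
        if not left or not right:
            continue
        # Among the random candidates, keep the split with the lowest weighted impurity.
        score = (len(left) * gini([y[i] for i in left])
                 + len(right) * gini([y[i] for i in right])) / len(y)
        if best is None or score < best[0]:
            best = (score, f, t, left, right)
    if best is None:
        return majority(y)  # no usable split among the candidates
    _, f, t, left, right = best
    return (
        f,
        t,
        build_tree([X[i] for i in left], [y[i] for i in left], rng, n_candidates),
        build_tree([X[i] for i in right], [y[i] for i in right], rng, n_candidates),
    )


def predict_tree(node, x):
    """Walk from the root to a leaf using the same 'x[f] < t goes left' rule."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] < t else right
    return node


class ExtraTrees:
    def __init__(self, n_trees=25, seed=0):
        self.n_trees = n_trees
        self.rng = random.Random(seed)
        self.trees = []

    def fit(self, X, y):
        # Random candidate features per split: sqrt(n_features), at least 1.
        k = max(1, int(math.sqrt(len(X[0]))))
        # Unlike a random forest, every tree sees the full training set (no bootstrap).
        self.trees = [build_tree(X, y, self.rng, k) for _ in range(self.n_trees)]
        return self

    def predict(self, x):
        # Majority vote across all trees.
        return majority([predict_tree(tree, x) for tree in self.trees])
```

Drawing thresholds at random makes each tree weaker on its own but decorrelates the trees, which is why voting over many of them works well; it also makes training cheap because no threshold search is needed.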

The dataset and code from the project are openly available on GitHub and can be used by anyone building their own voice assistant.