TensorFlow, while losing ground in research, is still popular in practical development. One of the strengths that keeps TF afloat is the ability to optimize models for deployment in resource-limited environments. There are dedicated frameworks for this: TensorFlow Lite for mobile devices and TensorFlow Serving for industrial use. There are plenty of tutorials on them on the Web (and on Habr as well). In this article we have collected our experience of optimizing models without these frameworks. We look at several methods and libraries that solve the task, describe how to save disk space and RAM, cover the strengths and weaknesses of each approach, and mention some unexpected effects that we encountered.

Our working conditions

One of the classic NLP tasks is the topic classification of short texts. Classifiers come in many architectures, from classical methods such as SVC to transformer architectures such as BERT and its derivatives. We will look at CNNs, that is, convolutional models.

An important limitation for us is that models have to be trained and used (as part of the product) on machines without a GPU. This primarily affects the speed of training and inference.

Another condition is that classification models are trained and used in sets of several models. A set of models, even simple ones, can consume a lot of resources, especially RAM. We use our own solution for serving models; however, if you need to operate sets of models, take a look at TensorFlow Serving.

We were faced with the need to optimize the model on TF version 1.x, which is now officially considered obsolete. For TF 2.x, many of the techniques discussed are either irrelevant or integrated into the standard API, and therefore the optimization process is quite simple.

Let's take a look at the structure of our model first.

How the TF model works

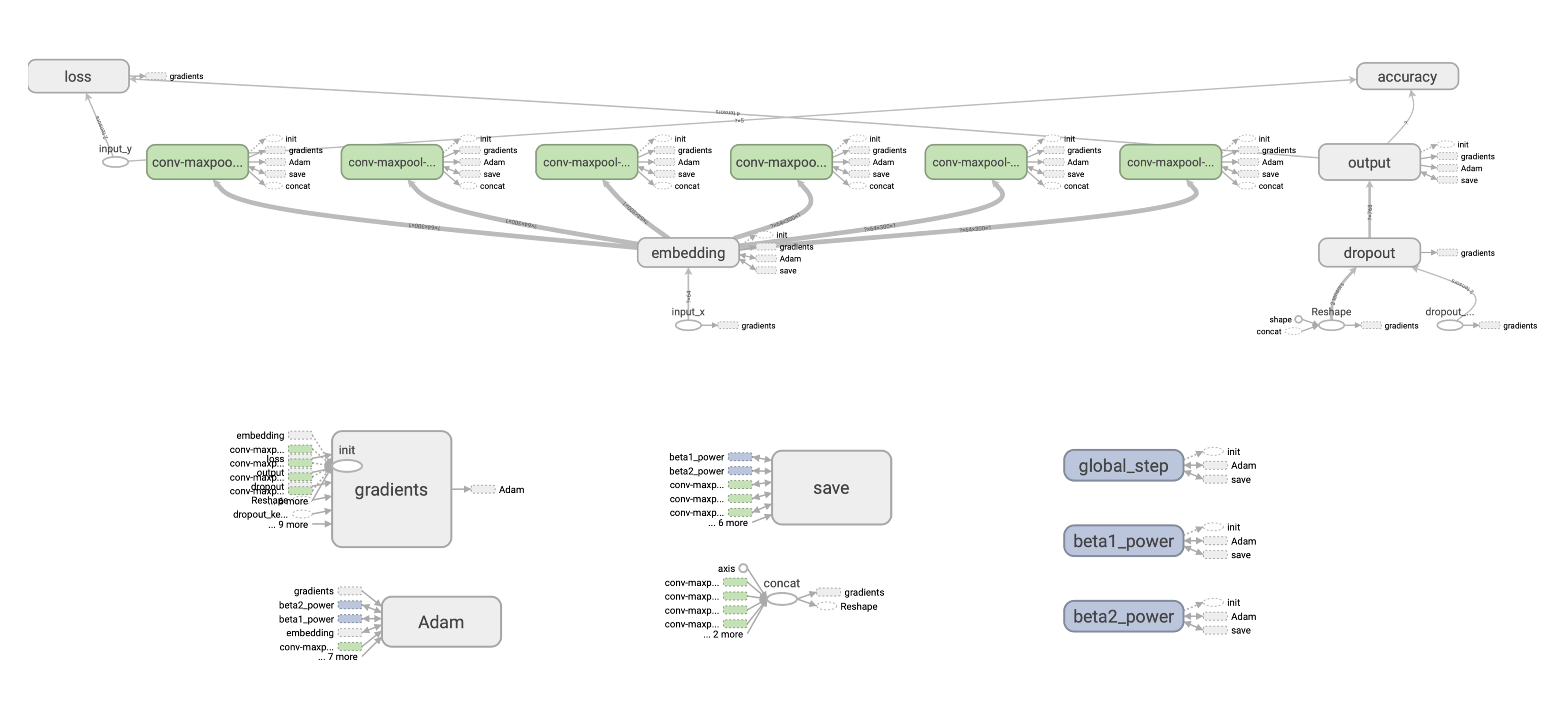

Consider the so-called Shallow CNN - a network with one convolutional layer and several filters. This model has worked well enough for text classification over vector word representations.

For simplicity, we will use a fixed pretrained set of vector representations of dimension v x k, where v is the size of the dictionary and k is the dimension of the embeddings.

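The model consists of the following layers:

- An embedding layer, which maps the words of the input text to their vectors.

- A convolutional layer with filters of size w x k. For example, (1, 1, 2, 3) means 4 filters: two that cover 1 word and one each that cover 2 and 3 words.

- A max-pooling layer.

- A classification layer with dropout and softmax.

The model is trained with the Adam optimizer.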

: .

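For example, a convolutional layer with 128 filters of width w = 2 over embeddings of dimension k = 300 is stored as a tensor of shape [filter_height, filter_width, in_channels, output_channels], that is, 2*300*1*128 = 76800 float32 values, or 76800*(32/8) = 307200 bytes.

What about the embeddings? A dictionary of about 220 thousand words with 300-dimensional vectors takes about 265 MB, so the embedding matrix dominates the size of the model.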
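The size arithmetic above can be checked with a few lines of Python. This is only a sketch: the helper name `tensor_bytes` is hypothetical, and the vocabulary size is taken as exactly 220,000 words, which is a rounding of the figure in the text.

```python
# Size of a dense float32 tensor, computed from its shape.
BYTES_PER_FLOAT32 = 32 // 8


def tensor_bytes(shape, bytes_per_value=BYTES_PER_FLOAT32):
    """Return the number of bytes needed to store a dense tensor."""
    count = 1
    for dim in shape:
        count *= dim
    return count * bytes_per_value


# [filter_height, filter_width, in_channels, output_channels]
conv_shape = (2, 300, 1, 128)
conv_bytes = tensor_bytes(conv_shape)

# Assumption: exactly 220,000 words with 300-dimensional vectors.
embedding_shape = (220_000, 300)
embedding_bytes = tensor_bytes(embedding_shape)

print(conv_bytes)             # 307200
print(embedding_bytes / 1e6)  # 264.0 (MB, decimal)
```

Under these assumptions the embedding matrix is several hundred times larger than a convolutional filter bank, which is why most of the savings discussed later come from the embeddings.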

TF . ( ), , , — ( ), . (). :

. , : SavedModel. , .

Checkpoint

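A checkpoint is written with the Saver API:

saver = tf.train.Saver(save_relative_paths=True)

ckpt_filepath = saver.save(sess, "cnn.ckpt", global_step=0)

The value of global_step is appended to the file name as a suffix, so the checkpoint is named cnn.ckpt-0.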

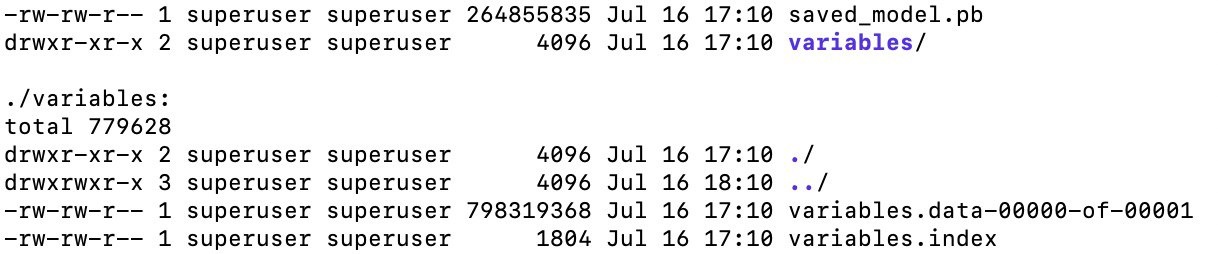

<model_path>/cnn_ckpt :

checkpoint — . , TF . , .

.data , . , — 800 . , (≈265 ). ( ). , .

.index .

.meta — , (, , ), GraphDef, . , . — .meta , ? , TF - embedding-. , , , , , . , , :

with tf.Session() as sess:
    saver = tf.train.import_meta_graph('models/ckpt_model/cnn_ckpt/cnn.ckpt-0.meta')  # load meta
    for n in tf.get_default_graph().as_graph_def().node:
        # n is a NodeDef; its attributes live in the n.attr map
        print(n.name, n.attr['shape'])

SavedModel

A SavedModel is written through the tf.saved_model API. Models saved with tf.saved_model can be used by the other TF tools (TFLite, TensorFlow.js, TensorFlow Serving, TensorFlow Hub).

:

saved_model.pb, , , .meta , (, ), API, ( CLI, ).

SavedModel — , . “” . , , - — , .

, CNN-, TF 1.x, . .

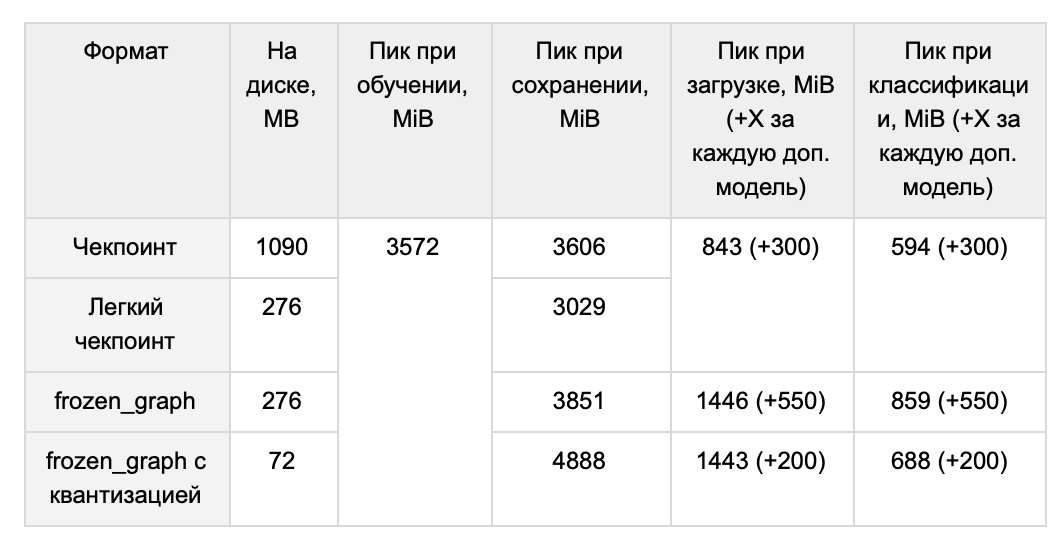

, 1 , :

-

. , , ( tools.optimize_for_inference ). -

. , , — , tf.trainable_variables(). -

, . , (. BERT). -

. , . .

, , . , forward pass, . , . 1 265 .

TF 1.x , .

First we get the GraphDef of the current graph:

graph = tf.get_default_graph()

input_graph_def = graph.as_graph_def()

There are two ways to freeze it: tf.python.tools.freeze_graph and tf.graph_util.convert_variables_to_constants. Both need the names of the output nodes (for example, ['output/predictions']).

output_graph_def = graph_util.convert_variables_to_constants(self.sess, input_graph_def, output_node_names)

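freeze_graph() writes the frozen graph to disk itself. The result of graph_util.convert_variables_to_constants() has to be serialized explicitly:

with tf.io.gfile.GFile('graph.pb', 'wb') as f:
    f.write(output_graph_def.SerializeToString())

The resulting file takes 266 MB. It can be loaded like this: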

# Read the GraphDef
with tf.io.gfile.GFile(graph_filepath, 'rb') as f:
    graph_def = tf.GraphDef()
    graph_def.ParseFromString(f.read())

with tf.Graph().as_default() as graph:
    # Define the inputs
    self.input_x = tf.placeholder(tf.int32, [None, self.properties.max_len], name="input_x")
    self.dropout_keep_prob = tf.placeholder(tf.float32, name="dropout_keep_prob")
    # Import graph_def, mapping its inputs onto the new placeholders
    input_map = {'input_x': self.input_x, 'dropout_keep_prob': self.dropout_keep_prob}
    tf.import_graph_def(graph_def, input_map)

Imported nodes get the prefix import by default:

    self.predictions = graph.get_tensor_by_name('import/output/predictions:0')

Prediction then looks like this:

feed_dict = {self.input_x: encode_sentence(sentence), self.dropout_keep_prob: 1.}

sess.run(self.predictions, feed_dict)

- . ,

sess.run(...). , CPU 20 ms, ~2700 ms. , . SavedModel . - RAM. RAM, . ~265 , . , TF GraphDef .

- – RAM TF . 1.15, TF 1.x, 118 MiB, 1.14 – 3 MiB.

, . ? / TF- tf.train.Saver. , , , :

- MetaGraph

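By default tf.train.Saver saves all variables. To keep only the trainable ones, pass them explicitly:

saver = tf.train.Saver(var_list=tf.trainable_variables())

The MetaGraph can also be left out of the checkpoint. To do that, disable writing the meta file in save:

ckpt_filepath = saver.save(self.sess, filepath, write_meta_graph=False)

This reduces the size on disk from 1014 MB to 265 MB.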

Pruning — , , . , .

, TF 1.x:

- Grappler: ships with tensorflow

- Pruning API: from google-research

- Graph Transform Tool

, — tensorflow, Grappler. Grappler . , set_experimental_options. , zip . , zip , . Grappler .

google-research mask threshold, . . , , mask threshold, , , . .
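The general idea of mask-and-threshold pruning, and why it pairs naturally with zip compression, can be sketched in plain NumPy. This is not Grappler and not the google-research Pruning API: the function `prune_by_magnitude`, the random weights and the 0.99 sparsity level are assumptions made only for illustration.

```python
import zlib

import numpy as np


def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude.

    Returns the pruned weights and the boolean mask of surviving weights.
    """
    threshold = np.quantile(np.abs(weights), sparsity)
    mask = np.abs(weights) > threshold
    pruned = np.where(mask, weights, np.float32(0))
    return pruned, mask


# Hypothetical weight matrix; real weights are not normally distributed noise.
rng = np.random.default_rng(0)
weights = rng.normal(size=(1000, 300)).astype(np.float32)

pruned, mask = prune_by_magnitude(weights, sparsity=0.99)
zero_fraction = 1.0 - mask.mean()

# Pruning does not shrink the dense tensor itself, only its compressed form.
dense_size = len(zlib.compress(weights.tobytes()))
pruned_size = len(zlib.compress(pruned.tobytes()))

print(f"zeroed: {zero_fraction:.3f}")
print(f"compressed bytes, dense: {dense_size}, pruned: {pruned_size}")
```

The dense array takes the same amount of RAM before and after pruning; the gain shows up only once the file is compressed, because long runs of zeros compress well.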

Grappler, . : ? , ? , 0.99 . , mc, hex :

, , . . -, . -, , , , . , .

CNN. .

, . Graph transform tool.

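quantize_weights stores the weights in 8 bits. A Dequantize op converts them back to floating point, so the computations themselves stay in floating point.

quantize_nodes replaces the computations with their 8-bit equivalents.

In our case, quantize_weights reduced the size of the model roughly 4 times.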
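To make the idea of 8-bit weight storage concrete, here is a minimal NumPy sketch of linear min/max quantization. It illustrates the principle only and is not the Graph Transform Tool's implementation; the function names and the random weight matrix are hypothetical.

```python
import numpy as np


def quantize_to_uint8(weights):
    """Map float32 weights linearly onto the 256 levels of uint8."""
    w_min = float(weights.min())
    w_max = float(weights.max())
    scale = (w_max - w_min) / 255.0
    if scale == 0.0:
        scale = 1.0  # all weights are equal; any scale works
    codes = np.clip(np.round((weights - w_min) / scale), 0, 255).astype(np.uint8)
    return codes, w_min, scale


def dequantize(codes, w_min, scale):
    """Restore approximate float32 weights from the 8-bit codes."""
    return (codes.astype(np.float32) * scale + w_min).astype(np.float32)


# Hypothetical weights standing in for a large constant in the graph.
rng = np.random.default_rng(0)
weights = rng.normal(size=(128, 600)).astype(np.float32)

codes, w_min, scale = quantize_to_uint8(weights)
restored = dequantize(codes, w_min, scale)

size_ratio = weights.nbytes / codes.nbytes
max_error = float(np.abs(weights - restored).max())

print(f"size ratio: {size_ratio}")
print(f"max abs error: {max_error:.5f}, quantization step: {scale:.5f}")
```

Each weight is stored in one byte instead of four, and the reconstruction error is bounded by about half a quantization step.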

, , TensorFlow Lite, .

— , . 64 (32) , .

RAM Ubuntu ( numpy int64) . 220 , int32, int16. .

tf-. float16. , , ( 10%), ( 10 ). , , epsilon learning_rate . , , .
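The storage effect of narrower data types is easy to check with NumPy. The sketch below assumes a vocabulary of 220,000 words; the sequence length, batch size and the random embedding rows are hypothetical. It says nothing about how float16 affects training quality.

```python
import numpy as np

VOCAB_SIZE = 220_000  # assumption: rounded vocabulary size
MAX_LEN = 50          # hypothetical sequence length

# Word indices run from 0 to VOCAB_SIZE - 1.
fits_int16 = VOCAB_SIZE - 1 <= np.iinfo(np.int16).max
fits_int32 = VOCAB_SIZE - 1 <= np.iinfo(np.int32).max
print(fits_int16, fits_int32)  # False True

# A batch of encoded sentences stored as int64 versus int32.
batch64 = np.zeros((1000, MAX_LEN), dtype=np.int64)
batch32 = batch64.astype(np.int32)
print(batch64.nbytes, batch32.nbytes)  # 400000 200000

# A slice of an embedding matrix stored as float32 versus float16.
rng = np.random.default_rng(0)
emb32 = rng.normal(size=(1000, 300)).astype(np.float32)
emb16 = emb32.astype(np.float16)
print(emb32.nbytes, emb16.nbytes)  # 1200000 600000
```

With this vocabulary size int16 cannot hold all indices while int32 can, and each step down in width halves the memory taken by the array.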

RAM

, . , .

, . . .

QA-

Q: -, - ?

A: , . word2vec. ( , , min count, learning rate), 220 ( — 265 MB) CNN, 439 (510 MB).

- , , , - . , ( ). , . YouTokenToMe, , , .. , .., . . , , , . 30 (37 MB) , 3.7 CPU 2.6 GPU. ( ), OOV-.

Q: , , ?

A: , .

:

1. :

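# Wrapper added so that the return statement is valid; the function name is illustrative
def load_graph(path_to_pb):
    with tf.gfile.GFile(path_to_pb, 'rb') as f:
        graph_def = tf.GraphDef()
        graph_def.ParseFromString(f.read())
    with tf.Graph().as_default() as graph:
        tf.import_graph_def(graph_def, name='')
    return graph

2. Read the values of the tensors to restore: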

sess.run(restored_variable_names) 3. , .

4. , , :

tf.Variable(tensors_to_restore["output/W:0"], name="W"), .

, , .

We did not try to retrain the models compressed by the rest of the described methods, but theoretically there should not be any problems with this.

Q: Are there other optimization methods that you have not considered?

A: We have several ideas that we never got around to implementing. First, constant folding: the "folding" of a subset of graph nodes, that is, precomputing the values of those parts of the graph that depend only weakly on the input data. Second, for our model, pruning the embeddings looks like a promising option.
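As an illustration of what constant folding means, here is a toy sketch on a tiny expression tree. It is not how TensorFlow implements the optimization; the tuple-based graph representation and the function `fold_constants` are assumptions made for the example.

```python
import operator

# Supported binary operations of the toy graph.
OPS = {"add": operator.add, "mul": operator.mul}


def fold_constants(node):
    """Precompute every subtree that does not depend on an input.

    A node is a number (constant), a string (named input),
    or a tuple (op, left, right).
    """
    if not isinstance(node, tuple):
        return node
    op, left, right = node
    left = fold_constants(left)
    right = fold_constants(right)
    if isinstance(left, (int, float)) and isinstance(right, (int, float)):
        return OPS[op](left, right)  # both operands are known: fold
    return (op, left, right)         # depends on an input: keep the node


# 2 * 3 + x * (1 + 1), where "x" is the only input.
expr = ("add", ("mul", 2, 3), ("mul", "x", ("add", 1, 1)))
folded = fold_constants(expr)
print(folded)  # ('add', 6, ('mul', 'x', 2))
```

The folded graph gives the same result for any input but contains fewer nodes to evaluate at inference time.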