This is our last article this year about the graduation projects of students in our educational programs. This time we present three projects by students of Technopark, Technopolis and Technosphere. They are graduation projects completed after two years of study, and the jury selected them as the best. We would also like to remind you that the programs at Technopark, Technopolis and Technosphere each have their own specialization and differ considerably.

Previous publications: 1, 2, 3, 4, 5.

For the students who defended these projects, this was their first encounter with these technologies. Each project takes one semester; the purpose of this article is to show the educational process and the results of the students' work.

Facepick, Technopolis

A service for searching photos by face.

At mass events such as conferences, holidays, corporate parties and weddings, hundreds if not thousands of photos are taken, and finding the pictures that show you, your friends or your relatives takes a very long time. So the project team decided to build a service for quickly finding photos that contain specified faces.

Using neural networks, the system first recognizes faces in the reference images and then clusters the photo collection by the people it finds. The service can work with external sources: VKontakte, Odnoklassniki, Yandex.Disk and Google Drive.
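The clustering method is not described here. As an illustration only, here is a minimal sketch of greedy threshold clustering over face embeddings, assuming each detected face has already been turned into a feature vector by the neural network. The cosine similarity measure, the 0.8 threshold and the `cluster_faces` helper are hypothetical choices, not Facepick's actual code.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def cluster_faces(faces, threshold=0.8):
    """Greedily group faces into photopacks (hypothetical helper).

    `faces` is a list of (photo_id, embedding) pairs, one per detected face.
    Each face joins the cluster whose first face is most similar to it, if
    that similarity reaches `threshold`; otherwise it starts a new cluster.
    Returns a list of sets of photo ids, one set per person.
    """
    representatives = []  # embedding of the first face in each cluster
    packs = []            # photo ids in each cluster
    for photo_id, embedding in faces:
        best, best_sim = None, threshold
        for i, rep in enumerate(representatives):
            sim = cosine(embedding, rep)
            if sim >= best_sim:
                best, best_sim = i, sim
        if best is None:
            representatives.append(embedding)
            packs.append({photo_id})
        else:
            packs[best].add(photo_id)
    return packs


# Toy 2-D embeddings; real face embeddings have hundreds of dimensions.
faces = [
    ("a.jpg", [1.0, 0.0]),
    ("b.jpg", [0.0, 1.0]),
    ("c.jpg", [0.9, 0.1]),
    ("a.jpg", [0.1, 0.95]),  # a second person in the same group photo
]
print(cluster_faces(faces))
```

Because a group photo contributes one embedding per face, the same photo can end up in several photopacks, which fits the idea of a photopack as "all photos of one person".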

A processed album is a set of photopacks, each containing the photos of one person. On the processed album's page, the user can view the photos in a particular photopack, download it to their device or share it with friends. The processed album can also be searched by uploading a photo of a person.
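Searching by an uploaded photo can be sketched as a nearest-centroid lookup: the query face is embedded, compared with one representative vector per photopack, and the best match above a threshold wins. The `find_photopack` helper, the centroid dictionary and the 0.8 threshold are assumptions for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def find_photopack(query, centroids, threshold=0.8):
    """Return the id of the photopack most similar to `query`, or None.

    `centroids` maps photopack id -> representative embedding (hypothetical
    structure). Matches below `threshold` are treated as "person not found".
    """
    best_id, best_sim = None, threshold
    for pack_id, centroid in centroids.items():
        sim = cosine(query, centroid)
        if sim >= best_sim:
            best_id, best_sim = pack_id, sim
    return best_id


centroids = {"pack-1": [1.0, 0.0], "pack-2": [0.0, 1.0]}
print(find_photopack([0.2, 0.9], centroids))  # pack-2
print(find_photopack([0.7, 0.7], centroids))  # None: no pack is similar enough
```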

The service is a client-server application with a REST API. The server side consists of two main components: a Java application that implements the logic of user interaction with the service, and Python applications that detect faces in photos and extract their distinctive features using a neural network.

The authors focused on scalability: a load balancer distributes requests across backend instances, and because the Java and Python applications communicate through a Redis message queue, the number of instances of each component can be changed independently.
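To illustrate why a queue decouples the two sides, here is a minimal sketch in which Python's `queue.Queue` and threads stand in for the Redis queue and the separate Java and Python services (an assumption made to keep the example self-contained). The producer only knows the queue, so the number of workers can change without touching it; `run_workers` and the task format are hypothetical.

```python
import queue
import threading


def run_workers(tasks, handler, num_workers=3):
    """Process `tasks` with `num_workers` consumers that share one queue.

    The in-process queue stands in for the Redis queue between the Java
    and Python services (assumption for a self-contained example): the
    producer only puts tasks on the queue, so consumers can be added or
    removed without changing it.
    """
    jobs = queue.Queue()
    results = queue.Queue()

    def worker():
        while True:
            task = jobs.get()
            if task is None:  # sentinel: this worker should stop
                break
            results.put(handler(task))

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for task in tasks:  # producer side
        jobs.put(task)
    for _ in threads:   # one stop sentinel per worker
        jobs.put(None)
    for t in threads:
        t.join()

    collected = []
    while not results.empty():
        collected.append(results.get())
    return collected


# Hypothetical "extract features" job: square each number.
print(sorted(run_workers(range(5), lambda x: x * x, num_workers=2)))  # [0, 1, 4, 9, 16]
```

Results arrive in whatever order the workers finish, which is why the example sorts them; the real services would correlate requests and responses by an id instead.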

All services are deployed in separate Docker containers orchestrated with docker-compose. The client side of the application is written in TypeScript and React, and PostgreSQL is used for persistent data storage.

In the future, the graduates want to improve recognition accuracy, add filtering by gender and age, and support Facebook and Google Photos. They also have ideas for monetizing the service by limiting free functionality and introducing advertising.

Project team: Vadim Dyachkov, Egor Shakhmin, Nikolay Rubtsov.

Video of the project defense.

Playmakers, Technopark

A hardware and software solution for logging workouts.

All members of the project team happen to be keen on sports. Watching people at the gym log their workouts and progress, the students wondered whether this process could be improved. After research and surveys, the team concluded that existing workout apps have an overly complex UX, and that the wearables on the market work well mainly for cardio workouts (running, elliptical trainers, etc.). As a result, they came up with the following scheme of work:

The team built its own device, because integrating with existing solutions (for example, MiBand) turned out to be very laborious, and with watches and bracelets the developers were not satisfied with the placement on the wrist, which gives less information about movement patterns.

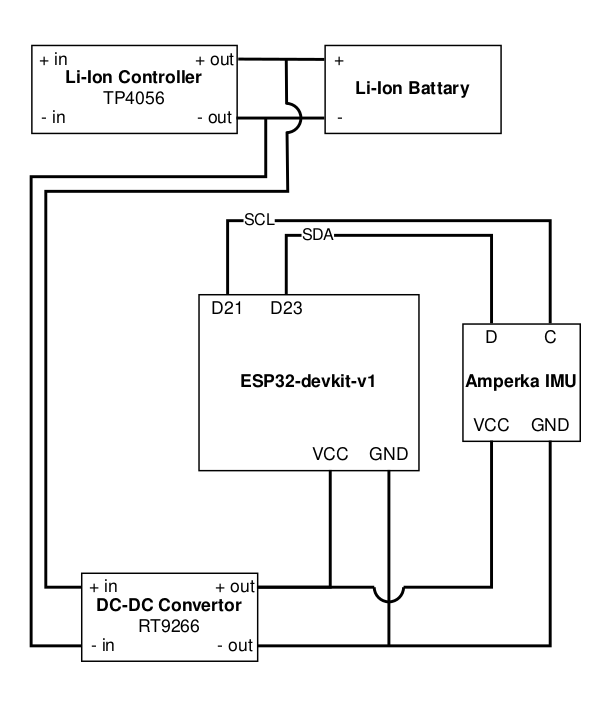

The team chose the ESP32-WROOM module on an ESP32-devkit-v1 board. It met the project's requirements, Python utilities for code generation and flashing firmware already exist for it, and it can also be programmed from the Arduino IDE like any Arduino board. The Amperka IMU module, which includes an accelerometer and a gyroscope, was chosen as the sensor. All communication with the sensors uses the I2C protocol.
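Reading a sensor over I2C ultimately yields raw register bytes that have to be converted into physical units. A minimal sketch of that conversion, assuming the three axes arrive as consecutive 16-bit little-endian two's-complement values. The register layout and the `sensitivity` value depend on the actual chips on the Amperka module and their configured range, so both are assumptions here, as is the `decode_xyz` name.

```python
import struct


def decode_xyz(raw, sensitivity):
    """Convert one 6-byte X/Y/Z register read into physical units.

    Assumes each axis is a 16-bit little-endian two's-complement integer and
    that `sensitivity` is raw counts per unit (e.g. per g). Both depend on
    the actual sensor and its configured range.
    """
    if len(raw) != 6:
        raise ValueError("expected 6 bytes: X, Y and Z as int16")
    x, y, z = struct.unpack("<hhh", raw)
    return (x / sensitivity, y / sensitivity, z / sensitivity)


# Hypothetical read: X = 0x4000, Y = 0xC000 (negative), Z = 0 with 16384 counts per g.
print(decode_xyz(bytes([0x00, 0x40, 0x00, 0xC0, 0x00, 0x00]), 16384))  # (1.0, -1.0, 0.0)
```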

Prototype diagram:

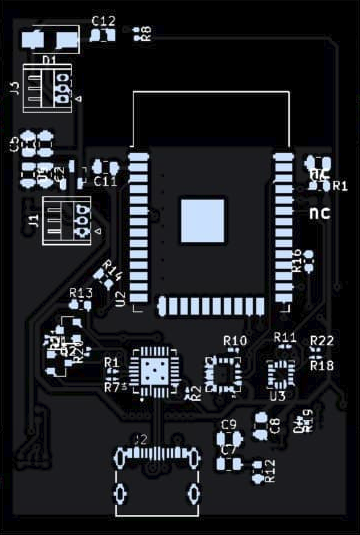

For the next version of the device, the team ordered PCB fabrication and component soldering from China.

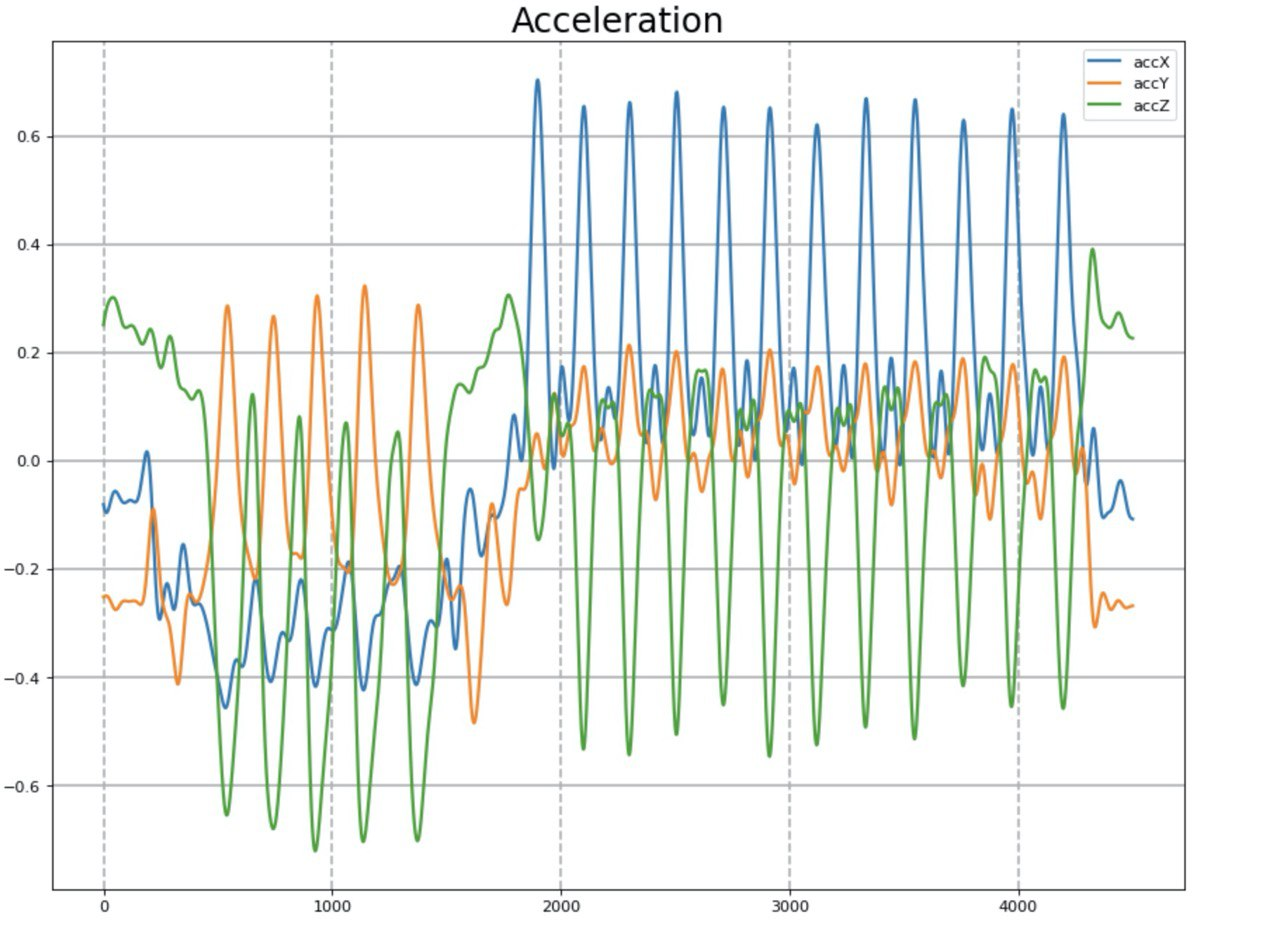

Next, the neural network had to be trained to recognize the various exercises. However, few open datasets contain accelerometer and gyroscope time series recorded during physical activity, and most of those cover only running, walking and the like. So the team decided to collect its own training set. They chose three basic exercises that require no special equipment: push-ups, squats and crunches.

Accelerometer data after filtering.

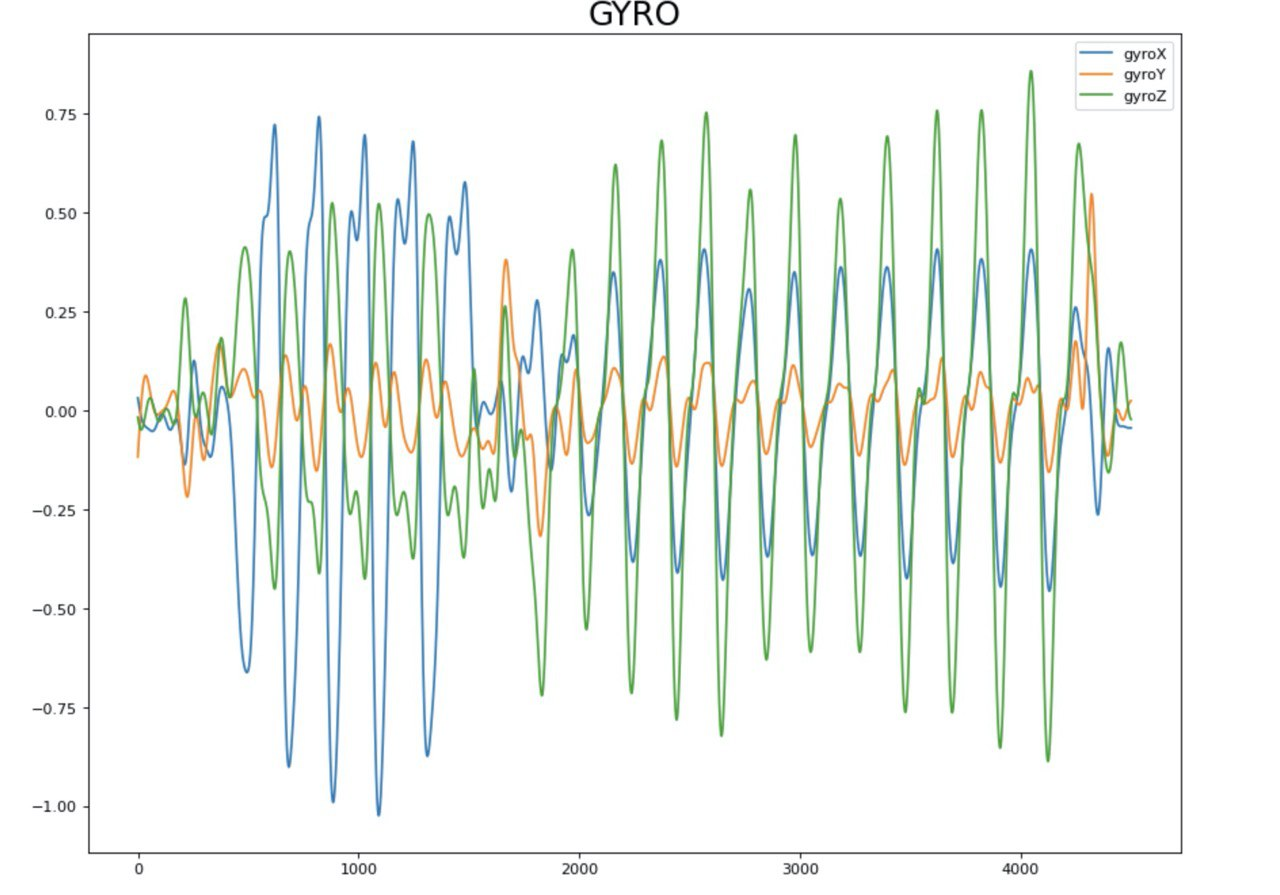

Gyroscope data after filtering.

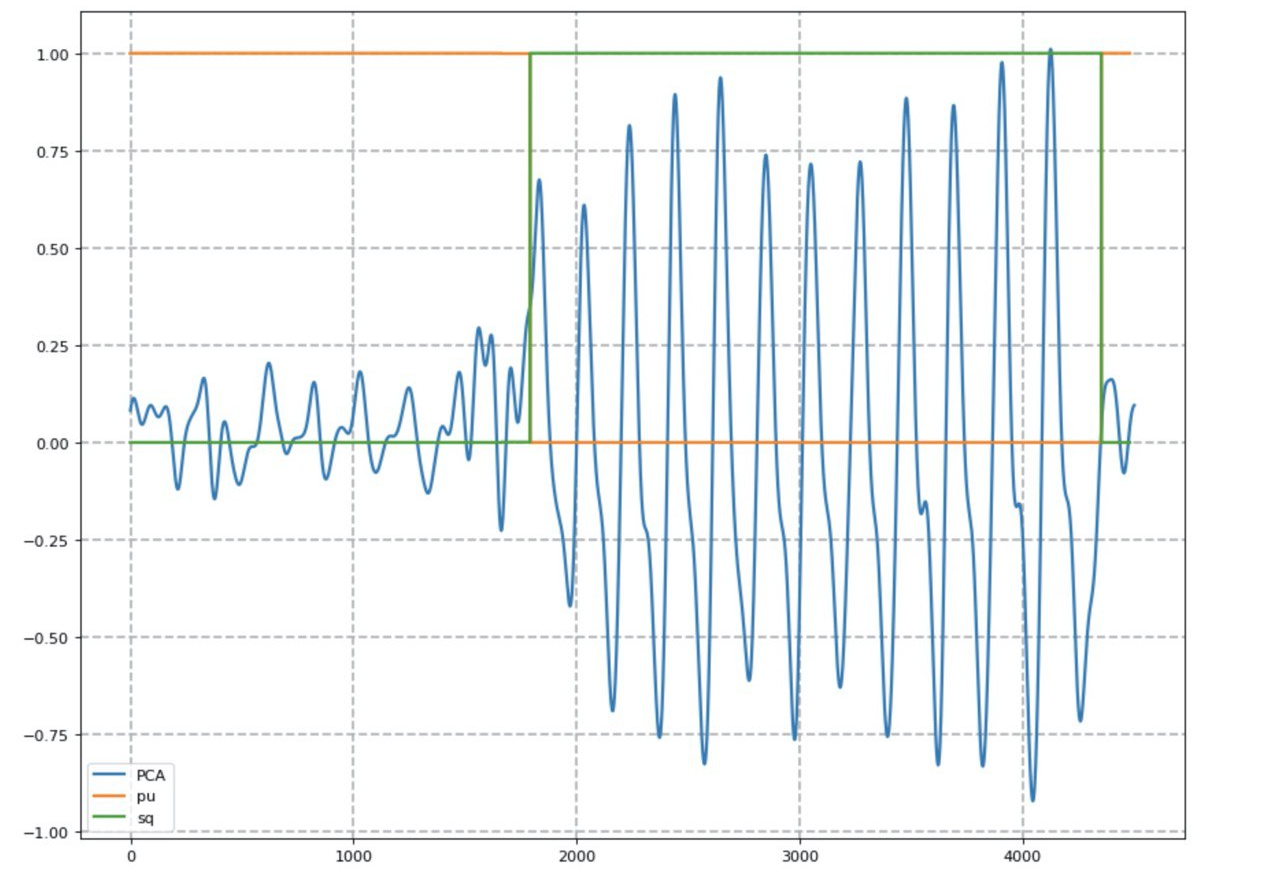
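The filter applied to these plots is not specified. One common choice for smoothing IMU signals is a first-order low-pass filter (an exponential moving average), applied to each axis separately; the sketch below assumes that filter and a hypothetical smoothing factor `alpha`.

```python
def low_pass(samples, alpha=0.2):
    """First-order low-pass filter (exponential moving average).

    y[0] = x[0];  y[n] = alpha * x[n] + (1 - alpha) * y[n - 1]
    A smaller `alpha` smooths more strongly but reacts more slowly.
    """
    filtered = []
    prev = None
    for x in samples:
        prev = x if prev is None else alpha * x + (1 - alpha) * prev
        filtered.append(prev)
    return filtered


print(low_pass([0.0, 10.0, 10.0, 10.0], alpha=0.5))  # [0.0, 5.0, 7.5, 8.75]
```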

Exercises are classified by a recurrent neural network with an LSTM architecture. Principal component analysis (PCA) was used to make the time series easier to visualize.
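PCA itself is short to write down: center each channel, build the covariance matrix and project onto its leading eigenvectors. The sketch below does this in pure Python with power iteration, assuming each row is one time step of the six IMU channels (three accelerometer and three gyroscope axes). In practice a library such as NumPy or scikit-learn would be used, and `pca_project` is a hypothetical helper.

```python
import math
import random


def pca_project(rows, k=2, iters=200, seed=0):
    """Project `rows` onto their top-k principal components.

    Centers each column, builds the sample covariance matrix, then finds the
    leading eigenvectors by power iteration, deflating after each one.
    """
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]

    rng = random.Random(seed)
    components = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(d)]  # positive random start
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break  # no variance left in the remaining directions
            v = [x / norm for x in w]
        # Rayleigh quotient: eigenvalue (variance) along v
        lam = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
        components.append(v)
        # Deflation: remove this component before searching for the next one
        cov = [[cov[i][j] - lam * v[i] * v[j] for j in range(d)]
               for i in range(d)]

    return [[sum(c[j] * comp[j] for j in range(d)) for comp in components]
            for c in centered]


# Toy 2-channel data with more spread along the first axis than the second.
rows = [[x, y] for x in (-2.0, 2.0) for y in (-1.0, 1.0)]
print([[round(a, 6), round(b, 6)] for a, b in pca_project(rows)])
```

Plotting the first two projected coordinates over time gives a two-dimensional view of a multi-channel recording.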

Output of the neural network (orange line: probability of push-ups; green line: probability of squats).

Repetitions are counted by finding local maxima, taking the baseline signal level into account. Once the results were satisfactory, the team moved on to the mobile application. One requirement was an interface that needs minimal interaction with the smartphone. MVP (Model-View-Presenter) was chosen as the design pattern.
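The repetition-counting step described above can be sketched as follows, assuming the baseline is the median of the filtered signal and that a peak must rise at least `margin` above it to count. Both the baseline estimate and the `margin` parameter are assumptions, not the team's published method.

```python
from statistics import median


def count_reps(signal, margin):
    """Count repetitions as local maxima that rise at least `margin` above
    the baseline, estimated here as the median of the signal.

    A point is a peak if it is higher than its left neighbour and not lower
    than its right neighbour, so a flat-topped peak is counted once.
    """
    if len(signal) < 3:
        return 0
    baseline = median(signal)
    reps = 0
    for i in range(1, len(signal) - 1):
        is_peak = signal[i] > signal[i - 1] and signal[i] >= signal[i + 1]
        if is_peak and signal[i] - baseline >= margin:
            reps += 1
    return reps


# Three strong peaks (5, 6, 4) and one small bump (0.5) that is ignored.
signal = [0, 1, 5, 1, 0, 0.5, 0, 6, 2, 0, 4, 0]
print(count_reps(signal, margin=2))  # 3
```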

Application interface:

In addition to the client application, the authors developed Batcher, a utility that simplified recording and labeling data for training the neural network:

The authors also wrote a mechanism for validating batches after they are saved to the database.
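What such a validation might check is sketched below. The `Batch` structure, the label names, the six-channel layout and the minimum length are all hypothetical; the idea is that each saved batch is checked for a known label, consistent lengths, strictly increasing timestamps and complete samples before it is used for training.

```python
from dataclasses import dataclass

ALLOWED_LABELS = {"push-up", "squat", "crunch"}  # hypothetical label names
CHANNELS = 6  # assumption: 3 accelerometer + 3 gyroscope axes per sample


@dataclass
class Batch:
    """A hypothetical labeled recording as Batcher might save it."""
    label: str
    timestamps: list  # sample times in milliseconds
    samples: list     # one list of CHANNELS floats per timestamp


def validate_batch(batch, min_samples=50):
    """Return a list of problems found in a saved batch; empty means valid."""
    errors = []
    if batch.label not in ALLOWED_LABELS:
        errors.append(f"unknown label {batch.label!r}")
    if len(batch.timestamps) != len(batch.samples):
        errors.append("timestamps and samples differ in length")
    if len(batch.samples) < min_samples:
        errors.append(f"too few samples: {len(batch.samples)} < {min_samples}")
    if any(b <= a for a, b in zip(batch.timestamps, batch.timestamps[1:])):
        errors.append("timestamps are not strictly increasing")
    if any(len(s) != CHANNELS for s in batch.samples):
        errors.append(f"every sample must have {CHANNELS} channels")
    return errors


good = Batch("squat", list(range(0, 600, 10)), [[0.0] * CHANNELS for _ in range(60)])
bad = Batch("jump", [0, 10, 10], [[0.0] * CHANNELS] * 3)
print(validate_batch(good))  # []
print(validate_batch(bad))   # three problems: label, length, timestamps
```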

Time series are stored in InfluxDB, a database designed for this kind of task. The machine learning service uses a standard Python stack with Django and Celery; the task queue lets classification run asynchronously without blocking the main application interface.
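InfluxDB accepts writes in its text-based line protocol: `measurement,tag=value field=value timestamp`. A minimal sketch of formatting one IMU sample that way; the measurement, tag and field names are hypothetical, only float fields are handled, and backslash and string-field escaping are left out for brevity.

```python
def escape_key(text):
    """Escape commas, equals signs and spaces, as line protocol requires for
    tag keys, tag values and field keys."""
    return text.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def to_line(measurement, tags, fields, timestamp_ns):
    """Format one point in InfluxDB line protocol (float fields only).

    Result shape: measurement,tag1=a,tag2=b field1=1.0,field2=2.0 <timestamp>
    Tags are sorted by key, which InfluxDB recommends for write performance.
    """
    name = measurement.replace(",", r"\,").replace(" ", r"\ ")
    tag_part = "".join(f",{escape_key(k)}={escape_key(v)}"
                       for k, v in sorted(tags.items()))
    field_part = ",".join(f"{escape_key(k)}={float(v)!r}"
                          for k, v in fields.items())
    return f"{name}{tag_part} {field_part} {timestamp_ns}"


# Hypothetical names: one accelerometer sample for user 42, timestamp in nanoseconds.
print(to_line("imu", {"user": "42", "device": "esp32"},
              {"ax": 0.12, "ay": -0.98, "az": 0.05}, 1700000000000000000))
# imu,device=esp32,user=42 ax=0.12,ay=-0.98,az=0.05 1700000000000000000
```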

User data is stored in Postgres, and the application backend itself is written in Go using the Gin framework.

General architecture.

As a result, the team achieved its goals and developed an MVP version of the product that lets users log a workout with a single button. The students are now working to reduce the cost and size of the device, improve the accuracy of the neural network and expand the set of supported exercises.

Project team: Oleg Soloviev, Temirlan Rakhimgaliev, Vladimir Elfimov, Anton Martynov.

Video of the project defense.

GestureApp, Technosphere

A framework for touchless interfaces.

Sometimes familiar touch interfaces are inconvenient or undesirable to use: for example, when you are driving a car, using an ATM or payment terminal, or when your hands are simply dirty. To solve this problem, the authors created a framework for touchless interfaces that lets users control an application with gestures.

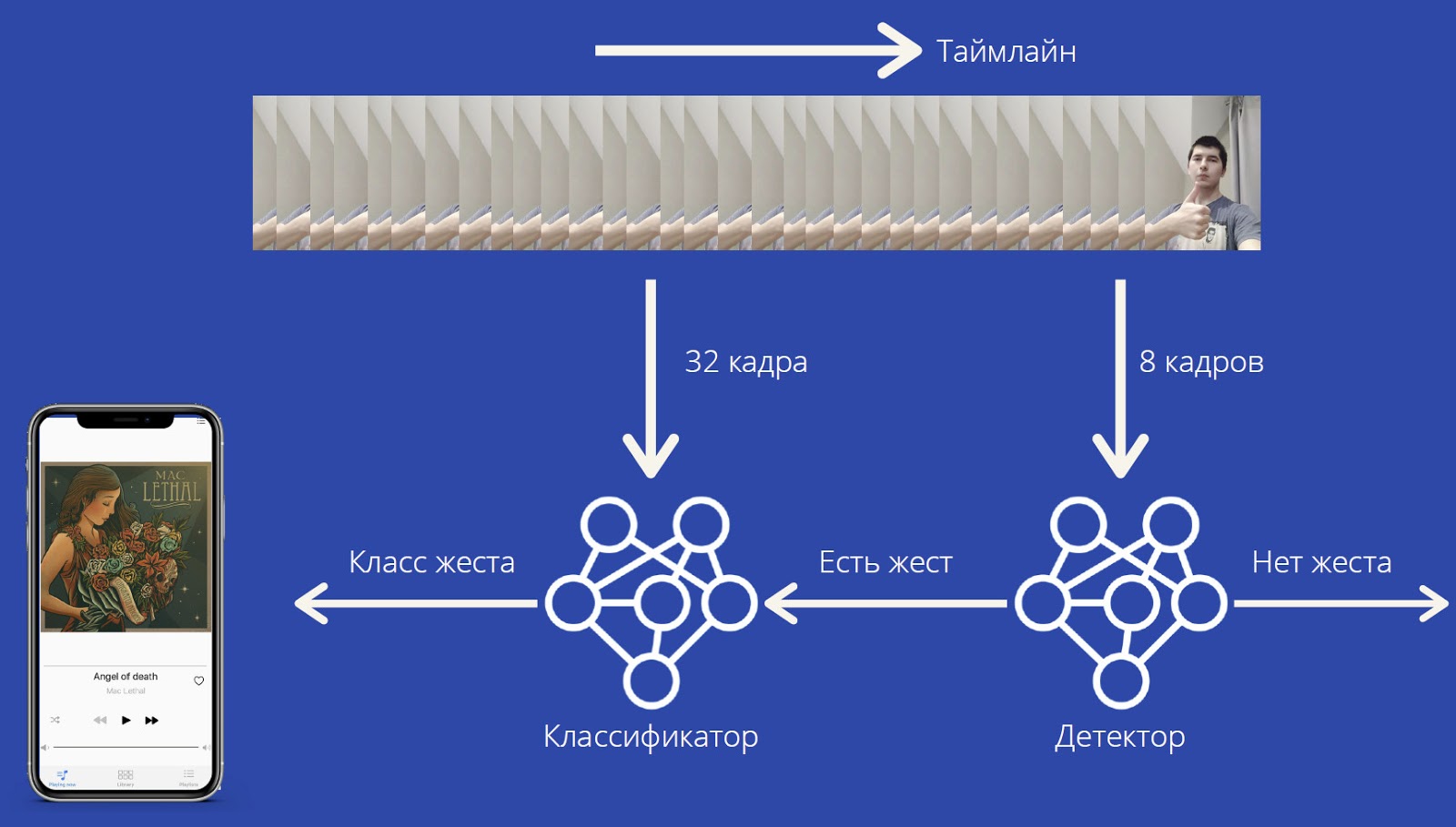

GestureApp needs a video camera whose feed is processed in real time to recognize the user's gestures. Depending on the recognized gesture, the framework sends the corresponding command to the application.

The computational load is relatively low, so the framework can run on fairly weak devices and does not require special hardware.

Gestures are recognized by a MobileNet3D neural network, which outputs probabilities for each gesture class as well as for a special "no gesture" class. This architecture can recognize both static and dynamic gestures. The network was trained on the Jester dataset and achieves an F1 score of 0.92.
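How class probabilities might become application commands can be sketched like this: take the most probable class, ignore it if it is "no gesture" or below a confidence threshold, and otherwise look up the command. The class names, the `COMMANDS` mapping and the 0.7 threshold are hypothetical.

```python
NO_GESTURE = "no gesture"

# Hypothetical mapping from gesture classes to application commands.
COMMANDS = {
    "swiping left": "previous_page",
    "swiping right": "next_page",
    "thumb up": "confirm",
}


def to_command(probs, threshold=0.7):
    """Turn per-class probabilities into a command, or None.

    Takes the most probable class; returns None if it is the "no gesture"
    class, if its probability is below `threshold`, or if no command is
    mapped to it.
    """
    label = max(probs, key=probs.get)
    if label == NO_GESTURE or probs[label] < threshold:
        return None
    return COMMANDS.get(label)


print(to_command({"swiping left": 0.85, "thumb up": 0.05, "no gesture": 0.10}))  # previous_page
print(to_command({"swiping left": 0.30, "no gesture": 0.70}))  # None
```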

The capture thread receives frames from the front camera and appends them to the end of a list. When the list holds more than 32 frames, the capture thread wakes up the model execution thread, which takes 32 frames from the beginning of the list, predicts the classes and then removes elements from the end until only one element remains. This avoids heavy synchronization and significantly improves performance: 20 FPS on the iPhone 11, 18 FPS on the iPhone XS Max and 15 FPS on the iPhone XR. A carefully designed pre-processing and post-processing pipeline also keeps energy consumption to a minimum.
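The interplay between the capture and model threads can be sketched as a shared buffer guarded by a condition variable, written in Python rather than Swift. The sketch notifies the model thread once the buffer holds more than 32 frames, returns the oldest 32 for inference and afterwards trims the buffer down to a single frame. It assumes trimming keeps the newest frame (dropping from the front), so frames that arrive during inference are skipped rather than queued up; the class and method names are hypothetical.

```python
import threading
from collections import deque


class FrameBuffer:
    """Frames shared by a capture thread (producer) and a model thread (consumer)."""

    def __init__(self, window=32):
        self.window = window
        self._frames = deque()
        self._cond = threading.Condition()

    def __len__(self):
        with self._cond:
            return len(self._frames)

    def push(self, frame):
        """Called by the capture thread for every camera frame."""
        with self._cond:
            self._frames.append(frame)  # newest frame goes to the end
            if len(self._frames) > self.window:
                self._cond.notify()     # wake the model thread

    def take_window(self, timeout=None):
        """Wait until more than `window` frames are buffered and return the
        oldest `window` of them without removing them; None on timeout."""
        with self._cond:
            ready = self._cond.wait_for(
                lambda: len(self._frames) > self.window, timeout)
            if not ready:
                return None
            return [self._frames[i] for i in range(self.window)]

    def drop_stale(self):
        """After inference, discard all but the newest frame (assumption:
        trimming from the front, so frames captured during inference are skipped)."""
        with self._cond:
            while len(self._frames) > 1:
                self._frames.popleft()


# Single-threaded walk-through; in an app, push() runs on the capture thread.
buf = FrameBuffer(window=32)
for i in range(40):
    buf.push(i)
window = buf.take_window(timeout=0.1)
print(window[0], window[-1], len(window))  # 0 31 32
buf.drop_stale()
print(len(buf))  # 1
```

In an app, a camera callback would call `push()` for each frame, while a model thread loops over `take_window()`, runs the network and then calls `drop_stale()`.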

So far, the framework works only on iOS and Windows. It was developed with PyTorch, the TwentyBN platform and Swift.

Future plans include improving recognition quality, adding new gestures without retraining all the models, creating an Android version, and supporting slider gestures in addition to button gestures.

Project team: Maxim Matyushin, Boris Konstantinovsky, Miroslav Morozov.

Video of the project defense.

You can read more about our educational projects at this link. Also visit the Technostream channel more often: new educational videos about programming, development and other subjects appear there regularly.