I decided to help those who are busy choosing a static site generator (SSG). A colleague of mine has put together a list of questions to help you find the right one, along with a summary of popular SSGs. The only thing missing is an assessment of how different SSGs perform in practice.

All SSGs work the same way at their core. They take data as input and pass it through a template engine, which outputs HTML files. This process is commonly referred to as building the project.
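Here is a deliberately tiny Python sketch of that data-to-template-to-HTML pipeline. It does not show how any of the SSGs discussed here work internally. The `parse`, `render` and `build` names are hypothetical. The front matter parser only handles flat `key: value` lines, and the "Markdown" conversion simply wraps each paragraph in a `<p>` tag. A real generator uses a full Markdown parser and template engine.

```python
import html
import re
from string import Template

# A front matter block delimited by "---" lines at the very top of the file.
FRONT_MATTER = re.compile(r"\A---\n(.*?)\n---\n(.*)\Z", re.DOTALL)

# The "template engine" step: one page layout with two placeholders.
PAGE = Template(
    "<!DOCTYPE html>\n<html><head><title>$title</title></head>\n"
    "<body><h1>$title</h1>\n$body</body></html>\n"
)


def parse(source: str) -> tuple[dict[str, str], str]:
    """Split a document into (front matter fields, body text)."""
    match = FRONT_MATTER.match(source)
    if not match:
        return {}, source
    meta = {}
    for line in match.group(1).splitlines():
        key, _, value = line.partition(":")
        meta[key.strip()] = value.strip()
    return meta, match.group(2)


def render(source: str) -> str:
    """Data in, HTML out: turn one document into one page."""
    meta, body = parse(source)
    # Toy Markdown: each blank-line-separated block becomes a <p> element.
    blocks = [b.strip() for b in body.split("\n\n") if b.strip()]
    html_body = "".join(f"<p>{html.escape(b)}</p>\n" for b in blocks)
    title = html.escape(meta.get("title", "Untitled"))
    return PAGE.substitute(title=title, body=html_body)


def build(sources: dict[str, str]) -> dict[str, str]:
    """'Build the project': map every source file name to its rendered page."""
    return {name.rsplit(".", 1)[0] + ".html": render(text) for name, text in sources.items()}


if __name__ == "__main__":
    print(build({"hello.md": "---\ntitle: Hello\n---\nFirst paragraph.\n\nSecond one.\n"}))
```

Every benchmarked generator performs some elaborate version of this loop. Build time is what it costs to run the loop over the whole content set, plus whatever the tool needs to start up.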

Getting comparable performance data for different SSGs means paying attention to many details, such as the characteristics of each project and the factors that slow down or speed up the build. This is where our exploration of the performance of popular SSGs begins.

That said, our goal is not simply to find the fastest SSG. Hugo has already earned a reputation as "the fastest": the project's website calls it the world's fastest framework for building websites. That is probably true.

This article compares the performance of popular SSGs, specifically their project build times. The more important part, though, is a deeper analysis of why each tool produces the results it does. Picking the "fastest SSG", or immediately dismissing the "slowest" one, would be a mistake if build time were the only thing you considered. Let's talk about why.

Tests

The testing process starts by examining a few popular SSGs and how they handle simple data formats. This provides a foundation for extending the study to more static site generators and more complex data formats later. The study currently covers six popular SSGs: Eleventy, Gatsby, Hugo, Jekyll, Next, and Nuxt.

Each of them is tested with the same approach and under the same conditions:

- The data source for each test (one project build) is a set of Markdown files. Each file contains a randomly generated title in its front matter and a body of three paragraphs of text.

- There are no images in the documents.

- The tests are run multiple times on the same computer. The absolute values depend on that machine, so the comparison of relative results matters more than the specific numbers.

- The output is plain text on an HTML page. Each SSG runs with the default setup described in its getting-started guide.

- Each test is a "cold" run: caches are cleared and the Markdown files are regenerated for every test.
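Test data of the kind described in this list could be produced with something like the following Python sketch. It is not the generator actually used in the study. The word list, the `post-000000.md` file naming and the `generate` function are all hypothetical. Old files are deleted before new ones are written, so each run starts from fresh data, in keeping with the cold builds this study measures.

```python
import random
import tempfile
from pathlib import Path

# Hypothetical filler vocabulary for random titles and paragraphs.
WORDS = ("lorem", "ipsum", "dolor", "sit", "amet", "consectetur", "adipiscing", "elit")


def sentence(rng: random.Random, length: int) -> str:
    """A capitalized sentence of `length` random words ending in a period."""
    return " ".join(rng.choice(WORDS) for _ in range(length)).capitalize() + "."


def generate(directory: Path, count: int, seed: int = 0) -> list[Path]:
    """Write `count` Markdown files, each with a random title and three paragraphs."""
    rng = random.Random(seed)
    directory.mkdir(parents=True, exist_ok=True)
    # Remove files from a previous run so every test starts from fresh data.
    for old in directory.glob("*.md"):
        old.unlink()
    paths = []
    for i in range(count):
        title = sentence(rng, 4).rstrip(".")
        paragraphs = "\n\n".join(
            " ".join(sentence(rng, rng.randint(6, 12)) for _ in range(3))
            for _ in range(3)
        )
        path = directory / f"post-{i:06d}.md"
        path.write_text(f"---\ntitle: {title}\n---\n\n{paragraphs}\n", encoding="utf-8")
        paths.append(path)
    return paths


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        files = generate(Path(tmp) / "content", 3)
        print(files[0].read_text(encoding="utf-8"))
```

A fixed `seed` makes the content reproducible between runs. This matters when the same dataset size is built ten times per generator.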

These tests are benchmarks. They use simple Markdown files and produce unstyled HTML.

In other words, the output is technically a website that could be deployed to production, but it does not reflect a realistic SSG use case. We are not trying to reproduce a real-world situation. The goal is a baseline for comparing the frameworks under study. When these tools are used to build real sites, they work with more complex data and different settings, and both affect build time (usually by slowing the build down).

One difference between our tests and real-world use is that we measure cold builds. In practice, things are a little different. Suppose a project uses 10,000 Markdown files as its data source and is built with Gatsby. In that case Gatsby's cache will be used, and it cuts the build time substantially (by almost half).

The same applies to incremental builds. They relate to the hot-versus-cold comparison because an incremental build processes only the files that have changed. These tests do not cover incremental builds, although the study may well be extended in that direction in the future.
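The core idea of an incremental build fits in a small change-detection step. Hash each source file, compare the hashes with those recorded by the previous build, and rebuild only the files that differ. The Python sketch below shows this generic technique only. It does not describe how Gatsby or any other SSG implements incremental builds, and `changed_files` and its JSON manifest are hypothetical.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def digest(path: Path) -> str:
    """SHA-256 of a file's bytes, used to detect content changes."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def changed_files(sources: list[Path], manifest: Path) -> list[Path]:
    """Return sources that are new or modified since the last build, then update the manifest.

    With no manifest (a cold build), every source is returned.
    """
    previous = json.loads(manifest.read_text()) if manifest.exists() else {}
    current = {str(p): digest(p) for p in sources}
    manifest.write_text(json.dumps(current))
    return [p for p in sources if previous.get(str(p)) != current[str(p)]]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        post = root / "post.md"
        post.write_text("first draft")
        print(changed_files([post], root / "manifest.json"))  # cold build: the file is listed
        print(changed_files([post], root / "manifest.json"))  # unchanged: an empty list
```

This sketch ignores deleted files and dependencies between pages (a changed layout should invalidate every page that uses it). Much of the real complexity of incremental builds lives in those two areas.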

SSG of different levels

Before we begin, note that static site generators actually come in two flavors, or levels. Let's call them "basic" and "advanced".

- Basic generators (although they are not that simple) are essentially command-line interface (CLI) tools that take data and output HTML. Their capabilities can often be extended to process additional assets (we do not do that here).

- Advanced generators offer features beyond generating static sites, such as server-side rendering, serverless functions, and integration with web frameworks. Out of the box, they are usually configured to give the user more dynamic capabilities than basic generators.

For this test, I deliberately chose three generators from each level. The basic ones are Eleventy, Hugo, and Jekyll. The other three are built on front-end frameworks and come with various additional tooling. Gatsby and Next are based on React, and Nuxt is based on Vue.

| Basic generators | Advanced generators |
| --- | --- |
| Eleventy | Gatsby |
| Hugo | Next |
| Jekyll | Nuxt |

Hypotheses and assumptions

I propose applying the scientific method to our research. Science is exciting (and can be very useful).

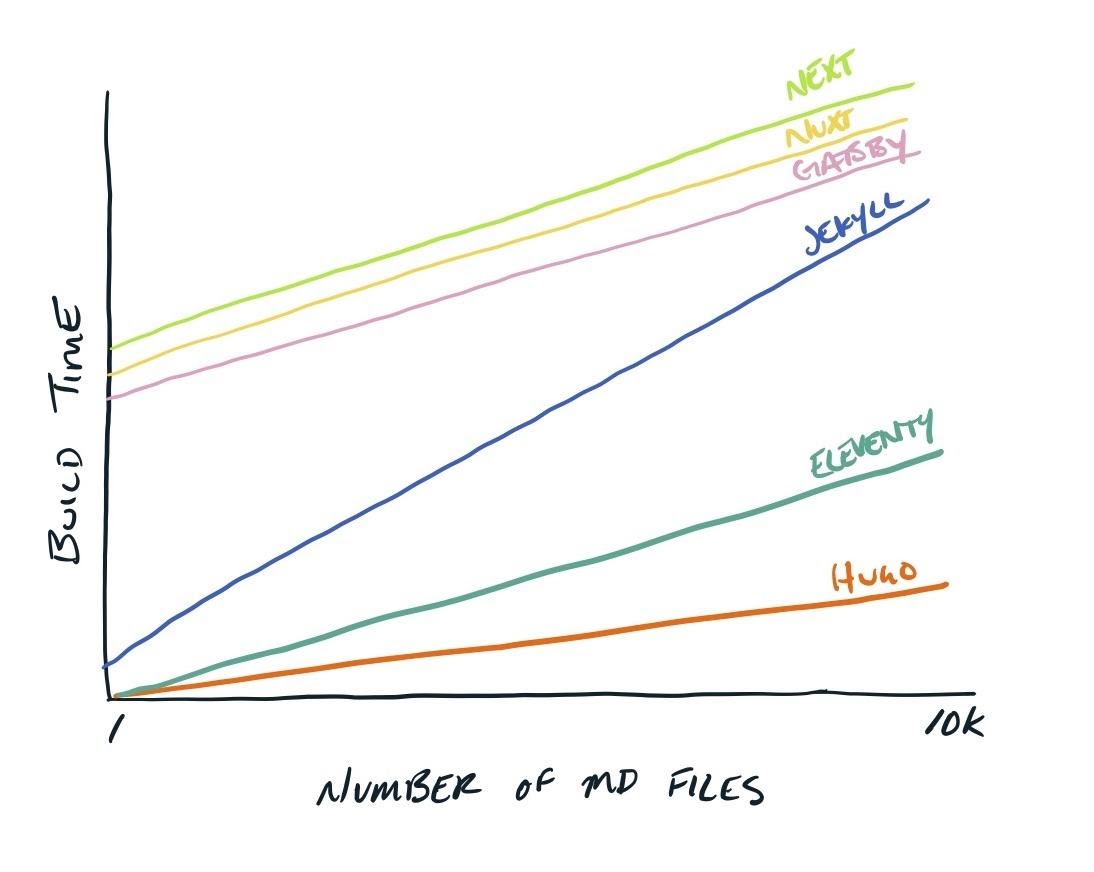

My hypothesis is that advanced SSGs will be slower than basic ones. I am confident the results will reflect this, because advanced SSGs involve more machinery than basic ones. As a result, the generators will most likely fall into two clearly separated groups, with the basic generators faster than the advanced ones.

▍Basic SSGs: fast, with build time growing linearly with the number of files

Hugo and Eleventy should process small datasets very quickly. They are (relatively) simple processes written in Go and Node.js, respectively, and their test results should reflect that. Both will slow down as the number of files grows, but I expect them to stay in the lead. As the load increases, Eleventy's build time may deviate further from linear growth than Hugo's. That could simply be because Go generally outperforms Node.js.

▍Advanced SSGs: a slow start, then a modest speed-up

Advanced SSGs, meaning those tied to a web framework, will have a noticeably slow start. I suspect that in the single-file test the difference between basic and advanced generators will be significant: a few milliseconds for the basic ones versus seconds for Gatsby, Next, and Nuxt.

SSGs based on web frameworks use webpack, which adds overhead to every run. That overhead does not depend on the amount of data processed. It is a cost we accept in exchange for more advanced tooling (more on this below).
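One way to make this reasoning concrete is to model build time as a fixed startup overhead plus a per-file cost, t(n) = overhead + per_file × n. This linear model is a simplifying assumption for illustration only. The benchmark does not establish it, and real generators need not scale linearly. The Python sketch below fits the model to (file count, seconds) measurements with ordinary least squares. The function names are hypothetical.

```python
def fit_build_model(sizes: list[int], seconds: list[float]) -> tuple[float, float]:
    """Least-squares fit of t(n) = overhead + per_file * n; returns (overhead, per_file)."""
    count = len(sizes)
    mean_n = sum(sizes) / count
    mean_t = sum(seconds) / count
    sxx = sum((n - mean_n) ** 2 for n in sizes)
    sxy = sum((n - mean_n) * (t - mean_t) for n, t in zip(sizes, seconds))
    per_file = sxy / sxx
    overhead = mean_t - per_file * mean_n
    return overhead, per_file


def overhead_share(overhead: float, per_file: float, files: int) -> float:
    """Fraction of the total build time spent on the fixed startup cost."""
    return overhead / (overhead + per_file * files)


if __name__ == "__main__":
    # Made-up numbers, not measurements: 3 s of startup plus 1 ms per file.
    for files in (1, 1000, 64000):
        print(files, round(overhead_share(3.0, 0.001, files), 3))
```

With those hypothetical numbers, startup accounts for over 99% of a one-file build but under 5% of a 64,000-file build. That is why a fixed overhead matters most for small sites and fades as the file count grows.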

When it comes to processing thousands of files, I suspect the gap between the basic and advanced groups will narrow. Even so, the advanced SSGs will still lag well behind the basic ones.

Within the advanced group, I expect Gatsby to be the fastest. My only reason is that it has no server-side rendering component that could slow things down, so this is just a gut feeling. Perhaps server-side rendering in Next and Nuxt is so well optimized that, when unused, it has no effect on build time at all. I also suspect Nuxt will be faster than Next, because Vue is "lighter" than React.

▍Jekyll: an unusual member of the basic group

Ruby is notorious for its poor performance. It has been optimized and has become faster over time, but I don't expect it to match Node.js, let alone Go. On the other hand, Jekyll does not carry the extra burden of a web framework.

I think that early in the test, with a small number of files, Jekyll will be fast, possibly as fast as Eleventy. Once we reach thousands of files, though, its performance will suffer. I also suspect there are other reasons why Jekyll may turn out to be the slowest of the six SSGs studied. To test this, we measure each generator on file sets of different sizes, up to 100,000 files.

Below is a graph showing my assumptions.

Hypothesized build time versus number of files for each SSG

The Y-axis shows build time and the X-axis shows the number of files. Next is shown in green, Nuxt in yellow, Gatsby in pink, Jekyll in blue, Eleventy in turquoise, and Hugo in orange. Every line rises as the number of files grows, and Jekyll's line has the steepest slope.

Results

Here is the code that produces the results that I will now discuss. I also made a page that compiles the relative test results.
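The linked code is not reproduced here. As a rough illustration only, a timing loop of this kind might look like the Python sketch below. `time_builds` and `relative_times` are hypothetical names. You would supply each SSG's own build command and cache directories, for example `["npx", "gatsby", "build"]` with Gatsby's `.cache` and `public` directories cleared before each run. Those are assumptions to adapt to each tool.

```python
import shutil
import statistics
import subprocess
import sys
import time
from pathlib import Path


def time_builds(command: list[str], runs: int = 10,
                cache_dirs: tuple[Path, ...] = ()) -> list[float]:
    """Run a build command `runs` times and return each wall-clock duration in seconds."""
    durations = []
    for _ in range(runs):
        # Cold build: delete any cache directories before every run.
        for cache in cache_dirs:
            shutil.rmtree(cache, ignore_errors=True)
        start = time.perf_counter()
        # check=True raises CalledProcessError if the build fails.
        subprocess.run(command, check=True,
                       stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        durations.append(time.perf_counter() - start)
    return durations


def relative_times(mean_seconds: dict[str, float]) -> dict[str, float]:
    """Express each SSG's mean build time as a multiple of the fastest one."""
    fastest = min(mean_seconds.values())
    return {name: t / fastest for name, t in mean_seconds.items()}


if __name__ == "__main__":
    # Stand-in "build": start a Python interpreter that does nothing.
    runs = time_builds([sys.executable, "-c", "pass"], runs=3)
    print(f"mean: {statistics.mean(runs):.3f}s over {len(runs)} runs")
```

Wall-clock timing of the whole process deliberately includes startup, which is exactly the cost the single-file Base dataset is meant to expose. Dividing by the fastest mean gives the machine-independent relative view that the tests rely on.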

After experimenting with various test conditions, I settled on 10 runs of each test, using three different datasets.

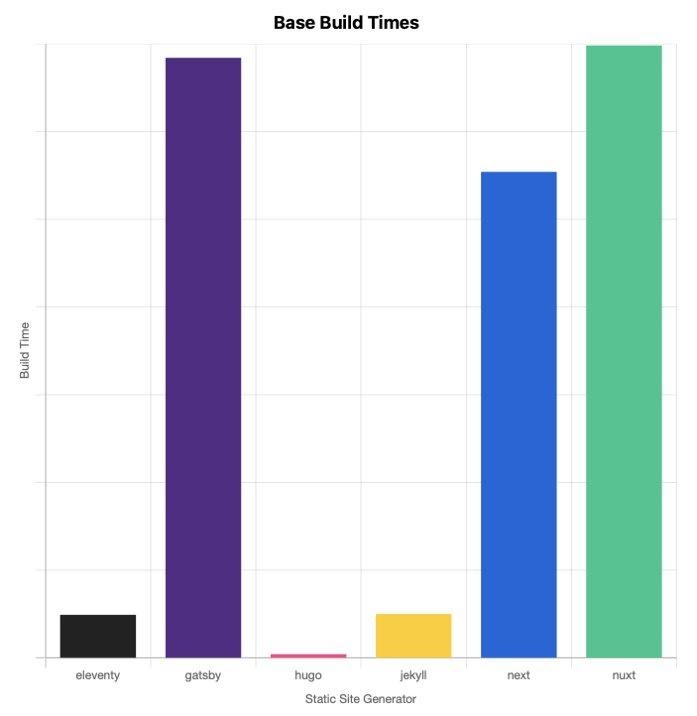

- Base dataset (Base). A single file. Processing it shows how long an SSG needs to get ready to work, that is, its startup time. This baseline cost is paid regardless of how many files are processed.

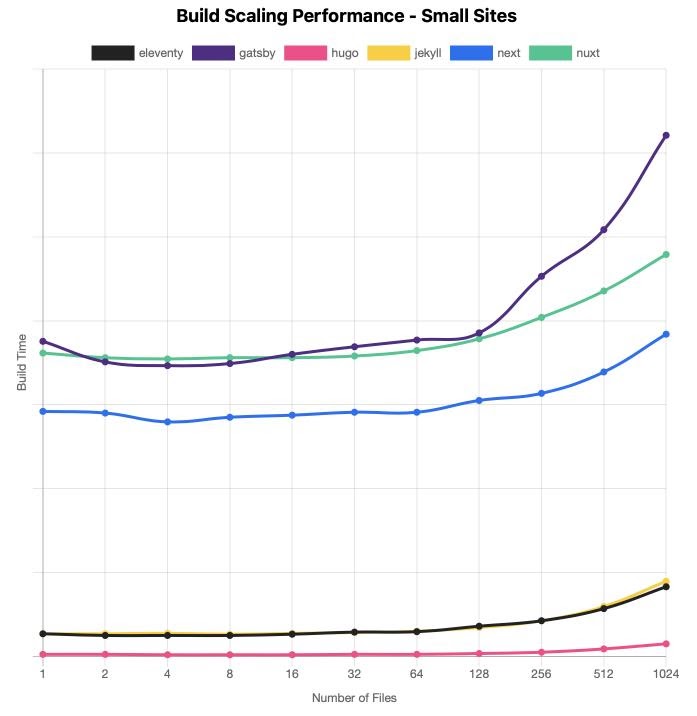

- Small sites dataset (Small sites). Builds sets of 1 to 1,024 files. Each pass doubles the number of files, which makes it easier to see whether build time grows linearly with the number of files.

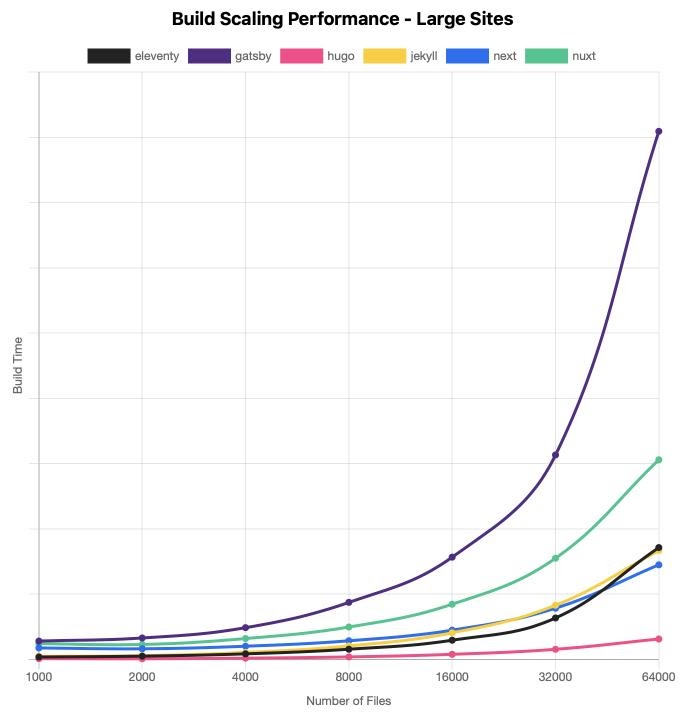

- Large sites dataset (Large sites). The number of files goes from 1,000 to 64,000, doubling with each run. I originally wanted to reach 128,000 files but ran into bottlenecks in some of the frameworks. In the end, 64,000 files proved enough to see how the SSGs behave on large sites.
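Doubling the file count at each step makes linearity easy to check. If build time is linear, each doubling should roughly double the time once the startup cost is negligible. The Python sketch below generates the two size series described above and computes the ratio between consecutive build times. The function names are hypothetical.

```python
def doubling_sizes(start: int, stop: int) -> list[int]:
    """File counts starting at `start` and doubling while they do not exceed `stop`."""
    if start < 1:
        raise ValueError("start must be a positive file count")
    sizes = []
    n = start
    while n <= stop:
        sizes.append(n)
        n *= 2
    return sizes


def growth_ratios(seconds: list[float]) -> list[float]:
    """Build-time ratio between each pair of consecutive (doubled) dataset sizes."""
    return [later / earlier for earlier, later in zip(seconds, seconds[1:])]


SMALL_SITES = doubling_sizes(1, 1024)      # 1, 2, 4, ..., 1024
LARGE_SITES = doubling_sizes(1000, 64000)  # 1000, 2000, ..., 64000

if __name__ == "__main__":
    print(SMALL_SITES)
    print(LARGE_SITES)
```

How to read the ratios: a value near 2.0 indicates linear growth. Values well below 2.0 mean the fixed startup cost still dominates the total. Values consistently above 2.0 point to worse-than-linear scaling.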

Here are the results I got.

Base dataset

Small sites dataset

Large sites dataset

Summarizing the results

Some of the results surprised me, but some turned out to be exactly as I expected. Here are some general findings:

- As expected, Hugo was the fastest SSG, and it performed well on file sets of every size. What I did not expect was that no other generator would come anywhere close to it, even on the Base dataset (nor did it scale linearly, but more on that below).

- The basic and advanced groups of SSGs are clearly distinguishable in the Small sites graphs. This was expected. What was unexpected is that Next is faster than Jekyll at 32,000 files and outpaces both Jekyll and Eleventy at 64,000 files. Also surprisingly, Jekyll is faster than Eleventy at 64,000 files.

- SSG . Next, , , . Hugo , — - .

- , Gatsby , , . , .

- , , , . , , Hugo 170 , Gatsby. 64000 Hugo 25 . , Hugo, SSG, . , - .

What does all of this mean?

When I shared my findings with the creators and maintainers of these SSGs, their responses were, broadly speaking, the same. Boiled down to a single "average" message, they said the following:

Generators that take longer to build a project do so because they solve more problems. They give developers more options, while the faster (that is, "basic") tools are mainly concerned with converting templates into HTML files.

I agree with that.

In a nutshell, scaling Jamstack sites is very difficult.

The difficulties a developer faces as a project grows depend on the characteristics of that particular project. No data exists to quantify this, and none can, because every project is unique in one way or another.

Ultimately, it comes down to the developer's personal preferences and the trade-off they are willing to make between build time and the convenience of working with a given SSG.

For example, if you plan to build a large, image-heavy site with Gatsby, be prepared for long build times. In return, you get a huge plugin ecosystem and a foundation for a robust, well-organized, component-based website. If you use Jekyll for the same project, you will have to put in much more effort to keep the project well organized and the work efficient, but the site will build faster.

At work, I usually build sites with Gatsby (or Next, depending on how much dynamic interactivity the project needs). We used Gatsby to create a framework for quickly building highly customizable, image-rich websites from a large number of components. As these sites grew, their builds took longer and longer, so we got creative. We implemented micro-frontends, moved image processing out of the main build, added content previews, and made many other optimizations.

For my own projects, I usually use Eleventy. I am typically the only one writing the code, and my needs are fairly modest (I think of myself as my own good client). Eleventy gives me more control over the build output, which helps me achieve good client-side performance, and that matters to me.

In the end, choosing an SSG is not just a choice between "fast" and "slow". It means choosing the tool best suited to a specific project, one whose output justifies the time spent waiting for it.

Conclusion

What I have presented here is really just the beginning. The goal of this work is to establish a baseline against which we can all measure the relative build times of popular SSGs.

Have you spotted any flaws in the testing process I proposed for static site generators? How could the procedure be improved? How can the tests be brought closer to real-world conditions? Should image processing be moved to a separate machine? I invite everyone who cares about SSGs to join me in finding answers to these and many other questions.

What static site generators do you use?