Background blur and replacement with MediaPipe

An overview of our Web ML solution

Meet's new features are powered by MediaPipe, Google's open-source framework for building ML pipelines over live and streaming media. Other ML solutions are built on the same framework, such as real-time hand, iris, and body pose tracking.

A core requirement of any mobile technology is high performance. To achieve it, the MediaPipe web pipeline uses WebAssembly, a low-level binary code format designed specifically for web browsers to speed up compute-heavy tasks. At run time, the browser translates WebAssembly instructions into native machine code that runs much faster than traditional JavaScript. In addition, Chrome 84 recently introduced support for WebAssembly SIMD, in which each instruction processes multiple data points, improving performance by more than 2x.

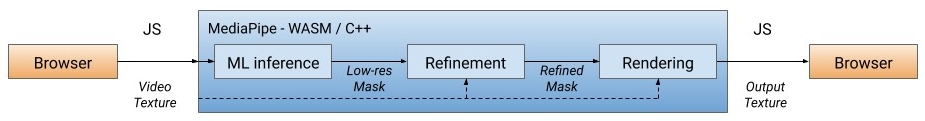

Our solution first processes each video frame to separate the user from the background (see below for more on the segmentation model), using ML inference to compute a low-resolution mask. If necessary, we further refine the mask to align it with the image boundaries. The mask is then used to render the video output through WebGL2, with the background blurred or replaced.

WebML pipeline: all compute-heavy operations are implemented in C++/OpenGL and run in the browser via WebAssembly
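The last two steps of this pipeline (bringing the low-resolution mask up to frame size and using it to combine the frame with a background) can be illustrated with a minimal sketch. It is not Meet's actual C++/OpenGL code: the function names, the nearest-neighbour upsampling, and the grayscale list-of-lists image format are all assumptions made for illustration.

```python
def upsample_nearest(mask, out_h, out_w):
    """Resize a low-res mask to (out_h, out_w) by nearest-neighbour lookup."""
    in_h, in_w = len(mask), len(mask[0])
    return [
        [mask[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def composite(frame, background, mask):
    """Per-pixel blend: mask=1 keeps the frame, mask=0 shows the background."""
    return [
        [m * f + (1.0 - m) * b for f, b, m in zip(f_row, b_row, m_row)]
        for f_row, b_row, m_row in zip(frame, background, mask)
    ]


# Hypothetical 2x4 grayscale frame; the person occupies the left half.
frame = [[0.8] * 4 for _ in range(2)]
background = [[0.2] * 4 for _ in range(2)]
low_res_mask = [[1.0, 0.0]]  # 1x2 mask, as if produced by the segmentation model

full_mask = upsample_nearest(low_res_mask, 2, 4)
result = composite(frame, background, full_mask)
print(result[0])
```

In a real pipeline the background passed to `composite` would be either a blurred copy of the frame or a replacement image, and the blend would run in a shader rather than in Python loops.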

In the current version, model inference runs on the client's CPU for low power consumption and the widest device coverage. To achieve real-time performance, we designed efficient ML models whose inference is accelerated by the XNNPACK library, the first inference engine specifically designed for the new WebAssembly SIMD specification. Accelerated by XNNPACK and SIMD, the segmentation model runs in real time on the web.

Thanks to MediaPipe's flexible configuration, the background blur/replace solution adapts its processing to the capabilities of the device. On high-end devices it runs the full pipeline to deliver the highest visual quality, while on low-end devices it switches to compute-light ML models and skips the mask refinement.

Segmentation model

On-device ML models need to be ultra-lightweight for fast inference, low power consumption, and a small download size. For models running in the browser, the input resolution strongly affects the number of floating-point operations (FLOPs) required to process each frame, so it needs to be small as well. Before feeding the image to the model, we downsample it to a smaller size. Recovering a segmentation mask that is as fine as possible from a low-resolution image adds to the challenges of model design.

The overall segmentation network has a symmetric encoder-decoder structure: the decoder layers (light green) mirror the encoder layers (light blue). In particular, channel-wise attention with global average pooling is applied in both encoder and decoder blocks, which keeps the load on the CPU low.

The architecture of the model with the MobileNetV3 encoder (light blue) and the symmetric decoder (light green)
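To make the channel-wise attention step concrete, here is a minimal sketch in the generic squeeze-and-excitation form: global average pooling collapses each channel to one number, a small dense layer with a sigmoid turns those numbers into per-channel gates, and each channel is rescaled by its gate. The exact block used in the model is not described here, so the single dense layer, the function name, and the weights below are assumptions.

```python
import math


def channel_attention(feature_map, weights, biases):
    """feature_map: list of C channels, each an HxW list of lists.

    weights (CxC) and biases (length C) stand in for learned parameters;
    the values used below are hypothetical.
    """
    # Squeeze: global average pooling reduces each channel to a single number.
    pooled = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map
    ]
    # Excite: one dense layer plus a sigmoid yields a gate in (0, 1) per channel.
    gates = []
    for w_row, b in zip(weights, biases):
        z = sum(w * p for w, p in zip(w_row, pooled)) + b
        gates.append(1.0 / (1.0 + math.exp(-z)))
    # Scale: every value in a channel is multiplied by that channel's gate.
    scaled = [[[v * g for v in row] for row in ch] for ch, g in zip(feature_map, gates)]
    return scaled, gates


features = [
    [[1.0, 2.0], [3.0, 4.0]],  # channel 0
    [[0.0, 0.0], [0.0, 8.0]],  # channel 1
]
zero_weights = [[0.0, 0.0], [0.0, 0.0]]
zero_biases = [0.0, 0.0]
scaled, gates = channel_attention(features, zero_weights, zero_biases)
print(gates)
```

The attention itself costs only one pooled value and one gate per channel, regardless of the spatial size of the feature map, which is why this kind of block is cheap on a CPU.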

For the encoder, we modified the MobileNetV3-small neural network, whose design was tuned automatically by network architecture search to achieve the best performance on low-end hardware. To halve the size of the model, we exported it to TFLite with float16 quantization, which caused a slight loss in weight precision but no noticeable impact on quality. The resulting model has 193 thousand parameters and is only 400 KB in size.
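The halving follows from simple arithmetic on the parameter count: a float32 weight takes 4 bytes and a float16 weight takes 2. The short calculation below uses only the 193 thousand figure from the text; treating the remaining gap to the reported 400 KB as file-format overhead is an assumption.

```python
PARAMS = 193_000  # parameter count quoted in the text

bytes_float32 = PARAMS * 4  # 4 bytes per float32 weight
bytes_float16 = PARAMS * 2  # 2 bytes per float16 weight

print(f"float32 weights: {bytes_float32 / 1000:.0f} KB")
print(f"float16 weights: {bytes_float16 / 1000:.0f} KB")
```

The float16 weights alone come to roughly 386 KB, which is consistent with the reported model size of about 400 KB.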

Rendering effects

After segmentation, we use OpenGL shaders for video processing and effect rendering. The challenge is to render efficiently without introducing artifacts. In the refinement stage, a joint bilateral filter smooths the low-resolution mask.

Rendering effects with reduced artifacts. Left: a joint bilateral filter smooths the segmentation mask. Middle: separable filters remove halo artifacts in background blur. Right: light wrapping in background replacement
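A joint bilateral filter averages the mask over a small neighbourhood, but weights each neighbour by how similar the guide image is at the two positions, so the mask edge snaps to the image edge instead of being smeared across it. The sketch below shows the idea in one dimension; the function name, the parameter values, and the 1D simplification are assumptions, and the production filter runs as an OpenGL shader on 2D frames.

```python
import math


def joint_bilateral_1d(mask, guide, radius=2, sigma_space=1.5, sigma_range=0.1):
    """Smooth `mask`, using intensity differences in `guide` to preserve edges."""
    n = len(mask)
    out = []
    for i in range(n):
        total = 0.0
        weight_sum = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            # Spatial weight: nearby samples count more.
            w_space = math.exp(-((i - j) ** 2) / (2 * sigma_space ** 2))
            # Range weight: samples that look different in the guide count less.
            w_range = math.exp(-((guide[i] - guide[j]) ** 2) / (2 * sigma_range ** 2))
            w = w_space * w_range
            total += w * mask[j]
            weight_sum += w
        out.append(total / weight_sum)  # weight_sum >= 1 because j == i has weight 1
    return out


# Hypothetical scanline: the image has a sharp edge between index 3 and 4,
# but the low-res mask ramps down gradually across it.
guide = [0.9, 0.9, 0.9, 0.9, 0.1, 0.1, 0.1, 0.1]
mask = [1.0, 1.0, 0.7, 0.5, 0.5, 0.3, 0.0, 0.0]
refined = joint_bilateral_1d(mask, guide)
print([round(v, 2) for v in refined])
```

In this example the refined mask rises on the bright side of the edge and falls on the dark side, so the transition becomes steeper and lines up with the edge in the guide image.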

The blur shader simulates a bokeh effect by adjusting the blur strength at each pixel in proportion to the segmentation mask values, similar to the circle of confusion (CoC) in optics. Pixels are weighted by their CoC radii so that foreground pixels do not bleed into the background. We implemented separable filters for the weighted blur instead of the popular Gaussian pyramid, as they remove halo artifacts around the person. For performance, the blur is computed at low resolution and then blended with the input frame at its original resolution.

Background blur examples
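The weighting idea can be sketched in one dimension, which corresponds to a single pass of a separable filter. This is an illustration under stated assumptions, not the production shader: the mask is taken to be the person probability, the CoC is modelled as `1 - mask`, and the function name, box-shaped kernel, and `max_radius` are hypothetical.

```python
def weighted_blur_1d(frame, mask, max_radius=2):
    """Blur background pixels while keeping foreground colour out of the blur."""
    n = len(frame)
    out = []
    for i in range(n):
        coc_i = 1.0 - mask[i]  # 0 for the person, 1 for pure background
        radius = round(max_radius * coc_i)  # blur strength follows the CoC
        total = 0.0
        weight_sum = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            # Each sample is weighted by its own CoC, so foreground samples
            # contribute almost nothing (epsilon avoids division by zero).
            w = max(1.0 - mask[j], 1e-6)
            total += w * frame[j]
            weight_sum += w
        blurred = total / weight_sum
        # Blend the blurred value with the original according to the mask.
        out.append(mask[i] * frame[i] + coc_i * blurred)
    return out


# Hypothetical scanline: a bright person (left) in front of a dark background.
frame = [1.0, 1.0, 1.0, 0.0, 0.0, 0.0]
mask = [1.0, 1.0, 1.0, 0.0, 0.0, 0.0]
blurred = weighted_blur_1d(frame, mask)
print([round(v, 3) for v in blurred])
```

An unweighted box blur of the same radius would give the first background pixel a value of 0.4, which would show up as a bright halo around the person; with CoC weighting it stays close to 0.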

For background replacement, we use a compositing technique known as light wrapping to blend the segmented person onto a custom background. Light wrapping softens the segmentation edges by letting background light spill over onto foreground elements, which makes the composition more realistic. It also helps minimize halo artifacts when there is strong contrast between foreground and background.
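One common way to implement light wrapping in compositing is to blur the matte and the background, then mix a little of the blurred background into the foreground only along the inner edge of the matte. The sketch below follows that generic recipe in one dimension. Whether Meet's shader uses the same formulation is not stated, so the formula, the function names, and the `radius` and `strength` parameters are all assumptions.

```python
def box_blur_1d(values, radius):
    """Simple box blur with the window clipped at the borders."""
    n = len(values)
    out = []
    for i in range(n):
        window = values[max(0, i - radius): min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def light_wrap_1d(foreground, background, mask, radius=2, strength=0.5):
    blurred_mask = box_blur_1d(mask, radius)
    blurred_bg = box_blur_1d(background, radius)
    out = []
    for f, b, m, bm, bb in zip(foreground, background, mask, blurred_mask, blurred_bg):
        # Nonzero only inside the person and close to the matte edge.
        wrap = strength * m * (1.0 - bm)
        # Let blurred background light spill onto the foreground edge.
        lit = (1.0 - wrap) * f + wrap * bb
        # Standard matte composite of the lit foreground over the background.
        out.append(m * lit + (1.0 - m) * b)
    return out


# Hypothetical scanline: a dark person (left) over a bright custom background.
foreground = [0.2] * 6
background = [1.0] * 6
mask = [1.0, 1.0, 1.0, 0.0, 0.0, 0.0]
wrapped = light_wrap_1d(foreground, background, mask)
print([round(v, 3) for v in wrapped])
```

Foreground pixels far from the edge are unchanged, while those next to the edge are gradually brightened toward the background, which softens the transition.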

Background replacement examples

Performance

To optimize performance on different devices, we provide model options with multiple input image sizes (for example, 256x144 and 160x96 in the current version), automatically choosing the best option according to the available hardware resources.

We evaluated model inference speed and end-to-end pipeline speed on two common devices: a 2018 MacBook Pro with a 2.2 GHz 6-core Intel Core i7 processor and an Acer Chromebook 11 with an Intel Celeron N3060 processor. For 720p input, the MacBook Pro runs the higher-quality model at 120 FPS and the end-to-end pipeline at 70 FPS, while the Chromebook runs the lower-quality model at 62 FPS and the end-to-end pipeline at 33 FPS.

| Model | FLOPs | Device | Model inference | Pipeline |
| --- | --- | --- | --- | --- |
| 256x144 | 64 million | MacBook Pro 2018 | 8.3 ms (120 FPS) | 14.3 ms (70 FPS) |
| 160x96 | 27 million | Acer Chromebook 11 | 16.1 ms (62 FPS) | 30 ms (33 FPS) |
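The figures in this table are related by simple arithmetic: frames per second is 1000 divided by the per-frame latency in milliseconds, and the FLOPs ratio between the two models is close to the ratio of their input pixel counts. The short check below uses only the numbers quoted above; the variable names are illustrative.

```python
# (model, FLOPs, inference latency in ms, pipeline latency in ms), from the table
rows = [
    ("256x144", 64e6, 8.3, 14.3),
    ("160x96", 27e6, 16.1, 30.0),
]


def fps(latency_ms):
    """Frames per second achievable at a given per-frame latency."""
    return 1000.0 / latency_ms


for name, flops, infer_ms, pipe_ms in rows:
    print(f"{name}: inference {fps(infer_ms):.1f} FPS, pipeline {fps(pipe_ms):.1f} FPS")

pixel_ratio = (256 * 144) / (160 * 96)   # ratio of input pixel counts
flops_ratio = rows[0][1] / rows[1][1]    # ratio of reported FLOPs
print(f"pixels: {pixel_ratio:.2f}x, FLOPs: {flops_ratio:.2f}x")
```

The two ratios come out at about 2.4x and 2.37x, which illustrates why input resolution is the main lever for reducing per-frame compute.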

To quantify model accuracy, we use two popular metrics: the Jaccard index (intersection-over-union, IoU) and the boundary F-measure (boundary F-score). Both models perform well, especially for such a lightweight network:

| Model | IoU | Boundary F-measure |
| --- | --- | --- |
| 256x144 | 93.58% | 0.9024 |
| 160x96 | 90.79% | 0.8542 |
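For reference, IoU for a binary segmentation is the number of pixels marked as person in both the prediction and the ground truth, divided by the number marked as person in either. The sketch below computes it for small masks; the 0.5 threshold and the function name are assumptions, and the evaluation protocol behind the reported numbers is not reproduced here.

```python
def iou(pred, truth, threshold=0.5):
    """Intersection-over-union of two masks after thresholding."""
    inter = 0
    union = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            p_bin = p >= threshold
            t_bin = t >= threshold
            inter += p_bin and t_bin
            union += p_bin or t_bin
    # Two empty masks agree perfectly by convention.
    return inter / union if union else 1.0


# Hypothetical 2x3 masks: 2 pixels overlap, 4 pixels are covered by either mask.
pred = [[0.9, 0.8, 0.2], [0.7, 0.1, 0.0]]
truth = [[1.0, 1.0, 1.0], [0.0, 0.0, 0.0]]
score = iou(pred, truth)
print(score)
```

The boundary F-measure complements IoU by scoring only pixels near the mask contour, which makes it more sensitive to edge quality.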

We have also publicly released the model card for our segmentation models, which details our fairness evaluation. The evaluation data contains images from 17 geographic subregions of the world, annotated for skin tone and gender. The analysis showed that the model performs consistently across regions, skin tones, and genders, with only small deviations in IoU.

Conclusion

In summary, we introduced a new in-browser ML solution for blurring and replacing backgrounds in Google Meet. With it, ML models and OpenGL shaders run efficiently on the web. The resulting features deliver real-time performance with low power consumption, even on low-power devices.