The project aims to make NLP (Natural Language Processing) capabilities as easy as possible for application developers to use. The main idea of the system is to strike a balance between a low barrier to entry into NLP and support for a wide range of industrial-grade library capabilities. The goal is uncompromising: simplicity without oversimplification.

As of version 0.7.1, the project is in the Apache incubation stage and is available at https://nlpcraft.apache.org.

Key system features

- Semantic modeling. A simple built-in mechanism for recognizing model elements in the query text, which does not require machine learning.

- A Java API that lets you develop models in any Java-compatible language: Java, Scala, Kotlin, Groovy, etc.

- A Model-as-a-Code approach that allows you to create and edit models using tools that developers are familiar with.

- The ability to interact with all types of devices with APIs - chatbots, voice assistants, smart home devices, etc., as well as use any custom data sources, from databases to SaaS systems, closed or open.

- An advanced set of NLP tools, including a system for working with short-term memory, dialog templates, etc.

- Integration with many NER component providers (Apache OpenNLP, Stanford NLP, Google Natural Language API, spaCy).

Limitations: the current version, 0.7.1, supports only English.

Let's agree on some terms and concepts used in the rest of the article.

Terminology

- Named Entity - simply put, an object or concept recognized in the text. The full definition is here. Entities can be generic, such as dates, countries, and cities, or model-specific.

- NER (Named Entity Recognition) components - software components responsible for recognizing entities in text.

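- Intent - a rule that pairs a template (a set of named entities) with a function. When the entities found in a request match the template, the function is called.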

- Data model

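Describes the named entities to be recognized (the NER configuration), the intents, etc. The static part of the configuration can be written in JSON or YAML.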

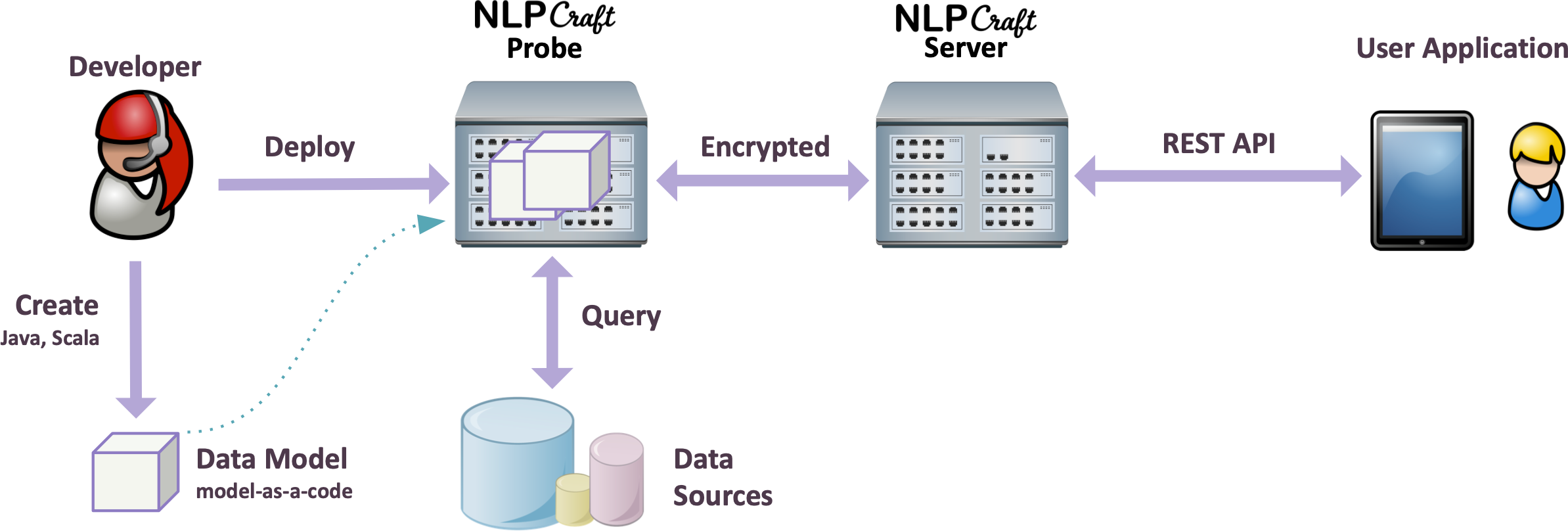

- Data probe

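A component that deploys and runs data models. One Data Probe can host several models, and several Data Probes can be connected to one REST server.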

- REST server

Provides a REST API for custom applications.

Example using NlpCraft

Let's walk through working with NlpCraft, using a smart home control system as the example. The control system we are developing must understand commands such as "Turn on the lights in the whole house" or "Turn off the lamps in the kitchen." Note that NlpCraft does not perform speech recognition; it accepts already prepared text as its input.

We should:

- Determine which named entities we need and how to find them in the text.

- Create intents for different sets of entities, that is, different types of commands.

For this example we need three entities: two actions, "on" and "off", and a location where the action applies.

Now we must design the model, that is, define the mechanism for finding these elements in the text. By default, NlpCraft finds non-standard (model-specific) entities by searching lists of synonyms. To make compiling a list of synonyms as simple and convenient as possible, NlpCraft provides a set of tools, including macros and the Synonym DSL.

Below is the static configuration, lightswitch_model.yaml, which defines our three entities and one intent.

    id: "nlpcraft.lightswitch.ex"
    name: "Light Switch Example Model"
    version: "1.0"
    description: "NLI-powered light switch example model."
    macros:
      - name: "<ACTION>"
        macro: "{turn|switch|dial|control|let|set|get|put}"
      - name: "<ENTIRE_OPT>"
        macro: "{entire|full|whole|total|*}"
      - name: "<LIGHT>"
        macro: "{all|*} {it|them|light|illumination|lamp|lamplight}"
    enabledBuiltInTokens: [] # This example doesn't use any built-in tokens.
    elements:
      - id: "ls:loc"
        description: "Location of lights."
        synonyms:
          - "<ENTIRE_OPT> {upstairs|downstairs|*} {kitchen|library|closet|garage|office|playroom|{dinning|laundry|play} room}"
          - "<ENTIRE_OPT> {upstairs|downstairs|*} {master|kid|children|child|guest|*} {bedroom|bathroom|washroom|storage} {closet|*}"
          - "<ENTIRE_OPT> {house|home|building|{1st|first} floor|{2nd|second} floor}"
      - id: "ls:on"
        groups:
          - "act"
        description: "Light switch ON action."
        synonyms:
          - "<ACTION> {on|up|*} <LIGHT> {on|up|*}"
          - "<LIGHT> {on|up}"
      - id: "ls:off"
        groups:
          - "act"
        description: "Light switch OFF action."
        synonyms:
          - "<ACTION> <LIGHT> {off|out}"
          - "{<ACTION>|shut|kill|stop|eliminate} {off|out} <LIGHT>"
          - "no <LIGHT>"
    intents:
      - "intent=ls term(act)={groups @@ 'act'} term(loc)={id == 'ls:loc'}*"

Briefly about the content:

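- Three entities are defined: the location, “ls:loc”, and two actions, “ls:on” and “ls:off”, which are combined into the “act” group.

- Macros and the Synonym DSL keep the synonym definitions compact. For example, the synonyms for “ls:on” include “turn”, “turn it”, “turn all it”, etc., and those for “ls:loc” include “light”, “entire light”, “entire light upstairs”, etc. In total, the model expands to some 7700 synonyms.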

- The synonym search takes into account the base forms of words (lemmas and stems), the presence of stop words, possible permutations of words in phrases, etc.

- The model defines one intent named “ls”. The intent is triggered when the request contains exactly one entity of the “act” group and, optionally, any number of entities of the “ls:loc” type. The complete Intents DSL syntax can be found here.

For all its simplicity, this type of modeling is powerful and flexible.
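To get a feel for how a single pattern turns into many synonyms, here is a minimal Java sketch of such an expansion. It is not NlpCraft's implementation: the `SynonymExpander` class and its methods are hypothetical, and it assumes only what the model above shows, namely that `{a|b}` lists alternatives, `*` stands for an empty alternative, groups can be nested, and macro names are replaced by their bodies. Patterns are assumed to be well-formed.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Toy expander for patterns such as "{turn|switch} {on|*} light". Not NlpCraft code. */
class SynonymExpander {
    private final String src;
    private int pos = 0;

    private SynonymExpander(String src) { this.src = src; }

    /** Replaces macro names with their bodies, then expands every alternative. */
    static Set<String> expand(String pattern, Map<String, String> macros) {
        String s = pattern;
        for (Map.Entry<String, String> m : macros.entrySet())
            s = s.replace(m.getKey(), m.getValue());
        Set<String> out = new LinkedHashSet<>();
        for (String phrase : new SynonymExpander(s).sequence())
            out.add(phrase.trim().replaceAll("\\s+", " ")); // tidy spaces left by empty alternatives
        return out;
    }

    /** Reads literal text and groups up to '|', '}' or end of input; returns all expansions. */
    private List<String> sequence() {
        List<String> acc = new ArrayList<>(List.of(""));
        while (pos < src.length() && src.charAt(pos) != '|' && src.charAt(pos) != '}') {
            List<String> part;
            if (src.charAt(pos) == '{') {
                part = group();
            } else {
                int start = pos;
                while (pos < src.length() && "{|}".indexOf(src.charAt(pos)) < 0) pos++;
                part = List.of(src.substring(start, pos));
            }
            // Cartesian product of what we have so far with the new part.
            List<String> next = new ArrayList<>();
            for (String a : acc) for (String p : part) next.add(a + p);
            acc = next;
        }
        return acc;
    }

    /** Reads "{alt1|alt2|...}"; an alternative consisting of "*" expands to nothing. */
    private List<String> group() {
        List<String> alts = new ArrayList<>();
        pos++; // skip '{'
        while (true) {
            for (String alt : sequence()) alts.add(alt.trim().equals("*") ? "" : alt);
            char c = src.charAt(pos++); // '|' continues the group, '}' closes it
            if (c == '}') return alts;
        }
    }
}
```

For instance, `SynonymExpander.expand("{turn|switch} {on|*} light", Map.of())` yields four phrases: "turn on light", "turn light", "switch on light" and "switch light". Every group multiplies the number of phrases, which is how a handful of short patterns grows into thousands of synonyms.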

Note that, if necessary, the NlpCraft user can implement a model-specific NER component in any other way, using neural networks or other approaches and algorithms. One example is an entity such as the time of day, whose recognition may call for a non-deterministic algorithm.

How the match works:

- The text of the user request is split into components (words, tokens).

- The words are reduced to their base forms (lemmas and stems), and parts of speech and other low-level information are determined for them.

- Then, based on the tokens and their combinations, named entities are searched for in the request text.

- Found entities are matched against templates of all intents specified in the model, and if a suitable intent is found, the corresponding function is called.
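The steps above can be sketched in plain Java. This is a deliberately naive illustration and not how NlpCraft works internally: the `MatchPipelineSketch` class, its tiny hand-written synonym table, the stop-word list, and the plural-stripping "lemmatizer" are all assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Optional;
import java.util.Set;

/** Toy walk-through of the matching steps. Not NlpCraft's implementation. */
class MatchPipelineSketch {
    /** A recognized entity: element id, group (null if none) and the matched text. */
    static final class Entity {
        final String id, group, text;
        Entity(String id, String group, String text) { this.id = id; this.group = group; this.text = text; }
    }

    static final Set<String> STOP_WORDS = Set.of("the", "in", "and", "please");

    // Hypothetical synonym table: normalized phrase -> {element id, group}.
    static final Map<String, String[]> SYNONYMS = new LinkedHashMap<>();
    static {
        SYNONYMS.put("turn on light", new String[] {"ls:on", "act"});
        SYNONYMS.put("turn off light", new String[] {"ls:off", "act"});
        SYNONYMS.put("turn off lamp", new String[] {"ls:off", "act"});
        SYNONYMS.put("kitchen", new String[] {"ls:loc", null});
        SYNONYMS.put("whole house", new String[] {"ls:loc", null});
    }

    /** Steps 1-2: split into words, drop stop words, crudely normalize (strip a plural "s"). */
    static List<String> tokenize(String text) {
        List<String> out = new ArrayList<>();
        for (String w : text.toLowerCase(Locale.ROOT).split("[^a-z0-9]+")) {
            if (w.isEmpty() || STOP_WORDS.contains(w)) continue;
            out.add(w.length() > 3 && w.endsWith("s") ? w.substring(0, w.length() - 1) : w);
        }
        return out;
    }

    /** Step 3: at each position, take the longest run of tokens that is a known synonym. */
    static List<Entity> findEntities(List<String> toks) {
        List<Entity> found = new ArrayList<>();
        int i = 0;
        while (i < toks.size()) {
            int matchedEnd = -1;
            for (int j = toks.size(); j > i && matchedEnd < 0; j--) {
                String phrase = String.join(" ", toks.subList(i, j));
                String[] def = SYNONYMS.get(phrase);
                if (def != null) {
                    found.add(new Entity(def[0], def[1], phrase));
                    matchedEnd = j;
                }
            }
            i = matchedEnd < 0 ? i + 1 : matchedEnd; // skip unknown words
        }
        return found;
    }

    /** Step 4: intent "ls" needs exactly one "act" entity and any number of "ls:loc" entities. */
    static Optional<String> matchLs(List<Entity> ents) {
        List<Entity> acts = new ArrayList<>();
        List<String> locs = new ArrayList<>();
        for (Entity e : ents) {
            if ("act".equals(e.group)) acts.add(e);
            else if ("ls:loc".equals(e.id)) locs.add(e.text);
        }
        if (acts.size() != 1) return Optional.empty(); // no intent matched
        String status = acts.get(0).id.equals("ls:on") ? "on" : "off";
        String where = locs.isEmpty() ? "entire house" : String.join(", ", locs);
        return Optional.of(String.format("Lights are [%s] in [%s].", status, where));
    }

    static Optional<String> ask(String text) {
        return matchLs(findEntities(tokenize(text)));
    }
}
```

With this table, `MatchPipelineSketch.ask("Turn off the lamps in the kitchen.")` returns "Lights are [off] in [kitchen].", while a request with no action entity, such as "What time is it?", returns an empty result because no intent matches.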

The following callback function for the “ls” intent is written in Java, but any other Java-compatible programming language could be used.

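    import java.util.List;
    import java.util.stream.Collectors;

    // NlpCraft imports; the package names assume the 0.7.x layout.
    import org.apache.nlpcraft.common.NCException;
    import org.apache.nlpcraft.model.*;

    public class LightSwitchModel extends NCModelFileAdapter {
        public LightSwitchModel() throws NCException {
            // Load the static part of the model (macros, elements, intents).
            super("lightswitch_model.yaml");
        }

        @NCIntentRef("ls")
        NCResult onMatch(
            @NCIntentTerm("act") NCToken actTok,
            @NCIntentTerm("loc") List<NCToken> locToks
        ) {
            // The matched "act" element is either "ls:on" or "ls:off".
            String status = actTok.getId().equals("ls:on") ? "on" : "off";

            // No location in the request means the whole house.
            String locations =
                locToks.isEmpty() ?
                    "entire house" :
                    locToks.stream().
                        map(p -> p.meta("nlpcraft:nlp:origtext").toString()).
                        collect(Collectors.joining(", "));

            // Add HomeKit, Arduino or other integration here.
            // By default - just return a descriptive action string.
            return NCResult.text(
                String.format("Lights are [%s] in [%s].", status, locations)
            );
        }
    }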

What happens in the body of the function:

- The static configuration we defined above using the lightswitch_model.yaml file is read using the NCModelFileAdapter.

- As input arguments, the function receives the entities that matched its intent template.

- The element of the “act” group determines the specific action to be performed.

- The “ls:loc” list provides the specific place or places where the action should be performed.

The data received is sufficient to access the API of the system we manage.
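As a rough illustration of that hand-off, the sketch below passes the action and the locations to a device layer. Everything in it is hypothetical: `LightController` stands in for whatever HomeKit, Arduino or other API is actually used, and `LightSwitchDispatcher` is just one possible way to keep that code out of the intent callback.

```java
import java.util.List;

/** Hypothetical device API; a real system would wrap HomeKit, Arduino, etc. */
interface LightController {
    void setPower(String location, boolean on);
}

/** Sketch of passing the matched action and locations on to a device API. */
class LightSwitchDispatcher {
    private final LightController controller;

    LightSwitchDispatcher(LightController controller) {
        this.controller = controller;
    }

    /**
     * @param actionId  id of the matched "act" element: "ls:on" or "ls:off"
     * @param locations original text of the matched "ls:loc" elements; empty means the whole house
     * @return the same descriptive string the intent callback returns
     */
    String dispatch(String actionId, List<String> locations) {
        boolean on = actionId.equals("ls:on");
        List<String> targets = locations.isEmpty() ? List.of("entire house") : locations;
        for (String loc : targets) {
            controller.setPower(loc, on); // one device call per location
        }
        return String.format("Lights are [%s] in [%s].", on ? "on" : "off", String.join(", ", targets));
    }
}
```

Keeping the device calls behind an interface like this means the model can be tested with a stub controller, without any hardware attached.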

In this article, we will not give a detailed example of integration with any specific API. Also outside the scope of the example are conversation context management, dialogue tracking, the system's short-term memory, configuration of the intent matching mechanism, integration with standard NER providers, utilities for expanding the list of synonyms, and so on.

Similar systems

The closest and best-known analogues, Amazon Alexa and Google Dialogflow, differ significantly from this system. On the one hand, they are somewhat easier to get started with, since their introductory examples do not even require an IDE. On the other hand, their capabilities are considerably more limited, and in many ways they are much less flexible.

Conclusion

With just a few lines of code, we built a simple but fully working prototype of a smart home lighting control system that understands many commands in various forms. The model is now easy to extend: for example, you can add to the entities' synonym lists or introduce new elements needed for additional functionality, such as "light intensity". Such changes take minutes and require no additional model training.

I hope that thanks to this post, you were able to get a first, albeit superficial, understanding of the Apache NlpCraft system.