There is almost no systematic information about NSX ALB in Russian online, only the vendor's documentation in English. So in the first part I pulled scattered sources together into a product overview: its features, architecture and operating logic, including for geographically distributed sites. In the second part I will describe how to deploy and configure the system. I hope both articles help readers who are looking for a load balancer for cloud environments and want to quickly assess what NSX ALB can do.

Features and capabilities

NSX ALB is an enterprise-class software-defined load balancer (SDLB). That is unusual at this tier, where hardware load balancers are the norm. The software approach makes NSX ALB easy to manage and lets it scale both horizontally and vertically.

NSX ALB provides the following features:

- Automatic capacity scaling. When client load grows, capacity is added automatically; when the load drops, it is released.

- Load balancing across geographically dispersed servers. A separate Global Server Load Balancing (GSLB) mechanism is responsible for this.

- Balancing at either L4 (TCP/UDP) or L7 (HTTP/HTTPS).

- Multi-cloud support. NSX ALB (a VMware product) works both on-premise and in public clouds.

- Built-in application analytics (Application Intelligence). The system monitors application performance and collects, in real time, the time spent at each stage of connection processing, an assessment of application health, and traffic logs. When a problem occurs, this monitoring quickly shows where to look for it.

Real-time data is collected on concurrent open connections, round-trip time (RTT), throughput, errors, response latency, SSL handshake latency, response types and more. All of it is available in one place.

The Log Analytics panel on the right aggregates statistics on the main connection parameters. Hover over a section to see the details.

In addition, NSX ALB has:

- Multi-tenancy support for separating access to resources.

- Health monitors for checking server availability.

- Web Application Firewall (WAF).

- IPAM and DNS.

- IP reputation filtering: client IP addresses are checked against a reputation database that assigns them to threat categories such as Botnet, DoS, Mobile threats, Phishing, Proxy, Scanner, Spam source, Web attacks, Windows exploit, etc.

- Inspection of HTTP headers in passing traffic. Using Lua-based scripts (DataScript), you can define actions that depend on the header values: redirecting a request, closing or resetting a connection, rewriting URIs or HTTP header values, selecting a specific server pool to process a request, working with cookies, etc.

- Acting as an Ingress controller for Kubernetes.
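The header-driven logic described for DataScript can be illustrated with a small rule engine. This is a minimal Python sketch, not DataScript itself (real DataScripts are written in Lua against Avi's own API): the `Request`/`Action` types, the rule functions, the `api-pool` pool name and the `m.example.com` host are all hypothetical. Rules are evaluated in order, and the first one that returns an action wins.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Request:
    uri: str
    headers: dict[str, str]  # header names are matched case-sensitively here, for simplicity


@dataclass
class Action:
    kind: str                     # "redirect", "select_pool", "close" or "pass"
    target: Optional[str] = None  # redirect URL or pool name, if any


Rule = Callable[[Request], Optional[Action]]


def block_missing_host(req: Request) -> Optional[Action]:
    # Close connections whose requests carry no Host header.
    return Action("close") if "Host" not in req.headers else None


def mobile_redirect(req: Request) -> Optional[Action]:
    # Redirect mobile clients to a separate (hypothetical) site.
    if "Mobile" in req.headers.get("User-Agent", ""):
        return Action("redirect", "https://m.example.com" + req.uri)
    return None


def api_pool(req: Request) -> Optional[Action]:
    # Send API calls to a dedicated (hypothetical) server pool.
    return Action("select_pool", "api-pool") if req.uri.startswith("/api/") else None


def evaluate(req: Request, rules: list[Rule]) -> Action:
    """Apply rules in order; the first match wins, otherwise the default pool is used."""
    for rule in rules:
        action = rule(req)
        if action is not None:
            return action
    return Action("pass")


RULES: list[Rule] = [block_missing_host, mobile_redirect, api_pool]
```

Rule order matters here: a mobile client calling `/api/...` is redirected before the pool-selection rule is reached.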

NSX ALB can be managed through GUI, CLI and REST API.

NSX ALB architecture and workflow

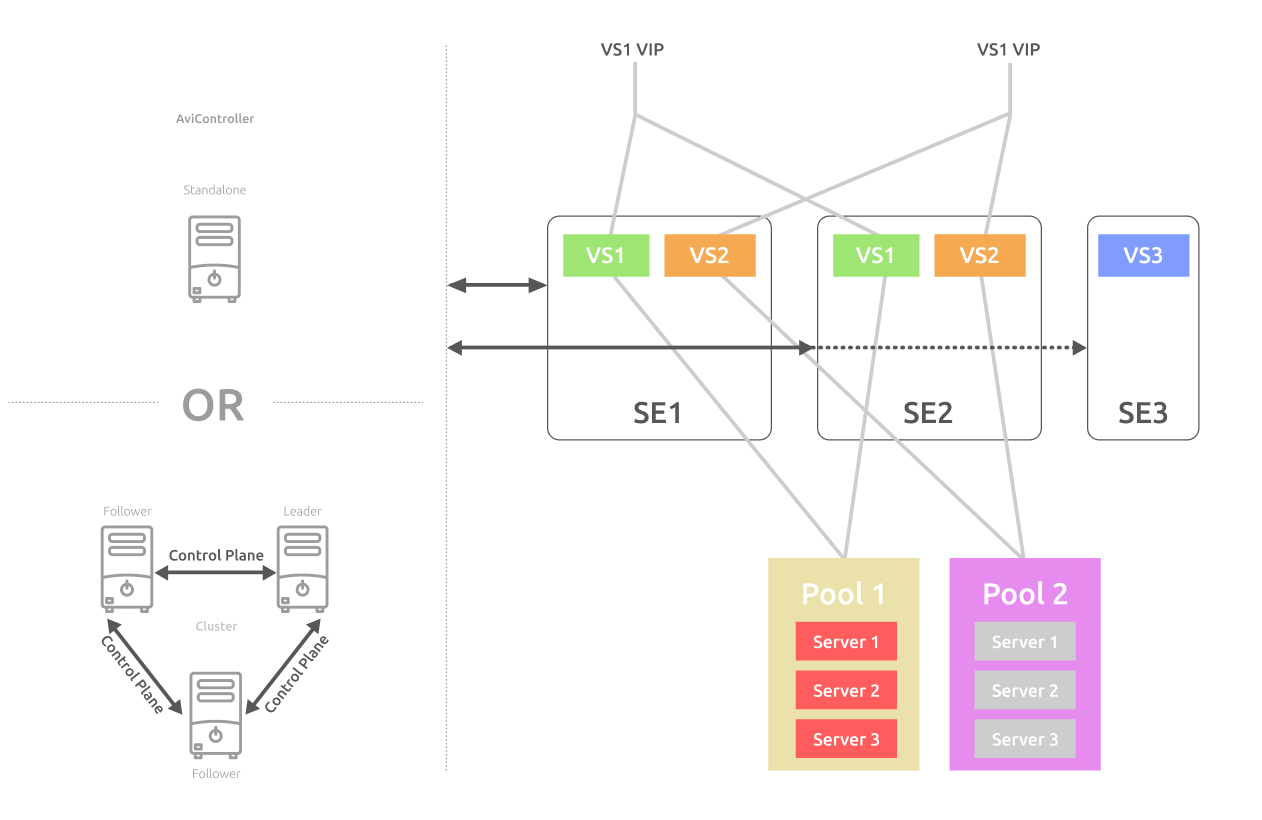

NSX ALB follows the same principles as most SDLBs. The servers that host the balanced service are grouped into pools. On top of the pools, the administrator creates virtual services (VS). A VS holds the parameters of the balanced service, such as the application type, balancing algorithm and connection settings. A VS also gives clients an external virtual IP address (Virtual IP, VIP) for reaching the balanced service.

Let's take a closer look at the architecture.

The key element of the system is the controller (Avi Controller). It handles automatic capacity scaling and centrally manages the virtual services. You can deploy either a single controller or a fault-tolerant cluster of controllers.

In the fault-tolerant configuration, the controller cluster consists of 3 nodes. One node becomes the leader and the other two become followers. All 3 nodes are active and share the load. The main failure scenarios for a controller cluster:

- if one node fails, this does not affect the operation of the cluster;

- if the leader node fails, its role passes to one of the remaining nodes;

- if 2 nodes fail, the controller service on the remaining node switches to read-only mode to avoid split-brain and waits until another node becomes available again.
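The three scenarios above match a majority-quorum rule: a 3-node cluster keeps accepting changes while at least 2 nodes are up. The sketch below models that rule under this assumption; the function names and the alphabetical tie-break for choosing a new leader are hypothetical and do not reflect the controller's real election protocol.

```python
from enum import Enum
from typing import Optional

CLUSTER_SIZE = 3


class ClusterMode(Enum):
    READ_WRITE = "read-write"
    READ_ONLY = "read-only"


def cluster_mode(nodes_up: int, size: int = CLUSTER_SIZE) -> ClusterMode:
    """Majority quorum: changes are accepted only while more than half of the nodes are up."""
    if not 0 <= nodes_up <= size:
        raise ValueError("nodes_up must be between 0 and the cluster size")
    quorum = size // 2 + 1  # 2 out of 3
    return ClusterMode.READ_WRITE if nodes_up >= quorum else ClusterMode.READ_ONLY


def elect_leader(alive: list[str], current: Optional[str], size: int = CLUSTER_SIZE) -> Optional[str]:
    """Keep the current leader if it is alive; otherwise promote a surviving node.

    Without quorum no leader is elected, which is what prevents split-brain.
    The alphabetical tie-break is a hypothetical rule, not the product's logic.
    """
    if cluster_mode(len(alive), size) is ClusterMode.READ_ONLY:
        return None
    if current in alive:
        return current
    return sorted(alive)[0]
```

For example, losing the leader `n1` in a healthy cluster promotes `n2`, while a single surviving node stays read-only with no leader.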

After deploying the controller, the engineer creates the virtual services and a server pool for each VS. For the servers in a pool, you can choose one of the following balancing algorithms:

- Round Robin - the new connection will go to the next server in the pool.

- Least Connections - the new connection will go to the server with the fewest concurrent connections.

- Least Load - the new connection will go to the server with the least load regardless of the number of connections.

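- Consistent Hash - new connections are distributed across servers based on a computed hash. The hash key is either a predefined field (for example, the client IP address) or a custom string. A hash of this key is computed for each request, and the connection goes to the server that corresponds to the result.

- Fastest Response – the new connection goes to the server that responds fastest.

- Fewest Tasks – the load is distributed adaptively based on feedback from the servers (this algorithm can be configured only via the Avi CLI or REST API).

- Fewest Servers – connections are packed onto the smallest number of servers that can handle the current load.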
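Three of these algorithms are easy to show in their textbook form. The following Python sketch is a simplified illustration, not NSX ALB's internal implementation: `Server`, the class names and the virtual-node count are assumptions. Consistent hashing is shown as a hash ring, one common way to implement it.

```python
import bisect
import hashlib
import itertools
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    active_conns: int = 0  # current number of open connections


class RoundRobin:
    """Each new connection goes to the next server in the pool, cycling."""

    def __init__(self, servers: list[Server]):
        self._cycle = itertools.cycle(servers)

    def pick(self, key: str = "") -> Server:
        return next(self._cycle)


class LeastConnections:
    """Each new connection goes to the server with the fewest open connections."""

    def __init__(self, servers: list[Server]):
        self.servers = servers

    def pick(self, key: str = "") -> Server:
        return min(self.servers, key=lambda s: s.active_conns)


def _hash(value: str) -> int:
    # MD5 is used only to spread keys evenly on the ring, not for security.
    return int(hashlib.md5(value.encode()).hexdigest(), 16)


class ConsistentHash:
    """Hash ring with virtual nodes; the key may be a client IP or a custom string."""

    def __init__(self, servers: list[Server], vnodes: int = 100):
        ring = [(_hash(f"{s.name}#{i}"), s) for s in servers for i in range(vnodes)]
        ring.sort(key=lambda point: point[0])
        self._points = [h for h, _ in ring]
        self._servers = [s for _, s in ring]

    def pick(self, key: str) -> Server:
        # First ring point clockwise from the key's hash, wrapping around at the end.
        idx = bisect.bisect(self._points, _hash(key)) % len(self._points)
        return self._servers[idx]
```

The same key always maps to the same server, and removing a server from the ring remaps only the keys that were on it. That property is what makes consistent hashing useful for session persistence.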

Once the VS and server pool are created, the controller itself deploys service virtual machines called Service Engines (SE), which host the balanced service. Each VS can be spread across several SEs that process client requests in parallel. This keeps the service available if one of the virtual machines fails.

The controller evaluates the load and can add new SEs or remove idle ones. Thanks to this architecture, NSX ALB scales both horizontally, by increasing the number of SEs, and vertically, by increasing the capacity of each SE.
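To make the scale-out arithmetic concrete, here is a minimal sizing sketch. It assumes the rough average of 40K connections/s per vCPU quoted later in this section, a hypothetical 80% target utilization and a fixed SE size. The function `required_ses` and its policy are illustrative only; they are not the controller's actual autoscaling algorithm or thresholds.

```python
import math

CPS_PER_VCPU = 40_000  # rough average from this article; L7 and SSL are lower


def required_ses(load_cps: float, vcpus_per_se: int, max_ses: int,
                 target_util: float = 0.8, cps_per_vcpu: int = CPS_PER_VCPU) -> int:
    """Number of SEs needed to carry load_cps with each SE kept below target_util.

    Hypothetical policy for illustration: at least one SE is kept,
    and the result is capped by the SE group's maximum size.
    """
    if load_cps < 0 or vcpus_per_se <= 0 or not 0 < target_util <= 1:
        raise ValueError("invalid sizing input")
    per_se = cps_per_vcpu * vcpus_per_se * target_util  # usable connections/s per SE
    needed = max(1, math.ceil(load_cps / per_se))
    return min(needed, max_ses)
```

With 2-vCPU SEs, one SE carries about 64K connections/s at 80% utilization, so 100K connections/s calls for 2 SEs. Vertical scaling (more vCPUs per SE) lowers the count; the group limit caps it.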

The more services an SE balances, the more network interfaces it uses. The detailed diagram below shows two types of networks:

- the network used for management and service traffic forms the Control Plane;

- the data networks form the Data Plane.

Each SE has a dedicated interface on the management network for communicating with the controller. Its other interfaces connect to the external network and to the network where the balanced server pool resides. Separating the networks this way improves security.

For each VS, you need to define the parameters of the SEs that will host it. These parameters are set in a Service Engine Group (SE Group). When creating a VS, you select its SE group: either the default group (Default Group) or a new group, if the VS needs special virtual machine settings.

The selected group determines where the new VS is placed. For example, if SEs of the default group are already deployed and still have spare resources, a new VS assigned to the default group is placed on them. If you assign a new group to the VS, new SEs with that group's parameters are deployed for it.

The SE group defines the following settings, including VS placement:

- maximum number of SEs in a group,

- maximum number of VS per SE,

- VS placement mode: Compact, which packs the first SE as tightly as possible before moving on to the next, or Distributed, which spreads VSs evenly across SEs;

- SE virtual machine parameters: vCPU number, memory and disk size,

Capacity per vCPU is roughly 40K connections/s, with an average throughput of about 4 Gbit/s. The figure depends on the balancing level and protocol: it is higher at L4, lower at L7 because the traffic has to be parsed, and much lower with SSL because of the encryption overhead.

- how long an unused SE is kept before it is deleted,

- resources for hosting SEs. In a VMware environment, you can include or exclude specific clusters, hosts and datastores.
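The two placement modes can be compared with a short simulation. This is a minimal sketch under stated assumptions: `SEGroup`, `place` and the `se-N` names are hypothetical, only the "maximum SEs" and "maximum VS per SE" limits are modelled, and "Distributed" is approximated as "use a new SE while the group limit allows, then the least loaded one".

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ServiceEngine:
    name: str
    vs: list[str] = field(default_factory=list)  # virtual services placed on this SE


class SEGroup:
    def __init__(self, max_ses: int, max_vs_per_se: int, mode: str = "compact"):
        if mode not in ("compact", "distributed"):
            raise ValueError("mode must be 'compact' or 'distributed'")
        self.max_ses = max_ses
        self.max_vs_per_se = max_vs_per_se
        self.mode = mode
        self.ses: list[ServiceEngine] = []

    def _new_se(self) -> Optional[ServiceEngine]:
        # Deploy another SE unless the group is already at its maximum size.
        if len(self.ses) >= self.max_ses:
            return None
        se = ServiceEngine(f"se-{len(self.ses) + 1}")
        self.ses.append(se)
        return se

    def place(self, vs_name: str) -> ServiceEngine:
        free = [se for se in self.ses if len(se.vs) < self.max_vs_per_se]
        if self.mode == "compact":
            # Fill existing SEs first; deploy a new one only when all are full.
            target = free[0] if free else self._new_se()
        else:
            # Spread out: new SE while allowed, otherwise the least loaded one.
            target = self._new_se()
            if target is None and free:
                target = min(free, key=lambda se: len(se.vs))
        if target is None:
            raise RuntimeError("SE group has no capacity left")
        target.vs.append(vs_name)
        return target
```

Compact mode saves resources (fewer SE virtual machines); Distributed mode limits the blast radius of a single SE failure at the cost of running more SEs.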

Let's move on to the architecture and workflow of the GSLB global balancing service.

Architecture and workflow for geographically dispersed servers

In a regular virtual service, we can add servers to the pool from only one cloud. Even if we add several clouds on the controller at the same time, we will not be able to combine servers from different clouds within one VS. The GSLB global balancing service solves this problem. It allows you to balance geographically dispersed servers located in different clouds.

Within one global service, you can use private and public clouds at the same time. Typical use cases for GSLB:

- high service availability if all servers in one of the clouds are unavailable,

- disaster recovery if one cloud is completely inaccessible,

- serving clients from the site closest to them.

Let's look at the architecture of GSLB:

In a nutshell: balancing is performed by a DNS service deployed inside NSX ALB. The client requests the service by its FQDN, and DNS returns the virtual IP of the local VS in the optimal cloud. The optimal cloud is chosen based on the balancing algorithm, local VS availability data from health monitors, and the client's geographic location. Balancing algorithms can be set both at the global service level and at the GSLB level.

As the GSLB diagram shows, it is built on the elements of the previous diagram: server pools, with local virtual services (VS) above them that have local virtual IPs (VIP), and service virtual machines (SE). GSLB introduces the following new elements.

Global service (global VS) - a service balanced between geographically dispersed servers or private and public clouds.

A GSLB Site includes the controller and the SEs managed by it, located in the same cloud. For each site, you can set geolocation in latitude and longitude. This allows GSLB to select the server pool based on distance from the client.
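Distance-based site selection can be sketched with the haversine great-circle formula over each site's latitude and longitude. This is a minimal sketch assuming the client's coordinates are already known (in practice they would come from a geo-IP lookup, which is not modelled). `Site`, `nearest_site` and the example sites are hypothetical, and unhealthy sites are skipped to mirror the health-monitor input.

```python
import math
from dataclasses import dataclass
from typing import Optional

EARTH_RADIUS_KM = 6371.0


@dataclass
class Site:
    name: str
    lat: float  # degrees
    lon: float  # degrees
    healthy: bool = True


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_site(client_lat: float, client_lon: float, sites: list[Site]) -> Optional[Site]:
    """Closest healthy site to the client, or None if no site is healthy."""
    candidates = [s for s in sites if s.healthy]
    if not candidates:
        return None
    return min(candidates, key=lambda s: haversine_km(client_lat, client_lon, s.lat, s.lon))
```

A client in St Petersburg (59.94, 30.31) is sent to a Moscow site rather than a Frankfurt one, and falls back to Frankfurt if the Moscow site is marked unhealthy.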

GSLB sites built on NSX ALB are divided into a leader and followers. As with the controller cluster, this scheme makes the GSLB service fault tolerant.

The leader site makes balancing decisions, handles connections and performs monitoring. The GSLB configuration can be changed only from the leader site's controller.

Follower sites can be active or passive.

- A passive follower site handles client connections only when the leader site selects its local VS.

- An active follower site receives its configuration from the leader site and, if the leader fails, can take over the leader role.
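The role rules above can be summarized in a few lines. This is a hypothetical model: `GslbSite`, `leader_candidates` and `config_recipients` are illustrative names. It only answers which sites are eligible for each role, not whether a takeover happens automatically or is triggered by an administrator.

```python
from dataclasses import dataclass


@dataclass
class GslbSite:
    name: str
    role: str        # "leader", "active" (follower) or "passive" (follower)
    up: bool = True


def config_recipients(sites: list[GslbSite]) -> list[GslbSite]:
    """Active followers receive the GSLB configuration from the leader."""
    return [s for s in sites if s.role == "active"]


def leader_candidates(sites: list[GslbSite]) -> list[GslbSite]:
    """Sites eligible to take over when the leader is down: active followers that are up.

    Passive followers are never candidates. If the leader is up, no takeover is needed.
    """
    leader = next((s for s in sites if s.role == "leader"), None)
    if leader is not None and leader.up:
        return []
    return [s for s in sites if s.role == "active" and s.up]
```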

GSLB sites can also be External sites built on third-party balancers.

A global pool differs from a local pool, which contains local servers: a global pool combines geographically dispersed virtual services from different sites. In other words, a global pool contains the local VSs created at the GSLB sites.

Connections between members of a global pool are balanced:

- by the Round Robin algorithm,

- by geolocation,

- by topology,

- by Consistent Hash.

For one global service, you can create several global pools, each containing local VSs from one or more sites. New connections are then distributed across pools by geolocation or by the priorities you set. Within a pool, you can give each member a different weight to control its share of the load.

Here is an example of balancing between global pools and how the global VS distributes connections in this scheme:

GslbPool_3 has a priority of 10 and is preferred for client connections: 40% of its load goes to VS-B3 and 60% to VS-B4. If GslbPool_3 becomes unavailable, all client connections move to GslbPool_2, where the load is split evenly between VS-B3 and VS-B4.
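The example can be reproduced with a short sketch: pick the highest-priority pool that still has live members, then pick a member at random in proportion to its weight. The assumptions are that a larger priority value wins (as the GslbPool_3 example implies) and that GslbPool_2 has priority 5; the article does not give that value. `Member`, `GlobalPool` and `choose_member` are hypothetical names.

```python
import random
from dataclasses import dataclass


@dataclass
class Member:
    vs: str          # local VS name
    weight: int      # relative share of the pool's load
    up: bool = True  # result of health monitoring


@dataclass
class GlobalPool:
    name: str
    priority: int
    members: list[Member]

    def available(self) -> list[Member]:
        return [m for m in self.members if m.up]


def choose_member(pools: list[GlobalPool], rng: random.Random) -> Member:
    """Highest-priority pool with live members, then a weighted random member in it."""
    live = [p for p in pools if p.available()]
    if not live:
        raise RuntimeError("no global pool is available")
    best = max(live, key=lambda p: p.priority)  # assumption: larger value = higher priority
    members = best.available()
    return rng.choices(members, weights=[m.weight for m in members], k=1)[0]


pool_3 = GlobalPool("GslbPool_3", 10, [Member("VS-B3", 4), Member("VS-B4", 6)])
pool_2 = GlobalPool("GslbPool_2", 5, [Member("VS-B3", 1), Member("VS-B4", 1)])  # priority 5 is hypothetical
```

Weights 4 and 6 give the 40/60 split. Once every member of GslbPool_3 is marked down, all picks fall through to GslbPool_2, where equal weights split the load evenly.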

Local DNS contains records with the FQDNs of the services balanced through it.

GSLB DNS is an operating mode of the local DNS VS that is used to balance connections between GSLB sites.

A local DNS VS starts acting as GSLB DNS once it is selected as the DNS service for a deployed GSLB. Such a DNS VS should be deployed at every site included in the global pools.

GSLB adds the global service's FQDN records to each local DNS. NSX ALB populates each record with the virtual IPs of the local VSs from all sites in the global VS pool, and when new local VSs are added to the pool, their VIPs are added to the record automatically. The data in the records is updated as information accumulates about service load, server availability and the distance between clients and sites. When a new client connects using the FQDN, one of the local DNS services returns the VIP of a local VS based on this up-to-date data.
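The record-keeping described above can be sketched as a small in-memory table: adding a local VS to the global pool adds its VIP to the FQDN record, health updates mark VIPs in or out, and a query returns one healthy VIP. This is a simplified model, not the product's DNS implementation. Round robin stands in for the configured algorithm; load and client distance are not modelled; the names, FQDN and documentation-range IPs are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LocalVS:
    site: str
    vip: str
    healthy: bool = True


@dataclass
class GslbDns:
    records: dict[str, list[LocalVS]] = field(default_factory=dict)
    _rr: dict[str, int] = field(default_factory=dict, repr=False)  # round-robin counters

    def add_member(self, fqdn: str, vs: LocalVS) -> None:
        # Adding a local VS to the global pool also adds its VIP to the FQDN record.
        self.records.setdefault(fqdn, []).append(vs)

    def set_health(self, fqdn: str, vip: str, healthy: bool) -> None:
        # Health-monitor results decide which VIPs may be returned.
        for vs in self.records.get(fqdn, []):
            if vs.vip == vip:
                vs.healthy = healthy

    def resolve(self, fqdn: str) -> Optional[str]:
        """Return one healthy VIP for the FQDN, rotating between them; None if none is healthy."""
        healthy = [vs for vs in self.records.get(fqdn, []) if vs.healthy]
        if not healthy:
            return None
        i = self._rr.get(fqdn, 0)
        self._rr[fqdn] = i + 1
        return healthy[i % len(healthy)].vip
```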

In the second part of this article, I will describe how to deploy and configure NSX ALB and how to set up the GSLB service in it.