Mythical rhino unicorn. MS TECH / PIXABAY

Training in less than one try helps a model identify more objects than the number of examples it has trained on.

Typically, machine learning requires lots of examples. For an AI model to recognize a horse, you need to show her thousands of images of horses. This is why technology is so computationally expensive and very different from human learning. A child often needs to see just a few examples of an object, or even one, to learn to recognize it for life.

In fact, children sometimes don't need any examples to identify something. Show pictures of a horse and a rhino, tell them the unicorn is in between and they will recognize the mythical creature in the picture book as soon as they see it for the first time.

Mmm ... Not really! MS TECH / PIXABAY

Now research from the University of Waterloo in Ontario suggests that AI models can do this too - a process that researchers call learning "in less than one" try. In other words, the AI model can clearly recognize more objects than the number of examples it has trained on. This can be critical in an area that becomes more and more expensive and inaccessible as the datasets used grow.

« »

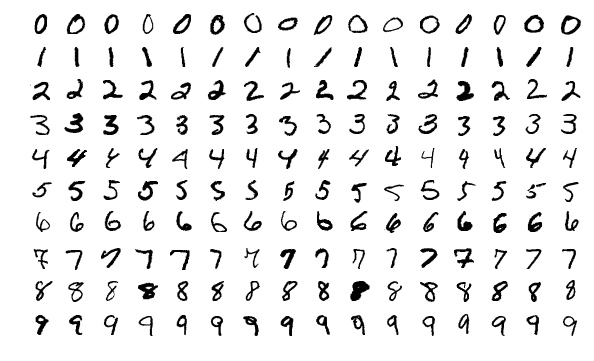

The researchers first demonstrated this idea by experimenting with a popular computer vision training dataset known as MNIST. MNIST contains 60,000 images of handwritten digits 0 through 9, and the set is often used to test new ideas in this area.

In a previous article, researchers at the Massachusetts Institute of Technology presented a method of "distilling" giant datasets into small ones. As a proof of concept, they compressed MNIST to 10 images. Images were not sampled from the original dataset. They have been carefully designed and optimized to contain the equivalent of a complete set of information. As a result, when trained on these 10 images, the AI model achieves almost the same accuracy as trained on the entire MNIST set.

Sample images from the MNIST set. WIKIMEDIA

10 pictured, "distilled" from MNIST, can train an AI model to achieve 94 percent handwritten digit recognition accuracy. Tongzhou Wang et al.

Researchers at Wotrelu University wanted to continue the distillation process. If it is possible to reduce 60,000 images to 10, why not compress them to five? The trick, they realized, was to mix several numbers in one image, and then feed them into an AI model with so-called hybrid or "soft" labels. (Imagine a horse and a rhino that have been given the features of a unicorn.)

“Think of the number 3, it looks like the number 8, but not the number 7,” says Ilya Sukholutsky, a Waterloo graduate student and main author of the article. - Soft marks try to capture these similarities. So instead of telling the car: "This image is number 3", we say: "This image is 60% number 3, 30% number 8 and 10% number 0" ".

Limitations of the new teaching method

After researchers successfully used soft labels to achieve MNIST adaptations to learning in less than one attempt, they began to wonder how far the idea could go. Is there a limit to the number of categories that an AI model can learn to identify from a tiny number of examples?

Surprisingly, there seems to be no limitation. With carefully designed soft labels, even two examples could theoretically encode any number of categories. “With just two dots, you can divide a thousand classes, or 10,000 classes, or a million classes,” says Sukholutsky.

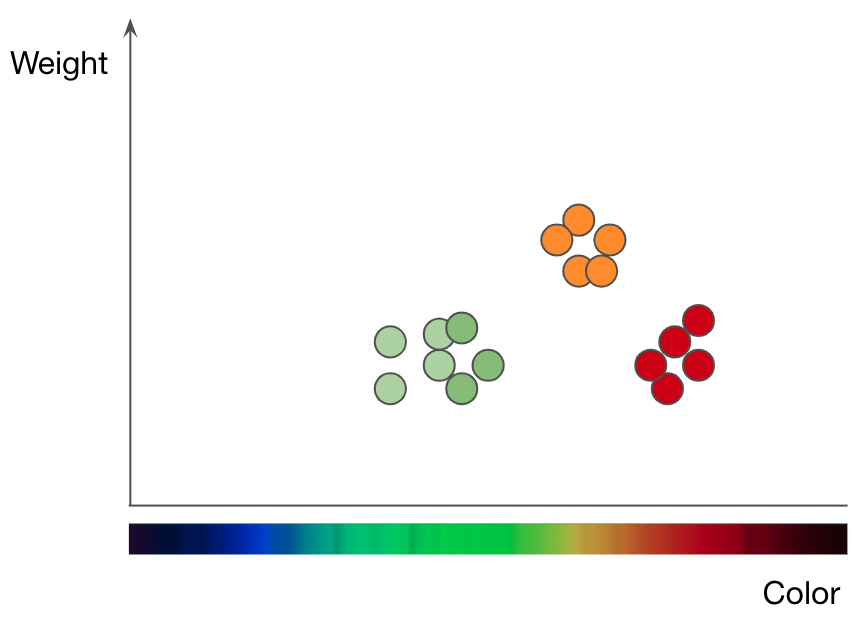

Breakdown of apples (green and red dots) and oranges (orange dots) by weight and color. Adapted from Jason Mace's presentation Machine Learning 101

This is what the scientists showed in their last article through purely mathematical research. They implemented this concept using one of the simplest machine learning algorithms known as k-nearest neighbors (kNN), which classifies objects using a graphical approach.

To understand how the kNN method works, let's take a fruit classification problem as an example. To train the kNN model to understand the difference between apples and oranges, you first need to select the functions you want to use to represent each fruit. If you choose color and weight, then for each apple and orange you enter one data point with fruit color as x value and weight as y value... The kNN algorithm then plots all the data points in a 2D chart and draws a line halfway between apples and oranges. The graph is now neatly split into two classes, and the algorithm can decide whether the new data points represent apples or oranges - depending on which side of the line the point is on.

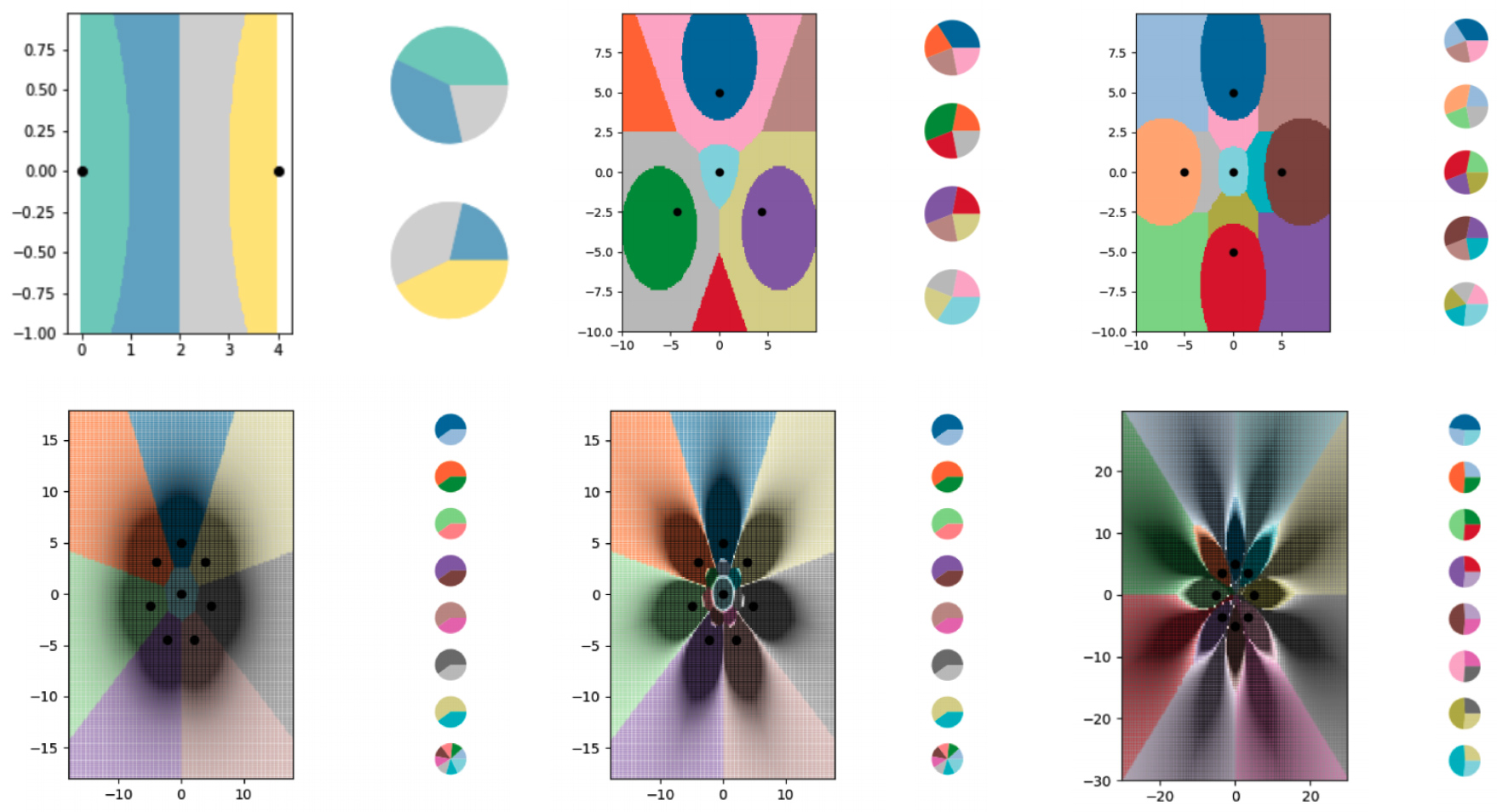

To study learning in less than one attempt with the kNN algorithm, the researchers created a series of tiny synthetic datasets and carefully thought out their soft labels. They then allowed the kNN algorithm to plot the boundaries it saw and found that it successfully split the graph into more classes than there were data points. Researchers have also largely controlled where the borders run. Using various modifications to the "soft" labels, they forced the kNN algorithm to draw precise patterns in the form of flowers.

The researchers used soft-label examples to train the kNN algorithm to encode increasingly complex boundaries and break the diagram into more classes than it has data points. Each of the colored areas represents a separate class, and the pie charts along the side of each graph show the distribution of soft labels for each data point.

Ilya Sukholutskiy et al.

Various diagrams show the boundaries constructed using the kNN algorithm. Each chart has more and more boundary lines encoded in tiny datasets.

Of course, these theoretical studies have some limitations. While the idea of learning from "less than one" attempt would be desirable to transfer to more complex algorithms, the task of developing examples with a "soft" label becomes much more complicated. The kNN algorithm is interpretable and visual, allowing people to create labels. Neural networks are complex and impenetrable, which means the same may not be true for them. Data distillation, which is good for developing soft-label examples for neural networks, also has a significant drawback: it requires you to start with a giant dataset, shrinking it down to something more efficient.

Sukholutsky says he is trying to find other ways to create these small synthetic datasets - either by hand or with another algorithm. Despite these additional research complexities, the article presents the theoretical foundations of learning. “Regardless of what datasets you have, you can achieve significant efficiency gains,” he said.

This is what interests Tongzhou Wang, a graduate student at the Massachusetts Institute of Technology. He directed the prior research on distillation data. “This article builds on a really new and important goal: training powerful models from small datasets,” he says of Sukholutsky's contribution.

Ryan Hurana, a researcher at the Montreal Institute for the Ethics of Artificial Intelligence, shares this view: "More importantly, learning in less than one try will drastically reduce the data requirements for building a functioning model." This could make AI more accessible to companies and industries that have hitherto been hampered by data requirements in this area. It can also improve the privacy of the data, as training utility models will require less information from people.

Sukholutsky emphasizes that the research is at an early stage. Nevertheless, it already excites the imagination. Whenever an author starts presenting his paper to fellow researchers, their first reaction is to argue that the idea is beyond the realm of possibility. When they suddenly realize they are wrong, a whole new world opens up.