In this post, we share a selection of useful data science sources recommended by the co-founder and CTO of DAGsHub, a community and web platform for data version control and collaboration between data scientists and machine learning engineers. The selection ranges from Twitter accounts to full-fledged engineering blogs aimed at readers who know exactly what they are looking for.

From the Author:

You are what you eat, and as a knowledge worker you need a good information diet. I want to share the sources on Data Science, Artificial Intelligence and related technologies that I find most useful or engaging. I hope this helps you too!

Two Minute Papers

A YouTube channel that is good for keeping up with the latest news. The channel is updated frequently, and the presenter brings infectious enthusiasm and a positive attitude to every topic covered. Expect coverage of interesting work not only in AI, but also in computer graphics and other visually appealing fields.

Yannic Kilcher

On his YouTube channel, Yannic explains significant deep learning research in technical detail. Instead of reading a paper yourself, it is often quicker and easier to watch one of his videos to gain a deeper understanding of important work. The explanations convey the essence of each paper without neglecting the mathematics and without getting lost in the details. Yannic also shares his views on how studies compare to each other, how seriously to take the results, broader interpretations, and so on. These are insights that beginners (or non-academic practitioners) would find hard to reach on their own.

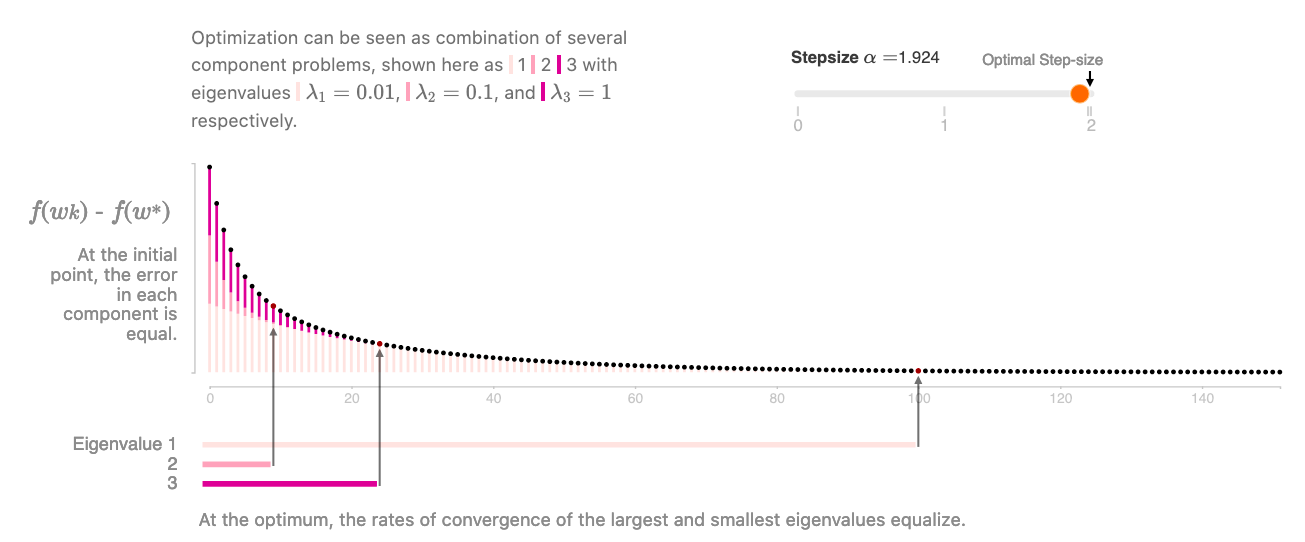

Distill.pub

In their own words:

Machine learning research should be clear, dynamic and vivid. Distill is here to help.

Distill is a unique publication for machine learning research. Articles are accompanied by stunning visualizations that give the reader a more intuitive understanding of each topic. Spatial thinking and imagination tend to work very well for understanding topics in Machine Learning and Data Science. Traditional publication formats, by contrast, tend to be rigid in structure, static and dry, and sometimes overly "mathematical". Chris Olah, one of the creators of Distill, also maintains an amazing personal blog on GitHub. It hasn't been updated for a long time, but it remains a collection of some of the best explanations ever written on deep learning. In particular, the explanation of LSTMs helped me a lot!


Sebastian Ruder

Sebastian Ruder writes a very informative blog and newsletter, primarily about the intersection of neural networks and natural language processing. He also gives a lot of advice to researchers and conference presenters, which can be very helpful if you are in academia. Sebastian's articles usually take the form of surveys that summarize and explain the state of current research and methods in a particular area. This makes them extremely useful for practitioners who want to get their bearings quickly. Sebastian also tweets.

Andrej Karpathy

Andrej Karpathy needs no introduction. In addition to being one of the most famous deep learning researchers on Earth, he builds widely used tools such as arxiv-sanity-preserver as side projects. Countless people have entered the field through his Stanford course cs231n, and you will find it helpful to know his recipe for training neural networks. I also recommend watching his talk about the real-world challenges Tesla must overcome when applying machine learning at massive scale. The talk is informative, impressive and sobering. Besides writing about ML directly, Andrej Karpathy gives good life advice for ambitious scientists. Follow Andrej on Twitter and GitHub.

Uber Engineering

The Uber engineering blog is truly impressive in scope and breadth, covering a ton of topics, including artificial intelligence. What I particularly like about Uber's engineering culture is their tendency to release very interesting and valuable open source projects at a breakneck pace. Here are some examples:

- ludwig

- h3

- react-vis

- aresdb

And the list goes on and on. Hats off, Uber!

OpenAI Blog

Controversy aside, the OpenAI blog is undoubtedly excellent. From time to time, it publishes content and ideas about deep learning that can only come from work at OpenAI's scale, such as the hypothesized deep double descent phenomenon. The OpenAI team posts infrequently, but what they post matters.


Taboola Blog

The Taboola blog isn't as well known as some of the other sources in this post, but I find it unique: the authors write about very mundane, real-world challenges of applying ML in production for a "normal" business. There is less about self-driving cars and RL agents beating world champions, and more about "how do I know my model is now predicting things with false confidence?" These problems are relevant to almost everyone working in the field, and they receive less press coverage than the more mainstream AI topics, but it still takes world-class talent to tackle them properly. Fortunately, Taboola has both that talent and the willingness and ability to write about it so that other people can learn too.

Reddit

Along with Twitter, Reddit is hard to beat for stumbling upon research, tools, or the wisdom of the crowd.

- reddit.com/r/machinelearning

- reddit.com/r/datascience

State of AI

Reports are published only once a year, but they are densely packed with information. Compared to other sources on this list, this one is more accessible to non-technical business people. What I love about the reports is that they try to provide a more holistic view of where industry and research are headed, linking together advances in hardware, research, business, and even geopolitics from a bird's-eye view. Be sure to start at the end to read about conflicts of interest.

Podcasts

Quite frankly, I think podcasts are ill-suited for learning about technical topics. After all, they rely on sound alone to explain things, and data science is a very visual field. Podcasts tend to give you a reason to do more research later on, or to enjoy fun philosophical discussions. Still, here are some recommendations:

- The Lex Fridman podcast, when he talks to prominent researchers in the field of artificial intelligence. The episodes with François Chollet are especially good!

- Data Engineering Podcast. Good for hearing about new data infrastructure tools.

Awesome lists

These are less about keeping up to date and more about having resources at hand when you know what you are looking for:

- github.com/josephmisiti/awesome-machine-learning

- awesomedataengineering.com

Twitter

- fast.ai

- ML Github

- François Chollet, the creator of Keras, is now trying to update our understanding of what intelligence is and how to test it.

- Hardmaru, Research Scientist at Google Brain.

Conclusion

The original post may be updated as the author finds great sources of content that it would be a shame not to list. Feel free to follow him on Twitter if you want to recommend a new source! DAGsHub is also hiring a Data Science Advocate, so if you create your own data science content, feel free to write to the author of the post.
