When this topic comes up, we usually start with the thesis that Kubernetes is just Kubernetes, while OpenShift is a Kubernetes platform, like Microsoft AKS or Amazon EKS. Each of these platforms has its own strengths and targets its own audience. After that, the conversation turns into a comparison of the strengths and weaknesses of specific platforms.

Originally, we planned to write this post with a conclusion along the lines of: "Listen, it doesn't matter where you run your code, whether on OpenShift, AKS, EKS, some custom Kubernetes, or any other Kubernetes (for brevity, let's call it KUK): it's really simple everywhere."

We then planned to take the simplest "Hello World" and use it to show what KUK and Red Hat OpenShift Container Platform (hereinafter OCP, or simply OpenShift) have in common and where they differ.

However, as we wrote this post, we realized that we have been using OpenShift for so long and so heavily that we no longer notice how it has grown into an amazing platform, one that is much more than just a Kubernetes distribution. We had come to take OpenShift's maturity and simplicity for granted and lost sight of its brilliance.

So the time has come to make amends: we will compare, step by step, what it takes to get our "Hello World" running on KUK and on OpenShift, as objectively as we can (although our personal attitude may occasionally show through). If you are interested in a purely subjective opinion on this question, you can read it here (EN). In this post, we stick to the facts.

Clusters

Our "Hello World" needs clusters to run on. We rule out public clouds right away so that we don't have to pay for servers, registries, networks, data transfer, and so on. Instead, we choose simple single-node clusters: Minikube (for KUK) and Code Ready Containers (for OpenShift). Both are really easy to install, but both require quite a lot of resources on your laptop.

Building on KUK

So let's go.

Step 1 - building our container image

I'll start off by deploying our "Hello World" to minikube. This will require:

1. Docker installed.

2. Git installed.

3. Maven installed (strictly speaking, this project ships the mvnw wrapper script, so you can do without it).

4. The source code itself, i.e. a clone of the repository github.com/gcolman/quarkus-hello-world.git

The first step is to create a Quarkus project. Don't be alarmed if you have never worked with Quarkus.io; it's easy. You just select the components you want to use in the project (RestEasy, Hibernate, Amazon SQS, Camel, etc.), and Quarkus, without any further involvement on your part, generates the Maven project and uploads everything to GitHub. That is, literally one mouse click and you're done. That's why we love Quarkus.

The easiest way to turn our "Hello World" into a container image is to use the Quarkus Maven container-image extensions, which do all the work. With Quarkus this has become really easy: add the container-image-docker extension, and you can build images with Maven commands.

./mvnw quarkus:add-extension -Dextensions="container-image-docker"
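By default, the Quarkus container-image extensions derive the image name from the project: the group defaults to your OS user name, the name to the application name (the Maven artifactId), and the tag to the project version. To get a predictable name such as the gcolman/quarkus-hello-world:1.0.0-SNAPSHOT image used later in this post, you can pin these values in application.properties. A minimal sketch, assuming the standard Quarkus project layout; the values are assumptions chosen to match that image name, so replace gcolman with your own Docker Hub account:

```bash
# Pin the image coordinates used by the Quarkus container-image extensions.
# Values are assumptions matching the image name used in this post.
mkdir -p src/main/resources
cat >> src/main/resources/application.properties <<'EOF'
quarkus.container-image.group=gcolman
quarkus.container-image.name=quarkus-hello-world
quarkus.container-image.tag=1.0.0-SNAPSHOT
EOF
```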

Finally, we build our image with Maven. As a result, our source code turns into a ready-made container image that the container runtime can launch.

./mvnw -X clean package -Dquarkus.container-image.build=true

That's all there is to it. Now you can start the container with the docker run command, mapping our service to port 8080 so that you can access it.

docker run -i --rm -p 8080:8080 gcolman/quarkus-hello-world:1.0.0-SNAPSHOT

Once the container has started, all that remains is to check with curl that our service is running:
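A minimal sketch of that check, written as a hypothetical helper that retries a few times while Quarkus starts up. The /hello path is an assumption (it is the default endpoint of a generated Quarkus project); adjust it if the repository maps its REST resource elsewhere:

```bash
# Hypothetical helper: polls the service until it responds or we give up.
# Assumes the REST endpoint is GET /hello on port 8080 (mapped by docker run).
wait_for_hello() {
  local url="${1:-http://localhost:8080/hello}"
  local attempts="${2:-10}"
  local i=1
  while [ "$i" -le "$attempts" ]; do
    # --fail makes curl exit non-zero on HTTP errors such as 404
    if curl --silent --fail "$url"; then
      echo  # the response body may not end with a newline
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  echo "no response from $url after $attempts attempts" >&2
  return 1
}
```

Run `wait_for_hello` in a second terminal while the container is running; it prints the response body on success and returns a non-zero status if the service never answers.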

So everything works and it was really easy and simple.

Step 2 - pushing our container to the container image repository

So far, the image we have created lives only in our local container storage. To use it in our KUK environment, we have to push it to a registry that the cluster can pull from. Kubernetes doesn't provide one out of the box, so we'll use Docker Hub: firstly, it's free, and secondly, (almost) everyone does it.

This is also very simple; all you need is a Docker Hub account.

So, we log in to Docker Hub and push our image there.
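In practice, that means logging in, tagging, and pushing. A minimal sketch wrapped in a hypothetical helper; DOCKERHUB_USER is an assumption standing in for your own Docker Hub account, since only the account owner can push to the gcolman namespace:

```bash
# Hypothetical helper: retags the locally built image for your Docker Hub
# account and pushes it. DOCKERHUB_USER must be set to your account name.
push_to_dockerhub() {
  local local_image="gcolman/quarkus-hello-world:1.0.0-SNAPSHOT"
  if [ -z "${DOCKERHUB_USER:-}" ]; then
    echo "set DOCKERHUB_USER to your Docker Hub account name" >&2
    return 1
  fi
  local remote_image="${DOCKERHUB_USER}/quarkus-hello-world:1.0.0-SNAPSHOT"

  docker login || return 1   # prompts for your Docker Hub credentials
  docker tag "$local_image" "$remote_image" || return 1
  docker push "$remote_image"
}
```

Usage: `DOCKERHUB_USER=yourname push_to_dockerhub`. If you push under a different account, use that image name in the kubectl create deployment command as well.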

Step 3 - launching Kubernetes

There are many ways to build the Kubernetes configuration for running our "Hello World", but we will use the simplest one; that's just the kind of people we are.

First, start the minikube cluster:

minikube start

Step 4 - deploying our container image

Now we need to describe our container image in Kubernetes configuration. In other words, we need a deployment definition whose pod template points to our container image on Docker Hub. One of the easiest ways to do this is to run the create deployment command pointing to our image:

kubectl create deployment hello-quarkus --image=gcolman/quarkus-hello-world:1.0.0-SNAPSHOT

With this command, we tell KUK to create a deployment whose pod specification references our container image. The command also applies this configuration to our minikube cluster, so the deployment pulls our container image and starts the pod on the cluster.
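Before moving on, it's worth confirming that the deployment actually rolled out. A minimal sketch using standard kubectl commands in a hypothetical helper; it relies on kubectl create deployment labelling the pods with app=<deployment name>, which is its default behaviour, and the 120-second timeout is an arbitrary choice:

```bash
# Hypothetical helper: waits for the deployment to become available,
# then lists its pods. kubectl create deployment labels pods with app=<name>.
wait_for_deployment() {
  local name="${1:-hello-quarkus}"
  kubectl rollout status "deployment/${name}" --timeout=120s || return 1
  kubectl get pods -l "app=${name}"
}
```

Usage: `wait_for_deployment hello-quarkus`.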

Step 5 - open access to our service

Now that our container image is deployed, it's time to think about how to configure external access to the RESTful service that our code implements.

There are many ways to do this. For example, the expose command automatically creates the appropriate Kubernetes objects, such as a service and its endpoints. That is exactly what we will do, running expose against our deployment object:

kubectl expose deployment hello-quarkus --type=NodePort --port=8080

Let's dwell on the --type option of the expose command for a moment.

When we expose our deployment and create the objects needed to reach our service, we want, among other things, to be able to connect from outside to the hello-quarkus service, which sits inside the cluster's software-defined network (SDN). The type parameter lets us create and attach things like load balancers that route traffic into that network.

For example, with --type=LoadBalancer, Kubernetes automatically provisions a load balancer in the public cloud that connects to our cluster. This is great, of course, but such a configuration is tightly tied to a specific public cloud, which makes it harder to move between Kubernetes instances in different environments.

In our example we use --type=NodePort, which means our service is reached via the node's IP address and a port number allocated on that node. This option doesn't depend on any public cloud, but it requires a few extra steps. First, you need your own load balancer, so we will deploy an NGINX load balancer in our cluster.
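To see what NodePort means in practice, you can look up the port Kubernetes allocated for the service and combine it with the node's IP address. A minimal sketch in a hypothetical helper; minikube also offers `minikube service hello-quarkus --url` as a built-in shortcut, which is the safer choice if your minikube driver doesn't make the node IP reachable from the host:

```bash
# Hypothetical helper: builds the NodePort URL for a service from the
# node IP reported by minikube and the nodePort allocated by Kubernetes.
nodeport_url() {
  local svc="${1:-hello-quarkus}"
  local ip port
  ip="$(minikube ip)" || return 1
  port="$(kubectl get service "$svc" -o jsonpath='{.spec.ports[0].nodePort}')" || return 1
  echo "http://${ip}:${port}"
}
```

Usage, assuming the /hello endpoint: `curl "$(nodeport_url)/hello"`.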

Step 6 - install the load balancer

Minikube has a number of platform features, such as ingress controllers, that make it easy to create the components needed for external access. Minikube ships with the Nginx ingress controller as an addon; we just need to enable and configure it.

minikube addons enable ingress

With just this one command, we get an Nginx ingress controller running inside our minikube cluster, and its pod shows up in the cluster's pod list:

ingress-nginx-controller-69ccf5d9d8-j5gs9 1/1 Running 1 33m
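The line above comes from a pod listing. A minimal check in a hypothetical helper that finds the controller pod without hardcoding a namespace, since the namespace the addon uses depends on the minikube version (for example kube-system or ingress-nginx):

```bash
# Hypothetical helper: succeeds once an ingress-nginx controller pod is Running.
# Searches all namespaces because the addon's namespace varies between
# minikube versions.
ingress_controller_ready() {
  kubectl get pods --all-namespaces --no-headers \
    | grep 'ingress-nginx-controller' \
    | grep -q 'Running'
}
```

Usage: `ingress_controller_ready && echo "ingress controller is up"`.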

Step 7 - Configuring Ingress

Now we need to configure the Nginx ingress controller to accept hello-quarkus requests.
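The configuration itself is not spelled out here, so the following is a minimal sketch of what ingress.yml might contain, written for the networking.k8s.io/v1 Ingress API (older clusters used a beta API version instead). It assumes the hello-quarkus service created by kubectl expose on port 8080, the nginx ingress class provided by the minikube addon, and the hello-quarkus.info host name that goes into /etc/hosts:

```bash
# Write a minimal Ingress that routes hello-quarkus.info to the hello-quarkus
# service on port 8080 through the nginx ingress class.
cat > ingress.yml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-quarkus-ingress
spec:
  ingressClassName: nginx
  rules:
    - host: hello-quarkus.info
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: hello-quarkus
                port:
                  number: 8080
EOF
```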

Finally, we need to apply this configuration.

kubectl apply -f ingress.yml

Since we are doing all this on our own computer, we simply map the host name to our node's IP address in the /etc/hosts file, so that HTTP requests for hello-quarkus.info reach the NGINX ingress controller in our minikube.

192.168.99.100 hello-quarkus.info
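The IP address above is specific to the authors' machine (192.168.99.100 is typical of VirtualBox-based minikube setups); yours is reported by minikube ip. A minimal sketch with hypothetical helpers that append the entry for the current cluster:

```bash
# Hypothetical helpers: format an /etc/hosts line, and append one that points
# hello-quarkus.info at the current minikube node.
hosts_entry() {
  printf '%s %s\n' "$1" "$2"
}

add_minikube_host() {
  local host="${1:-hello-quarkus.info}"
  local ip
  ip="$(minikube ip)" || return 1
  # /etc/hosts is root-owned, so append through sudo tee
  hosts_entry "$ip" "$host" | sudo tee -a /etc/hosts >/dev/null
}
```

Usage, assuming the /hello endpoint: `add_minikube_host && curl http://hello-quarkus.info/hello`.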

That's it: our service in minikube is now reachable from outside through the Nginx ingress controller.

Well, that was easy, right? Or not really?

Launch on OpenShift (Code Ready Containers)

Now let's see how this is all done on the Red Hat OpenShift Container Platform (OCP).

As with minikube, we go with a single-node OpenShift cluster, in the form of Code Ready Containers (CRC). Its predecessor was called Minishift and was based on the OpenShift Origin project; CRC, by contrast, is built on Red Hat OpenShift Container Platform.

And here, forgive us, we can't help but say: "OpenShift is great!"

Initially, we meant to write that developing on OpenShift is no different from developing on Kubernetes. And in essence, that's true. But while writing this post, we remembered how many unnecessary steps you have to take when you don't have OpenShift, which is why, once again, it is wonderful. We love it when things are easy, and how easily our example is deployed and run on OpenShift compared to minikube is, in fact, what prompted us to write this post.

Let's go through the process and see what we need to do.

So, in the minikube example, we started with Docker ... Stop, we no longer need Docker installed on the machine.

And we don't need Git installed locally.

And Maven is not needed.

And you don't have to create a container image with your hands.

And there is no need to look for a container image registry.

And you don't need to install an ingress controller.

And you don't need to configure ingress either.

You get it, right? You don't need any of the above to deploy and run our application on OpenShift. And the process itself looks like this.

Step 1 - Launching Your OpenShift Cluster

We use Red Hat Code Ready Containers, which is essentially the same thing as Minikube, but with a full-fledged single-node OpenShift cluster.

crc start
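On a fresh machine, crc start is usually preceded by a one-time crc setup, and you still need to log in with the oc client and create a project before deploying anything. A minimal sketch in a hypothetical helper, assuming CRC's defaults at the time of writing (the developer/developer user and the api.crc.testing API endpoint); crc console --credentials prints the exact login commands for your installation, and the project name quarkus-demo is hypothetical:

```bash
# Hypothetical helper: prepares the host, starts CRC, logs in as the default
# unprivileged user and creates a project. Credentials and API URL are
# assumed CRC defaults; quarkus-demo is a hypothetical project name.
start_crc_and_login() {
  crc setup || return 1          # one-time host preparation
  crc start || return 1          # asks for the pull secret on first start
  eval "$(crc oc-env)"           # puts the oc binary shipped with CRC on PATH
  oc login -u developer -p developer https://api.crc.testing:6443 || return 1
  oc new-project "${1:-quarkus-demo}"
}
```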

Step 2 - Build and Deploy the Application to the OpenShift Cluster

It is at this step that OpenShift's simplicity and convenience show in all their glory. As with all Kubernetes distributions, we have many ways to run an application on a cluster. And, as with KUK, we deliberately choose the simplest one.

OpenShift has always been designed as a platform for both building and running containerized applications. Building containers has always been an integral part of it, so it adds a number of extra Kubernetes resources (such as BuildConfig and ImageStream) for related tasks.

We will be using the OpenShift Source-to-Image (S2I) process, which offers several ways to take our source (code or binaries) and turn it into a container image that runs on an OpenShift cluster.

For this we need two things:

- Our source code in a Git repository.

- A builder image on which the build is based.

There are many such images, maintained both by Red Hat and by the community; we will use the OpenJDK image, since we are building a Java application.

You can start an S2I build either from the OpenShift Developer console or from the command line. We'll use the oc new-app command, telling it where to get the builder image and our source code (the two are joined with a ~):

oc new-app registry.access.redhat.com/ubi8/openjdk-11:latest~https://github.com/gcolman/quarkus-hello-world.git

That's it: our application has been created. Along the way, the S2I process did the following:

- Created a build pod to carry out all the work of building the application.

- Created an OpenShift BuildConfig.

- Pulled the builder image into OpenShift's internal container registry.

- Cloned the "Hello World" repository locally.

- Detected a Maven pom.xml in the repository and compiled the application with Maven.

- Created a new container image containing the compiled Java application and put that image into the internal container registry.

- Created a Kubernetes Deployment with the pod specification, a Service, and so on.

- Deployed the container image.

- Shut down the build pod once the build finished.

That's a long list, but the key point is that the entire build takes place inside OpenShift, the internal container registry is inside OpenShift, and the build process creates all the Kubernetes components and runs them in the cluster.

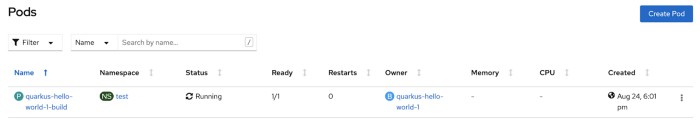

If you follow the S2I process in the console, you can see the build pod being started during the build.

Now let's take a look at the logs of the builder pod: first, they show Maven doing its job and downloading the dependencies needed to build our Java application.

After the Maven build completes, the container image build starts, and the resulting image is pushed to the internal registry.
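From the command line, the same build logs can be followed with oc. A minimal sketch in a hypothetical helper, assuming the BuildConfig is named quarkus-hello-world, which is the name oc new-app derives from the repository and matches the service name used in the next steps:

```bash
# Hypothetical helper: shows the build pod and streams the logs of the
# latest build of the BuildConfig created by oc new-app.
follow_build_logs() {
  local app="${1:-quarkus-hello-world}"
  oc get pods            # a <app>-1-build pod appears while S2I runs
  oc logs -f "bc/${app}"
}
```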

That's it, the build process is complete. Now let's make sure that the pods and services of our application are running in the cluster.

oc get service
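oc get service lists only the services. To check the pods too, and to wait until the new deployment is available, here is a minimal sketch in a hypothetical helper. It assumes oc new-app created a Deployment named quarkus-hello-world; older OpenShift versions create a DeploymentConfig instead, in which case use dc/ in place of deployment/:

```bash
# Hypothetical helper: waits for the application's rollout and lists pods.
# Assumes a Deployment named after the application (older versions: dc/).
check_app_running() {
  local app="${1:-quarkus-hello-world}"
  oc rollout status "deployment/${app}" || return 1
  oc get pods   # expect a Running application pod and a Completed build pod
}
```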

That's all: a single command built and deployed the application. Now we just have to expose this service for external access.

Step 3 - expose the service for external access

As with KUK, our "Hello World" on OpenShift also needs a router to direct external traffic to a service inside the cluster, and OpenShift makes this very easy. First, an HAProxy-based routing component is installed in the cluster by default (it can be swapped for something else, such as NGINX). Second, there are special, highly configurable resources called Routes, which resemble Ingress objects in good old Kubernetes (in fact, OpenShift Routes strongly influenced the design of Ingress, and Ingress objects can now be used in OpenShift too). For our "Hello World", as in almost every other case, the standard Route with no additional configuration is all we need.

To create a routable FQDN for "Hello World" (yes, OpenShift has its own DNS for routing by service name), we simply expose our service:

oc expose service quarkus-hello-world
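oc expose service creates a Route object for us. For reference, a roughly equivalent declarative Route might look like the following minimal sketch; it assumes the quarkus-hello-world service created by oc new-app and leaves spec.host unset so that OpenShift generates the host name itself:

```bash
# Write a minimal Route pointing at the quarkus-hello-world service.
# No spec.host is set, so the router assigns a generated host name.
cat > route.yml <<'EOF'
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: quarkus-hello-world
spec:
  to:
    kind: Service
    name: quarkus-hello-world
EOF
```

It could be applied with `oc apply -f route.yml` as an alternative to oc expose service.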

If you look at the newly created Route, you can find the FQDN and other routing information there:

oc get route

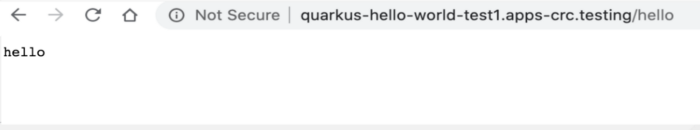

Finally, we access our service from the browser:
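From a terminal, the same check can be done with curl. A minimal sketch in a hypothetical helper that reads the generated host name from the Route; the /hello path in the usage line is again an assumption:

```bash
# Hypothetical helper: prints the external URL of the application's Route.
route_url() {
  local app="${1:-quarkus-hello-world}"
  local host
  host="$(oc get route "$app" -o jsonpath='{.spec.host}')" || return 1
  echo "http://${host}"
}
```

Usage: `curl "$(route_url)/hello"`.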

Now that was really easy!

We love Kubernetes and everything the technology lets us do, and we also love simplicity and lightness. Kubernetes was built to make it incredibly easy to operate distributed, scalable containers, but on its own it is no longer enough to make rolling out applications simple. This is where OpenShift comes in: it keeps pace with the times and offers a Kubernetes focused first and foremost on the developer. A lot of effort has gone into making OpenShift a platform for developers, including tools such as S2I, odo, the Developer Portal, the OpenShift Operator Framework, IDE integration, Developer Catalogs, Helm integration, monitoring, and much more.

We hope this article was interesting and useful for you. You can find additional resources, materials, and other useful things for developing on the OpenShift platform on the Red Hat Developers portal.