See the previous articles: "Simple motion recognition algorithm" and "An algorithm for finding the displacement of objects in an image". As a reminder, I was motivated to write these articles by a problem I ran into when I started my master's thesis, "Analysis of the spatial structure of dynamic images": it is very hard to find ready-made examples of algorithms for recognizing patterns and moving objects. Everywhere, both in the literature and on the Internet, there is nothing but bare theory. Quite a long time has passed since then. I successfully defended my thesis and received a diploma with honors, and now I am writing to share my experience.

So, when I started working on my thesis, my knowledge of computer vision was zero. Where did I start? With the simplest experiments on images, which are described in the articles above. I wrote a couple of primitive algorithms: one showed, as blobs, where the moving objects were located, and the other found a small image fragment inside a larger image (it worked, naturally, slowly).

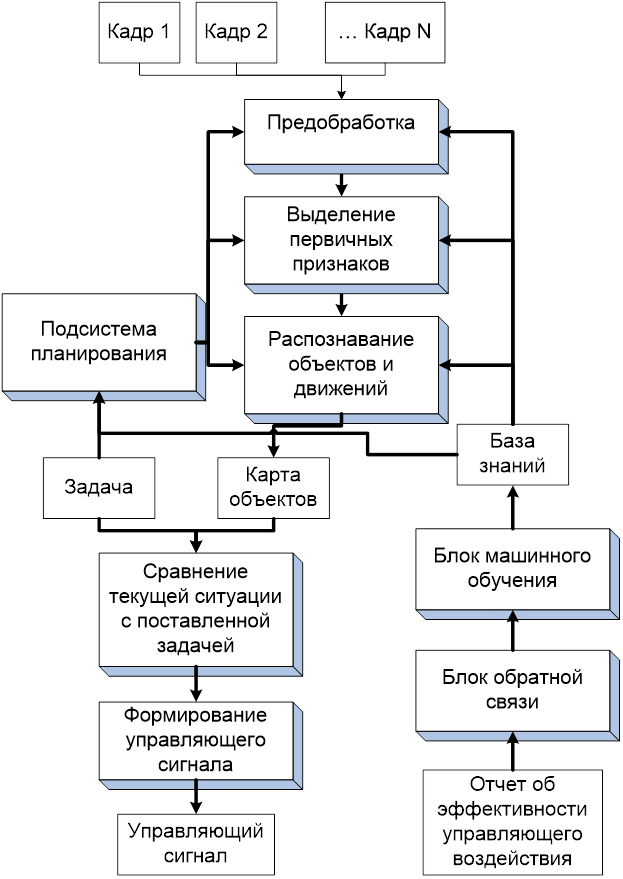

What next? Next, I got stuck. My task was to have an unmanned aerial vehicle navigate using aerial photographs or track a car moving along a road, and I had no idea how to approach it. So what did I do? I read the theory. And the theory said that computer vision is broken down into the following stages:

- Image preprocessing (noise removal, contrast enhancement, scaling, and so on).

- Feature detection (lines, edges, points of interest).

- Detection, segmentation.

- High-level processing.

Well, OK, I drew a diagram of the program that should do all this:

In short, it turned out that I had to create something grandiose, on the level of artificial intelligence. Well, OK, I'll try to create it. I opened Visual Studio and started sketching out classes in C#. More precisely, class stubs. A little later I realized what I had gotten myself into…

So, I started on the first step in practice: preprocessing. I started with it because:

- It is the simplest step.

- It is the first step on the list.

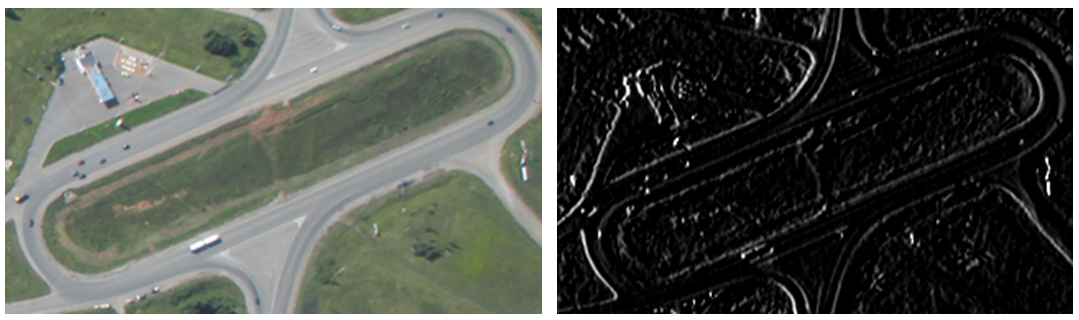

I began applying different filters to the image to see what would happen. For example, I tried the Sobel filter:

Remove noise from the image using Gaussian blur: I

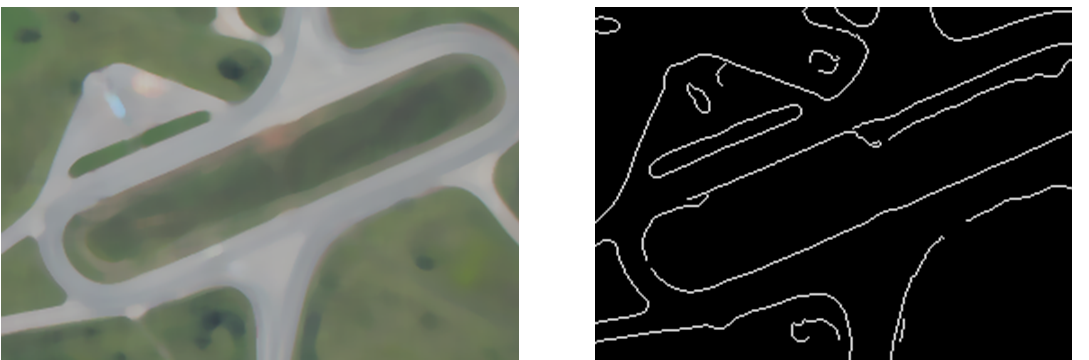

studied median filtering and edge selection:

A lecture on computer vision from the lecture hall helped me a lot.
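To make the preprocessing step more concrete, here is a minimal pure-Python sketch of two of the filters mentioned above, the Sobel operator and a 3x3 median filter. It is not the code from the thesis (which was written in C#). The function names `sobel_magnitude` and `median3x3` are made up for illustration, and the image is assumed to be a plain list of rows of grayscale values.

```python
# Minimal pure-Python sketches of two preprocessing filters.
# ASSUMPTION: an image is a list of rows of grayscale numbers.
# Border pixels are left at 0 (Sobel) or copied unchanged (median).

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img):
    """Approximate gradient magnitude |Gx| + |Gy| for each interior pixel."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * p
                    gy += SOBEL_Y[dy][dx] * p
            out[y][x] = abs(gx) + abs(gy)
    return out


def median3x3(img):
    """Replace each interior pixel with the median of its 3x3 neighborhood."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            window = sorted(
                img[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)
            )
            out[y][x] = window[4]  # middle of the 9 sorted values
    return out
```

On a vertical step edge the Sobel response is nonzero only next to the step, and the median filter removes a single bright outlier pixel from a flat region. In practice a library such as OpenCV would do the same work much faster.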

So, I knew much more by now, but it was still unclear how to solve the problem. By that time, the topic and task of the master's thesis had been revised several times, and in the end it was formulated as follows: "Tracking the trajectory of a UAV using aerial photography frames." That is, I needed to take a series of photographs and reconstruct the trajectory from them.

I got the idea of describing each contour as a polyline (a chain of many segments) and then comparing how far these lines had shifted. But the contours, even in two adjacent frames, turned out to be so different that there was no way to adequately compare the resulting sets of polylines. I tried to improve the contours themselves using various methods and their combinations:

- Classic Canny edge detection from the OpenCV library.

- An improved edge detection algorithm developed by my supervisor.

- Contour extraction by binarization.

- Contour extraction by segmentation. Segmentation was carried out in various ways, in particular using texture features.

As a result, we ended up with a jumble of algorithms that worked very slowly and did not bring us one iota closer to the result. Some of my work was used as material for this article.

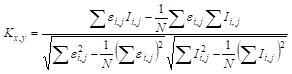

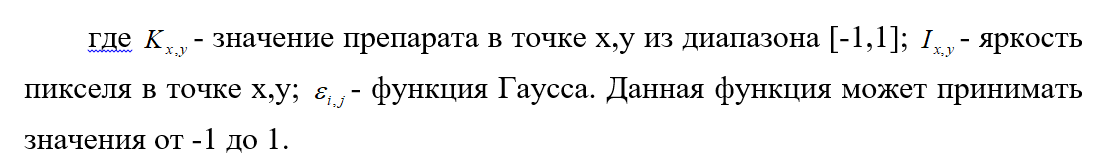

Well, then my supervisor suggested an idea: use special points (key points). He even gave me an algorithm for computing them. I must say it was a very non-standard method: not a Harris detector, nor BRISK, MSER, or AKAZE, although I tried those too. But, as it turned out, the detector proposed by my supervisor worked better. Here's how it works. First, we calculate the contour preparation using this formula:

Then we find the extrema of this function. These are the special points. Notably, the points come in two types: "peaks" and "pits". Here is an example of these points in an image:

Next, the 50 points with the strongest response are selected from the points obtained. Triangles are built on all of these points; the number of triangles formed by these points is equal to:

where k is the number of special points involved in the calculation. For each triangle, a special index from 0 to 16383 is calculated. The next step is to distribute the triangles over a special array in which the cell number corresponds to the triangle index. Each cell of this array is a list of triangles. Such an array is built for each of the two frames being compared. The comparison is made by matching each cell in one array with the corresponding cell in the array of the other frame. In total, 16384 groups need to be matched, which a computer can do in a fairly short time.
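The bucketing step can be sketched roughly as follows. The text does not say how the triangle index is computed, so `triangle_index` below is purely hypothetical: it quantizes the two smallest interior angles into 128 bins each (128 × 128 = 16384), which at least makes the index independent of shift, rotation, and uniform scale. The sketch also assumes that every triple of points forms one triangle, which for k = 50 gives 19600 triangles.

```python
import math
from itertools import combinations

NUM_BUCKETS = 16384  # index range 0..16383, as stated in the text
BINS = 128           # ASSUMPTION: two quantized angles, 128 * 128 = 16384


def interior_angle(p, q, r):
    """Interior angle of triangle pqr at vertex p, in radians."""
    v1 = (q[0] - p[0], q[1] - p[1])
    v2 = (r[0] - p[0], r[1] - p[1])
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    return abs(math.atan2(cross, dot))


def triangle_index(a, b, c):
    """HYPOTHETICAL index in 0..16383 built from the two smallest angles."""
    angles = sorted(
        [interior_angle(a, b, c), interior_angle(b, c, a), interior_angle(c, a, b)]
    )
    # The smallest angle never exceeds pi/3, the middle one never exceeds pi/2.
    i = min(int(angles[0] / (math.pi / 3) * BINS), BINS - 1)
    j = min(int(angles[1] / (math.pi / 2) * BINS), BINS - 1)
    return i * BINS + j


def build_buckets(points):
    """Distribute all triangles over an array of lists, one list per index."""
    buckets = [[] for _ in range(NUM_BUCKETS)]
    for tri in combinations(points, 3):  # every triple of points
        buckets[triangle_index(*tri)].append(tri)
    return buckets
```

With two such arrays, one per frame, only triangles that land in the same cell need to be compared, which is what keeps the matching cheap.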

When matching the arrays, we fill in a matching matrix. The horizontal axis of the matrix is the angle between the matched triangles, and the vertical axis is the scale, calculated as the ratio of the lengths of their longest sides. The angle and scale we are looking for correspond to the cell of the matrix that has the most matches. A similar comparison is made to calculate the horizontal and vertical displacement of the images.
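The voting idea behind the matching matrix can be illustrated with a small sketch. It is a simplification under stated assumptions, not the method from the thesis: the cell sizes (`ANGLE_BINS`, `SCALE_STEP`) are invented, and the orientation of a triangle is assumed to be the direction from the midpoint of its longest side to the opposite vertex. Only the scale, the ratio of the longest sides, is taken directly from the text.

```python
import math
from collections import Counter

ANGLE_BINS = 72    # ASSUMPTION: 5-degree cells along the angle axis
SCALE_STEP = 0.05  # ASSUMPTION: cell width along the scale axis


def longest_side(tri):
    """Return (length, opposite_vertex, midpoint) of the longest side."""
    best = None
    for k in range(3):
        p, q, r = tri[k], tri[(k + 1) % 3], tri[(k + 2) % 3]
        length = math.dist(p, q)
        if best is None or length > best[0]:
            mid = ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)
            best = (length, r, mid)
    return best


def orientation(tri):
    """ASSUMED orientation: direction from the longest side to its apex."""
    _, apex, mid = longest_side(tri)
    return math.atan2(apex[1] - mid[1], apex[0] - mid[0])


def vote(pairs):
    """Fill the (angle, scale) matrix and return its best cell.

    pairs: iterable of (triangle_in_frame_a, triangle_in_frame_b).
    Returns (angle_in_degrees, scale, votes), or None if nothing voted.
    """
    votes = Counter()
    for tri_a, tri_b in pairs:
        len_a, len_b = longest_side(tri_a)[0], longest_side(tri_b)[0]
        if len_a == 0:
            continue  # degenerate triangle, no usable scale
        dtheta = (orientation(tri_b) - orientation(tri_a)) % (2 * math.pi)
        a_bin = round(dtheta / (2 * math.pi) * ANGLE_BINS) % ANGLE_BINS
        s_bin = round((len_b / len_a) / SCALE_STEP)
        votes[(a_bin, s_bin)] += 1
    if not votes:
        return None
    (a_bin, s_bin), count = votes.most_common(1)[0]
    return a_bin * 360 / ANGLE_BINS, s_bin * SCALE_STEP, count
```

Wrong pairings scatter their votes across many cells, while correct pairings agree on one angle and one scale, so the cell with the most votes wins even when many of the candidate pairs are wrong.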

Read more about this method in the article.

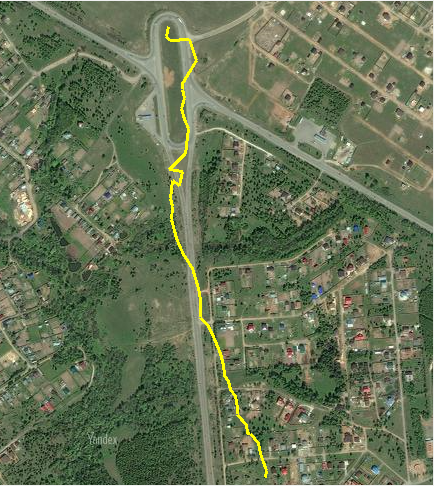

Thus, we have found the horizontal and vertical displacement, the angle, and the scale change (that is, whether the UAV went up or down). Now all that remains is to write a program that simulates tracing a trajectory over a series of frames and draws this trajectory, and we can say that the thesis is ready: