50 Years of Video Cards' History (1970-2020): A Complete History of Video Cards and Their Progenitors

Part 1

Computer graphics. Hearing these words, we picture stunning special effects from major blockbusters, beautiful character models from AAA games, and everything else that has to do with the visual beauty of modern technology. But computer graphics, like any technology, has been developing for many decades, traveling the path from displaying a few symbols on a monochrome display to amazing landscapes and heroes that become harder to distinguish from reality every year. Today we begin a story about how the history of computer graphics started, how the term "video card" and the abbreviation GPU appeared, and what technical boundaries the market leaders have pushed past year after year in an effort to win a new audience.

Prelude to the era. The birth of computers (1940s / 1950s)

Many associate the era of computer technology with the arrival of personal computers in the early 80s, but in fact the first computers appeared much earlier. Development of such machines began before the Second World War, and work on prototypes vaguely reminiscent of future PCs was under way by the late 1940s. One of the first such devices was the IBM 610, an experimental computer developed by John Lentz of Columbia University's Watson Laboratory. It was the first in history to receive the proud name "Personal Automatic Computer" (PAC), although the name was something of an exaggeration - the machine cost $55 thousand, and only 180 units were made.

The first impressive visual systems appeared in the same years. As early as 1951, IBM, with the participation of General Electric and a number of military contractors, developed a flight simulator for the needs of the army. It used three-dimensional visualization technology - the pilot sitting in the simulator saw a projection of the cockpit and could act as he would at the controls of a real plane. Later, the graphics prototype was used by Evans & Sutherland, which created the full-fledged CT5 pilot simulator, based on an array of DEC PDP-11 computers. Just think - it was still the 50s, and three-dimensional graphics already existed!

1971-1972. Magnavox Odyssey and PONG

The boom in semiconductor technology and microchip manufacturing completely changed the balance of power in a market formerly owned by bulky analog computers that occupied entire halls. Moving away from vacuum tubes and punch cards, the industry stepped into the era of family entertainment, introducing the Western world to "home video game systems," the great-grandmothers of modern consoles.

The pioneer of video game entertainment was a device called the Magnavox Odyssey, the first officially released gaming system. The Odyssey had controllers that look outlandish by modern standards, and its entire graphics system displayed only a line and two dots on the TV screen, which were controlled by the players. The creators of the device approached the matter with imagination, and the console came with special colored screen overlays that could "paint" the game worlds of several titles bundled with the Odyssey. A total of 28 games were released for the device, among them a seemingly simple ping-pong, which inspired enthusiasts from the young Atari company to release an arcade machine called Pong with an identical game. It was Pong that started the arcade craze, which, by the way, had completely captured both Japan and the Western world by the beginning of the 80s.

Despite its obvious simplicity, the Magnavox Odyssey used real cartridges - though largely for effect. There were no memory chips in them - the cartridges served as sets of jumpers that rearranged the line and dots on the screen, thereby changing the game. The primitive console was far from having a full-fledged video chip, but the popularity of the Magnavox Odyssey showed clear public interest, and many companies, sensing the potential for profit, started developing their own devices.

1976-1977. Fairchild Channel F and Atari 2600

The first serious battle for the newborn gaming market was not long in coming. In 1975, the rapidly aging Magnavox Odyssey disappeared from the shelves, and two devices fought in its place for the title of the best new-generation console - Channel F from Fairchild and the Atari VCS from the very company that gave the world Pong.

Although the two consoles were developed almost simultaneously, Atari fell behind - and Fairchild was the first to release its device, the Fairchild Video Entertainment System (VES).

The Fairchild console hit store shelves in November 1976 and was a treasure trove of technical strengths. The indistinct Odyssey controllers gave way to comfortable ones, fake cartridges were replaced with real ones (containing ROM chips with game data), and a speaker inside the console reproduced sounds and music from the running game. The console could draw an image using an 8-color palette (in black-and-white or color line mode) at a resolution of 102x54 pixels. Separately, it should be noted that the Fairchild F8 processor installed in the VES came from Fairchild Semiconductor, the company co-founded by Robert Noyce, who in 1968 went on to found a small but promising company called Intel.

Atari was on the verge of despair - the Stella project, the basis of the future console, was far behind schedule, and the market, as you know, does not wait. A lot of things that seemed innovative when the Fairchild VES was released were about to become an integral part of all future consoles. Realizing that everything was on the line, Atari founder Nolan Bushnell signed an agreement with Warner Communications, selling his brainchild for $28 million on the condition that the Atari console would hit the market as soon as possible.

Warner did not disappoint, and work on the console picked up with renewed vigor. To simplify the logic and reduce the cost of production, the famous engineer Jay Miner was brought into the project. He combined the video output and audio processing circuitry into a single chip, the TIA (Television Interface Adapter), which was the final touch before the console was ready. To annoy Fairchild, Atari marketers named the console VCS (Video Computer System), forcing the competitor to rename its system Channel F.

But this did not help Channel F compete with the new product - although only 9 games were ready when the console launched in 1977, developers quickly recognized the onset of a new technological era and began to use the power of the console to the full. The Atari VCS (later renamed the Atari 2600) was the first console based on a complex chip that processed not only video and audio but also commands received from the joystick. The modest sales, which initially embarrassed Warner, gave way to phenomenal success after the decision to license the arcade game Space Invaders from the Japanese company Taito. The cartridges, initially limited to 4 KB of memory, eventually grew to 32 KB, and the number of games ran into the hundreds.

The secret of Atari's success lay in the extremely simplified logic of the device, the ability of developers to program games flexibly using the 2600's resources (for example, changing the color of a sprite while it was being drawn), as well as its attractive appearance and convenient controllers called joysticks (literally "joy stick" - the stick of happiness). So if you wondered how the term became a household word in gaming, you can thank the Atari developers for it. As well as for the main image of all retro gaming - the funny alien from Space Invaders.

After the Atari 2600's success exceeded all forecasts, Fairchild left the video game market, deciding that the trend would soon fizzle out. Most likely, the company still regrets that decision.

1981-1986. The era of the IBM PC

Although Apple had introduced the Apple II back in 1977, forever changing the image of the accessible computer, the concept of the "personal computer" appeared a little later and belonged to a completely different company. Monumental IBM, with decades of cumbersome mainframes (whirring tape reels and flickering lights) behind it, suddenly stepped aside and created a market that had never existed before.

In 1981, the legendary IBM PC went on sale, preceded by one of the best ad campaigns in marketing history. “No one has ever been fired for buying IBM,” read the slogan, which has forever gone down in advertising history.

However, it was not only slogans and flashy advertising inserts that made the name of the IBM personal computer. It was for this machine that, for the first time in history, a complex graphics system was developed from two video adapters - the Monochrome Display Adapter (MDA) and the Color Graphics Adapter (CGA).

MDA was designed for text work, supporting 80 columns and 25 lines of ASCII characters on the screen at a resolution of 720x350 pixels. The adapter used 4 KB of video memory and displayed green text on a black screen. In this mode, it was easy and convenient to work with commands, documents and other daily tasks of the business sector.

The CGA, on the other hand, could be called a breakthrough in terms of graphics capabilities. The adapter offered a 4-bit (16-color) palette and resolutions of up to 640x200 pixels, had 16 KB of memory, and formed the basis of the computer graphics standard for the actively expanding line of IBM PC computers.
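The memory figures quoted for the two adapters can be cross-checked with a little arithmetic. The sketch below is only an illustration: the helper names are invented here, and it assumes (the text does not say so) that each MDA text cell takes 2 bytes, one for the character code and one for its attribute, and that CGA graphics modes pack pixels at 1 or 2 bits each.

```python
# Rough cross-check of the adapter figures quoted in the text.
# Assumptions (not from the article): 2 bytes per MDA text cell
# (character + attribute) and packed CGA pixels of 1 or 2 bits.

KB = 1024


def text_buffer_bytes(columns, rows, bytes_per_cell=2):
    """Memory needed to hold one screen of text cells."""
    return columns * rows * bytes_per_cell


def framebuffer_bytes(width, height, bits_per_pixel):
    """Memory needed to hold one packed-pixel graphics frame."""
    return width * height * bits_per_pixel // 8


# MDA: 80x25 characters on a 720x350 pixel screen
mda_bytes = text_buffer_bytes(80, 25)      # 4000 bytes, fits in 4 KB
mda_cell = (720 // 80, 350 // 25)          # (9, 14) pixels per character

# CGA: what fits into 16 KB
cga_640_2_colors = framebuffer_bytes(640, 200, 1)    # 16000 bytes
cga_320_4_colors = framebuffer_bytes(320, 200, 2)    # 16000 bytes
cga_640_16_colors = framebuffer_bytes(640, 200, 4)   # 64000 bytes

print("MDA text buffer:", mda_bytes, "bytes, cell size:", mda_cell)
print("CGA 640x200, 2 colors fits 16 KB:", cga_640_2_colors <= 16 * KB)
print("CGA 320x200, 4 colors fits 16 KB:", cga_320_4_colors <= 16 * KB)
print("CGA 640x200, 16 colors fits 16 KB:", cga_640_16_colors <= 16 * KB)
```

Under these assumptions a full 80x25 text screen fits comfortably into 4 KB, while 16 KB is enough for 640x200 only at 1 bit per pixel, so the full 16-color palette and the highest resolution could not be combined in a single graphics mode.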

However, there were serious drawbacks to using two different video output adapters. Numerous potential technical problems, the high cost of the devices and a number of other limitations pushed enthusiasts to work on a universal solution - a graphics adapter capable of working in both modes. The first such product on the market was the Hercules Graphics Card (HGC), released by the eponymous company Hercules in 1982.

Hercules Graphics Card (HGC)

According to legend, the founder of the Hercules company, Van Suwannukul, developed a system specifically for working on his doctoral dissertation in his native Thai language. The standard IBM MDA adapter did not render the Thai font correctly, which prompted the developer to start building HGC in 1982.

Support for 720x348 pixels for both text and graphics, as well as the ability to work in MDA and CGA modes, ensured a long life for the Hercules adapter. The company's legacy in the form of the universal video output standards HGC and HGC+ was used by developers of IBM-compatible computers, and later of a number of other systems, until the late 90s. However, the world did not stand still, and the rapid development of the computer industry (and its graphics side) attracted many other enthusiasts - among them four immigrants from Hong Kong - Kwok Yuen Ho, Lee Ka Lau, Francis Lau and Benny Lau, who founded Array Technology Inc, a company that the whole world would come to know as ATI Technologies Inc.

1986-1991. The first boom in the graphics card market. Early successes of ATI

After the release of the IBM PC, IBM did not remain at the forefront of computer technology for long. Already in 1984, Steve Jobs introduced the first Macintosh with an impressive graphical interface, and it became obvious to many that graphical technology was about to take a real leap forward. But despite losing industry leadership, IBM set itself apart from Apple and other competitors in its vision. IBM's open standards philosophy opened the door to any compatible device, which attracted numerous startups of its time to the field.

Among them was the young company ATI Technologies. In 1986, Hong Kong specialists introduced their first commercial product, the OEM Color Emulation Card.

Color Emulation Card

Expanding the capabilities of standard monochrome controllers, ATI engineers provided three font colors on a black screen - green, amber and white. The adapter had 16 KB of memory and shipped as a component of Commodore computers. In its first year of sales, the product brought ATI over $10 million.

Of course, this was only the first step for ATI - after an expanded graphics solution with 64 KB of video memory and the ability to work in three modes (MDA, CGA, EGA), the ATI Wonder line entered the market, and with its arrival the previous standards could be consigned to history.

Sounds too bold? Judge for yourself - adapters of the Wonder series received a 256 KB video memory buffer (4 times more!), and instead of a four-color palette, 16 colors were displayed on the screen at a resolution of 640x350. At the same time, there were no restrictions when working with various output formats - ATI Wonder successfully emulated any of the earlier modes (MDA, CGA, EGA), and, starting with the second series, it received support for the latest Extended EGA standard.

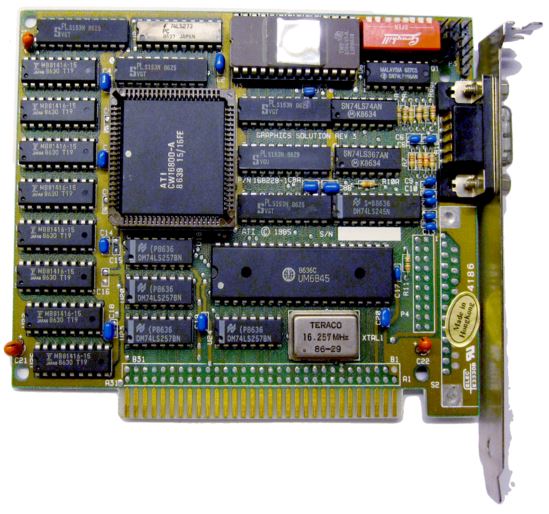

ATi Wonder

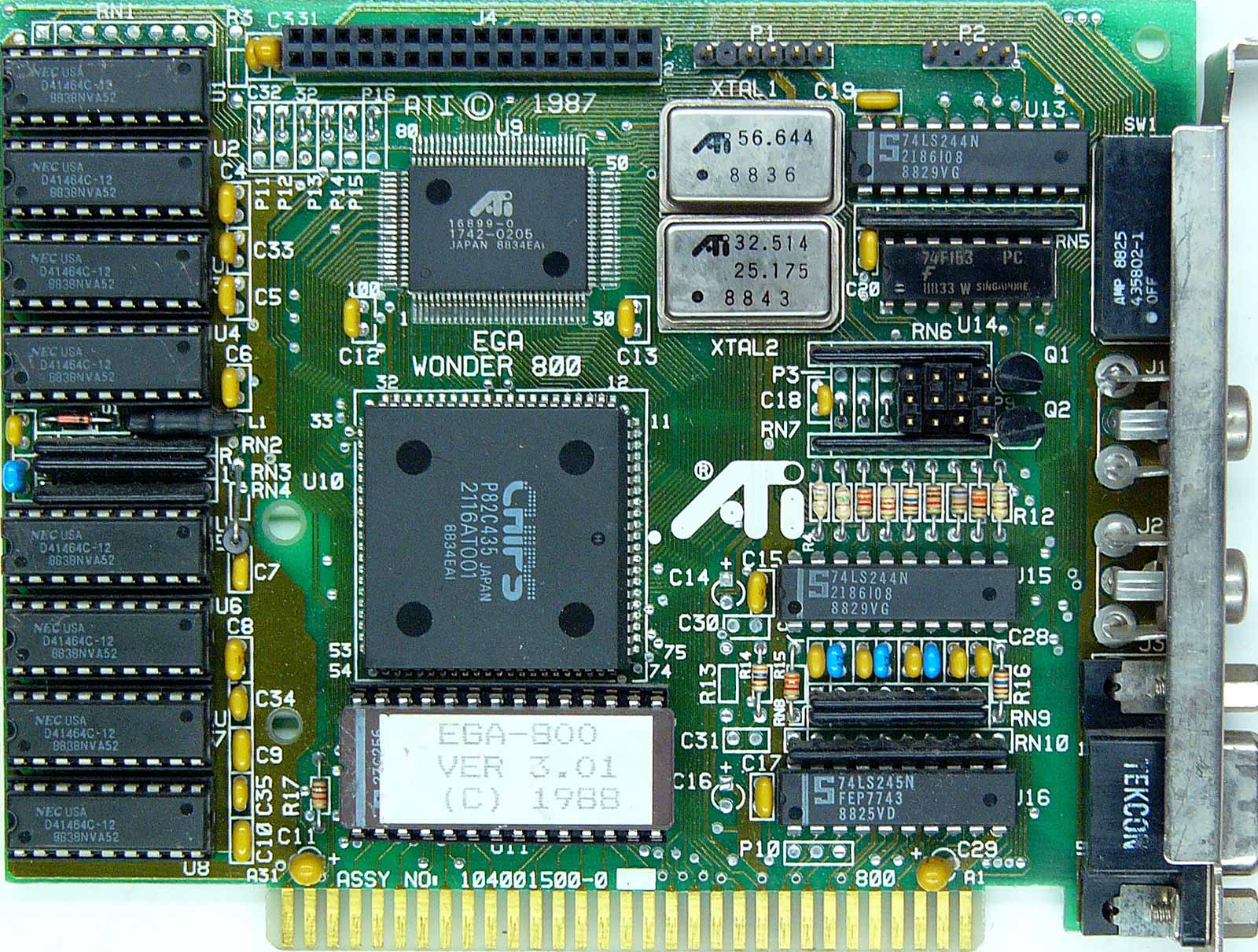

The culmination of the line's development in 1987 was the famous ATI EGA Wonder 800, which displayed a 16-color palette in VGA format at an incredibly high resolution of 800x600. The adapter was also sold in a more affordable version, the VGA Improved Performance Card (VIP), with limited VGA output support.

The first heyday of the video card market. ATI innovations, the beginning of the competition

ATI's significant success in the development of commercial graphics adapters attracted the attention of many other companies - between 1986 and 1987, brands such as Trident, SiS, Tamarack, Realtek, Oak Technology, LSI (G-2 Inc), Hualon, Cornerstone Imaging and Winbond entered the market. In addition to new faces, established representatives of Silicon Valley - companies such as AMD, Western Digital / Paradise Systems, Intergraph, Cirrus Logic, Texas Instruments, Gemini and Genoa - became interested in the graphics market, and each of them presented its first graphics product in the same period.

In 1987, ATI entered the OEM market as a supplier with the Graphics Solution Plus series of products. This line was designed to work with the 8-bit bus of IBM PC / XT computers based on the Intel 8086/8088 platform. The GSP adapters also supported the MDA, CGA and EGA output formats, with switching between them handled on the board itself. The device was well received in the market, and even a similar model from Paradise Systems with 256 KB of video memory (GSP had only 64 KB) did not prevent ATI from adding a new successful product to its portfolio.

Over the following years, the Canadian company ATI Technologies Inc. remained at the peak of graphics innovation, constantly outstripping the competition. The by then well-known Wonder line of adapters was the first on the market to switch to 16-bit color and received support for EVGA (in the Wonder 480 and Wonder 800+ adapters) and SVGA (in the Wonder 16). In 1989, ATI lowered the prices of the Wonder 16 line and added a VESA connector that made it possible to link two adapters together - arguably the first glimpse of the multi-card configurations that would reach the market much later.

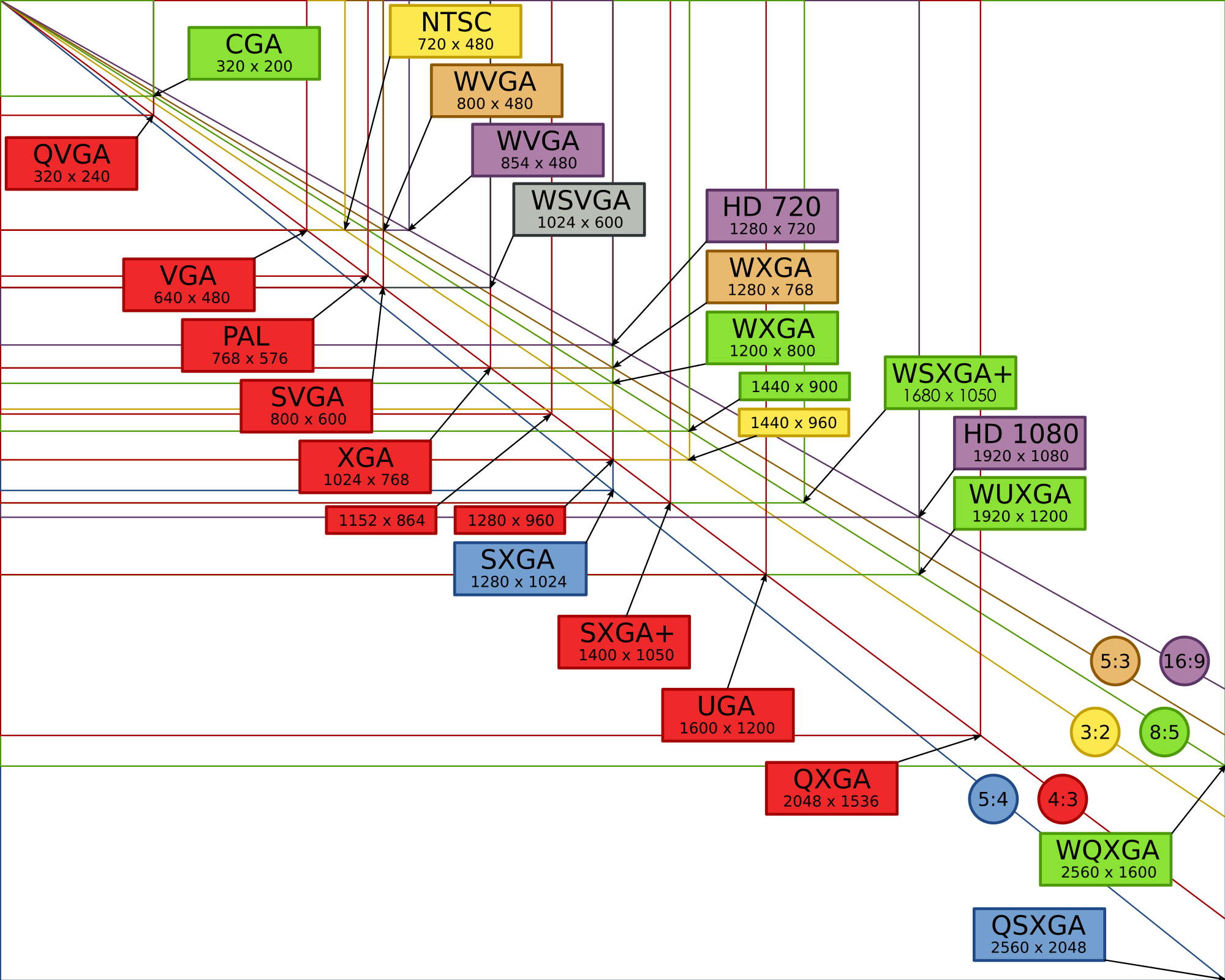

With countless formats and manufacturers proliferating across the market, a dedicated organization was needed to regulate them and develop key standards for market players. In 1988, VESA (Video Electronics Standards Association) was founded through the efforts of six key manufacturers and took over the centralization of standards, resolution formats and the color palettes of graphics adapters. The first format was SVGA (800x600 pixels), which was already used in ATI Wonder 800 cards. More followed later, and some (including HGC and HGC+) were used for decades.

ATI's technological leadership continued in the early 1990s. In 1991, Wonder XL went on sale - the first graphics adapter with support for 32 thousand colors and 800x600 resolution at a refresh rate of 60 Hz. This was achieved through the use of the Sierra RAMDAC converter. In addition, Wonder XL became the first adapter with 1 MB of onboard video memory.

In May of the same year, ATI introduced Mach8, the first product in the new Mach line, designed to handle simple 2D operations such as line drawing, color filling and bitmap operations. Mach8 was available for purchase both as a chip (for subsequent integration - for example, in OEM business systems) and as a full-fledged board. Today many will find it strange to release a separate adapter for such things, but 30 years ago many specialized calculations still rested on the shoulders of the central processor, while graphics adapters were intended for a narrow range of tasks.

However, this state of affairs did not last long. Following the interesting VGA Stereo F/X, a hybrid of a graphics adapter and a Sound Blaster card that emulated a mono codec on the fly, the industry leader presented a product for working with both 2D and 3D graphics - the VGA Wonder GT. By combining the capabilities of Mach8 and Wonder, ATI was the first to eliminate the need for an additional adapter to handle different types of tasks. The release of the popular Windows 3.0 operating system, which for the first time focused on a wide range of 2D graphics tasks, contributed to the significant success of the new products. Wonder GT was in demand among system integrators, which had a beneficial effect on the company's profits - in 1991, ATI's turnover exceeded $100 million. The future promised to be bright, but competition in the market never waned - new challenges awaited the leaders.

1992-1995. OpenGL development. The second boom in the graphics card market. New frontiers in 2D and 3D

In January 1992, Silicon Graphics Inc. introduced the first multi-platform OpenGL 1.0 programming interface, supporting both 2D and 3D graphics.

The future open standard was based on the proprietary library IRIS GL (Integrated Raster Imaging System Graphics Library). Realizing that many companies would soon bring libraries of this kind to market, SGI decided to make OpenGL an open standard compatible with any platform. The popularity of this approach is hard to overestimate - the entire market turned its attention to OpenGL.

Initially, Silicon Graphics aimed at the professional UNIX market, planning specific tasks for a future open library, but thanks to its availability for developers and enthusiasts, OpenGL quickly took a place in the emerging 3D games market.

However, not all major market players welcomed this SGI approach. Around the same time, Microsoft was developing its own Direct3D software library, and was in no hurry to integrate OpenGL support into the Windows operating system.

Direct3D was openly criticized by the famous Doom author John Carmack, who personally ported Quake to OpenGL for Windows, emphasizing the advantages of the open library's simple and understandable code over Microsoft's complex and "garbage" alternative.

John Carmack

But Microsoft's position remained unchanged, and after the release of Windows 95 the company refused to license the OpenGL Mini Client Driver (MCD), which would have let users decide for themselves which library to use when launching an application or a new game. SGI found a loophole by releasing an Installable Client Driver (ICD), which, in addition to OpenGL rasterization, received support for processing lighting effects.

The explosive growth in the popularity of OpenGL made SGI's solution popular in the workstation segment as well, which forced Microsoft to devote all possible resources to creating its proprietary library in the shortest possible time. The basis for the future API came from the RenderMorphics studio, bought in February 1995, whose Reality Lab software library formed the foundation of Direct3D.

The rebirth of the video card market. The wave of mergers and acquisitions

But let's go back a little. In 1993, the video card market experienced a rebirth, and many promising new companies came to the attention of the public. One of them was Nvidia, founded by Jensen Huang, Curtis Priem and Chris Malachowsky in April 1993. Huang, who had previously worked at LSI Logic, had long had the idea of starting a graphics card company, and his co-founders had just worked on the GX graphics architecture at Sun Microsystems. By joining forces and raising $40,000, the three enthusiasts launched a company that was destined to play a key role in the industry.

Jensen Huang

However, in those years no one dared to look into the future - the market was changing rapidly, new proprietary APIs and technologies appeared almost every month, and it was obvious that not everyone would survive the turbulent cycle of competition. Many of the companies that had entered the graphics arms race by the late 1980s were forced to declare bankruptcy, including Tamarack, Gemini Technology, Genoa Systems and Hualon, while Headland Technology was acquired by SPEA, and Acer, Motorola and Acumos became the property of Cirrus Logic.

As you might have guessed, ATI stood out in this cluster of mergers and acquisitions. Canadians continued to work hard and produce innovative products no matter what - even if it got much more difficult.

In November 1993, ATI introduced the VideoIt! video capture card, which was based on the 68890 decoder chip and was capable of recording a video signal at a resolution of 320x240 at 15 frames per second or 160x120 at 30 frames per second. Thanks to the integration of the Intel i750Pd VCP chip, the owner of the new card could compress and decompress the video signal in real time, which was especially useful when working with large amounts of data. VideoIt! was the first card on the market to use the system bus to communicate with the graphics accelerator, so the cables and connectors required previously were no longer needed.

ATI problems and S3 Graphics successes

For ATI, 1994 was a real test - due to serious competition, the company incurred losses of $4.7 million. The main cause of trouble for the Canadian developers was the success of S3 Graphics. The S3 Vision 968 graphics accelerator and the Trio64 adapter secured the American company a dozen major OEM contracts with market leaders such as Dell, HP and Compaq. What was the reason for such popularity? An unprecedented level of integration - the Trio64 graphics chip combined a digital-to-analog converter (DAC), a frequency synthesizer and a graphics controller in one package. The new S3 product used a unified frame buffer and supported hardware video overlay (implemented by allocating a portion of video memory during rendering).

The mass of advantages and the absence of obvious shortcomings in the Trio64 chip and its 32-bit sibling, the Trio32, led to the emergence of many partner boards based on them. Diamond, ELSA, Sparkle, STB, Orchid, Hercules and Number Nine offered their solutions. Variations ranged from basic ViRGE-based adapters for $169 to the ultra-powerful Diamond Stealth64 with 4 MB of video memory for $569.

In March 1995, ATI returned to the big game with a batch of innovations, introducing Mach64, the first 64-bit graphics accelerator on the market and the first to run on both PC and Mac systems. Along with the popular Trio 958, Mach64 provided hardware-accelerated video capabilities. Mach64 opened the way for ATI to enter the professional market - the Canadians' first solutions in this sector were the 3D Pro Turbo and 3DProTurbo + PC2TV accelerators. The new products were offered at a price of $899 with as much as 4 MB of video memory.

Another important newcomer to the graphics accelerator market was the technology startup 3Dlabs. The young company's priority was high-end graphics accelerators for the professional market - the Fujitsu Sapphire2SX with 4 MB of video memory was offered at $1600, and the ELSA Gloria 8 with 8 MB of onboard memory cost $2600, an incredible sum for those years. 3Dlabs tried to enter the mass-market gaming segment with the Gaming Glint 300SX, but a high price and only 1 MB of video memory kept the adapter from becoming popular.

Other companies also presented their products to the consumer market. Trident, an OEM supplier of 2D graphics solutions, unveiled the 9280 chip, which had all the benefits of Trio64 at an affordable price of $170 to $200. At the same time, Weitek's Power Player 9130 and Alliance Semiconductor's ProMotion 6410 went on sale, both providing excellent smoothness during video playback.

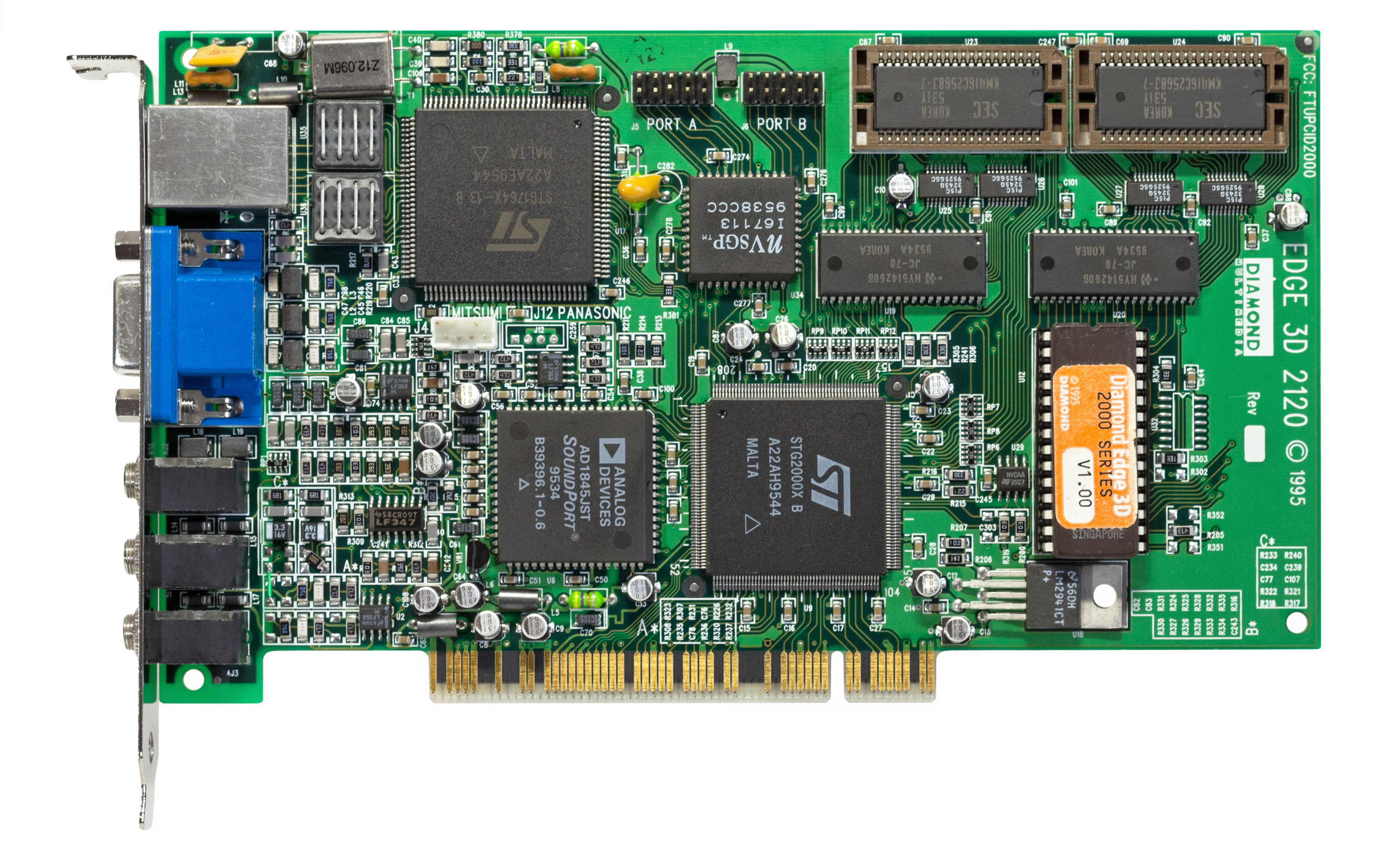

NV1 - Nvidia debut and problem areas

In May 1995, Nvidia joined the newcomers, presenting its first graphics accelerator under the symbolic name NV1. The product was the first on the commercial market to combine 3D rendering, hardware video acceleration and graphical interface acceleration. Its modest commercial success did not bother the young company in any way - Jen-Hsun Huang and his colleagues were well aware that it would be very difficult to break through in a market where new solutions were presented every month. But the profits from NV1 sales were enough to keep the company afloat and provide an incentive to keep working.

Diamond EDGE 3D 2120 (NV1)

ST Microelectronics was in charge of producing the chips on a 500 nm process, but unfortunately for Nvidia, just a few months after the introduction of partner solutions based on NV1 (for example, the Diamond Edge 3D), Microsoft introduced the first version of its long-awaited graphics API, DirectX 1.0. "Finally!" - exclaimed gamers from all over the world, but the manufacturers of graphics accelerators did not share their enthusiasm.

The main feature of DirectX was its reliance on triangular polygons. Many people believe that the notorious triangles have always been there, but this is a misconception. Nvidia engineers built quadratic texture mapping into their first product (its primitives were quadrilaterals rather than triangles), which is why applications and the first games with DirectX support caused a lot of compatibility problems for NV1 owners. To solve the problem, Nvidia included a handler in the driver that converted quadrilaterals into triangles, but performance in this mode left much to be desired.
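To give a feel for what converting quadrilaterals into triangles involves, here is a minimal sketch of the general idea. It is not Nvidia's driver code: the function names are made up for illustration, and it assumes a flat, convex quad given as four 2D vertices in winding order, which is a simplification of the surfaces the NV1 actually handled.

```python
# Illustrative only: split a flat, convex quad into two triangles.
# The function names and the flat 2D representation are assumptions
# made for this sketch, not a description of the NV1 driver.


def quad_to_triangles(quad):
    """Split a quad (4 vertices in winding order) along the v0-v2 diagonal."""
    if len(quad) != 4:
        raise ValueError("a quad needs exactly four vertices")
    v0, v1, v2, v3 = quad
    return [(v0, v1, v2), (v0, v2, v3)]


def triangle_area(triangle):
    """Area of a 2D triangle via the cross product of two edges."""
    (x0, y0), (x1, y1), (x2, y2) = triangle
    return abs((x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0)) / 2


square = [(0, 0), (4, 0), (4, 4), (0, 4)]
triangles = quad_to_triangles(square)
total_area = sum(triangle_area(t) for t in triangles)

print(triangles)
print("covered area:", total_area)
```

Every quad becomes two primitives, so a scene authored in quads roughly doubles its primitive count after conversion, which hints at why a software translation layer would cost performance.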

Most games with quadratic texture mapping support were ported from the Sega Saturn console. Nvidia considered these titles so important that it equipped the 4 MB NV1 model with two ports for the new console's controllers, connected to the card via ribbon connectors. When it went on sale (September 1995), Nvidia's first product cost customers $450.

By the time Microsoft's API was launched, most manufacturers of graphics accelerators depended heavily on proprietary solutions from other companies. When the developers at Bill Gates' company were just starting to work on their own graphics library, there were already many APIs on the market, such as S3d (S3), Matrox Simple Interface, Creative Graphics Library, C Interface (ATI) and SGL (PowerVR), later joined by NVLIB (Nvidia), RRedline (Rendition) and the famous Glide. This diversity greatly complicated life for the developers of new hardware, since the APIs were incompatible with each other and different games supported different libraries. The release of DirectX put an end to all third-party solutions, because using other proprietary APIs in Windows games simply no longer made sense.

But we cannot say that the new product from Microsoft was free of serious flaws. After the DirectX SDK was presented, many manufacturers of graphics accelerators lost the ability to control the hardware resources of video cards when playing digital video. Numerous driver problems on the recently released Windows 95 angered users accustomed to the stable operation of Windows 3.1. Over time, all the problems were resolved, but the main battle for the market lay ahead - ATI, which had taken a break, was preparing to conquer the universe of three-dimensional games with its new 3D Rage line.

ATI Rage - 3D Rage

The new graphics accelerator was demonstrated at the E3 1995 exhibition in Los Angeles. ATI engineers combined the advantages of the Mach 64 chip (and its outstanding 2D graphics capabilities) with a new 3D processing chip, taking full advantage of previous designs. The first ATI 3D accelerator, the 3D Rage (also known as Mach 64 GT), entered the market in November 1995.

ATI 3D Rage

As in the case of Nvidia, the engineers had to face a lot of problems - the later revisions of DirectX 1.0 caused numerous issues associated with the lack of a depth buffer (Z-buffer). The card had only 2 MB of EDO RAM, so 3D applications and games ran at a resolution of no more than 640x480 in 16-bit color or 400x300 in 32-bit color, while in 2D mode the screen resolution was much higher - up to 1280x1024. Attempts to run a game in 32-bit color at 640x480 usually resulted in color artifacts on the screen, and the gaming performance of 3D Rage was not outstanding. The only indisputable advantage of the new card was the ability to play MPEG video files in full-screen mode.
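The resolution limits follow fairly directly from the 2 MB of memory. The sketch below is a back-of-the-envelope estimate rather than a description of how the card actually allocated memory: it assumes double buffering for 3D modes and 8-bit color for the 1280x1024 2D mode (neither is stated in the text), and it ignores the memory needed for textures.

```python
# Back-of-the-envelope frame buffer budget for a 2 MB card.
# Assumptions (not from the article): 3D modes are double-buffered,
# the 1280x1024 2D mode uses 8-bit color, textures are ignored.

VRAM_BYTES = 2 * 1024 * 1024


def frame_bytes(width, height, bits_per_pixel, buffers=1):
    """Bytes needed for the given number of full-screen color buffers."""
    return width * height * (bits_per_pixel // 8) * buffers


modes = {
    "3D 640x480, 16-bit": frame_bytes(640, 480, 16, buffers=2),
    "3D 400x300, 32-bit": frame_bytes(400, 300, 32, buffers=2),
    "3D 640x480, 32-bit": frame_bytes(640, 480, 32, buffers=2),
    "2D 1280x1024, 8-bit": frame_bytes(1280, 1024, 8),
}

for name, size in modes.items():
    verdict = "fits" if size <= VRAM_BYTES else "does not fit"
    print(f"{name}: {size} bytes, {verdict} in 2 MB")
```

Under these assumptions, 640x480 in 32-bit color is the only mode of the four that overflows the 2 MB budget, which is consistent with the artifacts described above.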

ATI fixed the bugs and redesigned the chip, releasing Rage II in September 1996. Having corrected the hardware flaws and added support for the MPEG-2 codec, the engineers for some reason did not think about increasing the memory size - the first models still had a meager 2 MB of video memory on board, which inevitably hurt performance when processing geometry and perspective. The defect was corrected in later revisions of the adapter - for example, in Rage II + DVD and 3D Xpression +, the memory buffer grew to 8 MB.

But the war for the 3D market was just beginning, as three new companies were preparing their products for the latest games - Rendition, VideoLogic and 3Dfx Interactive. It was the last of these that managed to present a graphics chip in the shortest possible time, significantly outstripping all competitors and starting a new era in 3D graphics - 3Dfx Voodoo Graphics.

1996-1999. The era of 3Dfx. The Greatest Graphics Startup Ever. The last stage of great competition for the market

The incredible story of 3Dfx has become a textbook embodiment of a startup, symbolizing both incredible success and dizzying profits, as well as the incompetence of self-confident leadership, and as a result - collapse and oblivion. But the sad ending and bitter experience cannot deny the obvious - 3Dfx single-handedly created a graphics revolution, catching numerous competitors by surprise, and setting a new, phenomenally high performance bar. Neither before nor after this incredible period in the history of the development of video cards have we seen anything even remotely similar to the crazy rise of 3Dfx in the second half of the nineties.

3Dfx Voodoo Graphics was a graphics adapter aimed exclusively at 3D graphics. It was assumed that the buyer would use another board for two-dimensional workloads, connecting it to the Voodoo through a second VGA connector.

This approach did not deter enthusiasts, and the innovative solution immediately attracted many partner manufacturers, who released their own Voodoo variants.

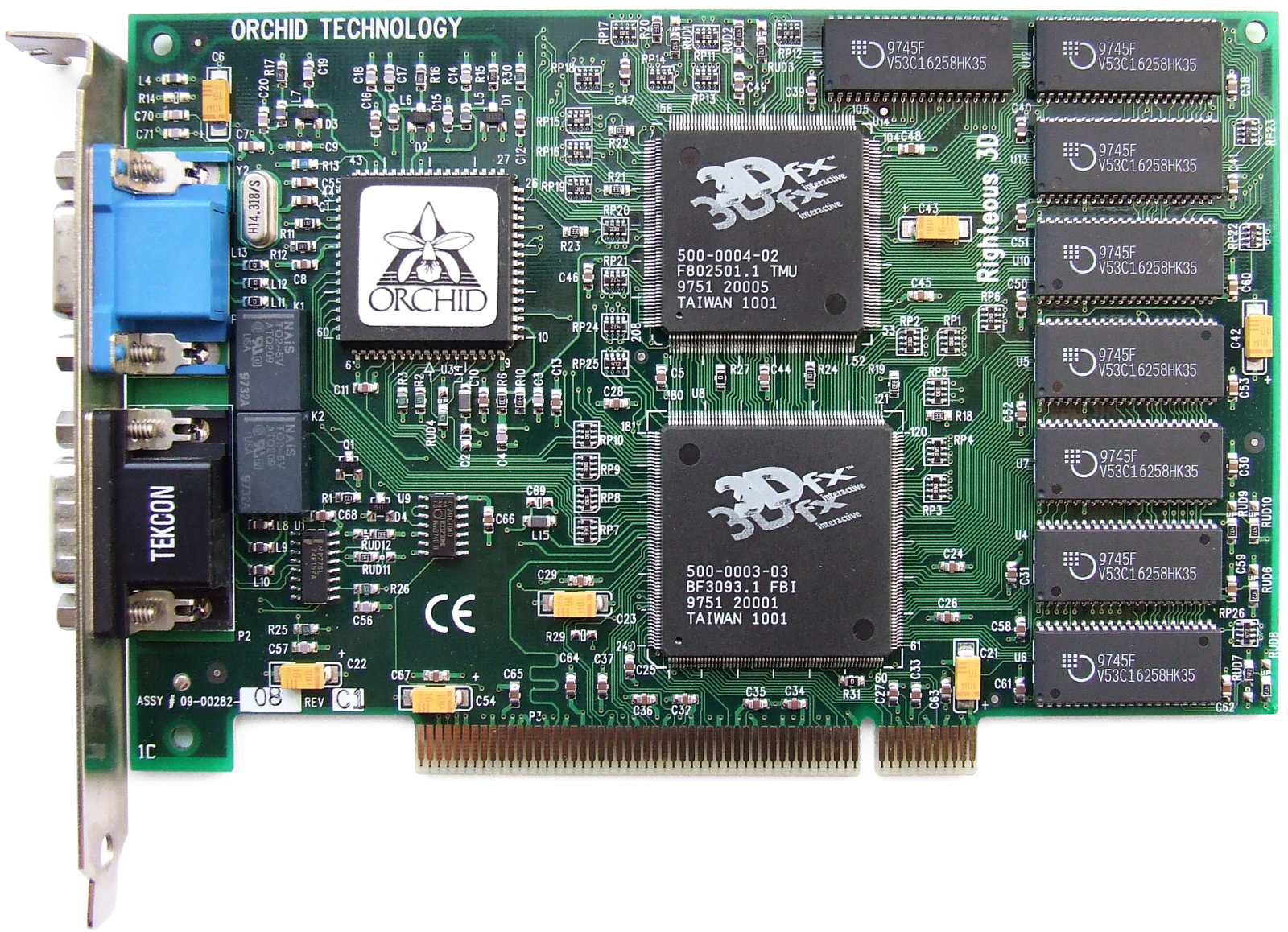

One of the more interesting cards based on the first 3Dfx chip was the Orchid Righteous 3D from Orchid Technologies. The hallmark of the $299 adapter was its mechanical relays, which emitted characteristic clicks when 3D applications or games were launched.

Orchid Righteous 3D

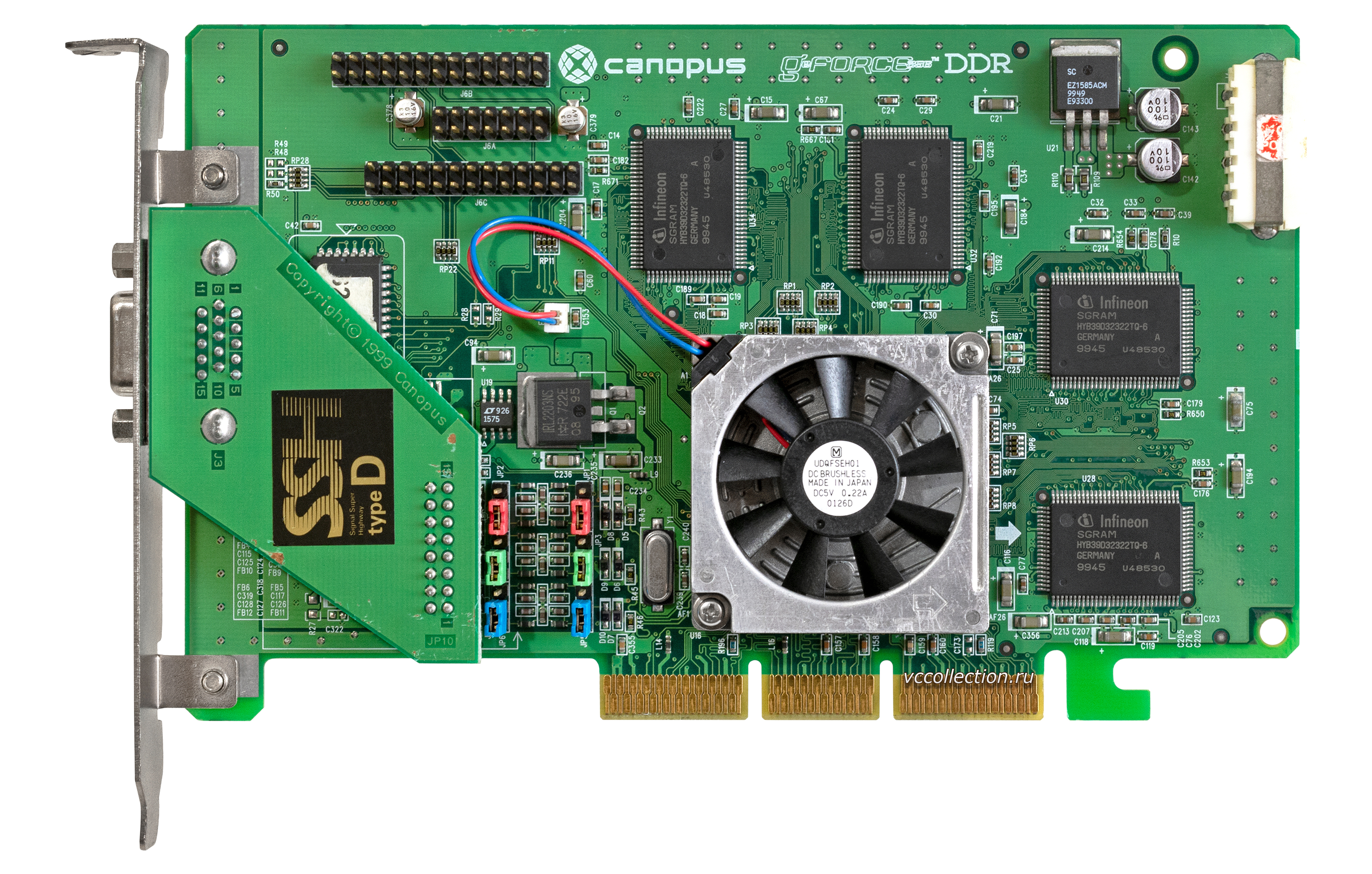

In later revisions, these relays were replaced with solid-state components, and the old charm was lost. Together with Orchid, their own versions of the new accelerator were presented by Diamond Multimedia (Monster 3D), Colormaster (Voodoo Mania), Canopus (Pure3D and Quantum3D), Miro (Hiscore), Skywell (Magic3D), and, with the most pretentious name of them all, 2theMAX Fantasy FX Power 3D. Back then, nobody was shy about naming video cards!

The reasons for the excitement around the new product were obvious - for all its shortcomings, Voodoo Graphics had incredible performance, and its appearance immediately rendered many other models obsolete - especially those that could only work with 2D graphics. Although more than half of the 3D accelerator market belonged to S3 in 1996, 3Dfx was rapidly gaining millions of fans, and by the end of 1997 the company owned 85% of the market. It was a phenomenal success.

3Dfx's competitors. Rendition and VideoLogic

The resounding success of yesterday's newcomer did not knock out of the game the competitors we mentioned earlier - VideoLogic and Rendition. VideoLogic created Tile-Based Deferred Rendering (TBDR) technology, which eliminated the need for a conventional Z-buffer pass over the whole frame. The frame was split into rectangular tiles, and the polygons falling into each tile were processed independently of the others. Polygons outside the visible area of the frame were discarded, hidden pixels were removed before the final stage of rendering, and textures, shading and lighting were applied only to the pixels that remained visible. This approach saved a lot of computing resources at the frame rendering stage, significantly improving overall performance.
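The tiling idea described above can be illustrated with a small sketch. It is not VideoLogic's actual hardware algorithm: it assumes axis-aligned rectangles as stand-ins for polygons, a hypothetical 4-pixel tile size, and a `count_immediate` helper invented here purely to compare shading work against a renderer that shades every covered pixel.

```python
from collections import defaultdict

TILE = 4  # tile edge in pixels (hypothetical value chosen for illustration)

def bin_rects(rects, width, height):
    """Assign each rectangle to every tile it overlaps, clipping to the frame."""
    bins = defaultdict(list)
    for x0, y0, x1, y1, depth, color in rects:
        # Clip to the visible frame; fully off-screen primitives are dropped.
        x0, y0 = max(x0, 0), max(y0, 0)
        x1, y1 = min(x1, width), min(y1, height)
        if x0 >= x1 or y0 >= y1:
            continue
        for ty in range(y0 // TILE, (y1 - 1) // TILE + 1):
            for tx in range(x0 // TILE, (x1 - 1) // TILE + 1):
                bins[(tx, ty)].append((x0, y0, x1, y1, depth, color))
    return bins

def render_deferred(rects, width, height):
    """Return (framebuffer, number of shaded pixels)."""
    frame = [[None] * width for _ in range(height)]
    shaded = 0
    for (tx, ty), prims in bin_rects(rects, width, height).items():
        for y in range(ty * TILE, min((ty + 1) * TILE, height)):
            for x in range(tx * TILE, min((tx + 1) * TILE, width)):
                # Hidden-surface removal first: find the nearest primitive...
                visible = None
                for x0, y0, x1, y1, depth, color in prims:
                    if x0 <= x < x1 and y0 <= y < y1:
                        if visible is None or depth < visible[0]:
                            visible = (depth, color)
                # ...then "shade" only that single visible fragment.
                if visible is not None:
                    frame[y][x] = visible[1]
                    shaded += 1
    return frame, shaded

def count_immediate(rects, width, height):
    """Shading work for a naive renderer that shades every covered fragment."""
    total = 0
    for x0, y0, x1, y1, _, _ in rects:
        w = max(0, min(x1, width) - max(x0, 0))
        h = max(0, min(y1, height) - max(y0, 0))
        total += w * h
    return total
```

With two fully overlapping rectangles, the deferred path shades each pixel once, while the naive count is twice as high; the saving grows with the amount of overdraw in the scene.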

The company brought to market three generations of graphics chips, manufactured by NEC and ST Micro. The first generation was an exclusive product for Compaq Presario computers called Midas 3 (Midas 1 and 2 were prototypes used in arcade machines). The later PCX1 and PCX2 were aimed at the OEM market.

The second-generation chips formed the basis for the Sega Dreamcast, the Japanese platform that played a role in the sad fate of 3Dfx. At the same time, VideoLogic was too late to enter the consumer graphics card market - by the time of its premiere, the Neon 250 was already obsolete and lost to every budget solution, which is not surprising, because it reached the shelves only in 1999.

Rendition also excelled in graphics innovation and created the Vérité 1000, the first graphics chip able to work with both 2D and 3D graphics, thanks to a RISC processor core combined with pixel pipelines. The processor was responsible for polygon processing and for driving the rendering pipelines.

Microsoft was interested in this approach to building and processing images - the company used the Vérité 1000 during the development of DirectX - but the accelerator had its own architectural flaws. For example, it worked only on motherboards with support for direct memory access (DMA), which it used to transfer data over the PCI bus. Thanks to its low price and a host of software advantages, including anti-aliasing and hardware acceleration in Quake from id Software, the card was popular until Voodoo Graphics arrived. The new product from 3Dfx turned out to be more than twice as fast, and DMA quickly lost its popularity among game developers, sending the once promising V1000 to the dustbin of history.

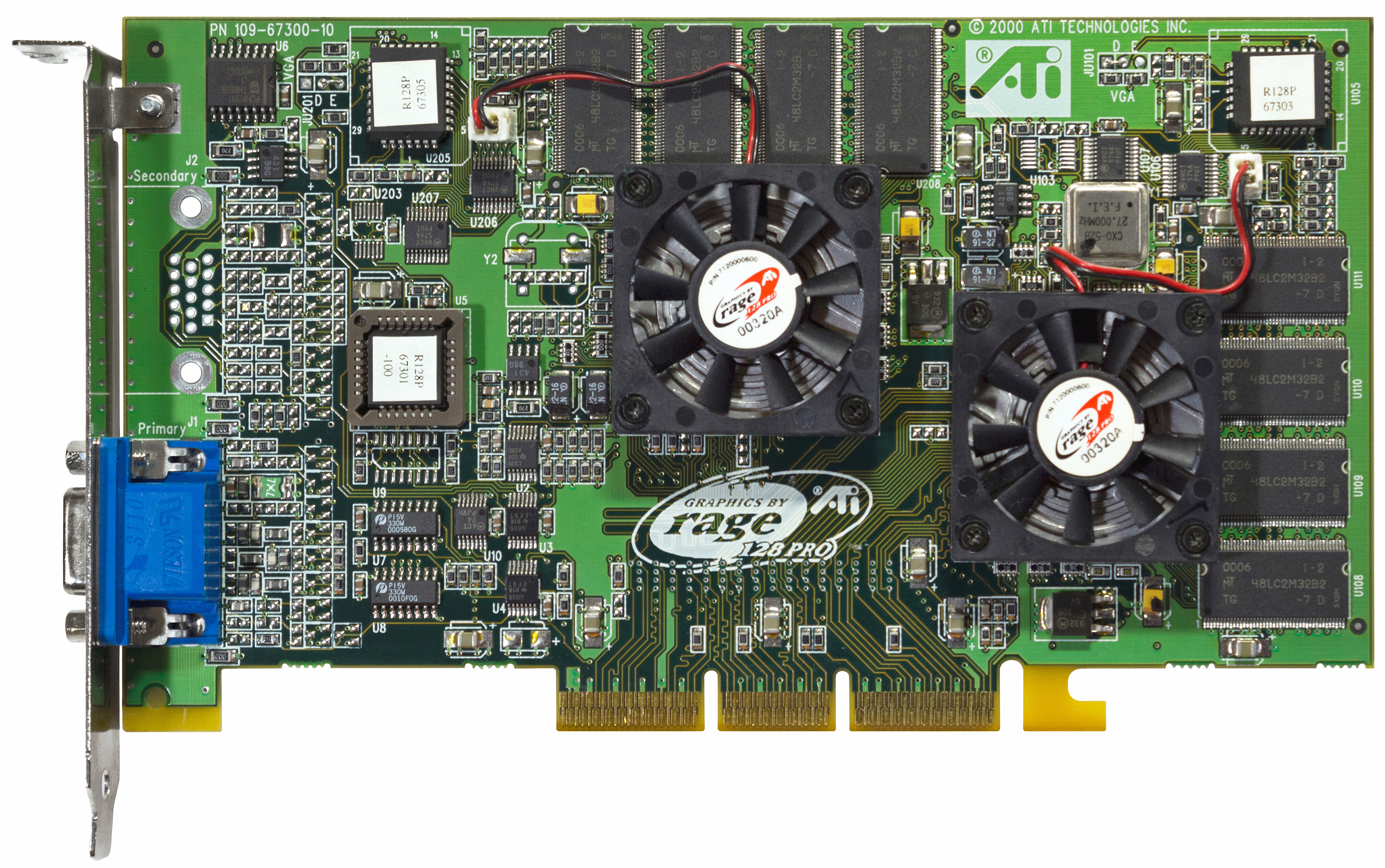

ATI - Race for Voodoo with Rage II and Rage Pro

Meanwhile, ATI did not stop working on new revisions of Rage. Following the Rage II, the Rage Pro was introduced in March 1997 - the first AGP video card from the recently formed ATI 3D Engineering Group.

The Rage Pro with 4 MB of video memory practically equaled the legendary Voodoo in performance, and the AGP version with 8 MB of video memory even surpassed the famous competitor in a number of games. In the Pro version of the card, ATI engineers improved perspective correction and texture processing, and added support for hardware anti-aliasing and trilinear filtering thanks to a cache increased to 4 KB. To reduce the adapter's dependence on the computer's central processor, a separate chip for floating point operations was soldered onto the board. Multimedia fans appreciated the hardware acceleration of DVD video playback.

The launch of the Rage Pro allowed ATI to improve its finances and increase its net income to $47.7 million on a total turnover of more than $600 million. Most of the product's financial success came from OEM contracts, integration of the graphics chip on motherboards, and the release of a mobile variant. The card, often sold in the Xpert@Work and Xpert@Play variants, came in many configurations with 2 to 16 MB of video memory for different needs and market segments.

An important strategic gain for ATI was the $3 million acquisition of Tseng Labs, which worked on technologies for integrating RAMDAC chips on graphics cards. Tseng Labs was developing its own graphics adapter but ran into technical problems, which led to an offer from the Canadian market leader. Along with the intellectual property, 40 top-class engineers joined ATI's staff and immediately began work.

New competitors. Permedia and RIVA 128

The professionals at 3DLabs never gave up hope of capturing the interest of gamers. To this end they released the Permedia series, manufactured on Texas Instruments' 350 nm process. The original Permedia had relatively poor performance, which was fixed in the Permedia NT. The new card had a separate Delta chip for polygon processing and an anti-aliasing algorithm, but it was expensive - as much as $600. By the time the updated Permedia 2 line was ready at the end of 1997, it could no longer compete with gaming products - 3DLabs changed its marketing and presented the new cards as professional products for 2D applications with limited 3D support.

Just a month after the latest 3DLabs and ATI premieres, Nvidia returned to the market with its RIVA 128, a highly competitive and affordable gaming card. An affordable price and excellent Direct3D performance earned the new product universal recognition and commercial success, allowing the company to sign a contract with TSMC to produce chips for the updated RIVA 128ZX. By the end of 1997, the two successful video cards had brought Jensen Huang's company 24% of the market - almost everything that the almighty 3Dfx had not managed to take.

Ironically, the paths of Nvidia and the market leader had already begun to cross when Sega, preparing to develop the new Dreamcast console, signed several preliminary contracts with makers of graphics solutions. Among them were Nvidia (with the NV2 chip design) and 3Dfx (with the Blackbelt prototype). The 3Dfx management was completely confident of winning the contract but, to its surprise, was refused - the Japanese chose not to take the risk and turned to NEC, which had previously proven itself in a collaboration with Nintendo. 3Dfx filed a lawsuit against Sega, accusing the company of intending to misappropriate proprietary developments in the course of work on the prototype - the long litigation ended in 1998 with the payment of $10.5 million in compensation to the American company.

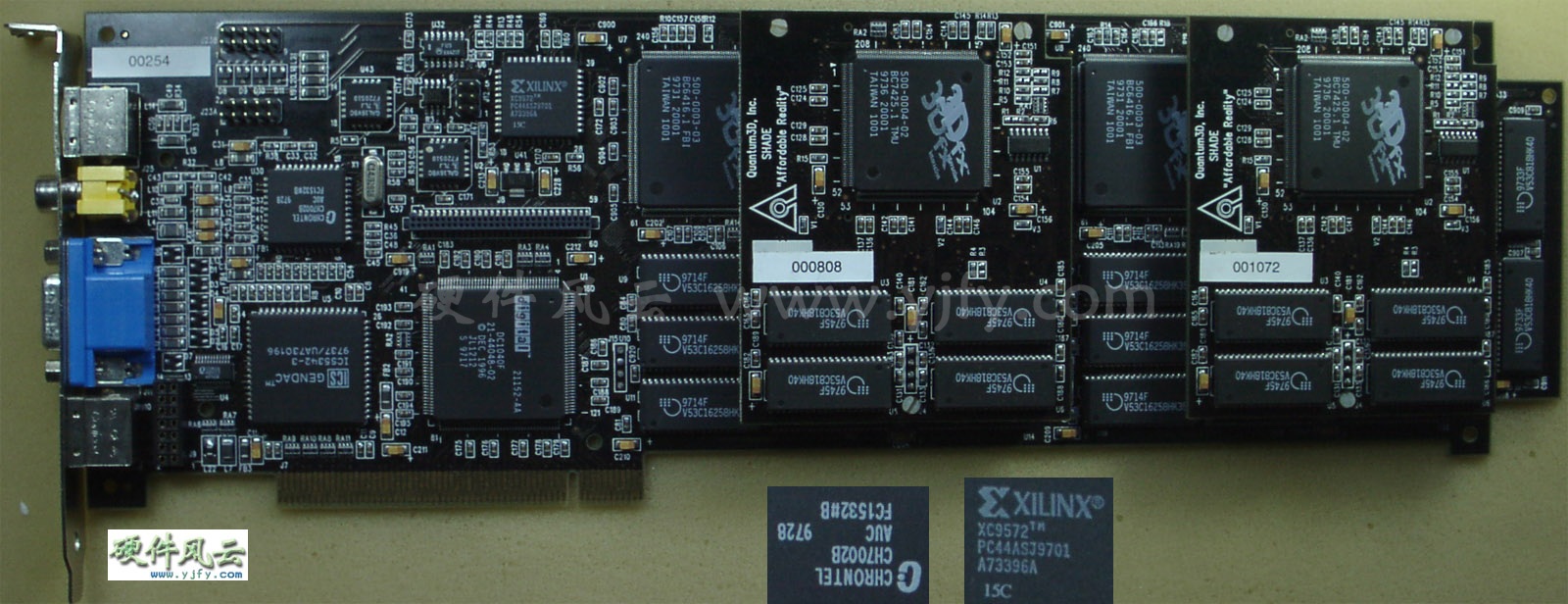

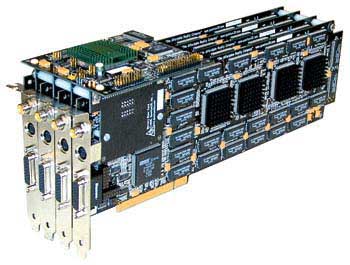

Quantum3D and the first use of SLI

While the litigation continued, 3Dfx acquired a subsidiary. This was Quantum3D, which had won several lucrative contracts from SGI and Gemini Technology to develop top-level professional graphics solutions. Its future products were based on an innovative 3Dfx development - SLI (Scan-Line Interleave) technology.

The technology made it possible to use two graphics chips (each with its own memory controller and buffer) on one board, or to connect two separate boards with a special ribbon cable. Cards connected in this way (as a dual-chip board or as two separate boards) split the image processing in half between them. SLI made it possible to raise the screen resolution from 800x600 to 1024x768 pixels, but this luxury was not cheap: the Obsidian Pro 100DB-4440 (two paired cards with Amethyst chips) was offered at $2,500, while the dual-chip 100SB-4440 / 4440V board cost the buyer $1,895.
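As the name Scan-Line Interleave suggests, the work can be divided by giving each chip alternating lines of the frame. The sketch below is only an illustration of that split, assuming an even/odd line assignment; `shade` is a hypothetical stand-in for whatever per-pixel work a real chip would do.

```python
def shade(x, y):
    """Hypothetical stand-in for a chip's per-pixel rendering work."""
    return (x * 31 + y * 17) % 256

def render_lines(chip, num_chips, width, height):
    """Render only the scan lines assigned to one chip (every num_chips-th line)."""
    return {y: [shade(x, y) for x in range(width)]
            for y in range(chip, height, num_chips)}

def render_sli(width, height, num_chips=2):
    """Interleave the scan lines produced by each chip into one frame."""
    frame = [None] * height
    for chip in range(num_chips):
        for y, line in render_lines(chip, num_chips, width, height).items():
            frame[y] = line
    return frame
```

With two chips, chip 0 produces lines 0, 2, 4 and so on, chip 1 produces lines 1, 3, 5 and so on, and the merged frame is identical to what a single chip would have rendered alone, with each chip doing roughly half of the work.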

Obsidian Pro 100DB-4440

Specialized solutions, however, did not in any way distract the market leaders from developing new cards of the Voodoo series.

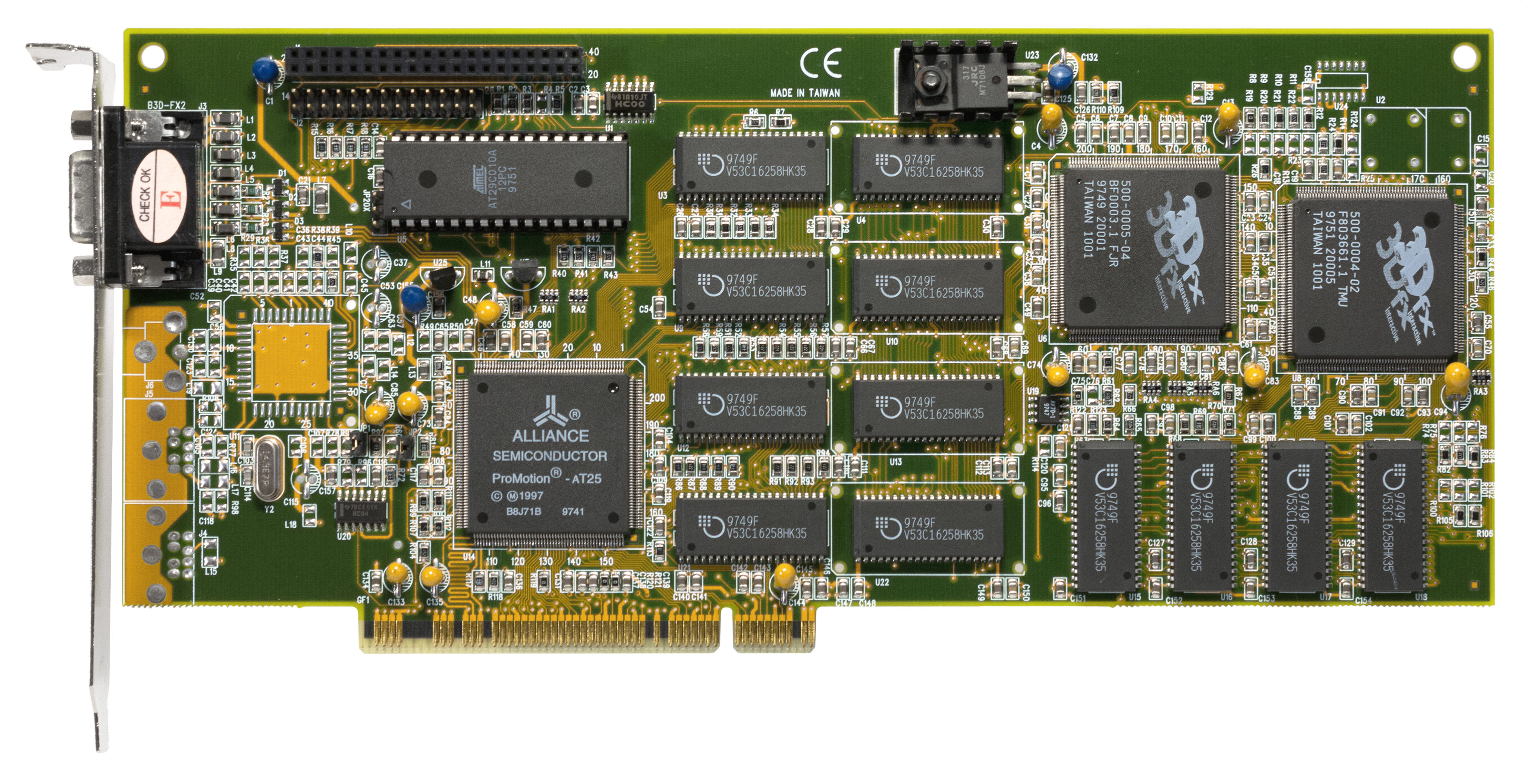

3Dfx Voodoo Rush. Rush, budget and frustration

In the summer of 1997, 3Dfx went public and tried to consolidate the overwhelming success of Voodoo by releasing the Voodoo Rush, a card that handled both 2D and 3D graphics and no longer required a second adapter. Initially it was supposed to be based on the new Rampage chip, but Rampage was delayed by technical problems, and the Rush was built on a stripped-down version of the original Voodoo. To handle both kinds of graphics workloads, the board carried two chips - the SST-1 was responsible for 3D games using the Glide API, while a more mediocre chip from Alliance or Macronix handled other 2D and 3D applications and games. The chips ran at different frequencies (50 and 72 MHz) and were not synchronized, which caused random artifacts on the screen and confusion among owners.

Voodoo Rush

In addition, the Voodoo Rush had a shared video memory buffer, which both chips used simultaneously. The maximum screen resolution suffered as a result - it was only half of 1024x768 in each dimension (512x384 pixels) - and the low RAMDAC frequency could not provide the desired 60 Hz screen refresh rate.

Rendition V2100 and V2200. Leaving the market

Amid 3Dfx's troubles, Rendition made another attempt to pleasantly surprise the mass market with the Rendition V2100 and V2200. The new cards entered the market shortly after the premiere of the Voodoo Rush but, to the disappointment of enthusiasts, could not compete even with the stripped-down Voodoo. The unwanted graphics cards made Rendition the first of many to leave the graphics card market.

As a result, many of the company's projects remained at the prototype stage - one of them was a modified version of the V2100 / V2200 with a Fujitsu FXG-1 geometry processor (in a dual-chip format). There was also a single-chip version with the FXG-1, which went down in history as the product with the most exquisite name ever - Hercules Thriller Conspiracy. Together with its other developments (for example, the V3300 and 4400E chips), the company was sold to Micron for $93 million in September 1998.

Aggressive economy. Battle for the budget segment

Constant productivity growth and the emergence of new technical advantages have made it extremely difficult for second-tier manufacturers to compete with solutions from ATI, Nvidia and 3Dfx, which have occupied not only the niche of enthusiasts and professionals, but also the “popular” segment of the adapter market under $ 200.

Matrox introduced its Mystique graphics card at a tempting price of $120 to $150, but due to the lack of OpenGL support, the new product immediately fell into the underdog category. S3 started selling the new ViRGE line with the widest model range on the market - a mobile version of the adapter with dynamic power management (ViRGE/MX), and a special version of the main ViRGE with TV-out, an S-Video connector and DVD playback (ViRGE/GX2). The main adapters designed to compete for the budget market (ViRGE, ViRGE DX and ViRGE GX) cost from $120 for the junior model to $200 for the senior one at the time of release.

It's hard to imagine, but the ultra-budget category was also a place of fierce competition. The Laguna3D from Cirrus Logic, the 9750/9850 from Trident, and the SiS 6326 competed for the wallets of users willing to pay no more than $99 for a graphics accelerator. All these cards were compromises with a minimum of features.

After the release of the Laguna3D, Cirrus Logic left the market - low-quality 3D graphics, mediocre (and inconsistent) performance, and much more interesting competitors (the same ViRGE, which cost only a little more) left the industry veteran no chance of survival. The only source of income left for Cirrus Logic was the sale of antediluvian 16-bit graphics adapters for $50, of interest only to the most frugal customers.

Trident also saw potential in the "below average" market segment - in May 1997 it released the 3D Image 9750, and a little later the 9850 with support for a dual-channel AGP bus. Although it fixed many of the 9750's PCI-bus problems, the 9850 suffered from mediocre texture processing and received little praise.

Among cards sold for next to nothing, the most successful was the SiS 6326, introduced in June 1997 for under $ 50. With good image quality and relatively high performance, the 6326 sold over 8 million units in 1998. But in the late 90s, the enthusiast world was rocked by a startup full of serious promises - it was BitBoys.

BitBoys - Daring Guys in a Fabulous 3D World

BitBoys made its debut in June 1997 by announcing the intriguing Pyramid3D project. The revolutionary graphics chip was developed by the startup jointly with Silicon VLSI Solutions Oy and TriTech. Unfortunately for enthusiasts, apart from the loud words at its presentations, Pyramid3D never appeared anywhere, and TriTech was found guilty of appropriating someone else's patent for an audio chip, after which it went bankrupt and closed.

But BitBoys did not stop working and announced a second project called Glaze3D. Incredible realism, best-in-class performance and a host of the latest technologies were shown to the public at SIGGRAPH 99. The working prototype of the graphics adapter used RAMBUS and 9 MB of DRAM video memory from Infineon.

Alas, just like the first time, hardware problems and postponements meant that the expected revolution once again failed to arrive.

Promotional screenshot designed to emphasize the realism that Glaze3D cards were supposed to achieve

Much later the project was renamed again, this time to Axe, and games with DirectX 8.1 support were chosen as the field for competition - the prototype even received the official name Avalanche3D and promised to "blow up" the market in 2001, but that did not happen. In the last stage of development, Axe gave way to Hammer, which was promised DirectX 9.0 support. The history of the most pretentious long-running project in the history of graphics ended with the bankruptcy of Infineon, after which BitBoys abandoned the "dream project" and moved to the mobile graphics segment. Now let's go back to cozy 1998.

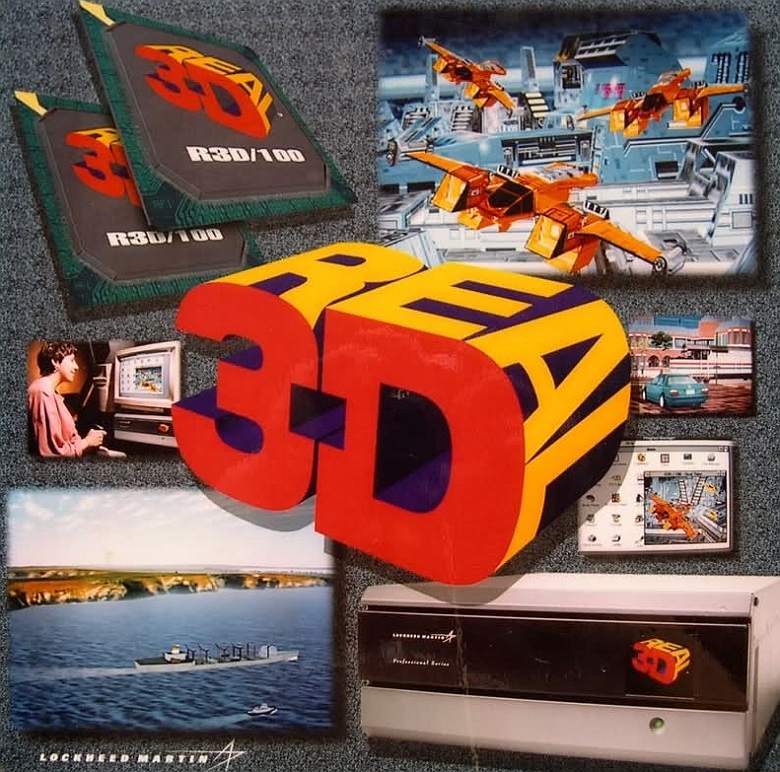

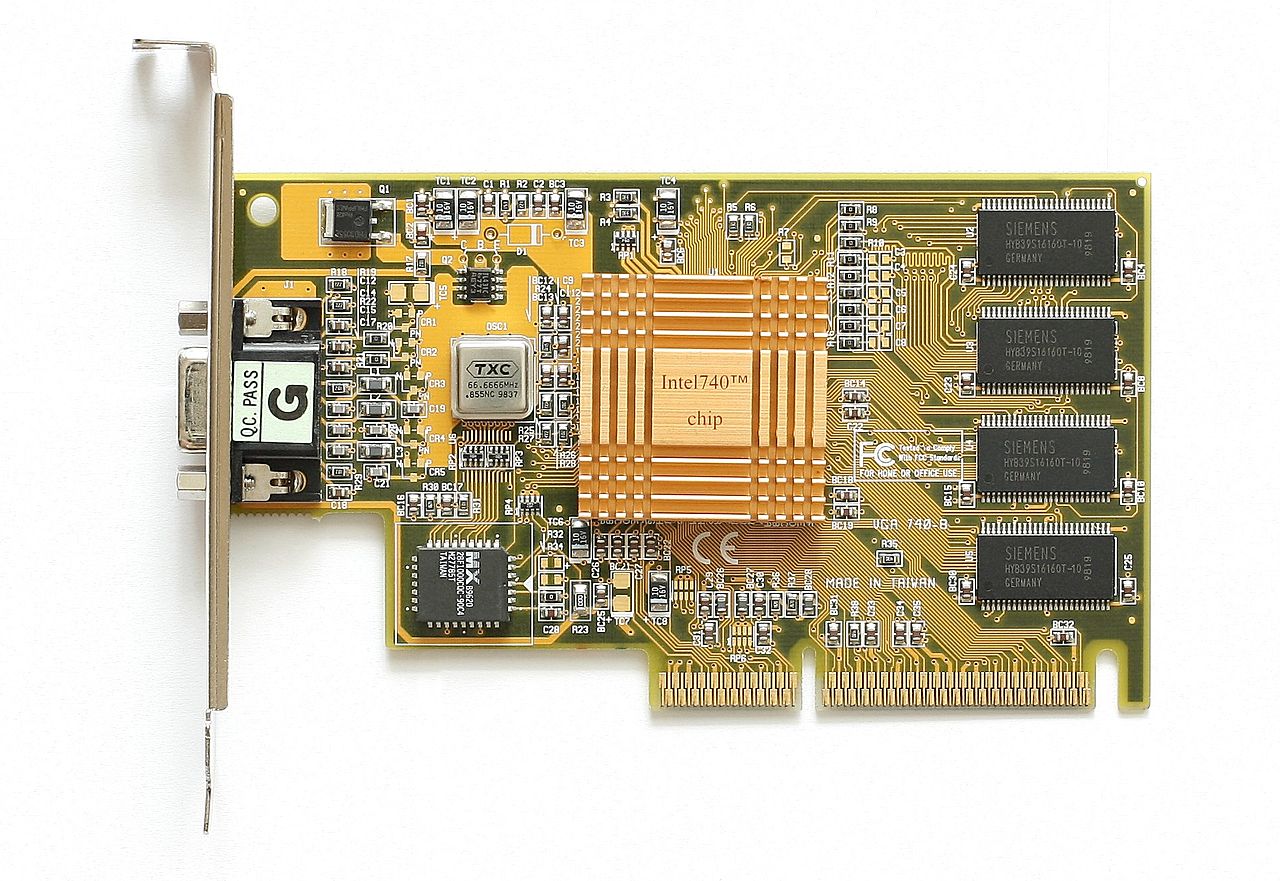

Intel i740 - Big failure of big professionals

Intel released its first (and so far last) graphics card for 3D gaming, the Intel i740, in January 1998. The project had an interesting history: it was rooted in a space flight simulation program that NASA and General Electric created for the famous Apollo series of lunar missions. Later, the developments were sold to Martin Marietta, a company that subsequently merged with the defense giant Lockheed (which is how Lockheed-Martin appeared). On the basis of the project, Lockheed-Martin created the Real3D line of professional devices, consisting of two graphics adapter models - the Real3D/100 and the Real3D/Pro-1000. Ironically, despite the military origin of the devices, one of the applications of this outstanding technology was ... a Sega arcade machine using two Pro-1000 boards.

A little later, Lockheed-Martin announced the start of Project Aurora, a collaboration with Intel and Chips and Technologies. A month before the i740 was released, Intel acquired 20% of the Real3D project, and by July 1997, it had completely acquired Chips and Technologies.

A key feature of the Real3D project was the use of two separate chips for processing textures and graphics - Intel combined them to create the i740. The adapter used an original approach to data buffering - the contents of the frame buffer and textures were loaded directly into the computer's RAM via the AGP bus. In the partner versions of the i740, access to RAM occurred only if the adapter's own buffer was completely filled or was heavily segmented. The same approach is often used today - when the memory on board the video card is exhausted, the system increases the buffer using free RAM.

But at a time when buffers were counted in megabytes, and textures were not yet bloated to obscene sizes, this approach might seem at least strange.

To reduce possible latencies when working with the bus, Intel used one of its features - direct access to the memory buffer (Direct Memory Execute, or DiME). This method was also called AGP texturing, and it allowed the computer's RAM to be used for texture mapping, with textures processed selectively. Average performance coupled with mediocre image quality meant that the i740 only reached the level of the previous year's cards in its price segment.

The model with 4 MB of video memory was priced at $119, and the version with 8 MB cost $149. Apart from Intel's own basic version, only two partner versions saw the light of day - the Real3D StarFighter and the Diamond Stealth II G450.

Counting on success, Intel began developing the next chip, the i752, but neither OEMs nor enthusiasts showed interest in a budget adapter that lagged seriously behind current solutions. This is not surprising, because at the same time the ViRGE from S3 was on sale, and buying it would have pleased the user much more. Production of the adapter was phased out, and the i752 chips that did reach the market were repurposed as integrated graphics solutions.

In October 1999, Lockheed-Martin shut down Real3D, and its valuable staff moved to Intel and ATI.

ATI - Back in the Game with Rage Pro and Rage 128

ATI reminded the market of itself with the Rage Pro Turbo in February 1998, but this card turned out to be just a rebrand of the Rage Pro with driver tweaks for outstanding results in synthetic tests. Reviewers at some publications were pleasantly surprised, but the price of $449 was very difficult to justify. Nevertheless, it was with the Rage Pro Turbo that the FineWine phenomenon first appeared - the drivers gradually improved the adapter's performance with each new revision.

ATI made a much more serious attempt to shift the balance of power with the release of the Rage 128 GL and Rage 128 VR in August of the same year. Due to supply problems, ATI was unable to get the cards into stores until almost the winter holidays, which badly hurt the promotion of the new products. Unlike OEM contracts, which consistently brought the Canadians serious profits, the retail launch suffered: ordinary enthusiasts could not appreciate the new product because there were so few adapters on the market. But there was another reason why sales of the Rage 128 were lower than expected.

ATI prepared the Rage 128 for a bright graphics future by equipping it with 32 MB of video memory (variants with 16 MB were produced under the All-In-Wonder 128 brand) running on a fast and efficient memory subsystem - in 32-bit color, its direct competitor, the Nvidia Riva TNT, did not stand a chance. Alas, at the time of release most games and applications still ran in 16-bit mode, where the Rage 128 did not stand out in any way against Nvidia and S3 (and in image quality it was even inferior to models from Matrox). The general public did not appreciate ATI's approach, but this was the first time the Canadians were ahead of their time - their approach was only appreciated much later.

1998 was a record year for ATI - with a turnover of $ 1.15 billion, the company's net profit amounted to a good $ 168.4 million. The Canadian company ended the year with 27% of the graphics accelerator market.

In October 1998, ATI acquired Chromatic Research for $67 million. The company, famous for its MPACT media processors, supplied PC TV solutions to Compaq and Gateway, offering excellent MPEG2 performance, high audio quality and excellent 2D performance. Ironically, it was MPACT's only drawback, poor 3D performance, that pushed Chromatic Research to the brink of bankruptcy just 4 years after its founding. The time of universal solutions was getting closer and closer.

3Dfx Voodoo 2 and Voodoo Banshee - The Market Leader's Successful Failure

While Intel was trying to enter the graphics market, its leader, 3Dfx, introduced the Voodoo 2, which became a technological breakthrough in several areas at once. The new graphics adapter had a unique design - in addition to the central chip, the board carried two paired texture processors. This made the card a three-chip design and, for the first time, enabled multi-texturing in OpenGL, where two textures are applied to a pixel at the same time, speeding up the overall rendering of the scene. Like the original Voodoo, the card worked exclusively in 3D mode, but unlike competitors that combined 2D and 3D processing in a single chip, 3Dfx made no compromises, pursuing its main goal of defending its position as the market leader.
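To see why a second texture unit speeds things up, consider a minimal sketch that combines a base texture with a second texture (for example, a lightmap). It assumes simple multiplicative blending and nearest-neighbour sampling, which are illustrative choices rather than a description of the Voodoo 2 hardware; all function names here are hypothetical.

```python
def sample(texture, u, v):
    """Nearest-neighbour lookup in a texture stored as rows of 0.0-1.0 values."""
    h, w = len(texture), len(texture[0])
    return texture[min(int(v * h), h - 1)][min(int(u * w), w - 1)]

def single_pass(base, lightmap, size):
    """Two texture units: both textures are combined before one framebuffer write."""
    frame, writes = [[0.0] * size for _ in range(size)], 0
    for y in range(size):
        for x in range(size):
            u, v = (x + 0.5) / size, (y + 0.5) / size
            frame[y][x] = sample(base, u, v) * sample(lightmap, u, v)
            writes += 1
    return frame, writes

def two_pass(base, lightmap, size):
    """One texture unit: draw the base texture, then blend the second on top."""
    frame, writes = [[0.0] * size for _ in range(size)], 0
    for texture, first in ((base, True), (lightmap, False)):
        for y in range(size):
            for x in range(size):
                u, v = (x + 0.5) / size, (y + 0.5) / size
                texel = sample(texture, u, v)
                frame[y][x] = texel if first else frame[y][x] * texel
                writes += 1
    return frame, writes
```

Both functions produce the same image, but the single-pass version touches the framebuffer once per pixel, while the two-pass version has to draw the whole surface twice.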

Professional versions of the new adapter were not long in coming - Quantum3D, the 3Dfx subsidiary, released three noteworthy accelerators based on it: the two-chip Obsidian2 X-24, which could be used in tandem with a 2D board; the SB200 / 200Sbi with 24 MB of EDO memory on board; and the Mercury Heavy Metal - an exotic sandwich in which paired 200Sbi boards were linked by an Aalchemy connector board, which later became the prototype of the famous Nvidia SLI bridge.

Mercury Heavy Metal

This last variant was intended for complex graphical simulations and was available at the astronomical price of $9,999. The MHM monster required an Intel BX or GX series server motherboard with four PCI slots to function properly.

Realizing the importance of controlling the budget market, in June 1998 3Dfx presented the Voodoo Banshee - its first graphics accelerator with support for both 2D and 3D modes of operation. Contrary to expectations and hopes, the legendary Rampage chip was still not ready, and the card had only one texture processing unit on board, which practically destroyed its performance in multi-texture rendering - the Voodoo 2 was many times faster. And even though economical production and a recognizable brand ensured good sales for the Banshee, the disappointment of the fans did not go away - much more was expected from the creators of "that very Voodoo", which had turned the graphics market upside down overnight.

In addition, despite the leadership of the Voodoo 2, the gap to the competition was rapidly shrinking. Having forced serious competition on ATI and Nvidia, 3Dfx faced more and more advanced solutions, and only thanks to effective management (3Dfx produced and sold the cards itself, without the participation of partners) did the company earn a significant profit from sales. The 3Dfx management saw great opportunities in owning its own production facilities, so the company bought STB Technologies for $131 million, expecting to drastically reduce production costs and to compete with the suppliers of Nvidia (TSMC) and ATI (UMC). This deal proved fatal for 3Dfx - STB's Mexican factories lagged hopelessly behind their Asian competitors in product quality and could not compete in the process technology race.

After the acquisition of STB Technologies became known, most 3Dfx partners refused to support the dubious decision and switched to Nvidia products.

Nvidia TNT - A Bid for Technical Leadership

What drew them was the Riva TNT, introduced by Nvidia on March 23, 1998. By adding a parallel pixel pipeline to the Riva architecture, the engineers doubled the rendering speed, which, together with 16 MB of SDR memory, significantly increased performance. The Voodoo 2 used much slower EDO memory, and this gave Nvidia's new product a significant advantage. Alas, the technical complexity of the chip took its toll - due to hardware errors in production, the 350 nm TSMC chip did not run at the 125 MHz its engineers had intended. The frequency often dropped to 90 MHz, which is why the Voodoo 2 was able to retain the title of formal performance leader.

But even with all its flaws, the TNT was an impressive new product. Thanks to the use of a dual-channel AGP bus, the card supported gaming resolutions up to 1600x1200 with 32-bit color (and a 24-bit Z-buffer for depth). Against the background of the 16-bit Voodoo 2, the new product from Nvidia looked like a real revolution in graphics. Although the TNT was not the leader in benchmarks, it remained dangerously close to the Voodoo 2 and Voodoo Banshee, offering more new technologies, better scaling with CPU frequency, and higher-quality 2D and texture processing thanks to the progressive AGP bus. The only drawback, as with the Rage 128, was delivery delays - the adapters appeared on sale in large numbers only in the fall, in September 1998.

Another stain on Nvidia's reputation in 1998 was a lawsuit by SGI, which claimed that Nvidia had infringed its patent rights to texture-mapping technology. The litigation lasted a whole year and ended in a new agreement under which Nvidia got access to SGI technologies, and SGI got the opportunity to use Nvidia's developments. At the same time, SGI's own graphics division was disbanded, which only benefited the future graphics leader.

Number Nine - Side Step

Meanwhile, on June 16, 1998, OEM graphics maker Number Nine decided to try its luck in the graphics card market by releasing the Revolution IV card under its own brand.

Lagging far behind flagship solutions from ATI and Nvidia, Number Nine has focused on the business sector, offering large companies what classic cards for games and 3D graphics were weak in - support for high resolutions in 32-bit color.

To attract interest from large companies, Number Nine integrated a proprietary 36-pin OpenLDI connector into its Revolution IV-FP and sold the board bundled with a 17.3-inch SGI 1600SW monitor (supporting 1600x1024 resolution). The set cost $2,795.

The offer was not very successful, and Number Nine returned to building cards based on S3 and Nvidia chips until it was bought by S3 in December 1999 and then sold to the company's former engineers, who formed Silicon Spectrum in 2002.

S3 Savage3D - A budget alternative to Voodoo and TNT for $ 100

The budget champion, the S3 Savage, debuted at E3 1998 and, unlike the long-suffering leaders of the segment above (Voodoo Banshee and Nvidia TNT), hit store shelves a month after the announcement. Unfortunately, the rush did not go unnoticed - the drivers were "raw", and OpenGL support was implemented only for Quake, because S3 could not afford to ignore one of the most popular games of the year.

The frequencies of the S3 Savage were not trouble-free either. Due to manufacturing flaws and high power consumption, the reference adapters ran very hot and did not reach the intended frequency of 125 MHz - the frequency usually floated between 90 and 110 MHz. At the same time, reviewers from leading publications received engineering samples that ran properly at 125 MHz, which produced beautiful numbers in all kinds of benchmarks, along with praise from the specialized press. Later, the early problems were solved in partner models - the Savage3D Supercharged ran stably at 120 MHz, while the Terminator BEAST (Hercules) and Nitro 3200 (STB) conquered the coveted 125 MHz bar.

Despite the raw drivers and mediocre performance compared with the leaders of the Big Three, the affordable price of under $100 and the ability to play high-quality video gave the S3 good sales.

1997 and 1998 were another period of acquisitions and bankruptcies - many companies could not withstand the competition in the race for performance and were forced to leave the industry. Cirrus Logic, Macronix, and Alliance Semiconductor were left behind, while Dynamic Pictures was sold to 3DLabs, Tseng Labs and Chromatic Research were bought out by ATI, Rendition went to Micron, AccelGraphics was acquired by Evans & Sutherland, and Chips and Technologies became part of Intel.

Trident, S3 and SiS - The Final Battle of the Millennium for Budget Graphics

The OEM market has always been the last resort for manufacturers hopelessly lagging behind the competition in 3D graphics and sheer performance. This was also the case for SiS, which released the budget SiS 300 for the needs of the business sector. Despite deplorable 3D performance (limited by a single pixel pipeline) and a hopeless 2D gap behind all competitors in the mainstream market, the SiS 300 won over OEMs with certain advantages - a 128-bit memory bus (64-bit in the case of the simplified SiS 305), support for 32-bit color, DirectX 6.0 (and even 7.0 in the case of the 305), support for multi-texture rendering and hardware MPEG2 decoding. The card also had a TV-out.

In December 2000, the modernized SiS 315 saw the light of day, adding a second pixel pipeline and a 256-bit bus, as well as full support for DirectX 8.0 and full-screen anti-aliasing. The card received a new lighting and texture processing engine, latency compensation for DVD video playback, and support for the DVI connector. Its performance was in the region of the GeForce 2 MX 200, but this did not bother the company at all.

SiS 315 also entered the OEM market, but already as part of the SiS 650 chipset for motherboards on socket 478 (Pentium IV) in September 2001, and also as part of the SiS552 SoC system in 2003.

But SiS was far from the only manufacturer offering interesting solutions in the budget segment. Trident also fought for the attention of buyers with the Blade 3D, whose overall performance was on par with the failed Intel i740, but whose $75 price more than offset many of the shortcomings. Later, the Blade 3D Turbo entered the market, with frequencies increased from 110 to 135 MHz and overall performance at the level of the i752. Unfortunately, Trident took too long to develop its solutions for a market where new products appeared every couple of months, and in April 2000 this dealt the company its first blow - VIA, for which Trident was developing integrated graphics, acquired S3 and ended its cooperation with the former partner.

However, Trident used its business model to the fullest, combining bulk shipments with low manufacturing costs for its budget solutions. The mobile sector remained relatively vacant, and Trident developed several Blade3D Turbo models specifically for it - the T16 and T64 (operating at 143 MHz) and the XP (operating at 165 MHz). But the OEM market that attracted so many companies no longer favored the simplicity of Trident - the SiS 315, released a little later, checkmated the entire Trident product line. Unable to quickly develop a viable alternative, Trident decided to sell its graphics division to XGI, a SiS subsidiary, in 2003.

The S3 Savage4 stood apart from other solutions in the budget sector. Announced in February and on sale from May 1999, the new product offered 16 and 32 MB of onboard memory, a 64-bit four-channel AGP bus and its own texture compression technology, thanks to which the adapter could process blocks with a resolution of up to 2048x2048 without much difficulty (although this had already been implemented earlier in the Savage3D). The card was also capable of multi-texture rendering, but even debugged drivers and impressive technical characteristics could not hide the fact that the previous year's offerings from 3Dfx, Nvidia and ATI were significantly faster. This state of affairs was repeated a year later, when the Savage 2000 entered the market. At low resolutions (1024x768 and below), the new product could compete with the Nvidia TNT and Matrox G400, but at higher resolutions the balance of power changed radically.

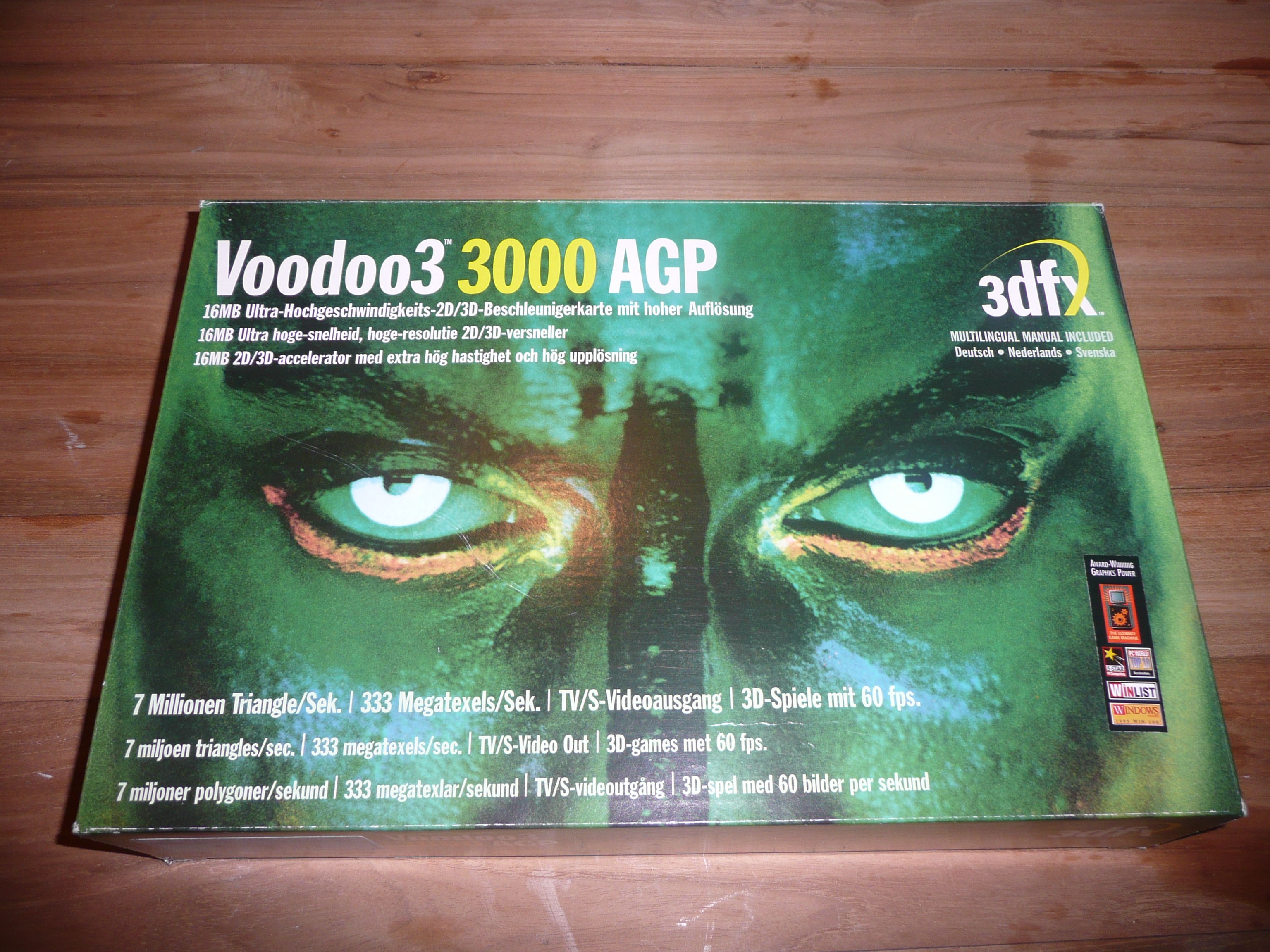

3Dfx Voodoo3 - Pomp, noise and empty excuses

3Dfx could not allow its new product to get lost among more advanced competitors, so the premiere of the Voodoo3 in March 1999 was accompanied by an extensive advertising campaign - the card was loudly promoted in the press and on television, and the bold box design caught the eye of potential buyers who dreamed of a new graphics revolution.

Alas, the much-discussed Rampage chipset was still not ready, and the new card was built on the Avenger chip, architecturally a close relative of the Voodoo2. Archaic limitations such as 16-bit-only color and a maximum texture resolution of just 256x256 did not please fans of the brand, nor did the complete lack of hardware lighting and geometry processing. Many manufacturers had already made high-resolution textures, high-polygon rendering and 32-bit color fashionable, so for the first time 3Dfx found itself among the laggards in every respect.

The company had to explain a $16 million loss to investors by pointing to the recent earthquake in Taiwan, which, however, had little impact on the financial success of ATI and Nvidia. Recognizing the obvious benefits of the free distribution of the DirectX and OpenGL libraries, the company had announced back in December 1998 that its Glide API would be made available as open source. By that time, though, there were hardly many takers.

Riva TNT2 and G400 - Competitors Ahead

Simultaneously with the bombastic premiere of the Voodoo3 from 3Dfx, Nvidia modestly presented its Riva TNT2 (including the first Ultra-series cards in its history, which gave gamers higher core and memory clocks), and Matrox presented the equally impressive G400.

The Riva TNT2 was manufactured on a 250 nm process at TSMC factories and gave Nvidia an unprecedented level of performance. The new card beat the Voodoo3 almost everywhere - the only exceptions were a few games that used AMD 3DNow! combined with OpenGL. Nvidia also kept up with technology - the TNT2 series featured a DVI connector to support the latest flat panel monitors.

The real surprise for everyone was the Matrox G400, which turned out to be even faster than the TNT2 and Voodoo3, lagging behind only in games and applications using OpenGL. At an affordable $229, the new product from Matrox offered excellent image quality, enviable performance and the ability to connect two monitors via a paired DualHead connector. The second monitor was limited to a resolution of 1280x1024, but many people liked the idea.

The G400 also featured Environment Mapped Bump Mapping (EMBM) to improve overall texture rendering. For those who always preferred to buy the best, there was the G400 MAX, which held the title of the fastest card on the market until the release of the GeForce 256 with DDR memory in early 2000.

Matrox Parhelia and 3DLabs Permedia

Its great success in the gaming accelerator market did not inspire Matrox, which returned to the professional market. Only once, with the Parhelia, was it tempted to repeat the success of the G400, but by 2002 competitors were already busy mastering DirectX 9.0, and support for three monitors at once paled against the card's dismal gaming performance.

When the audience had already digested the loud releases from the three companies, 3DLabs presented its long-prepared Permedia 3 Create!. The main feature of the new card was its niche positioning - 3DLabs hoped to attract professionals who liked to while away their free time in games. The company focused on high 2D performance and brought in specialists from Dynamic Pictures, acquired in 1998, the authors of the professional Oxygen line of adapters.

Unfortunately for 3DLabs, professional cards prioritized complex polygon modeling, and high performance in that area often came at the cost of a serious drop in texture processing speed. Gaming adapters with a 3D focus worked in exactly the opposite way - instead of complex calculations, they put rendering speed and high-quality texture processing at the forefront.

Far behind the Voodoo3 and TNT2 in games, and not far enough ahead of the competition in professional applications, the Permedia was 3DLabs' last attempt to bring a product to the gaming adapter market. From then on, the famous graphics engineers continued to expand and support their specialized lines - the GLINT R3 and R4 on the Oxygen architecture, where the range of models ran from the budget VX-1 for $299 to the premium GVX 420 for $1499.

The company's repertoire also included the Wildcat line of adapters based on the Intense3D technology purchased from Intergraph in July 2000. In 2002, while 3DLabs was busy completing advanced graphics chips for the new Wildcat adapters, the company was acquired by Creative Technology, which had its own plans for the P9 and P10 lines.

In 2006, the company left the desktop market to focus on solutions for the media market, and later became part of Creative's SoC business under the name ZiiLabs. The 3DLabs story finally ended in 2012, when the company was bought by Intel.

ATI Rage MAXX - Dual-Chip Madness of a Laggard

After the release of the successful Rage 128, ATI ran into a number of difficulties with further development of the line. At the end of 1998, engineers successfully implemented support for the AGP 4x bus in the updated Rage 128 Pro adapter and added a TV-out to its connectors. Overall, the graphics chip performed roughly on the level of the TNT2 in games, but after the release of the TNT2 Ultra the lead again passed to Nvidia. The Canadians did not want to put up with that, and work on Project Aurora began.

When it became clear that the race for performance was lost, the engineers resorted to a trick that would become a hallmark of many future generations of red cards - they released the Rage Fury MAXX, a dual-chip graphics card carrying two Rage 128 Pro chips.

Rage Fury MAXX

Impressive specifications and two chips working in parallel made the card with the odious name a rather fast solution and brought ATI to the front, but it could not hold the lead for long. Even the S3 Savage 2000 fought for the title of the best, and the GeForce 256 with DDR memory, presented later, left ATI's flagship no chance - despite its imposing numbers and progressive technologies. Nvidia liked to be first, and the young Jen-Hsun Huang was in no hurry to give way to the market leader.

GeForce 256 is the first true "video card". The birth of the term GPU

Less than two months had passed since ATI enjoyed a Pyrrhic victory in benchmarks with the announcement of the Rage Fury MAXX when Nvidia presented the answer that secured its status as market leader - the GeForce 256. The new card was the first in history to ship with different types of video memory: a version with SDR chips was released on October 1, 1999, and sales of an updated version with DDR memory began on February 1, 2000.

GeForce 256 DDR

The graphics chip, with its 23 million transistors, was manufactured at TSMC factories on a 220 nm process, but more importantly, it was the GeForce 256 that became the first graphics adapter to be called a "video card". Have you noticed how carefully we avoided this term throughout the story? That was the reason for all the awkward substitutes. The term GPU (Graphics Processing Unit) comes from the integration of the previously separate transform and lighting pipelines into the chip itself.

The architecture's broad capabilities allowed the card's graphics chip to perform heavy floating-point calculations, transforming complex arrays of 3D objects and composite scenes into a beautiful 2D image for the impressed gamer. Previously, all such calculations had been carried out by the computer's central processor, which seriously limited the level of detail in games.
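To give a feel for the kind of arithmetic being moved off the CPU, here is a minimal sketch of the two steps behind the "T&L" name: transforming a 3D point into 2D screen coordinates and computing a simple diffuse lighting term. It is an illustration only, not a description of how the GeForce 256 hardware was implemented. The function names, the OpenGL-style projection matrix and the Lambert lighting model are all assumptions chosen for the example.

```python
import math

def mat_vec(m, v):
    """Multiply a 4x4 matrix (list of rows) by a 4-component vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def perspective(fov_deg, aspect, near, far):
    """Build an OpenGL-style perspective projection matrix (assumed convention)."""
    f = 1.0 / math.tan(math.radians(fov_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]

def transform_vertex(pos, proj, width, height):
    """Transform step: camera-space point -> clip space -> pixel coordinates."""
    x, y, z, w = mat_vec(proj, [pos[0], pos[1], pos[2], 1.0])
    ndc_x, ndc_y = x / w, y / w          # perspective divide
    px = (ndc_x + 1.0) * 0.5 * width
    py = (1.0 - ndc_y) * 0.5 * height    # flip Y: screen origin is top-left
    return px, py

def diffuse_light(normal, light_dir):
    """Lighting step: Lambert diffuse term for two unit vectors, clamped at 0."""
    dot = sum(n * l for n, l in zip(normal, light_dir))
    return max(0.0, dot)

# Hypothetical scene: a 640x480 screen and a camera looking down the -Z axis.
proj = perspective(90.0, 4.0 / 3.0, 0.1, 100.0)
screen_xy = transform_vertex((0.0, 0.0, -5.0), proj, 640, 480)
brightness = diffuse_light((0.0, 0.0, 1.0), (0.0, 0.0, 1.0))
```

In this sketch a point directly in front of the camera lands in the center of the screen, (320.0, 240.0), and a surface facing the light head-on gets full brightness, 1.0. A game repeats these calculations for every vertex of every frame, which is why handing them to a dedicated chip mattered.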

The GeForce 256's status as a pioneer in the use of software shaders with support for transformation and lighting (T&L) technology has often been questioned. Other graphics accelerator manufacturers had already implemented T&L support, whether in prototypes (Rendition V4400, BitBoys Pyramid3D and 3Dfx Rampage), as raw but workable algorithms (3DLabs GLINT, Matrox G400), or as a function handled by an additional chip on the board (the Fujitsu geometry processor aboard the Hercules Thriller Conspiracy).

However, none of the above examples brought the technology to the commercial stage. Nvidia was the first to make transformation and lighting an architectural advantage of the chip, which gave the GeForce 256 its competitive edge and opened up the previously skeptical professional market to the company.

And the professionals' interest was rewarded - just a month after the release of the gaming cards, Nvidia presented the first video cards of the Quadro line - the SGI VPro V3 and VR3 models. As the name suggests, the cards were developed using proprietary technologies from SGI, with which Nvidia had signed an agreement in the summer of 1999. Ironically, a little later SGI tried to sue Nvidia over its technology, but failed, being on the verge of bankruptcy itself.

Nvidia ended the last fiscal year of the outgoing millennium on a high note - a profit of $41.3 million on total revenue of $374.5 million delighted investors. Compared to 1998, profit grew by an order of magnitude, and revenue more than doubled. The icing on the cake for Jensen Huang was a $200 million contract with Microsoft, under which Nvidia developed the NV2A chip (the graphics core for the future Xbox console), increasing the company's total assets to $400 million when it went public in April 2000.

Of course, next to ATI's $1.2 billion in revenue and $156 million in net profit, the figures of the growing competitor seemed modest, but the Canadian video card manufacturer could not rest on its laurels, because its generous OEM contracts were under threat from the release of Intel's progressive integrated graphics - the 815 chipset.

... And ahead was the fall of the great. And the beginning of a new era in the race for performance.

The author of the text is Alexander Lis.

To be continued ...
