In short, calls to timeBeginPeriod from one process now affect other processes less than before, although the effect is still present.

I think the new behavior is essentially an improvement, but it is weird and deserves to be documented. Fair warning: all I have are the results of my own experiments, so I can only guess at the goals and some of the side effects of this change. If any of my findings are incorrect, please let me know.

Timer interrupts and their reason for being

First, a little context about operating system design. It is desirable for a program to be able to go to sleep and wake up later. This should not actually be done very often (threads usually wait on events, not timers), but sometimes it is necessary. So Windows has a Sleep function: pass it the desired sleep duration in milliseconds and it will wake the thread up again afterwards:

Sleep(1);

It is worth considering how this is implemented. Ideally, when Sleep(1) is called, the processor goes to sleep. But how does the operating system wake the thread if the processor is asleep? The answer is hardware interrupts. The OS programs a chip, a hardware timer, which then triggers an interrupt that wakes the processor, and the OS then runs your thread.

The WaitForSingleObject and WaitForMultipleObjects functions also have timeout values, and these timeouts are implemented using the same mechanism.

If many threads are waiting on timers, the OS could program the hardware timer with an individual wake-up time for each thread, but then threads tend to wake up at scattered times and the processor never gets a proper sleep. The power efficiency of a CPU depends heavily on how long it can stay asleep (8 ms or so is a good figure), and scattered wake-ups work against that. If multiple threads synchronize or coalesce their timer waits, the system becomes more energy efficient.

There are many ways to coalesce wake-ups, but the main mechanism in Windows is a global timer interrupt that ticks at a steady rate. When a thread calls Sleep(n), the OS schedules the thread to run immediately after the first timer interrupt that fires once the time has elapsed. This means the thread may wake up a little late, but Windows is not a real-time OS and does not guarantee a specific wake-up time anyway (all the processor cores may be busy at that moment), so waking up a little late is perfectly normal.

The interval between timer interrupts depends on the Windows version and the hardware, but on all my machines it defaulted to 15.625 ms (1000 ms / 64). This means that if you call Sleep(1) at some random time, the thread will be woken somewhere between 1.0 ms and 16.625 ms in the future, when the next global timer interrupt fires (or the one after that if the next one comes too early).
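To make that range concrete, here is a minimal sketch of the arithmetic. It is a simplified model, not how Windows is implemented: it assumes ticks fire at exact multiples of the interval, and the names wake_time_us and observed_delay_us are made up for this illustration. Times are in microseconds so that 15.625 ms is an exact integer.

```cpp
#include <cstdint>

// Default tick interval from the text: 1000 ms / 64 = 15.625 ms.
constexpr std::int64_t kDefaultTickUs = 15625;

// Hypothetical helper: the first tick boundary at or after (now + sleep).
// Assumes ticks fire at exact multiples of tick_us, counted from time 0.
constexpr std::int64_t wake_time_us(std::int64_t now_us, std::int64_t sleep_us,
                                    std::int64_t tick_us = kDefaultTickUs) {
    const std::int64_t deadline = now_us + sleep_us;
    // Round the deadline up to the next multiple of tick_us.
    return (deadline + tick_us - 1) / tick_us * tick_us;
}

// Delay the caller actually observes for a Sleep of sleep_us.
constexpr std::int64_t observed_delay_us(std::int64_t now_us, std::int64_t sleep_us,
                                         std::int64_t tick_us = kDefaultTickUs) {
    return wake_time_us(now_us, sleep_us, tick_us) - now_us;
}
```

In this model, a 1 ms sleep that starts exactly 1 ms before a tick is delayed by 1 ms, while one that starts a microsecond later misses that tick and waits almost 16.625 ms.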

In short, the nature of timer delays is such that (unless you busy-wait, and please do not busy-wait) the OS can wake threads only at specific times, using timer interrupts, and Windows uses a regular interrupt for this.

Some programs cannot tolerate such a wide range of delays (WPF, SQL Server, Quartz, PowerDirector, Chrome, the Go runtime, many games, etc.). Fortunately, they can work around the problem with the timeBeginPeriod function, which lets a program request a smaller interval. There is also an NtSetTimerResolution function that allows the interval to be set to less than a millisecond, but it is rarely used and never needed, so I will not mention it again.

Decades of madness

Here's a crazy thing: timeBeginPeriod can be called by any program and it changes the timer interrupt interval, and the timer interrupt is a global resource.

Imagine that process A sits in a loop calling Sleep(1). It shouldn't, but it does, and by default it wakes up every 15.625 ms, or 64 times per second. Then process B comes along and calls timeBeginPeriod(2). This makes the timer fire more often, and suddenly process A wakes up 500 times per second instead of 64. This is madness! But this is how Windows has always worked.

At this point, if process C appeared and called timeBeginPeriod(4), that wouldn't change anything: process A would keep waking up 500 times per second. In such a situation, it is not the last call that sets the rules, but the call with the minimum interval.

Thus, a timeBeginPeriod call from any running program can set the global timer interrupt interval. If that program exits or calls timeEndPeriod, the new minimum takes effect. If one program calls timeBeginPeriod(1), that becomes the timer interrupt interval for the whole system. If one program calls timeBeginPeriod(1) and another calls timeBeginPeriod(4), the one-millisecond interval still applies to everyone.
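The "smallest request wins" rule can be written down as a tiny model. This is only a sketch of the classic behavior as described above, not a Windows API: effective_interval_ms and wakeups_per_second are hypothetical names, and the 15.625 ms default is the value I saw on my machines.

```cpp
#include <initializer_list>

// Default interval observed on the author's machines: 1000 ms / 64.
constexpr double kDefaultIntervalMs = 15.625;

// Hypothetical model: the global interval is the smallest outstanding
// timeBeginPeriod request, or the default when nobody has asked for less.
constexpr double effective_interval_ms(std::initializer_list<unsigned> requests_ms) {
    double interval = kDefaultIntervalMs;
    for (unsigned r : requests_ms) {
        if (r < interval) interval = r;
    }
    return interval;
}

// How often a Sleep(1) loop in any process would wake under this model.
constexpr double wakeups_per_second(double interval_ms) {
    return 1000.0 / interval_ms;
}
```

With no requests the model gives 64 wake-ups per second. With requests of 2 ms and 4 ms outstanding it gives 500, and the 4 ms request has no effect until the 2 ms one is withdrawn.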

This matters because a high timer interrupt rate - and the associated high thread scheduling rate - can waste significant CPU power, as discussed here.

One application that needs timer-based scheduling is the web browser. The JavaScript standard has a setTimeout function that asks the browser to call a JavaScript function some number of milliseconds later. Chromium uses timers to implement this and other features (mostly WaitForSingleObject with timeouts rather than Sleep). This often requires a raised timer interrupt rate. To limit the impact on battery life, Chromium was recently redesigned to keep its timer interrupt rate below 125 Hz (8 ms interval) when running on battery power.

timeGetTime

The timeGetTime function (not to be confused with GetTickCount) returns the current time, as updated by the timer interrupt. Processors have historically not been very good at keeping accurate time (their clock frequencies are deliberately varied to avoid acting as FM transmitters, among other reasons), so they often rely on separate clock chips to maintain accurate time. Reading from those chips is expensive, which is why Windows maintains a 64-bit millisecond counter that is updated by the timer interrupt. This counter is stored in shared memory, so any process can cheaply read the current time from there without having to go to the clock chip. timeGetTime calls ReadInterruptTick, which essentially just reads this 64-bit counter. It's that simple!

Since the counter is updated by the timer interrupt, we can monitor it to find the timer interrupt frequency.
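One way to monitor the counter is to spin until its value changes and see how far it jumps. The sketch below is a hypothetical helper, not code from my test programs: observed_step_ms is an invented name, and it takes any callable that returns a millisecond counter. On Windows you could pass a lambda that wraps timeGetTime.

```cpp
#include <cstdint>

// Hypothetical helper: spin on a millisecond counter and report how far it
// jumps between two consecutive updates. `now` is any callable returning the
// counter value. The constexpr is optional; it only allows the helper to be
// exercised at compile time with a fake clock.
template <typename Clock>
constexpr std::uint32_t observed_step_ms(Clock now) {
    const std::uint32_t start = now();
    std::uint32_t first = now();
    while (first == start) first = now();    // wait for a fresh update
    std::uint32_t second = now();
    while (second == first) second = now();  // wait for the next update
    return second - first;                   // unsigned math survives wrap-around
}
```

On Windows a call might look like observed_step_ms([] { return timeGetTime(); }). Note that this busy-waits, which is fine for a short measurement and nothing else.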

New undocumented reality

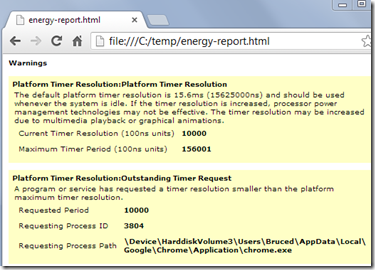

With the release of Windows 10 2004 (April 2020), some of these mechanisms changed slightly, but in a very confusing way. At first there were reports that timeBeginPeriod no longer worked. In fact, things turned out to be much more complicated.

The first experiments gave mixed results. When I ran a program that called timeBeginPeriod(2), clockres showed a timer interval of 2.0 ms, but a separate test program with a Sleep(1) loop woke up about 64 times per second instead of the 500 times seen on previous versions of Windows.

Science experiment

Then I wrote a couple of programs to study the system's behavior. One program (change_interval.cpp) just sits in a loop, calling timeBeginPeriod with intervals from 1 to 15 ms. It holds each interval for four seconds, then moves on to the next, and repeats the cycle indefinitely. Fifteen lines of code. Easy.
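For readers who want to try something similar, here is a rough sketch of such a loop. It is a reconstruction from the description above, not the actual change_interval.cpp. The helper names period_for_step and run_change_interval are invented, and the Windows-specific part is guarded so the file still compiles elsewhere.

```cpp
#ifdef _WIN32
#include <windows.h>
#pragma comment(lib, "winmm.lib")  // timeBeginPeriod/timeEndPeriod live in winmm
#endif

constexpr unsigned kMinPeriodMs = 1;
constexpr unsigned kMaxPeriodMs = 15;
constexpr unsigned kHoldMs = 4000;  // hold each setting for four seconds

// Period to request on a given step: 1, 2, ..., 15, then back to 1.
constexpr unsigned period_for_step(unsigned step) {
    return kMinPeriodMs + step % (kMaxPeriodMs - kMinPeriodMs + 1);
}

#ifdef _WIN32
// Hypothetical driver loop; never returns. Call it from main().
inline void run_change_interval() {
    for (unsigned step = 0;; ++step) {
        const unsigned period = period_for_step(step);
        timeBeginPeriod(period);  // request a timer interval of `period` ms
        Sleep(kHoldMs);           // keep the request active for four seconds
        timeEndPeriod(period);    // must match the timeBeginPeriod value
    }
}
#endif
```

Each timeBeginPeriod call is paired with a timeEndPeriod call using the same value, so only one request from this process is outstanding at a time.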

The other program (measure_interval.cpp) runs several tests to see how its own behavior changes as change_interval.cpp changes the interval. It monitors three things.

- It asks the OS for the current resolution of the global timer using NtQueryTimerResolution.

- It measures the resolution of timeGetTime.

- It measures the delay of Sleep(1).
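The Sleep(1) measurement can be approximated in portable C++. This is a sketch under stated assumptions, not the code in measure_interval.cpp: std::this_thread::sleep_for(1 ms) stands in for Sleep(1), the function name average_sleep_ms is invented, and it times a fixed number of sleeps with std::chrono::steady_clock.

```cpp
#include <cassert>
#include <chrono>
#include <thread>

// Hypothetical helper: average wall-clock cost, in milliseconds, of
// `iterations` consecutive one-millisecond sleeps.
inline double average_sleep_ms(int iterations) {
    assert(iterations > 0);
    using clock = std::chrono::steady_clock;
    const auto start = clock::now();
    for (int i = 0; i < iterations; ++i) {
        // Assumption: this behaves like Sleep(1) on the platform under test.
        std::this_thread::sleep_for(std::chrono::milliseconds(1));
    }
    const std::chrono::duration<double, std::milli> elapsed = clock::now() - start;
    return elapsed.count() / iterations;
}
```

Calling average_sleep_ms(64) while another process cycles through timer intervals should show how much of that other process's request leaks into this one.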

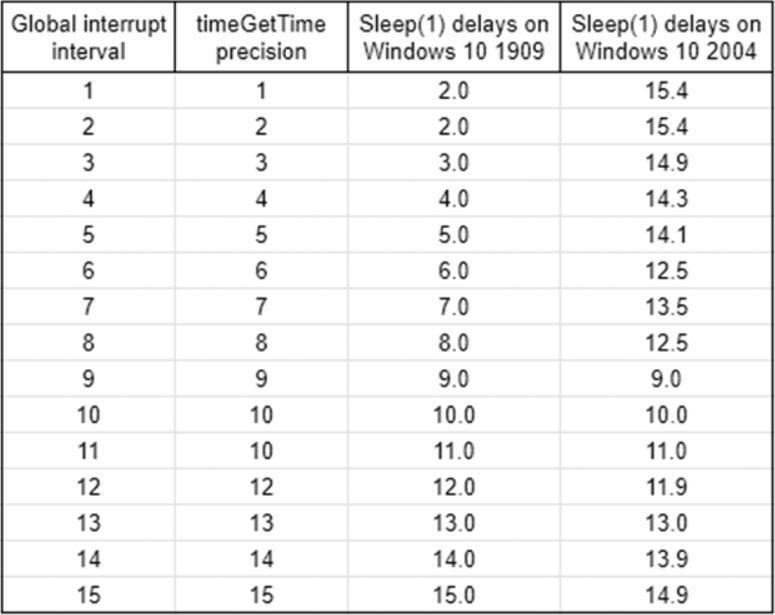

@FelixPetriconi ran the tests for me on Windows 10 1909 and I ran them on Windows 10 2004. Here are the jitter-free results:

This means that timeBeginPeriod still sets the global timer interval on all Windows versions. From the timeGetTime() results we can tell that the interrupt fires at this rate on at least one processor core and that the time is updated. Note also that the 2.0 in the first row for 1909 was also 2.0 in Windows XP, then 1.0 in Windows 7/8, and now seems to be back to 2.0 again?

However, the scheduling behavior changes dramatically in Windows 10 2004. Previously, the delay for Sleep(1) in any process simply equaled the timer interrupt interval, except with timeBeginPeriod(1), giving a graph like this:

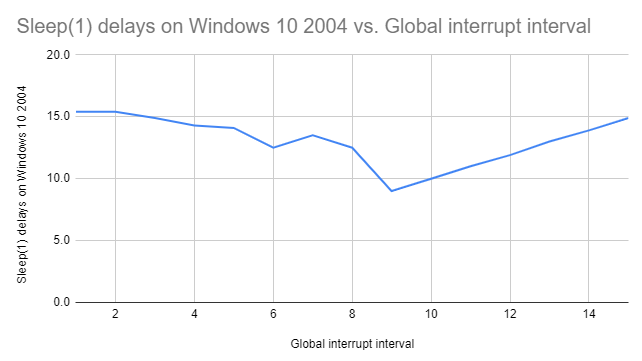

In Windows 10 2004, the relationship between timeBeginPeriod and sleep latency in another process (one that did not call timeBeginPeriod) looks strange:

The exact shape of the left side of the graph is unclear, but it definitely goes in the opposite direction from the previous one!

Why?

Effects

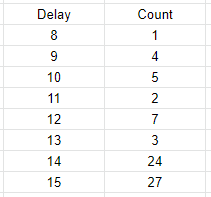

As pointed out in the reddit and hacker-news discussions, the left half of the graph is probably an attempt to mimic the "normal" delay as closely as possible, given the available precision of the global timer interrupt. That is, with an interrupt interval of 6 ms the delay is about 12 ms (two cycles), and with an interrupt interval of 7 ms it is about 14 ms (two cycles). However, measuring the actual delays shows that reality is even more confusing. With the timer interrupt set to 7 ms, a Sleep(1) delay of 14 ms is not even the most common result:

Some readers may blame random noise on the system, but when the timer interrupt interval is 9 ms or more the noise is zero, so that cannot be the explanation. Try running the updated code yourself. The timer interrupt intervals from 4 ms to 8 ms seem to be particularly puzzling. The interval measurements should probably be done with QueryPerformanceCounter, because the current code is affected unpredictably by both the changed scheduling rules and the changes in timer precision.

This is all very strange, and I understand neither the logic nor the implementation. It may be a bug, but I doubt it. I think there is some complex backward-compatibility logic behind it. But the most effective way to avoid compatibility problems is to document changes, preferably in advance, and this change was made without any notice.

This will not affect most programs. If a process wants a faster timer interrupt, it should call timeBeginPeriod itself. However, the following problems may occur:

- A program might accidentally assume that Sleep(1) and timeGetTime have the same resolution, which is no longer the case. Such an assumption seems unlikely, though.

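- A program might depend on a fast timer interrupt rate without requesting it itself. Tools such as Windows System Timer Tool and TimerResolution 1.2 "fix" such programs by raising the timer interrupt frequency from a separate process. Those fixes presumably no longer work, or at least not as well.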

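- A multi-process program might raise the timer interrupt frequency in one process and expect that to affect scheduling in its other processes. That no longer works. Now every process that needs the faster rate has to call timeBeginPeriod itself.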

The change_interval.cpp test program only works if no one requests a higher timer interrupt rate. Since both Chrome and Visual Studio are in the habit of doing this, I had to do most of my experimentation without internet access and coding in notepad . Someone suggested Emacs, but getting involved in this discussion is beyond my power.