The article discusses the problem of cleaning up images that accumulate in container registries (Docker Registry and its analogues) in the context of modern CI/CD pipelines for cloud native applications delivered to Kubernetes. It outlines the main criteria for image relevance and the resulting difficulties in automating cleanup, saving space and meeting the needs of teams. Finally, using a specific Open Source project as an example, we will show how these difficulties can be overcome.

Introduction

The number of images in a container registry can grow rapidly, taking up more and more storage space and, accordingly, significantly increasing its cost. To control, limit or keep the growth of occupied space at an acceptable level, teams usually:

- use a fixed number of tags for images;

- clean up images one way or another.

The first approach is sometimes acceptable for small teams. If developers can get by with a set of permanent tags (latest, main, test, boris, etc.), the registry will not swell and you can go a long time without thinking about cleanup. After all, outdated images are simply overwritten, and there is no work left for cleanup (everything is handled by the ordinary garbage collector).

However, this approach severely limits development and is rarely applicable to modern CI/CD projects. Automation has become an integral part of development, allowing you to test, deploy and deliver new functionality to users much faster. For example, in all our projects a CI pipeline is created automatically for every commit. It builds an image, tests it, rolls it out to various Kubernetes environments for debugging and further checks, and, if all goes well, the changes reach the end user. None of this has been rocket science for a long time; it is everyday life for many, most likely including you, since you are reading this article.

Since bugs are fixed and new functionality is developed in parallel, and releases can happen several times a day, the development process obviously involves a significant number of commits, which means a large number of images in the registry. As a result, the question arises of how to organize effective registry cleanup, i.e. the removal of irrelevant images.

But how do you determine whether an image is relevant?

Image relevance criteria

In the vast majority of cases, the main criteria will be as follows:

1. The first (the most obvious and most critical) criterion covers images that are currently used in Kubernetes. Removing these images can lead to costly production downtime (for example, the images may be needed when new replicas are started) or undo the efforts of a team that is debugging in one of the environments. (For this reason, we even built a special Prometheus exporter that monitors for such missing images in any Kubernetes cluster.)

2. The second (less obvious, but also very important and again related to operations) covers images needed for a rollback in case of serious problems in the current version. For example, with Helm these are the images used in the saved revisions of a release. (By the way, the default limit in Helm is 256 revisions, but hardly anyone really needs to keep that many versions...) After all, the main reason to store revisions is to be able to use them later, i.e. to roll back to them if necessary.

3. The third covers the needs of developers: all images related to their current work. For example, for a pull request it makes sense to keep the image for the latest commit and, say, for the previous one: this way the developer can quickly return to any task and work with the latest changes.

4. The fourth covers images that correspond to versions of our application, i.e. the final product: v1.0.0, 20.04.01, sierra, etc.

NB: The criteria defined here were formulated based on our experience working with dozens of development teams from different companies. Of course, depending on the specifics of the development processes and the infrastructure used (for example, if Kubernetes is not used), these criteria may differ.

Requirements and existing solutions

Popular container registry services usually offer their own image cleanup policies, in which you define the conditions under which a tag is removed from the registry. However, these conditions are limited to parameters such as tag names, creation time and number of tags*.

* This depends on the specific container registry implementation. We reviewed the capabilities of the following solutions as of September 2020: Azure CR, Docker Hub, ECR, GCR, GitHub Packages, GitLab Container Registry, Harbor Registry, JFrog Artifactory, Quay.io.

This set of parameters is quite enough to satisfy the fourth criterion, i.e. to select images that match versions. For all the other criteria, however, you have to settle for some kind of compromise (a stricter or, conversely, a more lenient policy) depending on your expectations and budget.

For example, the third criterion, related to the needs of developers, can be addressed by organizing processes within teams: specific image naming, special allow lists and internal agreements. But ultimately it still needs to be automated. And if ready-made solutions are not capable enough, you have to build something of your own.

The situation is similar with the first two criteria: they cannot be satisfied without receiving data from an external system - the one where the applications are deployed (in our case, it is Kubernetes).

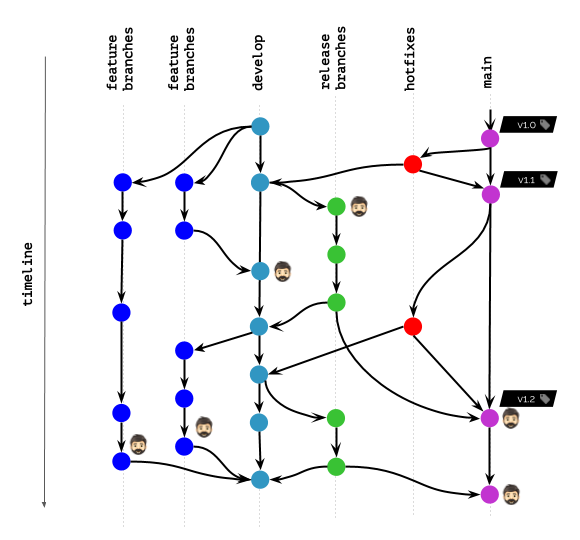

Illustration of a workflow in Git

Suppose you work with Git roughly as shown in the illustration above. The icon with a head in the diagram marks container images that are currently deployed to Kubernetes for some users (end users, testers, managers, etc.) or are used by developers for debugging and similar purposes.

What happens if the cleanup policies only allow you to keep (not delete) images with specified tag names?

Obviously, this scenario will not please anyone.

What changes if the policies allow you to keep images for a given time interval or number of recent commits?

The result is much better, but still far from ideal. After all, there are still developers who need images in the registry (or even deployed in K8s) to fix bugs...

Summing up the current state of the market: the functions available in container registries do not offer enough flexibility for cleanup, and the main reason is that they cannot interact with the outside world. As a result, teams that need this flexibility are forced to implement image removal themselves, "from the outside", using the Docker Registry API (or the native API of the corresponding implementation).
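For reference, here is a minimal sketch of what removing a tag "from the outside" looks like with the Docker Registry HTTP API V2. It assumes an unauthenticated registry at a placeholder URL with deletion enabled; the helper function names are hypothetical. Because the API deletes manifests by digest rather than by tag, the digest is first resolved from the Docker-Content-Digest header of a HEAD request on the tag.

```python
import urllib.request

# Accept header for Docker image manifest v2, schema 2. Multi-platform images
# would also need the manifest list / OCI index media types (omitted here).
MANIFEST_V2 = "application/vnd.docker.distribution.manifest.v2+json"


def manifest_head_request(registry_url: str, repo: str, tag: str) -> urllib.request.Request:
    """HEAD on a tag: the response carries the manifest digest in Docker-Content-Digest."""
    return urllib.request.Request(
        f"{registry_url}/v2/{repo}/manifests/{tag}",
        method="HEAD",
        headers={"Accept": MANIFEST_V2},
    )


def manifest_delete_request(registry_url: str, repo: str, digest: str) -> urllib.request.Request:
    """DELETE on a digest: the Registry API deletes manifests by digest, not by tag."""
    return urllib.request.Request(
        f"{registry_url}/v2/{repo}/manifests/{digest}",
        method="DELETE",
    )


def delete_tag(registry_url: str, repo: str, tag: str) -> str:
    """Resolve tag -> digest, delete the manifest and return the deleted digest."""
    with urllib.request.urlopen(manifest_head_request(registry_url, repo, tag)) as resp:
        digest = resp.headers["Docker-Content-Digest"]
    with urllib.request.urlopen(manifest_delete_request(registry_url, repo, digest)) as resp:
        # The registry answers 202 Accepted on success; HTTP errors raise HTTPError.
        if resp.status != 202:
            raise RuntimeError(f"unexpected status {resp.status} for {repo}@{digest}")
    return digest
```

Note that deleting by digest removes every tag pointing to that manifest, and disk space is only reclaimed after the registry's own garbage collection runs (for Docker Registry, deletion must also be enabled via storage.delete.enabled). Everything beyond this primitive, i.e. deciding *which* tags to delete, is exactly what the registry cannot do for you.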

However, we were looking for a universal solution that would automate image cleaning for different teams using different registries ...

Our path to universal image cleaning

Where does this need come from? The fact is that we are not a single group of developers but a team that serves many of them at once, helping to solve CI/CD issues comprehensively. Our main technical tool for this is the open source werf utility. Its distinctive feature is that it does not perform a single function but supports continuous delivery processes at all stages, from build to deployment.

Publishing images to the registry* (immediately after they are built) is an obvious function of such a utility. And since images are placed there for storage, then, unless your storage is unlimited, you need to take responsibility for cleaning them up afterwards. How we achieved this while satisfying all the criteria listed above is discussed below.

* Although the registries themselves may differ (Docker Registry, GitLab Container Registry, Harbor, etc.), their users face the same problems. The universal solution in our case does not depend on the registry implementation, since it runs outside the registries and offers the same behavior for all of them.

Despite the fact that we are using werf as an example of implementation, we hope that the approaches used will be useful to other teams that face similar difficulties.

So, we took on an external implementation of the image cleanup mechanism instead of relying on the capabilities already built into container registries. The first step was to use the Docker Registry API to create the same primitive policies based on the number of tags and their creation time (mentioned above). We added an allow list to them based on the images used in the deployed infrastructure, i.e. Kubernetes. For the latter, it was enough to go through all deployed resources via the Kubernetes API and collect the list of image values.
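Collecting that allow list boils down to walking the pod specs embedded in the deployed resources. The sketch below assumes the resources (Deployments, StatefulSets, DaemonSets, Jobs, CronJobs, Pods, etc.) have already been fetched from the Kubernetes API and parsed into dicts; the function names are hypothetical, and this is an illustration rather than werf's actual code.

```python
from typing import Any, Iterable, Set

# Keys under which Kubernetes pod specs list containers.
CONTAINER_KEYS = ("containers", "initContainers", "ephemeralContainers")


def images_in(obj: Any) -> Set[str]:
    """Recursively collect container images from one Kubernetes object (parsed JSON/YAML).

    Works for any workload kind, since they all eventually embed a pod spec.
    """
    found: Set[str] = set()
    if isinstance(obj, dict):
        for key, value in obj.items():
            if key in CONTAINER_KEYS and isinstance(value, list):
                found.update(c["image"] for c in value if isinstance(c, dict) and "image" in c)
            else:
                found |= images_in(value)
    elif isinstance(obj, list):
        for item in obj:
            found |= images_in(item)
    return found


def images_in_use(resources: Iterable[dict]) -> Set[str]:
    """Union of images over all deployed resources: the allow list for cleanup."""
    result: Set[str] = set()
    for resource in resources:
        result |= images_in(resource)
    return result
```

For example, a Deployment with an init container and an app container plus a CronJob yield all three images:

```python
deployment = {"kind": "Deployment", "spec": {"template": {"spec": {
    "initContainers": [{"name": "migrate", "image": "registry.example.com/app:abc"}],
    "containers": [{"name": "app", "image": "registry.example.com/app:def"}]}}}}
cronjob = {"kind": "CronJob", "spec": {"jobTemplate": {"spec": {"template": {"spec": {
    "containers": [{"name": "job", "image": "registry.example.com/job:1"}]}}}}}}
print(sorted(images_in_use([deployment, cronjob])))
# ['registry.example.com/app:abc', 'registry.example.com/app:def', 'registry.example.com/job:1']
```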

This trivial solution closed the most critical problem (criterion #1), but it was only the beginning of our journey toward improving the cleanup mechanism. The next, and much more interesting, step was the decision to associate published images with the Git history.

Tagging schemes

To begin with, we chose an approach in which the final image stores the information needed for cleanup, and built the process on tagging schemes. When publishing an image, the user selected a specific tagging option (git-branch, git-commit or git-tag) and used the corresponding value. In CI systems, these values were set automatically from environment variables. In essence, the final image was associated with a specific Git primitive, and the data needed for cleanup was stored in its labels.

This approach resulted in a set of policies that allowed Git to be used as the single source of truth:

- When a branch or tag was deleted in Git, the associated images in the registry were automatically deleted as well.

- The number of images associated with Git tags and commits could be controlled by the number of tags used in the chosen scheme and the time the associated commit was created.
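These two policies can be sketched roughly as follows. The data model is an assumption for illustration: each published image exposes its scheme, Git value and commit time (as read from its labels), and the caller passes in the branches and tags that currently exist in Git. The names PublishedImage and images_to_delete are hypothetical, not werf's former implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Set


@dataclass(frozen=True)
class PublishedImage:
    tag: str               # tag in the registry
    scheme: str            # "git-branch", "git-commit" or "git-tag" (read from image labels)
    git_ref: str           # branch name, commit SHA or Git tag name
    commit_time: datetime  # creation time of the associated commit


def images_to_delete(images: List[PublishedImage], branches: Set[str], git_tags: Set[str],
                     keep_last: int, max_age: timedelta, now: datetime) -> List[PublishedImage]:
    doomed: List[PublishedImage] = []
    alive: List[PublishedImage] = []
    # Policy 1: Git is the source of truth. If the branch or tag is gone, so is the image.
    refs_in_git: Dict[str, Set[str]] = {"git-branch": branches, "git-tag": git_tags}
    for img in images:
        refs = refs_in_git.get(img.scheme)
        if refs is not None and img.git_ref not in refs:
            doomed.append(img)
        else:
            alive.append(img)
    # Policy 2: for tag- and commit-based images, keep only the `keep_last` newest
    # per scheme and nothing whose commit is older than `max_age`.
    for scheme in ("git-tag", "git-commit"):
        group = sorted((i for i in alive if i.scheme == scheme),
                       key=lambda i: i.commit_time, reverse=True)
        for rank, img in enumerate(group):
            if rank >= keep_last or now - img.commit_time > max_age:
                doomed.append(img)
    return doomed
```

The weak spot is already visible here: every image is tied to exactly one Git value, which stops being true once a single tag can cover several commits.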

In general, the resulting implementation met our needs, but a new challenge soon awaited us. While using tagging schemes based on Git primitives, we ran into a number of shortcomings. (Since describing them is beyond the scope of this article, anyone interested can read the details here.) So, after deciding to move to a more efficient approach to tagging (content-based tagging), we had to revise the implementation of image cleanup.

New algorithm

Why? With content-based tagging, each tag can correspond to multiple commits in Git. When cleaning up images, you can no longer rely only on the commit at which the tag was added to the registry.

For the new cleanup algorithm, we decided to move away from tagging schemes and build the process on meta-images, each of which stores a pair of:

- the commit at which the publication was performed (regardless of whether the image was added, changed or remained the same in the container registry);

- our internal identifier corresponding to the built image.

In other words, the published tags were linked to commits in Git.
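The sketch below illustrates why such records are needed. It assumes a hypothetical content_tag that hashes all build inputs (it is not werf's actual digest scheme) and an in-memory MetaImages class standing in for the meta-images stored in the registry. Two commits that do not change the build inputs produce the same tag, and only the per-publication records reveal that this one tag covers both commits.

```python
import hashlib
from typing import Dict, List, Set, Tuple


def content_tag(build_inputs: Dict[str, bytes]) -> str:
    """Hypothetical content-based tag: a digest over all build inputs (path -> content)."""
    h = hashlib.sha256()
    for path in sorted(build_inputs):
        data = build_inputs[path]
        # Length-prefix each entry so different inputs cannot produce the same byte stream.
        h.update(f"{len(path)}:{path}:{len(data)}:".encode())
        h.update(data)
    return h.hexdigest()[:16]


class MetaImages:
    """In-memory stand-in for meta-images: one (commit, image id) pair per publication."""

    def __init__(self) -> None:
        self._records: List[Tuple[str, str]] = []

    def publish(self, commit: str, image_id: str) -> None:
        # Recorded even when image_id already exists in the registry (image unchanged).
        self._records.append((commit, image_id))

    def commits_by_image(self) -> Dict[str, Set[str]]:
        """Invert the records: which commits does each published image cover?"""
        result: Dict[str, Set[str]] = {}
        for commit, image_id in self._records:
            result.setdefault(image_id, set()).add(commit)
        return result
```

Publishing commits c1 and c2 with identical inputs and c3 with a change yields two tags, the first one covering both c1 and c2.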

Final configuration and general algorithm

When configuring cleanup, users now have access to policies that determine which images are considered relevant. Each such policy defines:

- a set of references, i.e. Git tags or Git branches, that are traversed during scanning;

- the limit on the number of images to keep for each reference in the set.

To illustrate, this is how the default policy configuration began to look:

```yaml
cleanup:
  keepPolicies:
  - references:
      tag: /.*/
      limit:
        last: 10
  - references:
      branch: /.*/
      limit:
        last: 10
        in: 168h
        operator: And
    imagesPerReference:
      last: 2
      in: 168h
      operator: And
  - references:
      branch: /^(main|staging|production)$/
    imagesPerReference:
      last: 10
```
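To make the references part of a policy more concrete, here is a sketch of how it could be evaluated. It is based on our assumptions about the semantics, not on werf's source: a value wrapped in slashes is a regular expression, otherwise an exact name; references are ordered by last activity; last keeps the N newest, in keeps those active within the period, and operator combines the two conditions (And: both, Or: either). Reference, matches and select_references are hypothetical names.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass(frozen=True)
class Reference:
    name: str
    last_activity: datetime  # tag creation date, or date of the branch's latest commit


def matches(pattern: str, name: str) -> bool:
    """'/regex/' is treated as a regular expression, anything else as an exact name."""
    if len(pattern) >= 2 and pattern.startswith("/") and pattern.endswith("/"):
        # Unanchored search; anchor explicitly with ^...$ as the config above does.
        return re.search(pattern[1:-1], name) is not None
    return pattern == name


def select_references(refs: List[Reference], pattern: str, now: datetime,
                      last: Optional[int] = None, within: Optional[timedelta] = None,
                      operator: str = "And") -> List[str]:
    """Apply a policy's references + limit (last / in / operator) to Git references."""
    candidates = sorted((r for r in refs if matches(pattern, r.name)),
                        key=lambda r: r.last_activity, reverse=True)
    selected: List[str] = []
    combine = all if operator == "And" else any
    for rank, ref in enumerate(candidates):
        conditions = []
        if last is not None:
            conditions.append(rank < last)                        # among the N newest
        if within is not None:
            conditions.append(now - ref.last_activity <= within)  # active recently
        if not conditions or combine(conditions):
            selected.append(ref.name)
    return selected
```

Under these assumptions, the second policy corresponds to select_references(branches, "/.*/", now, last=10, within=timedelta(hours=168)); imagesPerReference would then be applied in the same way to the images found in each selected reference's history.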

This configuration contains three policies that implement the following rules:

- Keep an image for each of the last 10 Git tags (by tag creation date).

- Keep no more than 2 images published in the last week for each of no more than 10 branches with activity over the last week.

- Keep 10 images for each of the main, staging and production branches.

The final algorithm boils down to the following steps:

- Getting manifests from the container registry.

- Excluding images used in Kubernetes (we have collected them beforehand by polling the K8s API).

- Scanning the Git history and excluding images according to the specified policies.

- Removing the remaining images.
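The four steps can be tied together in a compact sketch. Simplifying assumptions: images are identified by plain tag strings; each selected reference's history is a list of commit SHAs, newest first; the published mapping (commit to tag and publication time) stands in for the meta-images; and one set of imagesPerReference limits applies to all references, whereas real policies differ per reference set. All names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Dict, Iterable, List, Optional, Set, Tuple

# commit -> (image tag, publication time): a stand-in for what meta-images record.
Published = Dict[str, Tuple[str, datetime]]


def images_per_reference(history: Iterable[str], published: Published, now: datetime,
                         last: Optional[int] = None, within: Optional[timedelta] = None,
                         operator: str = "And") -> Set[str]:
    """Walk one reference's history (newest commit first) and pick the images to keep."""
    ordered: List[Tuple[str, datetime]] = []
    seen: Set[str] = set()
    for commit in history:
        if commit in published:
            image, when = published[commit]
            if image not in seen:  # one content-based tag may cover several commits
                seen.add(image)
                ordered.append((image, when))
    keep: Set[str] = set()
    combine = all if operator == "And" else any
    for rank, (image, when) in enumerate(ordered):
        conditions = []
        if last is not None:
            conditions.append(rank < last)
        if within is not None:
            conditions.append(now - when <= within)
        if not conditions or combine(conditions):
            keep.add(image)
    return keep


def cleanup(registry_tags: Set[str], used_in_k8s: Set[str], histories: Dict[str, List[str]],
            published: Published, now: datetime, **limits) -> Set[str]:
    """Return the tags to delete; `limits` are passed to images_per_reference."""
    candidates = set(registry_tags)       # 1. manifests from the registry
    candidates -= used_in_k8s             # 2. minus images deployed in Kubernetes
    for history in histories.values():    # 3. minus images kept by the policies
        candidates -= images_per_reference(history, published, now, **limits)
    return candidates                     # 4. whatever remains gets deleted
```

Walking the history rather than looking up "the" commit of a tag is what makes content-based tags work: a tag shared by several commits is counted once, at the newest commit that published it.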

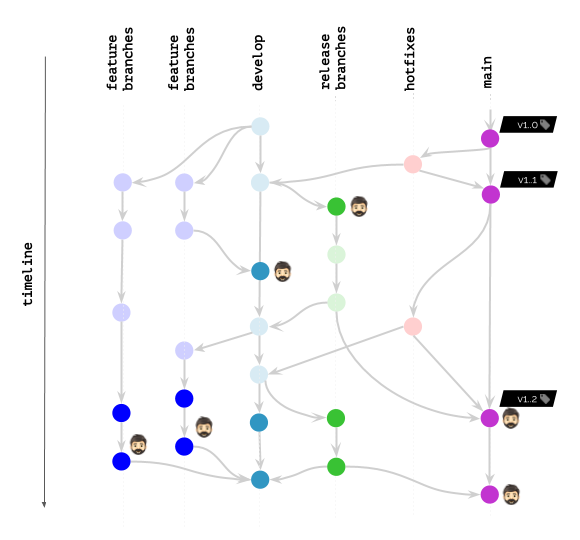

Returning to our illustration, here's what happens with werf:

However, even if you don't use werf, a similar approach to advanced image cleanup, in one implementation or another (depending on your preferred image tagging approach), can be applied in other systems and utilities as well. To do this, it is enough to keep in mind the problems that arise and find the capabilities in your stack that let you solve them most smoothly. We hope that the path we have traveled will help you look at your particular case from a fresh angle.

Conclusion

- Sooner or later, most teams face the problem of registry overflow.

- When looking for solutions, first of all, it is necessary to determine the criteria for the relevance of the image.

- The tools offered by popular container registry services allow only a very simple cleanup that does not take into account the "outside world": the images used in Kubernetes and the specifics of team workflows.

- A flexible and efficient algorithm must understand CI/CD processes and operate on more than just Docker image data.
