One of the basic requirements of the Kubernetes networking model is that each pod must have its own IP address, and every other pod in the cluster must be able to contact it at that address. There are many network "providers" (Flannel, Calico, Canal, etc.) that help implement this network model.

When I first started working with Kubernetes, it was not entirely clear to me how exactly pods get their IP addresses. Even with an understanding of how the individual components functioned, it was difficult to imagine them working together. For example, I knew what CNI plugins were for, but I had no idea how they were called. So I decided to write this article to share what I learned about the various networking components and how they work together in a Kubernetes cluster to give each pod its own unique IP address.

There are different ways of organizing networking in Kubernetes, just as there are different runtime options for containers. This post uses Flannel as the network provider and containerd as the container runtime. I also assume that you know how networking between containers works, so I will only briefly touch on it, solely for context.

Some basic concepts

Containers and networking at a glance

There are plenty of excellent publications on the Internet explaining how containers communicate with each other over the network. Therefore, I will only give a general overview of the basic concepts and limit myself to one approach, which involves creating a Linux bridge and encapsulating packets. The details are omitted, since container networking deserves a separate article. Links to some particularly informative publications are given below.

Containers on a single host

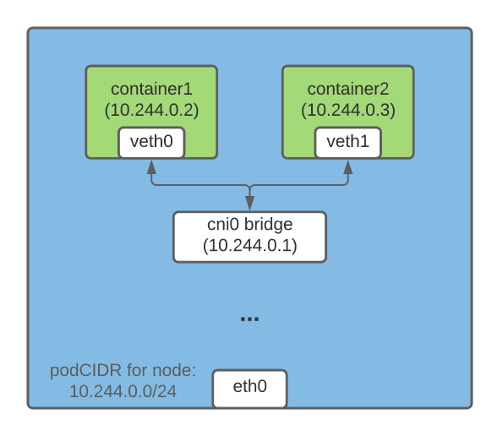

One way to let containers on the same host communicate by IP address is to create a Linux bridge. For this, Kubernetes (and Docker) creates veth (virtual ethernet) devices. One end of the veth device connects to the container's network namespace, the other connects to the Linux bridge on the host's network.

All containers on the same host have one end of the veth connected to a bridge through which they can communicate with each other using IP addresses. The Linux bridge also has an IP address and acts as a gateway for egress traffic from pods to other nodes.

Containers on different hosts

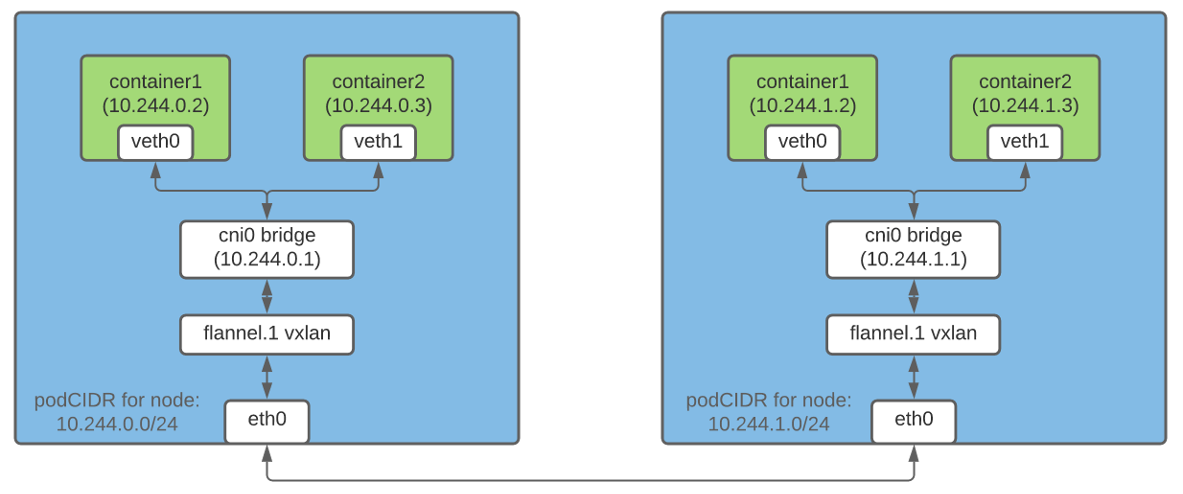

Packet encapsulation is one way to allow containers on different hosts to communicate with each other using IP addresses. In Flannel, this is handled by vxlan, which "packs" the original packet into a UDP packet and then sends it to its destination.

In a Kubernetes cluster, Flannel creates a vxlan device and updates the route table on each node accordingly. Each packet destined for a container on a different host goes through the vxlan device and is encapsulated in a UDP packet. At the destination, the inner packet is extracted and forwarded to the desired pod.
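The cost of this encapsulation can be worked out with simple arithmetic. The sketch below assumes an IPv4 underlay and a physical MTU of 1500 bytes (both are assumptions, not something stated by Flannel's configuration here); under those assumptions the result matches the FLANNEL_MTU value of 1450 that appears later in this article.

```python
# Header sizes in bytes for a VXLAN-encapsulated packet over an IPv4 underlay.
OUTER_IPV4_HEADER = 20
OUTER_UDP_HEADER = 8
VXLAN_HEADER = 8
INNER_ETHERNET_HEADER = 14  # the original frame's Ethernet header travels inside the tunnel


def vxlan_overhead() -> int:
    """Bytes added around the original IP packet by the encapsulation."""
    return (OUTER_IPV4_HEADER + OUTER_UDP_HEADER
            + VXLAN_HEADER + INNER_ETHERNET_HEADER)


def inner_mtu(physical_mtu: int = 1500) -> int:
    """Largest pod packet that still fits into one underlay packet.

    The default of 1500 is an assumption about the physical network.
    """
    return physical_mtu - vxlan_overhead()


print(vxlan_overhead())  # 50
print(inner_mtu())       # 1450
```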

Note: This is just one way of organizing networking between containers.

What is CRI?

CRI (Container Runtime Interface) is a plugin interface that allows kubelet to use different container runtimes. Various runtimes implement the CRI API, so users can pick the one they prefer.

What is CNI?

The CNI project is a specification for a universal networking solution for Linux containers. In addition, it includes plugins responsible for various functions when setting up a pod's network. A CNI plugin is an executable file that conforms to the specification (we will discuss some of the plugins below).

Subnetting hosts to assign IP addresses to pods

Since each pod in the cluster must have an IP address, it is important to make sure that this address is unique. This is accomplished by assigning each host a unique subnet, from which IP addresses are then assigned to the pods on that host.

Node IPAM Controller

When nodeipam is passed to the --controllers flag of kube-controller-manager, it allocates a separate subnet (podCIDR) for each node from the cluster CIDR (i.e. the range of IP addresses for the cluster network). Since these podCIDRs do not overlap, each pod can be assigned a unique IP address.
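To make the idea concrete, here is a minimal sketch of carving non-overlapping per-node subnets out of a cluster CIDR. It is not the controller's actual algorithm: the function name, the node names, the sequential allocation order, and the /24 node mask are all assumptions chosen for illustration.

```python
import ipaddress


def allocate_pod_cidrs(cluster_cidr, node_names, node_mask=24):
    """Hand each node the next unused subnet of the cluster CIDR.

    Hypothetical helper: the real controller keeps state and need not
    allocate sequentially. node_mask=24 is an assumed per-node prefix.
    """
    subnets = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=node_mask)
    allocation = {}
    for name in node_names:
        try:
            allocation[name] = str(next(subnets))
        except StopIteration:
            raise ValueError("cluster CIDR exhausted") from None
    return allocation


# Node names are made up for the example.
cidrs = allocate_pod_cidrs("10.244.0.0/16", ["node-a", "node-b", "node-c"])
for node, cidr in cidrs.items():
    print(node, cidr)
```

Because every node draws from a distinct subnet, any address handed out inside one node's podCIDR cannot collide with an address on another node.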

A Kubernetes node is assigned a podCIDR when it first registers with the cluster. To change the podCIDR of nodes, you need to deregister them and then re-register them, making the appropriate changes to the configuration of the Kubernetes control plane in between. You can display the podCIDR of a node using the following command:

$ kubectl get no <nodeName> -o json | jq '.spec.podCIDR'

10.244.0.0/24

Kubelet, container runtime and CNI plugins: how it all works

Scheduling a pod to a node involves many preparatory steps. In this section, I will focus only on those that are directly related to pod networking.

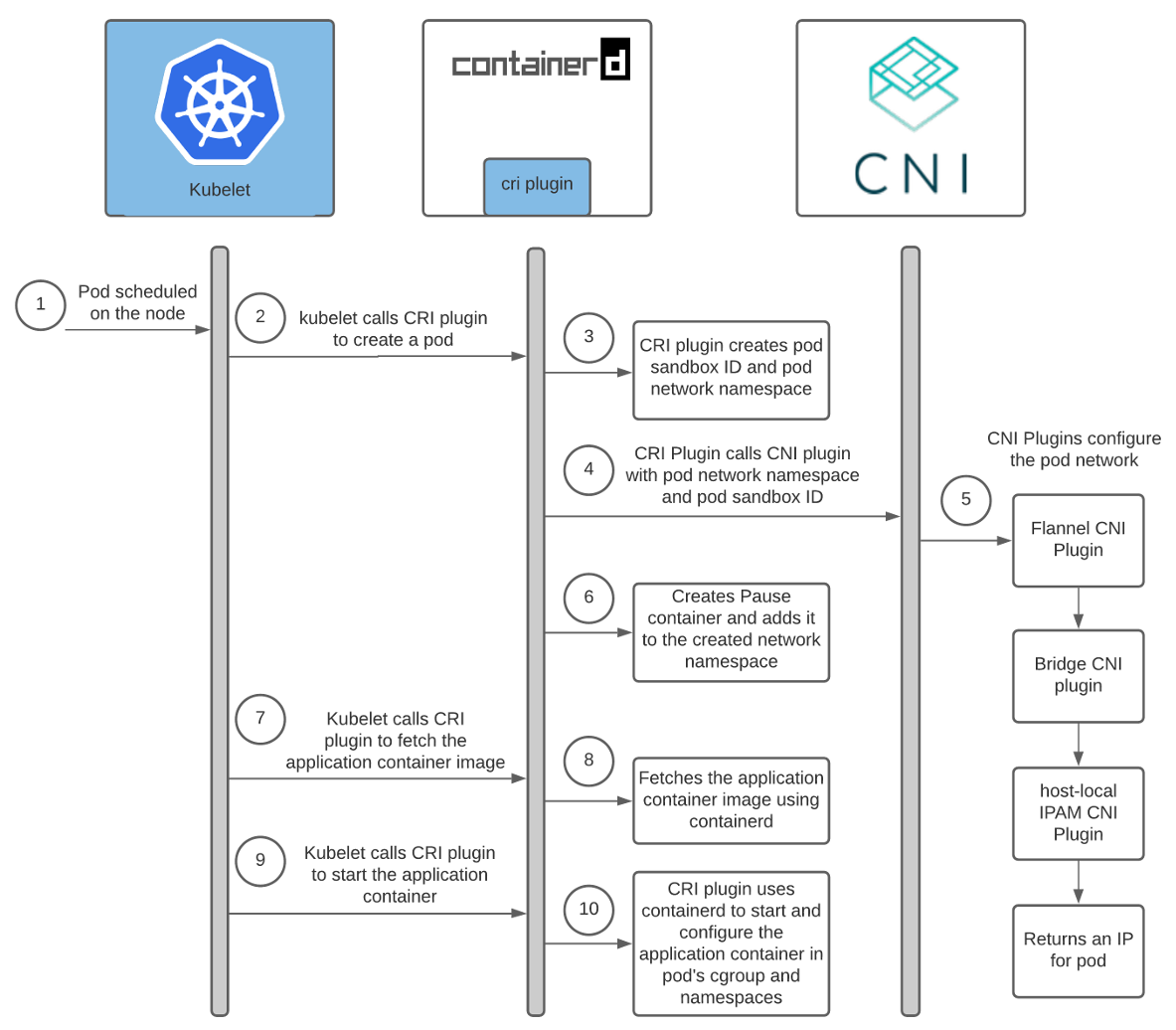

Scheduling a pod to a node triggers the following chain of events:

Reference: Containerd CRI Plugin Architecture.

Interaction between the container runtime and CNI plugins

Each network provider has its own CNI plugin. The container runtime launches it to configure the network for the pod as it starts up. In the case of containerd, the Containerd CRI plugin is responsible for launching the CNI plugin.

Moreover, each provider has its own agent. It is installed on all Kubernetes nodes and is responsible for networking the pods. This agent either comes with the CNI config, or creates it on the node itself. The config helps the CRI plugin to determine which CNI plugin to call.

The location of the CNI config can be customized; by default it lies in /etc/cni/net.d/<config-file>. Cluster administrators are also responsible for installing CNI plugins on each cluster node. Their location is also customizable; the default directory is /opt/cni/bin.
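How a runtime picks a config when several files sit in that directory can be sketched as follows. This assumes the commonly described behavior of taking the first config file in lexicographic order, which is why provider files carry numeric prefixes such as 10-; treat both the rule and the list of extensions as assumptions rather than a statement of containerd's exact logic. The function name and the extra file names are hypothetical.

```python
import os
import tempfile

# Assumed set of file extensions recognised as CNI configs.
CONFIG_EXTENSIONS = (".conf", ".conflist", ".json")


def pick_cni_config(conf_dir):
    """Return the path of the first config file in lexicographic order, or None."""
    candidates = sorted(
        name for name in os.listdir(conf_dir)
        if name.endswith(CONFIG_EXTENSIONS)
    )
    return os.path.join(conf_dir, candidates[0]) if candidates else None


# Demonstrate on a throwaway directory instead of the real /etc/cni/net.d.
with tempfile.TemporaryDirectory() as conf_dir:
    for name in ("87-other.conflist", "10-flannel.conflist", "README.txt"):
        open(os.path.join(conf_dir, name), "w").close()
    chosen = os.path.basename(pick_cni_config(conf_dir))

print(chosen)  # 10-flannel.conflist
```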

When using containerd, the paths for the config and plugin binaries can be set in the [plugins."io.containerd.grpc.v1.cri".cni] section of the containerd configuration file.

Since we are using Flannel as our network provider, let's talk a little about setting it up:

- Flanneld (Flannel's daemon) is usually installed in the cluster as a DaemonSet with install-cni as an init container.

- Install-cni creates a CNI configuration file (/etc/cni/net.d/10-flannel.conflist) on each node.

- Flanneld creates a vxlan device, fetches network metadata from the API server, and monitors pods for updates. As they are created, it distributes routes for all pods throughout the cluster.

- These routes allow pods to communicate with each other using IP addresses.

For more information on how Flannel works, I recommend using the links at the end of the article.

Here is a diagram of the interaction between the Containerd CRI plugin and the CNI plugins:

As you can see above, kubelet calls the Containerd CRI plugin to create a pod, which in turn calls the CNI plugin to configure the pod's network. In doing so, the network provider's CNI plugin calls other basic CNI plugins to configure various aspects of the network.
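What "calling a CNI plugin" means in practice follows from the CNI specification: the runtime executes the plugin binary, passes parameters through CNI_* environment variables, and writes the network configuration as JSON to the plugin's standard input. The sketch below only builds such an invocation and does not execute anything; the helper name, container ID, and network namespace path are hypothetical values for illustration.

```python
import json
import os


def build_cni_invocation(plugin_type, container_id, netns_path, net_conf,
                         bin_dir="/opt/cni/bin", ifname="eth0"):
    """Assemble argv, environment and stdin for a CNI ADD call (hypothetical helper)."""
    argv = [os.path.join(bin_dir, plugin_type)]
    env = {
        "CNI_COMMAND": "ADD",            # the operation: add the container to the network
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns_path,         # network namespace of the pod
        "CNI_IFNAME": ifname,            # interface name to create inside the pod
        "CNI_PATH": bin_dir,             # where delegated plugins are looked up
    }
    stdin = json.dumps(net_conf).encode()
    return argv, env, stdin


argv, env, stdin = build_cni_invocation(
    plugin_type="flannel",
    container_id="example-container-id",      # made-up value
    netns_path="/var/run/netns/example",      # made-up value
    net_conf={
        "name": "cni0",
        "type": "flannel",
        "delegate": {"ipMasq": False, "hairpinMode": True, "isDefaultGateway": True},
    },
)
print(argv[0], env["CNI_COMMAND"])
# A runtime would then do roughly:
#   subprocess.run(argv, env=env, input=stdin, capture_output=True)
# and read the plugin's JSON result from stdout.
```

A plugin that delegates to another plugin, as the network provider's plugin does here, repeats the same pattern with a different binary and a different JSON document.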

Interaction between CNI plugins

There are various CNI plugins that are designed to help set up networking between containers on a host. This article will focus on three of them.

Flannel CNI Plugin

When using Flannel as the network provider, the Containerd CRI component invokes the Flannel CNI plugin using the CNI configuration file /etc/cni/net.d/10-flannel.conflist.

$ cat /etc/cni/net.d/10-flannel.conflist

{
  "name": "cni0",
  "plugins": [
    {
      "type": "flannel",
      "delegate": {
        "ipMasq": false,
        "hairpinMode": true,
        "isDefaultGateway": true
      }
    }
  ]
}

The Flannel CNI plugin works in conjunction with Flanneld. At startup, Flanneld fetches the podCIDR and other network-related details from the API server and saves them to the file /run/flannel/subnet.env.

FLANNEL_NETWORK=10.244.0.0/16

FLANNEL_SUBNET=10.244.0.1/24

FLANNEL_MTU=1450

FLANNEL_IPMASQ=false

The Flannel CNI plugin uses the data from /run/flannel/subnet.env to configure and invoke the bridge CNI plugin.
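A rough sketch of that step: read the key=value pairs from subnet.env, combine them with the delegate section of the conflist, and produce a configuration for the bridge plugin. This is a simplified illustration and not the Flannel plugin's actual code; the function names are hypothetical, and the merge rules (for example, defaulting the delegate type to bridge and always using host-local IPAM) are assumptions.

```python
import ipaddress
import json

# Contents of /run/flannel/subnet.env as shown in this article.
SUBNET_ENV = """\
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=false
"""


def parse_subnet_env(text):
    """Turn KEY=VALUE lines into a dict."""
    pairs = (line.split("=", 1) for line in text.splitlines() if "=" in line)
    return {key.strip(): value.strip() for key, value in pairs}


def build_delegate_config(network_name, delegate, env):
    """Merge the delegate section with values from subnet.env (simplified)."""
    conf = dict(delegate)
    conf.setdefault("type", "bridge")  # assumed default delegate plugin
    conf["name"] = network_name
    conf["mtu"] = int(env["FLANNEL_MTU"])
    # FLANNEL_SUBNET holds an address inside the node subnet; take its network.
    node_subnet = ipaddress.ip_interface(env["FLANNEL_SUBNET"]).network
    conf["ipam"] = {"type": "host-local", "subnet": str(node_subnet)}
    return conf


delegate = {"ipMasq": False, "hairpinMode": True, "isDefaultGateway": True}
bridge_conf = build_delegate_config("cni0", delegate, parse_subnet_env(SUBNET_ENV))
print(json.dumps(bridge_conf, indent=2))
```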

Bridge CNI Plugin

This plugin is called with the following configuration:

{
  "name": "cni0",
  "type": "bridge",
  "mtu": 1450,
  "ipMasq": false,
  "isGateway": true,
  "ipam": {
    "type": "host-local",
    "subnet": "10.244.0.0/24"
  }
}

On the first call, it creates a Linux bridge with the "name": "cni0" indicated in the config. Then a veth pair is created for each pod. One end of it connects to the container's network namespace, the other goes into the Linux bridge on the host's network. The Bridge CNI plugin connects all containers on the host to a Linux bridge on the host network.

When finished configuring the veth pair, the Bridge plugin invokes the host-local CNI IPAM plugin. The IPAM plugin type can be configured in the CNI config, which the CRI plugin uses to call the Flannel CNI plugin.

Host-local IPAM CNI Plugin

Bridge CNI calls the host-local IPAM CNI plugin with the following configuration:

{
  "name": "cni0",
  "ipam": {
    "type": "host-local",
    "subnet": "10.244.0.0/24",
    "dataDir": "/var/lib/cni/networks"
  }
}

The host-local IPAM (IP Address Management) plugin returns an IP address for the container from the subnet and records the allocated IP on the host in the directory specified by dataDir: /var/lib/cni/networks/<network-name=cni0>/<ip>. This file contains the ID of the container that is assigned this IP address.

When the host-local IPAM plugin is called, it returns the following data:

{
  "ip4": {
    "ip": "10.244.4.2",
    "gateway": "10.244.4.3"
  },
  "dns": {}
}
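The file-per-IP bookkeeping described above can be illustrated with a small sketch. It is a simplification under stated assumptions, not the plugin's real implementation: the function name is hypothetical, the first usable address is assumed to be reserved as the gateway, and locking and release of addresses are left out. A temporary directory stands in for /var/lib/cni/networks.

```python
import ipaddress
import os
import tempfile


def allocate_ip(data_dir, network_name, subnet, container_id):
    """Reserve the lowest free address by creating <data_dir>/<network>/<ip>."""
    net = ipaddress.ip_network(subnet)
    store = os.path.join(data_dir, network_name)
    os.makedirs(store, exist_ok=True)

    hosts = iter(net.hosts())
    gateway = next(hosts)  # assumption: first usable address belongs to the bridge
    for candidate in hosts:
        path = os.path.join(store, str(candidate))
        try:
            # Mode "x" fails if the file exists, so a taken address is skipped.
            with open(path, "x") as f:
                f.write(container_id)
        except FileExistsError:
            continue
        return {"ip": f"{candidate}/{net.prefixlen}", "gateway": str(gateway)}
    raise RuntimeError("no free addresses left in " + subnet)


with tempfile.TemporaryDirectory() as data_dir:
    first = allocate_ip(data_dir, "cni0", "10.244.0.0/24", "container-a")
    second = allocate_ip(data_dir, "cni0", "10.244.0.0/24", "container-b")
    stored = sorted(os.listdir(os.path.join(data_dir, "cni0")))

print(first)
print(second)
print(stored)
```

Keeping one file per address makes the allocation state easy to inspect on a node: listing the directory shows which IPs are taken, and each file names the container that holds it.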

Summary

Kube-controller-manager assigns podCIDR to each node. The pods of each node get IP addresses from the address space in the allocated podCIDR range. Since the podCIDRs of the nodes do not overlap, all pods receive unique IP addresses.

The Kubernetes cluster administrator configures and installs kubelet, the container runtime, and the network provider agent, and copies the CNI plugins to each node. During startup, the network provider's agent generates the CNI config. When a pod is scheduled to a node, kubelet calls the CRI plugin to create it. Then, if containerd is used, the Containerd CRI plugin calls the CNI plugin specified in the CNI config to configure the pod network. This gives the pod an IP address.

It took me a while to figure out all the subtleties of these interactions. I hope this write-up helps you better understand how Kubernetes works. If I am wrong about anything, please contact me on Twitter or at hello@ronaknathani.com. Feel free to reach out if you would like to discuss aspects of this article or anything else. I will be happy to talk to you!

Links

Containers and Network

How Flannel works

CRI and CNI
