Zeppelin is an interactive notebook that data engineers love. It works well with Spark and is great for interactive data analysis.

The project recently reached version 0.9.0-preview2 and is under active development, but many features are still unimplemented and waiting in the wings.

One of them is an API for finding out what is going on inside a notebook. There is an API that fully covers high-level notebook management, but if you need anything non-trivial, you are out of luck.

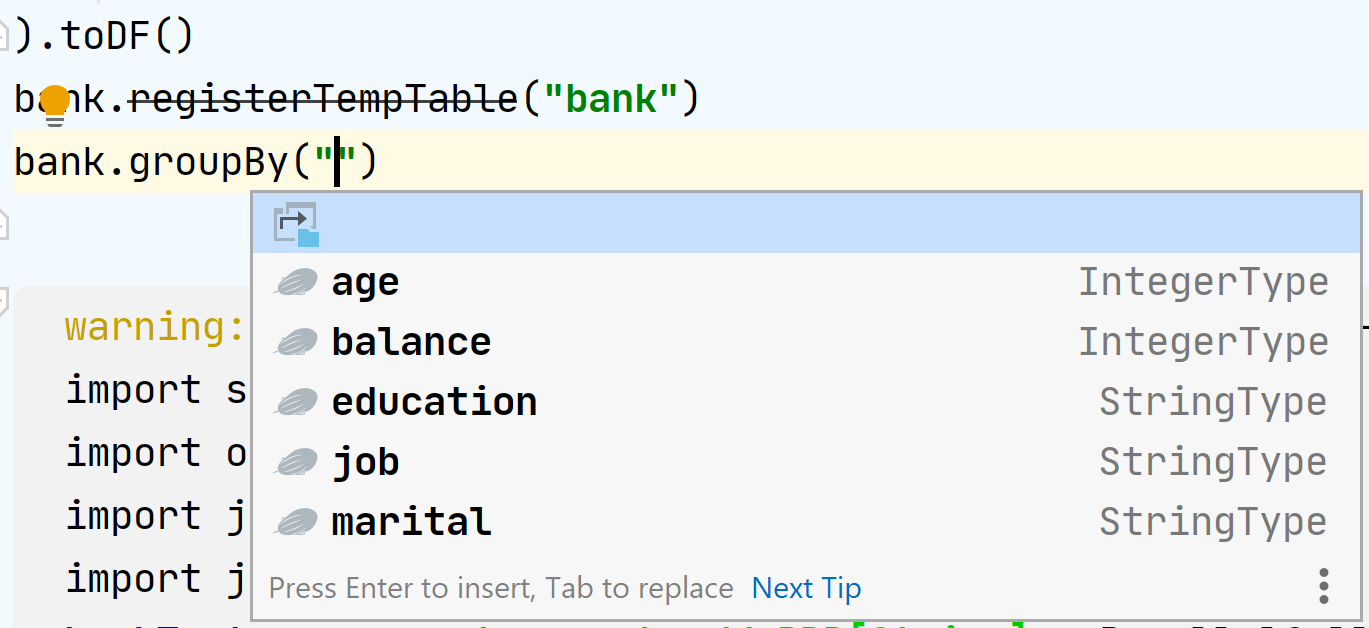

The developers of Big Data Tools ran into exactly this problem. Big Data Tools is a plugin for IntelliJ IDEA that integrates with Spark and Hadoop and lets you edit and run Zeppelin notebooks. Being able to create and delete notebooks is not enough to work comfortably in the IDE. The plugin needs a whole cartload of information to support features such as smart autocompletion.

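The obvious fix would be to extend the Zeppelin API itself and contribute the change on GitHub. That route had its drawbacks, though, so the developers chose to get the information without changing the API.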

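ZTools is a library that extracts information from Zeppelin without changes to its API. The source code is on GitHub under the Apache License 2.0. 90% of the code is in Scala, and the rest is in Java.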

(Part of it works with any Scala REPL, not just Zeppelin.)

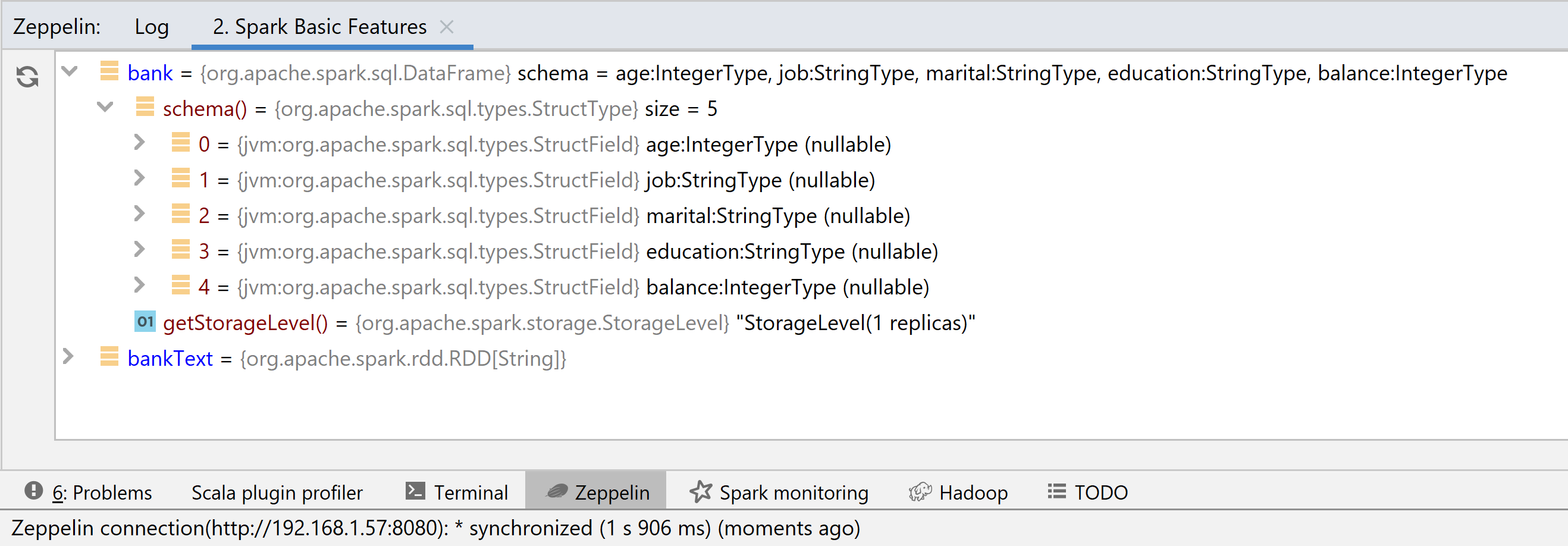

The screenshot above shows what ZTools makes possible: the Variables View in Big Data Tools. The plugin is available in IntelliJ IDEA Ultimate Edition.

With ZTools, Big Data Tools shows the variables defined in the notebook along with their types and values. It even works for a sql.DataFrame!

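So how does Big Data Tools get this information out of Zeppelin? The plugin's source code is not on GitHub. The way to find out is to look at the traffic between the IDE and the Zeppelin server.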

mitmproxy and Wireshark will do the job.

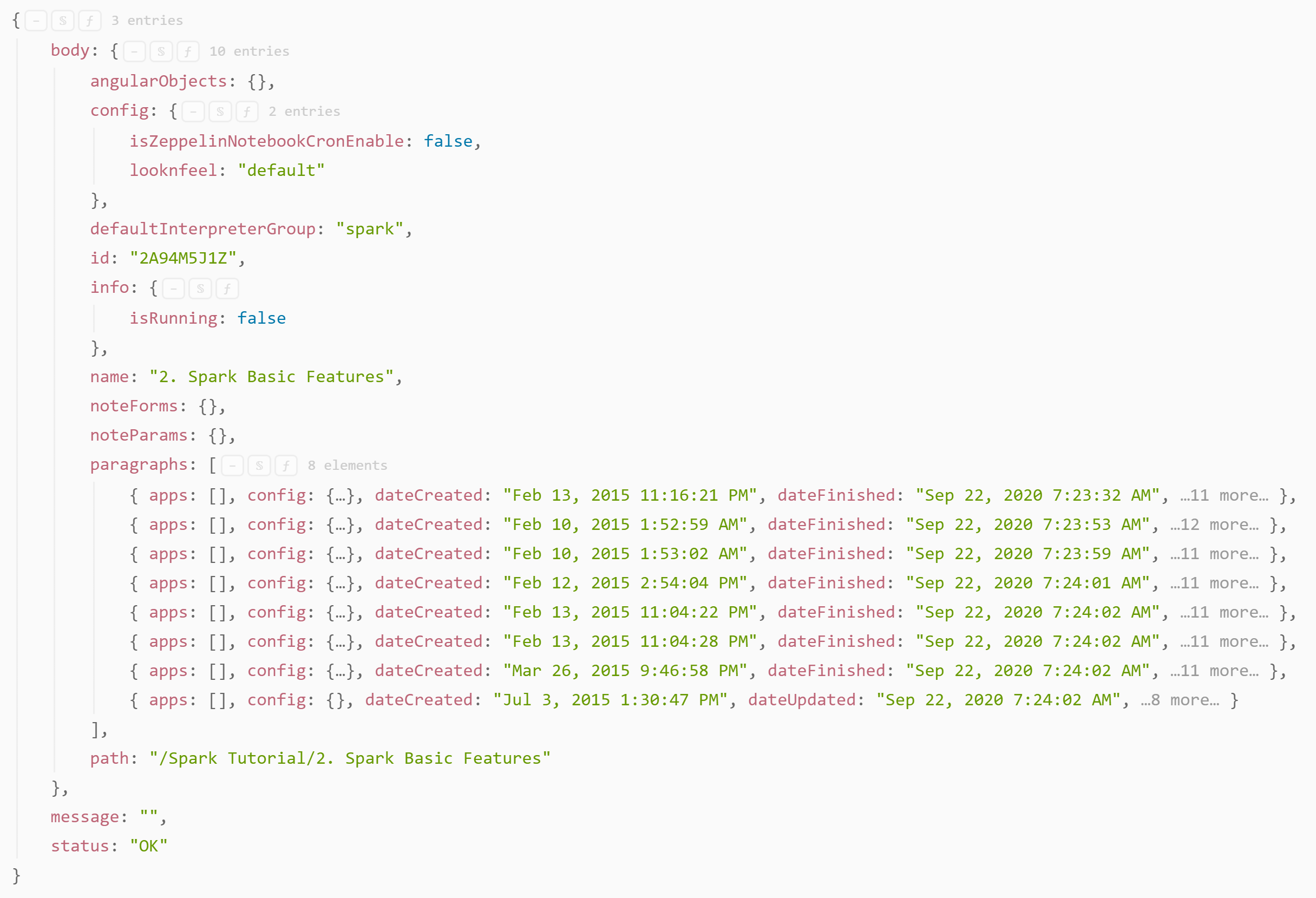

For the experiment, take one of the example notebooks that ship with Zeppelin, "Spark Basic Features".

mitmproxy shows that the plugin calls the Zeppelin API at /api/notebook.
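The TypeScript client later in this article relies on several REST endpoints under /api/notebook. The sketch below collects them in one place. zeppelinEndpoints is a hypothetical helper, and the server address passed in is an assumption:

```typescript
// Hypothetical helper: the Zeppelin REST endpoints used by the client in this article.
// `base` is the Zeppelin server address (an assumption, e.g. "http://localhost:8080").
function zeppelinEndpoints(base: string) {
  // Remove trailing slashes so the paths never contain "//".
  const notebook = `${base.replace(/\/+$/, "")}/api/notebook`;
  return {
    // GET: list of notes
    listNotes: (): string => notebook,
    // POST: add a paragraph to a note
    createParagraph: (noteId: string): string => `${notebook}/${noteId}/paragraph`,
    // POST: run a paragraph
    runParagraph: (noteId: string, parId: string): string =>
      `${notebook}/run/${noteId}/${parId}`,
    // GET: paragraph info and results; DELETE: remove the paragraph
    paragraph: (noteId: string, parId: string): string =>
      `${notebook}/${noteId}/paragraph/${parId}`,
  };
}
```

Keeping the paths together makes it easy to see that reading and deleting a paragraph hit the same URL with different HTTP methods.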

That alone does not explain where the information about variables comes from. Could the rest be going over WebSocket?

Wireshark can capture that part of the traffic too. The messages turn out to be plain JSON, so they are easy to read.

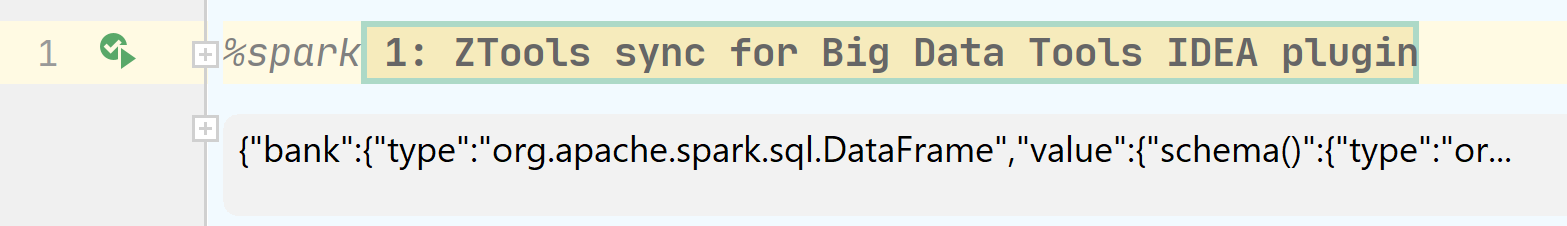

It turns out that Big Data Tools, running inside IntelliJ IDEA, executes a paragraph of its own in the notebook. Its text looks like this:

```scala
%spark
// It is generated code for integration with Big Data Tools plugin
// Please DO NOT edit it.
import org.jetbrains.ztools.spark.Tools
Tools.init($intp, 3, true)
println(Tools.getEnv.toJsonObject.toString)
println("----")
println(Tools.getCatalogProvider("spark").toJson)
```

The Tools object here comes from ZTools, a library whose source code is on GitHub.

Running this paragraph by hand shows a few things:

- its output lists the notebook's variables, such as bank and bankText;

- for this to work, ZTools has to be available to the Zeppelin interpreter, for example as an interpreter dependency;

- the Tools object comes from the ZTools library.

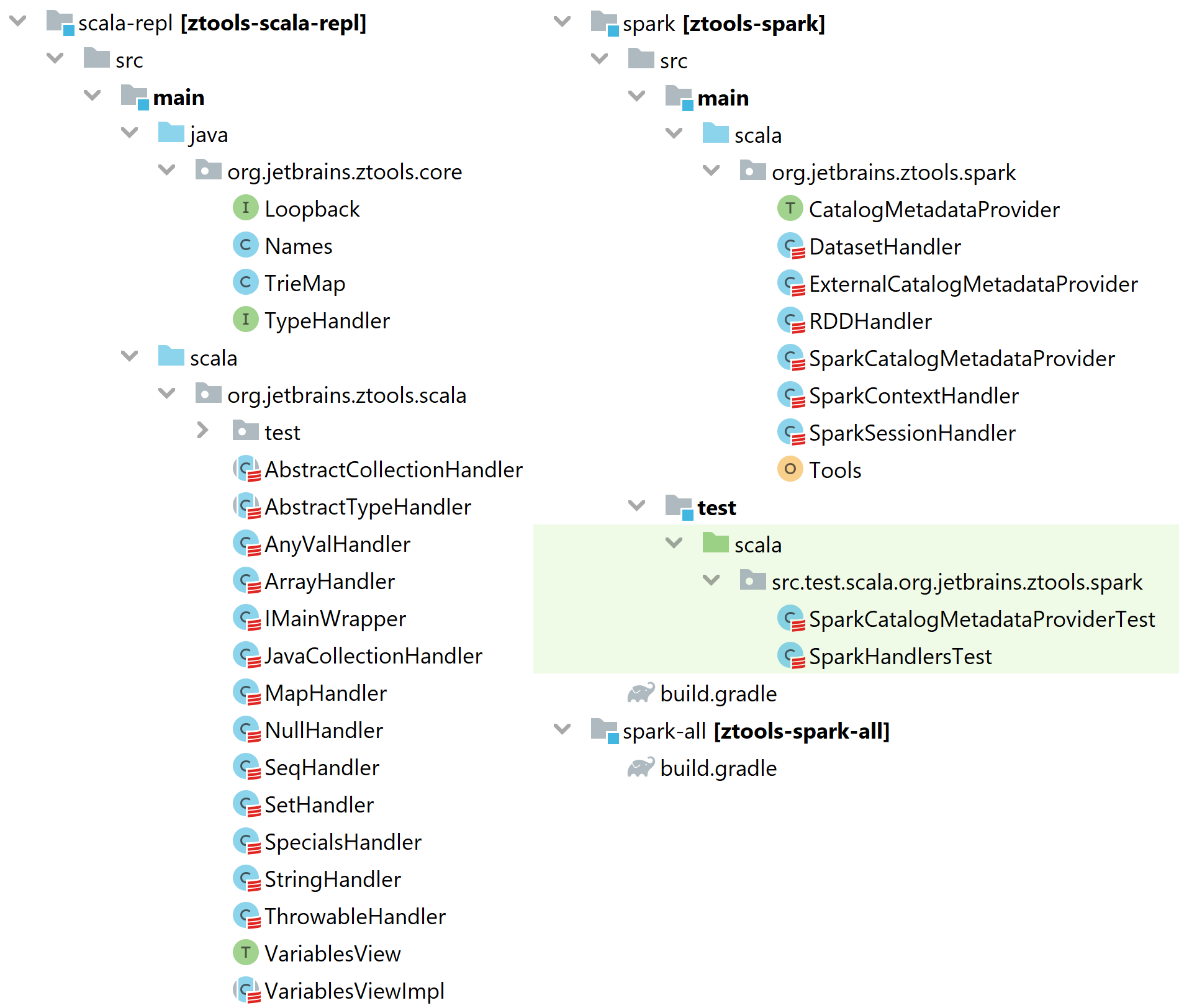

Now for a closer look at ZTools. Its source code is on GitHub, and the project consists of two modules: "scala-repl" and "spark".

Here is the paragraph code again:

```scala
%spark
// It is generated code for integration with Big Data Tools plugin
// Please DO NOT edit it.
import org.jetbrains.ztools.spark.Tools
Tools.init($intp, 3, true)
println(Tools.getEnv.toJsonObject.toString)
println("----")
println(Tools.getCatalogProvider("spark").toJson)
```

Tools.getCatalogProvider("spark") is built on Spark's Catalog API, such as Catalog.listTables(), and serializes what it collects to JSON.
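The paragraph prints two JSON documents separated by a line containing only ----. The first comes from Tools.getEnv and the second from the catalog provider. Below is a minimal TypeScript sketch of splitting that output. It assumes the interpreter may echo the import line first. parseToolsOutput is a hypothetical name, not part of ZTools:

```typescript
interface ToolsOutput {
  env: Record<string, unknown>;     // what Tools.getEnv printed
  catalog: Record<string, unknown>; // what the catalog provider printed
}

// Hypothetical helper: split the paragraph's text output into its two JSON parts.
function parseToolsOutput(raw: string): ToolsOutput {
  // Drop trailing whitespace (including "\r") and any echoed import line.
  const lines = raw
    .split("\n")
    .map((line) => line.replace(/\s+$/, ""))
    .filter((line) => line.indexOf("import org.jetbrains.ztools") !== 0);
  // The Scala code prints "----" on its own line between the two documents.
  const sep = lines.indexOf("----");
  if (sep < 0) {
    throw new Error('separator line "----" not found in paragraph output');
  }
  // JSON.parse tolerates the leading/trailing blank lines left over here.
  return {
    env: JSON.parse(lines.slice(0, sep).join("\n")),
    catalog: JSON.parse(lines.slice(sep + 1).join("\n")),
  };
}
```

Filtering lines instead of removing one exact substring makes the split tolerant of extra blank lines around the echoed import.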

Tools.getEnv gives access to a VariablesView, the object that describes the variables defined in the interpreter.

Here is a test that shows how VariablesView behaves:

```scala
@Test
def testSimpleVarsAndCollections(): Unit = {
  withRepl { intp =>
    // Define a variable in the REPL and ask for its description
    intp.eval("val x = 1")
    val view = intp.getVariablesView()
    assertNotNull(view)

    val json = view.toJsonObject
    println(json.toString(2))

    // The variable is described by two fields: "value" and "type"
    val x = json.getJSONObject("x")
    assertEquals(2, x.keySet.size)
    assertEquals(1, x.getInt("value"))
    assertEquals("Int", x.getString("type"))

    // x is the only variable defined
    assertEquals(1, json.keySet.size)
  }
}
```

The tests do not need Zeppelin. They use the standard Scala interpreter from the scala.tools.nsc.interpreter package directly.

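```scala
def withRepl[T](body: Repl => T): T = {
  // ...
  val iLoop = new ILoop(None, new JPrintWriter(Console.out, true))
  iLoop.intp = new IMain(iLoop.settings)
  // ...
}
```

ILoop is the interactive REPL loop, and IMain is the interpreter itself. IMain has an interpret(code) method that evaluates code passed in as a string.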

The VariablesView interface looks like this:

```scala
trait VariablesView {
  def toJson: String
  def toJsonObject: JSONObject
  def toJsonObject(path: String, deep: Int): JSONObject
  def variables(): List[String]
  def valueOfTerm(id: String): Option[Any]
  def registerTypeHandler(handler: TypeHandler): VariablesView
  def typeOfExpression(id: String): String
}
```

The list of variables comes straight from the interpreter, iMain:

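```scala
override def variables(): List[String] =
  iMain.definedSymbolList.filter { x => x.isGetter }.map(_.name.toString).distinct
```

Each variable is then described in JSON. toJsonObject() builds that description, which, as the test shows, contains the variable's type and value.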
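The one-liner keeps only getter symbols. Setters such as x_= generated for a var, and other non-getter symbols, therefore do not show up as separate variables. distinct presumably collapses names defined more than once, for example a variable redefined in a later paragraph. Below is the same logic as a TypeScript sketch. It works over a hypothetical DefinedSymbol record, not the real scala.reflect symbol type:

```typescript
// Hypothetical stand-in for an interpreter symbol; the Scala code works on
// the symbols returned by IMain.definedSymbolList.
interface DefinedSymbol {
  name: string;
  isGetter: boolean;
}

// Mirrors filter(_.isGetter).map(_.name).distinct from the Scala one-liner.
function variableNames(symbols: DefinedSymbol[]): string[] {
  const names = symbols.filter((s) => s.isGetter).map((s) => s.name);
  // Keep only the first occurrence of each name, like Scala's List.distinct.
  return names.filter((name, i) => names.indexOf(name) === i);
}
```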

-, — 100 — 400 . , ( Big Data Tools ) . , , - .

Tools also has special handling for Zeppelin's built-in variables: $intp, sc, spark, sqlContext, z and engine.

- ZTools extracts information from Zeppelin without changes to the Zeppelin API;


- ZTools does not need Zeppelin itself: it runs in a plain Scala REPL, which is also how it is tested;

- , Tools, : 400 , 100 JSON.

ZTools can also be used without IntelliJ IDEA. The example below is available on GitHub.

Instead of Java, the client is written in JavaScript (more precisely, TypeScript) for Node.js and uses Axios for HTTP requests.

First, find the ID of the “Spark Basic Features” note:

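```typescript
import axios from 'axios';

// Z_URL is the Zeppelin server address, defined elsewhere
const NOTE_LIST_URL = `${Z_URL}/api/notebook`;

const notes = await axios.get(NOTE_LIST_URL);
let noteId: string | null = null;
for (const item of notes.data.body) {
  if (item.path.indexOf('Spark Basic Features') >= 0) {
    noteId = item.id;
    break;
  }
}
```

Next, the text of the paragraph to run: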

```typescript
const PAR_TEXT = `%spark
import org.jetbrains.ztools.spark.Tools
Tools.init($intp, 3, true)
println(Tools.getEnv.toJsonObject.toString)
println("----")
println(Tools.getCatalogProvider("spark").toJson)`;
```

Create a temporary paragraph with this text at the top of the note:

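```typescript
const CREATE_PAR_URL = `${Z_URL}/api/notebook/${noteId}/paragraph`;
const par = await axios.post(CREATE_PAR_URL, {
  title: 'temp',
  text: PAR_TEXT,
  index: 0
});
// The response body holds the ID of the new paragraph
const parId: string = par.data.body;
```

Run it: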

```typescript
const RUN_PAR_URL = `${Z_URL}/api/notebook/run/${noteId}/${parId}`;
await axios.post(RUN_PAR_URL);
```
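Zeppelin's REST API wraps responses in a JSON envelope with status, message and body fields. For the create call, body is the ID of the new paragraph. The small helper below makes a missing body fail loudly instead of letting an undefined ID flow into the next URL. bodyOf is a hypothetical name, not part of any library:

```typescript
// Only the envelope fields used here are modeled.
interface ZeppelinResponse<T> {
  status: string;
  message?: string;
  body?: T;
}

// Hypothetical helper: return `body`, or throw with Zeppelin's own message.
function bodyOf<T>(resp: ZeppelinResponse<T>, what: string): T {
  if (resp.body === undefined || resp.body === null) {
    const reason = resp.message ? `: ${resp.message}` : "";
    throw new Error(`${what} returned no body (status ${resp.status})${reason}`);
  }
  return resp.body;
}
```

For example, the paragraph ID could be read as `const parId = bodyOf<string>(par.data, 'create paragraph');`.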

Fetch the paragraph together with its results:

```typescript
const INFO_PAR_URL = `${Z_URL}/api/notebook/${noteId}/paragraph/${parId}`;
const { data } = await axios.get(INFO_PAR_URL);
```

Delete the temporary paragraph, since it is no longer needed:

```typescript
const DEL_PAR_URL = `${Z_URL}/api/notebook/${noteId}/paragraph/${parId}`;
await axios.delete(DEL_PAR_URL);
```

Parse the JSON:

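```typescript
// The output starts with the echoed import line;
// the two JSON documents are separated by a "----" line
const [varInfoData, dbInfoData] = data.body.results.msg[0].data
  .replace('\nimport org.jetbrains.ztools.spark.Tools\n', '')
  .split('\n----\n');
const varInfo = JSON.parse(varInfoData);
const dbInfo = JSON.parse(dbInfoData);
```

Print the variables and their types: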

```typescript
for (const [key, { type }] of Object.entries(varInfo)) {
  console.log(`${key} : ${type}`);
}
```

Then print the databases with their tables and columns:

```typescript
for (const database of Object.values(dbInfo.databases)) {
  console.log(`Database: ${database.name} (${database.description})`);
  for (const table of database.tables) {
    const columnsJoined = table.columns
      .map((col: any) => `${col.name}/${col.dataType}`)
      .join(', ');
    console.log(`${table.name} : [${columnsJoined}]`);
  }
}
```
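The steps above leave the temporary paragraph in the note if running it fails halfway. The sketch below wraps them in try/finally. It assumes a hypothetical ParagraphClient interface whose methods would each wrap one of the axios calls shown above:

```typescript
// Hypothetical client: each method would wrap one REST call from the example.
interface ParagraphClient {
  create(noteId: string, text: string): Promise<string>; // returns the paragraph ID
  run(noteId: string, parId: string): Promise<void>;
  output(noteId: string, parId: string): Promise<string>; // results.msg[0].data
  remove(noteId: string, parId: string): Promise<void>;
}

// Create a temporary paragraph, run it and read its output,
// deleting it afterwards even if running or reading throws.
async function runTempParagraph(
  client: ParagraphClient,
  noteId: string,
  text: string,
): Promise<string> {
  const parId = await client.create(noteId, text);
  try {
    await client.run(noteId, parId);
    return await client.output(noteId, parId);
  } finally {
    await client.remove(noteId, parId);
  }
}
```

With this structure, a failed run still removes the 'temp' paragraph instead of leaving it in the user's notebook.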

For all this to work, Zeppelin's Spark interpreter needs ZTools as a dependency. You can add it from a Maven repository or as a JAR file:

- repository: https://repo.labs.intellij.net/big-data-ide

- artifact: org.jetbrains.ztools:ztools-spark-all:0.0.13

- JAR file: https://dl.bintray.com/jetbrains/zeppelin-dependencies/org/jetbrains/ztools/ztools-spark-all/0.0.13/ztools-spark-all-0.0.13.jar
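The JAR link follows the standard Maven repository layout, so it can be derived from the artifact coordinates. In the sketch below, mavenJarUrl is a hypothetical helper that handles only plain group:artifact:version coordinates, with no classifier or packaging:

```typescript
// Maven repository layout:
//   <repo>/<groupId, dots as slashes>/<artifactId>/<version>/<artifactId>-<version>.jar
function mavenJarUrl(repo: string, coordinates: string): string {
  const parts = coordinates.split(":");
  if (parts.length !== 3 || parts.some((p) => p.length === 0)) {
    throw new Error(`expected group:artifact:version, got "${coordinates}"`);
  }
  const [groupId, artifactId, version] = parts;
  const root = repo.replace(/\/+$/, "");
  const groupPath = groupId.split(".").join("/");
  return `${root}/${groupPath}/${artifactId}/${version}/${artifactId}-${version}.jar`;
}

// Prints the JAR URL listed above
console.log(
  mavenJarUrl(
    "https://dl.bintray.com/jetbrains/zeppelin-dependencies",
    "org.jetbrains.ztools:ztools-spark-all:0.0.13",
  ),
);
```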

You can also build ZTools yourself with Gradle. The resulting JAR is distributed under the Apache License 2.0.

Big Data Tools uses ZTools to get information out of Zeppelin. To sum up:

- ZTools extracts information from Zeppelin without changes to the Zeppelin API;

- it is open source, available under the Apache License 2.0;


- you can see it at work in Big Data Tools.