Sooner or later, any company whose business rests on a knowledge-intensive service model or on technically complex products faces the task of building IT platforms for accumulating and analyzing data. Building analytical platforms is difficult and time-consuming, but any task can be simplified. In this article, I want to share my experience of using low-code tools to build analytical solutions. This experience was gained on a number of projects in the Big Data Solutions practice at Neoflex. Since 2005, this practice has been building data warehouses and data lakes, optimizing data processing speed, and developing a data quality management methodology.

No company can avoid deliberately accumulating data, whether loosely or highly structured, and that probably holds even for a small business. As the business scales, a promising entrepreneur will need to develop a loyalty program, want to analyze the effectiveness of points of sale, think about targeted advertising, and wonder about demand for complementary products. As a first approximation, the problem can be solved with a makeshift solution. But as the business grows, arriving at an analytical platform is inevitable.

But when do data analytics tasks grow into "Rocket Science" problems? Probably when truly big data is involved.

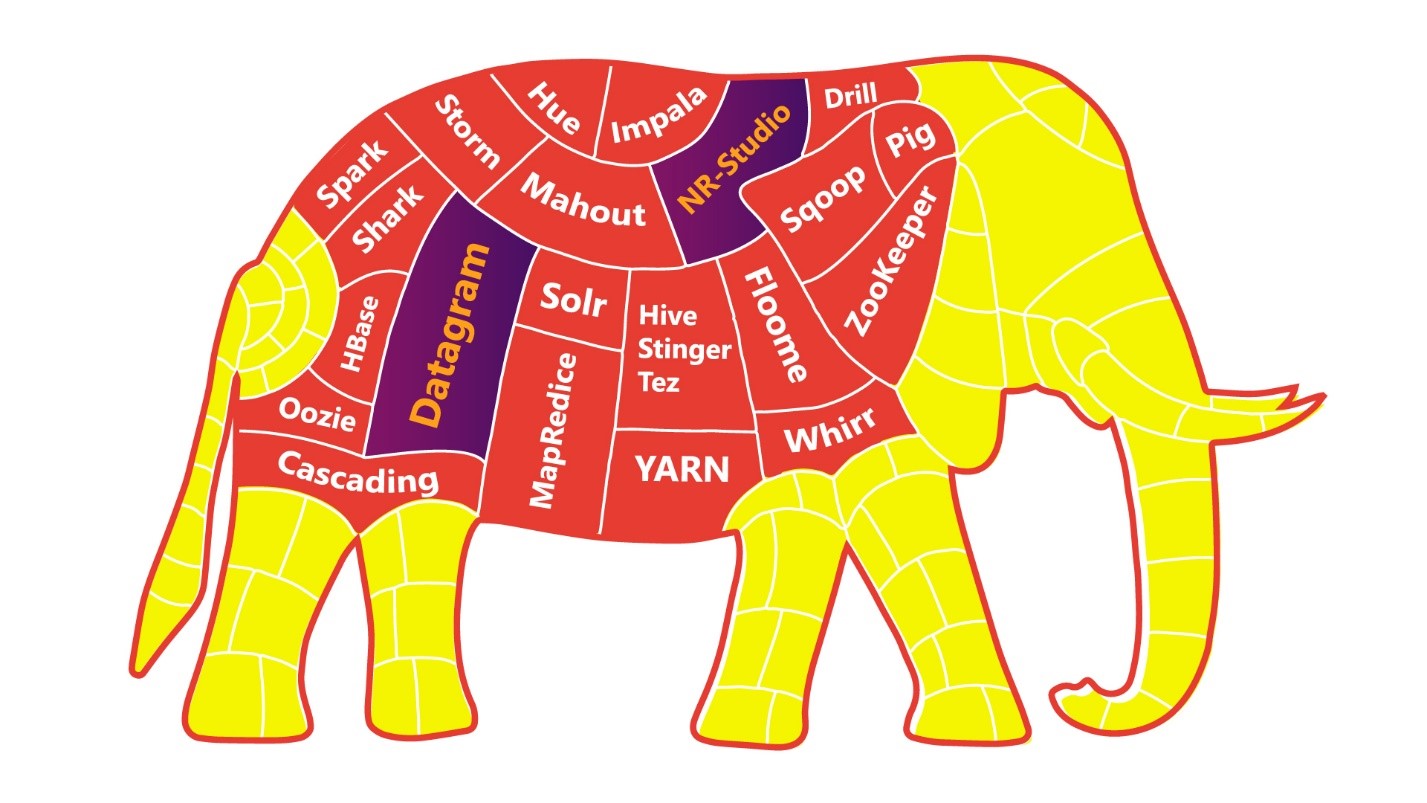

To simplify the Rocket Science task, you can eat the elephant piece by piece.

The more discrete and autonomous your applications / services / microservices are, the easier it will be for you, your colleagues and the whole business to digest the elephant.

Almost all of our clients arrived at this principle after rebuilding their landscape around the engineering practices of DevOps teams.

But even with a "split elephant" diet, there is a good chance of "oversaturating" the IT landscape. At this point, it is worth stopping, exhaling and looking towards a low-code engineering platform.

Many developers fear that moving away from writing code directly towards "dragging" arrows in the UI of low-code systems will lead their careers to a dead end. But the arrival of machine tools did not make engineers disappear; it took their work to a new level!

Let's figure out why.

Data analysis in logistics, telecom, media research and the financial sector always raises the following questions:

- The speed of automated analysis;

- The ability to conduct experiments without affecting the main flow of data production;

- The reliability of the prepared data;

- Tracking changes and versioning;

- Data provenance, Data lineage, CDC;

- Fast delivery of new features to the production environment;

- And the proverbial cost of development and support.

That is, engineers face a huge number of high-level tasks that they can handle efficiently only if their minds are freed from low-level development tasks.

The evolution and digitalization of business became the prerequisites for developers moving to a new level. What makes a developer valuable is also changing: there is a significant shortage of developers who can dive deep into the concepts of the business being automated.

Let's draw an analogy with low-level and high-level programming languages. The transition from low-level to high-level languages is a transition from writing "direct instructions in the language of hardware" to "instructions in the language of people", that is, the addition of a layer of abstraction. Likewise, the transition from high-level programming languages to low-code platforms is a transition from “instructions in the language of people” to “instructions in the language of business”. If this saddens some developers, they have probably been sad ever since JavaScript appeared with its built-in array sorting functions. And those functions, of course, are implemented under the hood by other means of the same high-level programming.

Therefore, low-code is just the emergence of another layer of abstraction.

Applied experience of using low-code

The topic of low-code is quite broad, but here I would like to discuss the practical application of low-code concepts using one of our projects as an example.

The Big Data Solutions division of Neoflex specializes largely in the financial sector, building data warehouses and data lakes and automating various kinds of reporting. In this niche, low-code has long been a standard. Examples of such low-code tools include tools for organizing ETL processes: Informatica Power Center, IBM Datastage, Pentaho Data Integration. Another is Oracle Apex, an environment for rapid development of interfaces for accessing and editing data. However, using low-code development tools does not always mean building narrowly targeted applications on a commercial technology stack with pronounced vendor lock-in.

Using low-code platforms, you can also organize the orchestration of data streams, create data-science platforms or, for example, data quality control modules.

One practical example of using low-code development tools is the collaboration between Neoflex and Mediascope, one of the leaders in the Russian media research market. One of this company's business objectives is to produce the data on which advertisers, websites, TV channels, radio stations, advertising agencies and brands base their decisions to buy advertising and plan their marketing communications.

Media research is a technology-intensive business area. Recognizing video sequences, collecting data from devices that analyze viewing, measuring activity on web resources: all this implies that a company has a large IT staff and tremendous experience in building analytical solutions. But the exponential growth in the volume of information and in the number and variety of its sources forces the data side of IT to keep progressing. The simplest way to scale Mediascope's already functioning analytical platform would be to increase the IT staff. But a much more efficient solution is to speed up the development process. One of the steps in this direction can be the use of low-code platforms.

When the project started, the company already had a functioning production solution. However, its implementation on MSSQL could not fully meet expectations for scaling the functionality at an acceptable cost of further development.

The task before us was truly ambitious: Neoflex and Mediascope had to create a production-grade solution in less than a year, with the MVP released within the first quarter after the start date.

The Hadoop technology stack was chosen as the foundation for the new data platform built on low-code computations. HDFS with Parquet files became the data storage standard. Data in the platform is accessed through Hive, where all available data marts are exposed as external tables. Loading data into the storage was implemented using Kafka and Apache NiFi.
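To make the "data marts as Hive external tables over Parquet" idea concrete, here is a minimal sketch that builds such a DDL statement. The table name, columns and HDFS path are hypothetical and are not taken from the project; only the `CREATE EXTERNAL TABLE ... STORED AS PARQUET LOCATION ...` form is standard Hive syntax.

```python
def external_table_ddl(table, columns, location, partition_by=None):
    """Build a Hive CREATE EXTERNAL TABLE statement over Parquet files in HDFS."""
    cols = ",\n  ".join(f"`{name}` {hive_type}" for name, hive_type in columns)
    ddl = f"CREATE EXTERNAL TABLE IF NOT EXISTS {table} (\n  {cols}\n)"
    if partition_by:
        parts = ", ".join(f"`{name}` {hive_type}" for name, hive_type in partition_by)
        ddl += f"\nPARTITIONED BY ({parts})"
    # The table only points at the files; dropping it leaves the data in HDFS.
    ddl += f"\nSTORED AS PARQUET\nLOCATION '{location}'"
    return ddl


# Hypothetical data mart, used only for illustration.
ddl = external_table_ddl(
    "marts.tv_viewing",
    [("respondent_id", "BIGINT"), ("channel_id", "INT"), ("duration_sec", "INT")],
    "hdfs:///data/marts/tv_viewing",
    partition_by=[("calc_date", "STRING")],
)
print(ddl)
```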

The low-code tool in this concept was used to optimize the most labor-intensive task in building an analytical platform: the data calculation task.

The low-code Datagram tool was chosen as the main mechanism for data mapping. Neoflex Datagram is a tool for designing transformations and data streams.

By using this tool, you can avoid writing Scala code "by hand". Scala code is generated automatically using the Model Driven Architecture approach.

An obvious plus of this approach is the acceleration of the development process. However, in addition to speed, there are also the following advantages:

- Viewing the content and structure of sources / destinations;

- Tracking the origin of data flow objects to individual fields (lineage);

- Partial execution of transformations with viewing intermediate results;

- Viewing the source code and correcting it before execution;

- Automatic validation of transformations;

- Automatic one-to-one data loading.

The entry threshold for low-code solutions that generate transformations is quite low: the developer needs to know SQL and have experience with ETL tools. It should be noted that code-driven transformation generators are not ETL tools in the broad sense of the word. Low-code tools may not have their own code execution environment. That is, the generated code runs in the environment that existed on the cluster before the low-code solution was installed. And this is perhaps another plus for low-code's karma, because a “classic” team that implements functionality in, for example, pure Scala code can work in parallel with the low-code team. Bringing the work of both teams into production will be simple and seamless.

It is probably worth noting that, besides low-code, there are also no-code solutions, and the two are essentially different things. Low-code gives the developer more room to intervene in the generated code. In the case of Datagram, the generated Scala code can be viewed and edited, whereas no-code may not offer that option. This difference is very significant not only for the flexibility of the solution, but also for the comfort and motivation of data engineers.

Solution architecture

Let's try to figure out exactly how a low-code tool helps speed up the development of data calculation functionality. First, let's look at the functional architecture of the system, using the data production model for media research as the example.

Data sources in our case are very heterogeneous and diverse:

- Web measurements, both site-centric and user-centric, with Data Lake data coming from a research bar and VPN.

The as-is load from source systems into the primary staging area for raw data can be organized in various ways. If low-code is used for this purpose, load scripts can be generated automatically from metadata. In that case, there is no need to descend to the level of developing source-to-target mappings. To implement automatic loading, we need to establish a connection with the source and then define, in the loading interface, the list of entities to be loaded. The directory structure in HDFS is created automatically and corresponds to the data storage structure in the source system.

However, on this project we decided not to use this capability of the low-code platform, because Mediascope had already begun building a similar service of its own on the NiFi + Kafka combination.

It should be noted right away that these tools are not interchangeable but complementary. NiFi and Kafka can work together both in the direct (NiFi -> Kafka) and in the reverse (Kafka -> NiFi) combination. The media research platform used the first option.

In our case, NiFi needed to process various types of data from source systems and send them to the Kafka broker. Messages were routed to a specific Kafka topic using the PublishKafka NiFi processors. These pipelines are orchestrated and maintained in a visual interface. NiFi and the NiFi + Kafka combination can also be called a low-code approach to development: it has a low entry threshold into Big Data technologies and accelerates application development.
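The idea of metadata-driven loading can be sketched roughly as follows: given a list of source entities, derive both the extraction query and an HDFS landing directory that mirrors the source structure. This is only an illustration; the names `SourceEntity`, `build_load_plan` and the `/data/stage` root are hypothetical and say nothing about how Datagram implements it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceEntity:
    schema: str
    table: str


def hdfs_landing_path(root, source, entity):
    """Mirror the source layout (system / schema / table) in the HDFS staging area."""
    return f"{root}/{source}/{entity.schema}/{entity.table}".lower()


def build_load_plan(root, source, entities):
    """One 1:1 load step per entity, derived purely from metadata."""
    return [
        {
            "query": f"SELECT * FROM {e.schema}.{e.table}",
            "target": hdfs_landing_path(root, source, e),
        }
        for e in entities
    ]


# Hypothetical source system and entities.
plan = build_load_plan(
    "/data/stage", "crm",
    [SourceEntity("dbo", "Panel"), SourceEntity("dbo", "Households")],
)
for step in plan:
    print(step["query"], "->", step["target"])
```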

The next stage of the project was bringing the detailed data into the format of a single semantic layer. If an entity has historical attributes, the calculation is performed for the partition in question. If the entity is not historical, it is possible either to recalculate the entire contents of the object or to skip recalculating it altogether (because nothing has changed). At this stage, keys are generated for all entities. The keys are saved in HBase directories corresponding to the master objects; these directories hold the correspondence between keys in the analytical platform and keys from the source systems. Consolidation of atomic entities is accompanied by enrichment with the results of preliminary calculation of analytical data. Spark was the framework for calculating data. This conversion of data to a single semantics was also implemented with mappings in the low-code tool Datagram.

The target architecture required SQL access to data for business users. Hive was used for this purpose. Objects are registered in Hive automatically when the “Registr Hive Table” option is enabled in the low-code tool.
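The two rules described here, choosing the recalculation scope and keeping a stable correspondence between source keys and platform keys, can be sketched as below. An in-memory dictionary stands in for the HBase directory, and all names (`KeyRegistry`, `recalculation_scope`, the `force_full` flag) are assumptions made for the illustration.

```python
import itertools


class KeyRegistry:
    """In-memory stand-in for the key directory of one master object."""

    def __init__(self):
        self._by_source = {}
        self._next_key = itertools.count(1)

    def platform_key(self, source_system, source_key):
        """Return the existing platform key or issue a new one."""
        natural = (source_system, source_key)
        if natural not in self._by_source:
            self._by_source[natural] = next(self._next_key)
        return self._by_source[natural]


def recalculation_scope(is_historical, partition, has_changes, force_full=False):
    """Historical entities are recalculated per partition; others fully or not at all."""
    if is_historical:
        return f"partition:{partition}"
    if has_changes or force_full:
        return "full"
    return "skip"


registry = KeyRegistry()
first = registry.platform_key("crm", "42")
again = registry.platform_key("crm", "42")     # same source key, same platform key
other = registry.platform_key("panel", "42")   # same id in another system, new key
print(first, again, other)
print(recalculation_scope(True, "2020-05-01", has_changes=False))
print(recalculation_scope(False, None, has_changes=False))
```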

Calculation flow control

Datagram has an interface for designing workflows. Mappings can be launched using the Oozie scheduler. In the workflow designer, data transformations can be arranged to run in parallel, sequentially, or depending on specified conditions. Shell scripts and Java programs are supported. It is also possible to use the Apache Livy server, which is used to run applications directly from the development environment.

If the company already has its own process orchestrator, the REST API can be used to embed mappings into an existing flow. For example, we had a fairly successful experience of embedding Scala mappings into orchestrators written in PLSQL and Kotlin. The REST API of the low-code tool provides operations such as generating an executable jar from a mapping design, calling a mapping, calling a sequence of mappings and, of course, passing parameters in the URL to launch mappings.

Along with Oozie, the calculation flow can be organized using Airflow. I will not dwell on comparing Oozie and Airflow; I will simply say that on the media research project the choice fell on Airflow. The main arguments this time were a more active community developing the product and a more mature interface and API.

Airflow is also good because it uses the much-loved Python to describe calculation processes. In general, there are not that many open source workflow management platforms. Launching processes and monitoring their execution (including with a Gantt chart) only add points to Airflow's karma.

The configuration file format for running mappings of the low-code solution is spark-submit. There are two reasons for this. First, spark-submit lets you run a jar file directly from the console. Second, it can contain all the information needed to configure the workflow, which makes it easier to write the scripts that build the DAG.
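As an illustration of "passing parameters in the URL", an external orchestrator might assemble the call like this. The host, the `/mappings/<name>/run` path and the parameter names are entirely hypothetical; this is not the documented Datagram API, only a sketch of safe URL construction.

```python
from urllib.parse import quote, urlencode


def mapping_run_url(base_url, mapping_name, params):
    """Build a launch URL: the mapping name goes into the path, parameters into the query."""
    query = urlencode(sorted(params.items()))
    path_name = quote(mapping_name, safe="")  # escape spaces and slashes in the name
    return f"{base_url.rstrip('/')}/mappings/{path_name}/run?{query}"


# Hypothetical endpoint and parameters.
url = mapping_run_url(
    "http://datagram.example:8089/api",
    "tv viewing/detail",
    {"region": "MSK", "calc_date": "2020-05-01"},
)
print(url)
```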
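A rough sketch of how one mapping's configuration could be turned into a spark-submit call. The `--master`, `--class` and `--conf` options are real spark-submit options; the dictionary layout, the class name, the jar path and the `NAME=VALUE` form of the application arguments are assumptions for the example.

```python
import shlex


def spark_submit_command(cfg):
    """Turn one mapping's configuration into a spark-submit argument list."""
    cmd = ["spark-submit", "--master", cfg["master"], "--class", cfg["main_class"]]
    for key, value in sorted(cfg.get("conf", {}).items()):
        cmd += ["--conf", f"{key}={value}"]
    cmd.append(cfg["jar"])  # the application jar follows the options
    # Application arguments follow the jar; the NAME=VALUE form is an assumption.
    cmd += [f"{name}={value}" for name, value in cfg.get("args", {}).items()]
    return cmd


# Hypothetical configuration of a packaged mapping.
config = {
    "master": "yarn",
    "main_class": "com.example.mappings.TvViewingDetail",
    "jar": "/opt/mappings/tv_viewing_detail.jar",
    "conf": {"spark.executor.memory": "4g"},
    "args": {"CALC_DATE": "2020-05-01"},
}
command = spark_submit_command(config)
print(" ".join(shlex.quote(part) for part in command))
```

Keeping the configuration as plain data is what makes it easy for a script to loop over many mappings and build one workflow task per entry.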

The most common element of the Airflow workflow in our case is the SparkSubmitOperator.

SparkSubmitOperator allows you to run jar files, that is, packaged Datagram mappings with input parameters prepared for them in advance.

It should be mentioned that each Airflow task runs independently and knows nothing about the other tasks. For this reason, the flow between tasks is controlled with operators such as DummyOperator or BranchPythonOperator.

Taken together, using the Datagram low-code solution along with standardized configuration files (from which the DAG is built) significantly accelerated and simplified the development of data loading flows.
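The branching idea can be shown without Airflow itself. In Airflow, a function like `choose_branch` below would be passed as the `python_callable` of a BranchPythonOperator and must return the task_id to follow; here a small dictionary imitates the task graph, and a `join` node plays the role of a DummyOperator. The task names and the row-count condition are hypothetical.

```python
def choose_branch(new_rows):
    """Return the task_id of the branch to follow."""
    return "run_mart_mapping" if new_rows > 0 else "nothing_to_do"


# Downstream task_ids per task; "join" does no work and only merges the branches.
DOWNSTREAM = {
    "check_input": ["run_mart_mapping", "nothing_to_do"],
    "run_mart_mapping": ["join"],
    "nothing_to_do": ["join"],
    "join": [],
}


def executed_path(new_rows):
    """Walk the graph the way a branch does: only the chosen branch runs."""
    path = ["check_input"]
    current = choose_branch(new_rows)
    while True:
        path.append(current)
        following = DOWNSTREAM[current]
        if not following:
            return path
        current = following[0]


print(executed_path(10))
print(executed_path(0))
```

In a real DAG the joining task usually needs a non-default trigger rule, otherwise the skipped branch would cause it to be skipped as well.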

Data mart calculation

Perhaps the most intellectually demanding stage in the production of analytical data is building the data marts. In one of the research company's data flows, this stage brings the data to a reference broadcast, correcting for time zones and aligning with the broadcast schedule. It can also adjust for the local broadcast schedule (local news and advertising). Among other things, this step splits the continuous viewing intervals of media products based on analysis of the viewing intervals. At the same step, the viewing values are "weighted" according to information about their significance (calculation of a correction factor).

Data validation is a separate step in preparing data marts. The validation algorithm uses a number of mathematical models. Using a low-code platform nevertheless allows the complex algorithm to be broken down into a number of separate, visually readable mappings, each of which performs a narrow task. As a result, intermediate debugging, logging and visualization of the data preparation stages become possible.

It was decided to split the validation algorithm into the following sub-stages:
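One possible reading of these steps, as a simplified sketch: shift a viewing interval from the viewer's local time to the reference time zone, cut it along the broadcast schedule, and apply a weight. The UTC offsets, the schedule, the programme names and the weight of 1.5 are made up for the example, and the real algorithm is certainly richer.

```python
from datetime import datetime, timedelta


def to_reference_time(local_dt, local_utc_offset, reference_utc_offset):
    """Shift a local timestamp to the reference time zone (offsets in hours)."""
    return local_dt + timedelta(hours=reference_utc_offset - local_utc_offset)


def split_by_schedule(start, end, schedule):
    """Cut one continuous viewing interval into per-programme pieces."""
    pieces = []
    for slot_start, slot_end, programme in schedule:
        piece_start, piece_end = max(start, slot_start), min(end, slot_end)
        if piece_start < piece_end:  # the interval overlaps this slot
            pieces.append((programme, piece_start, piece_end))
    return pieces


def weighted_seconds(pieces, weight):
    """Sum viewing seconds per programme, scaled by a correction factor."""
    totals = {}
    for programme, piece_start, piece_end in pieces:
        seconds = (piece_end - piece_start).total_seconds()
        totals[programme] = totals.get(programme, 0.0) + seconds * weight
    return totals


# Hypothetical schedule in reference time (UTC+3).
schedule = [
    (datetime(2020, 5, 1, 18, 30), datetime(2020, 5, 1, 19, 0), "News"),
    (datetime(2020, 5, 1, 19, 0), datetime(2020, 5, 1, 20, 30), "Film"),
]
# A viewer in UTC+5 watched from 20:50 to 21:20 local time.
start = to_reference_time(datetime(2020, 5, 1, 20, 50), 5, 3)
end = to_reference_time(datetime(2020, 5, 1, 21, 20), 5, 3)
pieces = split_by_schedule(start, end, schedule)
totals = weighted_seconds(pieces, weight=1.5)
print(totals)
```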

- Building regressions of a television network's viewing in a region against the viewing of all networks in that region over 60 days.

- Calculating studentized residuals (deviations of the actual values from those predicted by the regression model) for all regression points and for the day being calculated.

- Selecting anomalous region-network pairs, where the studentized residual of the day being calculated exceeds the norm (set in the operation's settings).

- Recalculating the corrected studentized residual for anomalous region-network pairs for each respondent who watched the network in the region, and determining the respondent's contribution (the change in the studentized residual) when that respondent is excluded from the sample.

- Searching for candidates whose exclusion brings the studentized residual of the day being calculated back to normal.

The example above confirms the hypothesis that a data engineer already has plenty to keep in their head anyway... And if this is truly an “engineer” and not a “coder”, the fear of professional degradation from using low-code tools should finally recede.
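The first three sub-stages can be sketched in plain Python: fit a simple linear regression, compute internally studentized residuals r_i = e_i / (s * sqrt(1 - h_ii)), and flag the points whose residual exceeds a threshold. The data (10 days instead of 60), the threshold of 2.5 and the function names are assumptions for the illustration; the per-respondent recalculation is not shown.

```python
from math import sqrt


def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    return slope, mean_y - slope * mean_x


def studentized_residuals(xs, ys):
    """Internally studentized residuals of a simple linear regression."""
    n = len(xs)
    slope, intercept = fit_line(xs, ys)
    mean_x = sum(xs) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    s = sqrt(sum(e * e for e in residuals) / (n - 2))  # residual standard error
    result = []
    for x, e in zip(xs, residuals):
        leverage = 1 / n + (x - mean_x) ** 2 / sxx  # h_ii
        result.append(e / (s * sqrt(1 - leverage)))
    return result


def anomalous_points(xs, ys, threshold):
    """Indices whose studentized residual exceeds the configured norm."""
    return [i for i, r in enumerate(studentized_residuals(xs, ys)) if abs(r) > threshold]


# Made-up data: viewing of all networks (x) and of one network (y) in a region.
xs = [100.0, 110.0, 120.0, 130.0, 140.0, 150.0, 160.0, 170.0, 180.0, 190.0]
ys = [x / 5 for x in xs]
ys[5] += 5.0  # one suspicious day
flagged = anomalous_points(xs, ys, threshold=2.5)
print(flagged)
```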

What else can low-code do?

The scope of a low-code tool for batch and stream data processing without manually writing Scala code does not end there.

Using low-code to develop data lakes has already become a standard for us. One could say that solutions on the Hadoop stack are following the development path of the classic RDBMS-based DWH. Low-code tools on the Hadoop stack can handle both data processing and building the final BI interfaces. Moreover, BI here can mean not only presenting data but also letting business users edit it. We often use this functionality when building analytical platforms for the financial sector.

Among other things, low-code, and Datagram in particular, makes it possible to trace the origin of data flow objects down to individual fields (lineage). To do this, the low-code tool integrates with Apache Atlas and Cloudera Navigator. In essence, the developer needs to register a set of objects in the Atlas dictionaries and refer to the registered objects when building mappings. The mechanism for tracking data origin and analyzing object dependencies saves a lot of time when calculation algorithms need to be improved. For example, when producing financial statements, this feature makes it easier to get through a period of legislative changes. After all, the better we understand the dependencies between report forms in terms of the objects of the detailed layer, the fewer "sudden" defects we encounter and the less rework we have to do.
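Why field-level lineage saves time during changes can be seen in a small impact-analysis sketch: given "target field is built from these source fields" records, find everything downstream of a changed field. The lineage dictionary and field names are invented for the example and have nothing to do with the Atlas data model.

```python
from collections import deque

# Hypothetical field-level lineage: target field -> fields it is built from.
LINEAGE = {
    "mart.rating.value": ["detail.viewing.duration", "detail.panel.weight"],
    "detail.viewing.duration": ["stage.meter.start_ts", "stage.meter.end_ts"],
    "detail.panel.weight": ["stage.panel.weight"],
    "report.share.value": ["mart.rating.value"],
}


def impacted_fields(changed, lineage):
    """Breadth-first search over the reversed lineage graph."""
    downstream = {}
    for target, sources in lineage.items():
        for source in sources:
            downstream.setdefault(source, []).append(target)
    seen, queue = set(), deque([changed])
    while queue:
        for target in downstream.get(queue.popleft(), []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return sorted(seen)


impacted = impacted_fields("stage.panel.weight", LINEAGE)
print(impacted)
```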

Data Quality & Low-code

Another task implemented with the low-code tool on the Mediascope project belongs to the Data Quality class. A distinctive feature of the data verification pipeline for the research company's project was that it had no impact on the performance and speed of the main data calculation flow. The already familiar Apache Airflow was used to orchestrate the data checks as independent flows. As each step of data production completed, a separate part of the DQ pipeline was launched in parallel.

It is good practice to monitor data quality from the moment the data first appears in the analytical platform. With metadata available, we can check that basic conditions are met (not null, constraints, foreign keys) as soon as information enters the primary layer. This functionality is implemented through automatically generated mappings of the data quality family in Datagram. Code generation in this case is also based on model metadata. On the Mediascope project, the integration was with the metadata of the Enterprise Architect product.

By pairing the low-code tool and Enterprise Architect, the following checks were automatically generated:

- Checking for the presence of "null" values in fields with the "not null" modifier;

- Checking for the presence of duplicates of the primary key;

- Entity foreign key validation;

- Checking the uniqueness of a row across a set of fields.

For more sophisticated data availability and validity checks, a mapping with a Scala Expression was created; it accepts external Spark SQL check code prepared by analysts in Zeppelin.

Of course, auto-generation of checks has to be approached gradually. On the described project, it was preceded by the following steps:
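The four kinds of checks listed above can be generated from entity metadata roughly as follows. Each generated query counts violations, so a result of 0 means the check passed. The metadata layout, table names and check naming are assumptions for this sketch and do not reflect the Enterprise Architect model or the code Datagram actually generates.

```python
def generate_checks(entity):
    """Build violation-counting SQL queries from entity metadata."""
    table = entity["table"]
    checks = {}
    for column in entity.get("not_null", []):
        checks[f"not_null:{column}"] = (
            f"SELECT COUNT(*) FROM {table} WHERE {column} IS NULL"
        )
    # Primary-key duplicates and uniqueness over a field set share one query shape.
    key_sets = [("primary_key", entity["primary_key"])] if entity.get("primary_key") else []
    key_sets += [("unique", columns) for columns in entity.get("unique", [])]
    for kind, columns in key_sets:
        cols = ", ".join(columns)
        checks[f"{kind}:{cols}"] = (
            f"SELECT COUNT(*) FROM (SELECT {cols} FROM {table} "
            f"GROUP BY {cols} HAVING COUNT(*) > 1) d"
        )
    for column, ref_table, ref_column in entity.get("foreign_keys", []):
        checks[f"foreign_key:{column}"] = (
            f"SELECT COUNT(*) FROM {table} c LEFT JOIN {ref_table} p "
            f"ON c.{column} = p.{ref_column} "
            f"WHERE c.{column} IS NOT NULL AND p.{ref_column} IS NULL"
        )
    return checks


# Hypothetical entity description.
entity = {
    "table": "detail.viewing",
    "not_null": ["respondent_id", "start_ts"],
    "primary_key": ["viewing_id"],
    "unique": [["respondent_id", "start_ts"]],
    "foreign_keys": [("channel_id", "detail.channel", "channel_id")],
}
checks = generate_checks(entity)
for name, sql in checks.items():
    print(name, "=>", sql)
```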

- DQ implemented in Zeppelin notebooks;

- DQ embedded in mapping;

- DQ in the form of separate massive mappings containing a whole set of checks for a particular entity;

- Universal parameterized DQ mappings that accept metadata and business validation information as input.

Perhaps the main advantage of a service of parameterized checks is the shorter time needed to deliver functionality to the production environment. New quality checks can bypass the classic pattern of delivering code through development and test environments:

- All metadata checks are generated automatically when the model changes in EA;

- Data availability checks (detecting whether any data is present at a given point in time) can be generated from a directory that stores the expected arrival time of the next portion of data for each object;

- Business data validation checks are created by analysts in Zeppelin notebooks, from where they go straight into the setup tables of the DQ module in the production environment.

Shipping scripts directly to production carries no real risk. Even with a syntax error, the worst that can happen is that a single check fails to run, because the data calculation flow and the flow that launches quality checks are separated from each other.

In effect, the DQ service runs permanently in the production environment and is ready to start work as soon as the next portion of data appears.
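A data availability check driven by such a directory might look like the sketch below: for each object the directory stores the time of day by which the next portion of data is expected, and the check reports objects that are past their deadline and still missing. The directory contents, object names and the `HH:MM` format are hypothetical.

```python
from datetime import datetime

# Hypothetical directory: object -> time of day by which data is expected.
EXPECTED_BY = {"stage.meter": "06:00", "stage.web": "08:30"}


def overdue_objects(expected_by, arrived, now):
    """Objects whose deadline has passed but whose data has not arrived yet."""
    overdue = []
    for obj, hhmm in expected_by.items():
        hour, minute = map(int, hhmm.split(":"))
        deadline = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
        if now >= deadline and obj not in arrived:
            overdue.append(obj)
    return sorted(overdue)


now = datetime(2020, 5, 1, 7, 0)
print(overdue_objects(EXPECTED_BY, arrived=set(), now=now))
print(overdue_objects(EXPECTED_BY, arrived={"stage.meter"}, now=now))
```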
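The isolation argument can be demonstrated with a small runner in which every check is executed independently, so a check with a syntax error is reported as an error while the others still run. SQLite from the Python standard library stands in for Spark SQL here, and the table and check names are invented for the example.

```python
import sqlite3


def run_checks(conn, checks):
    """Run every check on its own; one broken check never stops the rest."""
    results = {}
    for name, sql in checks.items():
        try:
            violations = conn.execute(sql).fetchone()[0]
            results[name] = "passed" if violations == 0 else f"failed ({violations})"
        except sqlite3.Error as exc:
            results[name] = f"error: {exc.__class__.__name__}"
    return results


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE viewing (respondent_id INTEGER, channel_id INTEGER)")
conn.executemany("INSERT INTO viewing VALUES (?, ?)", [(1, 10), (2, None), (3, 12)])

checks = {
    "channel_not_null": "SELECT COUNT(*) FROM viewing WHERE channel_id IS NULL",
    "broken_check": "SELEC COUNT(*) FROM viewing",  # deliberate syntax error
    "respondent_not_null": "SELECT COUNT(*) FROM viewing WHERE respondent_id IS NULL",
}
results = run_checks(conn, checks)
for name, status in results.items():
    print(name, "->", status)
```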

Instead of a conclusion

The advantage of using low-code is obvious. Developers don't need to build an application from scratch. A programmer freed from secondary tasks delivers results faster. Speed, in turn, frees up time for optimization issues. So in this case you can count on a better and faster solution.

Of course, low-code is not a panacea, and magic won't happen on its own:

- The low-code industry is going through a stage of "growing", and so far there are no uniform industrial standards;

- Many low-code solutions are not free, and purchasing them should be a deliberate step, which should be done with full confidence in the financial benefits from their use;

- Not every low-code solution works well with GIT / SVN.

However, if you know all the shortcomings of the chosen system and the benefits of using it still clearly outweigh them, then move to low-code without fear. Moreover, the transition is inevitable, just as any evolution is inevitable.

If one developer on a low-code platform can do the job faster than two developers without low-code, this gives the company a head start in every respect. The entry threshold for low-code solutions is lower than for "traditional" technologies, which helps with the staff shortage. Low-code tools make it possible to accelerate interaction between functional teams and to decide faster whether the chosen direction of data-science research is correct. Low-code platforms can drive an organization's digital transformation, since the solutions produced can be understood by non-technical specialists (in particular, business users).

If you have tight deadlines, complex business logic and a lack of technological expertise, and you need to shorten time to market, then low-code is one of the ways to meet your needs.

There is no denying the importance of traditional development tools, but in many cases low-code solutions are the best way to solve problems more efficiently.