A service can be viewed as a process extended in time, with several points at which its outcome can be influenced: the applicant can cancel it through the portal, the agency can reject it, status changes can be sent to the portal, and finally the result of the service can be delivered. Each service therefore passes through its own life cycle, accumulating data about the user's request, the errors encountered, the results of the service, and so on. This makes it possible to inspect the state at any moment and decide how processing should continue.

Below we discuss how such processing can be organized and which tools help.

Choosing a business process automation engine

Libraries and systems for automating business processes are widely available on the market, from embedded solutions to full-featured systems that provide a framework for process control. We chose Workflow Core as our tool. First, the engine is written in C# for .NET Core, our main development platform, so it fits into the overall product more easily than, for example, Camunda BPM. It is also an embedded engine, which gives broad control over business process instances. Second, PostgreSQL, which we use in our solutions, is among the many supported storage options. Third, the engine offers a simple fluent API for describing a process. A process can also be described in a JSON file, but we found this less convenient because errors in the description are hard to detect until the process actually runs.

Business processes

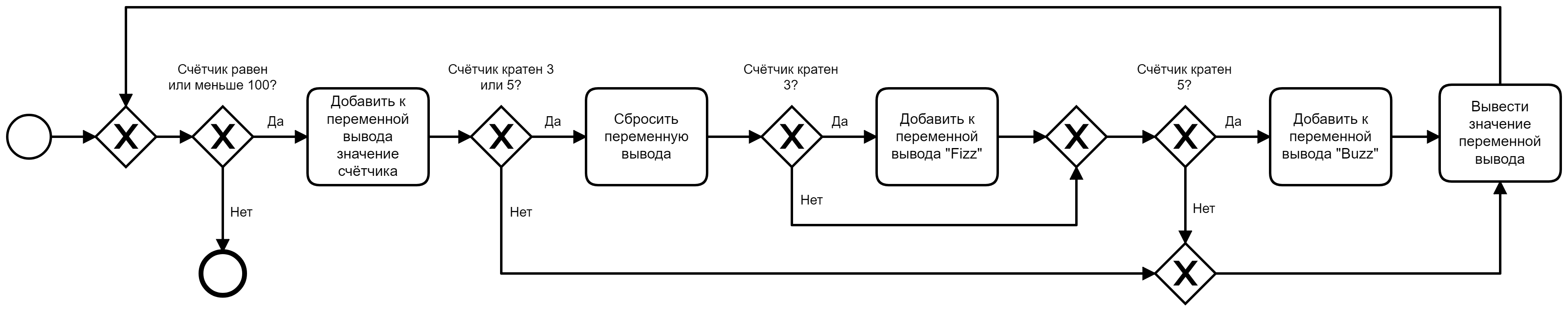

Among the generally accepted tools for describing business processes, the BPMN notation should be noted . For example, the solution to the FizzBuzz problem in BPMN notation might look like this:

The Workflow Core engine contains most of the building blocks and operators found in the notation and, as mentioned above, lets you describe specific processes with either the fluent API or JSON. Implemented with Workflow Core, this process might take the following form:

    using System;
    using System.Text;
    using WorkflowCore.Interface;
    using WorkflowCore.Models;

    // Process data shared by all steps of a workflow instance.
    public class FizzBuzzWfData
    {
        public int Counter { get; set; } = 1;
        public StringBuilder Output { get; set; } = new StringBuilder();
    }

    // Process description.
    public class FizzBuzzWorkflow : IWorkflow<FizzBuzzWfData>
    {
        public string Id => "FizzBuzz";
        public int Version => 1;

        public void Build(IWorkflowBuilder<FizzBuzzWfData> builder)
        {
            builder
                .StartWith(context => ExecutionResult.Next())
                .While(data => data.Counter <= 100)
                    .Do(a => a
                        // Start each iteration with the number itself.
                        .StartWith(context => ExecutionResult.Next())
                            .Output((step, data) => data.Output.Append(data.Counter))
                        // Replace the number if it is divisible by 3 or 5.
                        .If(data => data.Counter % 3 == 0 || data.Counter % 5 == 0)
                            .Do(b => b
                                .StartWith(context => ExecutionResult.Next())
                                    .Output((step, data) => data.Output.Clear())
                                .If(data => data.Counter % 3 == 0)
                                    .Do(c => c
                                        .StartWith(context => ExecutionResult.Next())
                                            .Output((step, data) =>
                                                data.Output.Append("Fizz")))
                                .If(data => data.Counter % 5 == 0)
                                    .Do(c => c
                                        .StartWith(context => ExecutionResult.Next())
                                            .Output((step, data) =>
                                                data.Output.Append("Buzz"))))
                        // Print the line and move on to the next number.
                        .Then(context => ExecutionResult.Next())
                            .Output((step, data) =>
                            {
                                Console.WriteLine(data.Output.ToString());
                                data.Output.Clear();
                                data.Counter++;
                            }));
        }
    }

Of course, the process could be described more simply by emitting the required values directly in the steps that follow the divisibility checks. The current implementation, however, shows that each step can modify the shared pool of process data and can also use the results of previous steps. Here the process data is stored in a FizzBuzzWfData instance, which is made available to each step when it executes.

The Build method takes a process builder object as its argument. The builder is the starting point for a chain of extension methods that describe the steps of the business process in sequence. The extension methods can either receive the action inline, as a lambda expression passed as an argument, or be parameterized with a step type. In the first case, shown in the listing, a simple algorithm turns into a rather complicated set of instructions. In the second, the step logic is hidden in separate classes that inherit from StepBody (or StepBodyAsync for asynchronous variants), which lets complex processes fit into a more concise description. In practice the second approach is more suitable; the first is enough for simple examples or very simple business processes.
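As a rough illustration of the second approach, the sketch below moves the FizzBuzz logic into a step class derived from Workflow Core's StepBody and wires it into a process with Input and Output mappings. The names FizzBuzzTextStep, FizzBuzzData, CompactFizzBuzzWorkflow and the "FizzBuzzCompact" identifier are assumptions made for this example and do not come from the solution described in the article.

```csharp
using System;
using WorkflowCore.Interface;
using WorkflowCore.Models;

// Hypothetical step class: converts a number into its FizzBuzz text.
public class FizzBuzzTextStep : StepBody
{
    public int Number { get; set; }   // input, mapped from the process data
    public string Text { get; set; }  // output, mapped back to the process data

    public override ExecutionResult Run(IStepExecutionContext context)
    {
        Text = Format(Number);
        return ExecutionResult.Next();
    }

    public static string Format(int n)
    {
        if (n % 15 == 0) return "FizzBuzz";
        if (n % 3 == 0) return "Fizz";
        if (n % 5 == 0) return "Buzz";
        return n.ToString();
    }
}

// Hypothetical process data for the compact variant.
public class FizzBuzzData
{
    public int Counter { get; set; } = 1;
    public string Line { get; set; }
}

public class CompactFizzBuzzWorkflow : IWorkflow<FizzBuzzData>
{
    public string Id => "FizzBuzzCompact";
    public int Version => 1;

    public void Build(IWorkflowBuilder<FizzBuzzData> builder)
    {
        builder
            .StartWith(context => ExecutionResult.Next())
            .While(data => data.Counter <= 100)
                .Do(loop => loop
                    // The engine creates the step, sets Number, runs it, then copies Text.
                    .StartWith<FizzBuzzTextStep>()
                        .Input(step => step.Number, data => data.Counter)
                        .Output(data => data.Line, step => step.Text)
                    .Then(context => ExecutionResult.Next())
                        .Output((step, data) =>
                        {
                            Console.WriteLine(data.Line);
                            data.Counter++;
                        }));
    }
}
```

The process description now reads as a short sequence of named operations, and the step class can be exercised on its own, without starting the engine.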

The process description class itself implements the generic IWorkflow<TData> interface and, fulfilling its contract, exposes the process identifier and version number. With this information the engine can create process instances in memory, fill them with data, and persist their state in storage. Versioning support lets you create new variations of a process without the risk of affecting instances already in storage. To create a new version, copy the existing description, assign the next number to the Version property, and change the behavior as needed (the identifier should stay the same).
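The versioning rule can be sketched as two description classes that share an identifier and differ in the version number. SampleData, SampleWorkflowV1, SampleWorkflowV2 and the "Sample" identifier are hypothetical names used only for illustration; the sketch assumes both versions are registered side by side so that instances started under version 1 can still be resolved.

```csharp
using WorkflowCore.Interface;
using WorkflowCore.Models;

// Hypothetical process data, for illustration only.
public class SampleData
{
    public string Status { get; set; }
}

// Original description.
public class SampleWorkflowV1 : IWorkflow<SampleData>
{
    public string Id => "Sample";
    public int Version => 1;

    public void Build(IWorkflowBuilder<SampleData> builder)
    {
        builder
            .StartWith(context => ExecutionResult.Next())
                .Output((step, data) => data.Status = "done");
    }
}

// Copy of the description with the same Id, the next Version, and changed behavior.
public class SampleWorkflowV2 : IWorkflow<SampleData>
{
    public string Id => "Sample";
    public int Version => 2;

    public void Build(IWorkflowBuilder<SampleData> builder)
    {
        builder
            .StartWith(context => ExecutionResult.Next())
                .Output((step, data) => data.Status = "checked")
            .Then(context => ExecutionResult.Next())
                .Output((step, data) => data.Status = "done");
    }
}

public static class SampleRegistration
{
    // Registers both versions with the engine host.
    public static void RegisterAll(IWorkflowHost host)
    {
        host.RegisterWorkflow<SampleWorkflowV1, SampleData>();
        host.RegisterWorkflow<SampleWorkflowV2, SampleData>();
    }
}
```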

Examples of business processes in the context of our task are:

- – .

- – , , .

- – .

- – , .

As the examples show, all processes fall into two broad groups: “cyclical” processes, which are executed repeatedly at intervals, and “linear” processes, which are executed for a specific application and may still contain loops inside.

Let's consider an example of one of the processes working in our solution for polling the incoming request queue:

    public class LoadRequestWf : IWorkflow<LoadRequestWfData>
    {
        public const string DefinitionId = "LoadRequest";

        public string Id => DefinitionId;
        public int Version => 1;

        public void Build(IWorkflowBuilder<LoadRequestWfData> builder)
        {
            builder
                .StartWith(then => ExecutionResult.Next())
                .While(d => !d.Quit)
                    .Do(x => x
                        .StartWith<LoadRequestStep>() // *
                            .Output(d => d.LoadRequest_Output, s => s.Output)
                        .If(d => d.LoadRequest_Output.Exception != null)
                            .Do(then => then
                                .StartWith(ctx => ExecutionResult.Next()) // *
                                    .Output((s, d) => d.Quit = true))
                        .If(d => d.LoadRequest_Output.Exception == null
                                && d.LoadRequest_Output.Result.SmevReqType
                                    == ReqType.Unknown)
                            .Do(then => then
                                .StartWith<LogInfoAboutFaultResponseStep>() // *
                                    .Input((s, d) =>
                                        { s.Input = d.LoadRequest_Output?.Result?.Fault; })
                                    .Output((s, d) => d.Quit = false))
                        .If(d => d.LoadRequest_Output.Exception == null
                                && d.LoadRequest_Output.Result.SmevReqType
                                    == ReqType.DataRequest)
                            .Do(then => then
                                .StartWith<StartWorkflowStep>() // *
                                    .Input(s => s.Input, d => BuildEpguNewApplicationWfData(d))
                                    .Output((s, d) => d.Quit = false))
                        .If(d => d.LoadRequest_Output.Exception == null
                                && d.LoadRequest_Output.Result.SmevReqType == ReqType.Empty)
                            .Do(then => then
                                .StartWith(ctx => ExecutionResult.Next()) // *
                                    .Output((s, d) => d.Quit = true))
                        .If(d => d.LoadRequest_Output.Exception == null
                                && d.LoadRequest_Output.Result.SmevReqType
                                    == ReqType.CancellationRequest)
                            .Do(then => then
                                .StartWith<StartWorkflowStep>() // *
                                    .Input(s => s.Input, d => BuildCancelRequestWfData(d))
                                    .Output((s, d) => d.Quit = false)));
        }
    }

The lines marked with * use parameterized extension methods, which tell the engine to use the step classes (more on them later) given as type parameters. The Input and Output extension methods let us set the data passed to a step before it runs and, correspondingly, update the process data (represented by an instance of the LoadRequestWfData class) with the results of the step's actions. And this is how the process looks on a BPMN diagram:

Steps

As mentioned above, it is reasonable to place the logic of the steps in separate classes. In addition to making the process more concise, it allows you to create reusable steps for common operations.

By how unique their actions are, the steps in our solution fall into two categories: general and specific. The former can be reused in any module of any project, so they are placed in the solution's shared library. The latter are unique to each customer, so they live in the corresponding project modules. Examples of general steps include:

- Sending Ack requests for a response.

- Uploading files to file storage.

- Extracting data from the SMEV package, etc.

Specific steps:

- Creation of objects in the IAS, enabling the operator to provide a service.

- .

- ..

In describing the steps, we followed the principle of limited responsibility for each step. This let us avoid hiding fragments of high-level business logic inside steps and express that logic explicitly in the process description. For example, if an error found in the application data requires sending a refusal message to SMEV, the corresponding condition block sits directly in the business process code, while detecting the error and responding to it are handled by separate step classes.
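A minimal sketch of this arrangement follows: one step only detects the error, another only reacts to it, and the condition that connects them stays visible in the process description. ApplicationData, ValidateApplicationStep, SendRejectionStep and the validation rule itself are hypothetical; the real steps of the solution are not shown in the article.

```csharp
using WorkflowCore.Interface;
using WorkflowCore.Models;

// Hypothetical process data.
public class ApplicationData
{
    public string ApplicantName { get; set; }
    public bool IsValid { get; set; }
}

// Detects the error and nothing else.
public class ValidateApplicationStep : StepBody
{
    public string ApplicantName { get; set; }
    public bool IsValid { get; set; }

    public override ExecutionResult Run(IStepExecutionContext context)
    {
        // Placeholder rule, assumed for the example.
        IsValid = !string.IsNullOrWhiteSpace(ApplicantName);
        return ExecutionResult.Next();
    }
}

// Reacts to the error and nothing else.
public class SendRejectionStep : StepBody
{
    public bool Sent { get; private set; }

    public override ExecutionResult Run(IStepExecutionContext context)
    {
        // A real step would call a service that sends the refusal message.
        Sent = true;
        return ExecutionResult.Next();
    }
}

public class ApplicationWorkflow : IWorkflow<ApplicationData>
{
    public string Id => "ApplicationSketch";
    public int Version => 1;

    public void Build(IWorkflowBuilder<ApplicationData> builder)
    {
        builder
            .StartWith<ValidateApplicationStep>()
                .Input(step => step.ApplicantName, data => data.ApplicantName)
                .Output(data => data.IsValid, step => step.IsValid)
            // The business rule is expressed here, not inside a step.
            .If(data => !data.IsValid)
                .Do(then => then
                    .StartWith<SendRejectionStep>());
    }
}
```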

It should be noted that steps must be registered in the dependency container, so that the engine will be able to use instances of steps as each process moves through its life cycle.

Each step is a connecting link between the code containing the high-level description of the process, and the code that solves application problems - services.

Services

Services represent the next, lower level of problem solving. To do its job, each step usually relies on one or more services. (NB: “service” here is close to the concept of an application service in domain-driven design, DDD.)

Examples of services are:

- The service for receiving a response from the SMEV response queue: prepares the corresponding data packet in SOAP format, sends it to SMEV, and converts the response into a form suitable for further processing.

- The service for downloading files from the SMEV storage: reads the files attached to an application on the portal from the file storage over FTP.

- The service for obtaining the result of a service: reads the result data from the IAS and builds the corresponding object, from which another service builds a SOAP request to send to the portal.

- The service for uploading files related to the result of a service to the SMEV file storage.

Services in the solution are divided into groups based on the system, interaction with which they provide:

- SMEV services.

- IAS services.

- Services for working with the internal infrastructure of the integration solution (logging information about data packets, linking the entities of the integration solution with IAS objects, etc.).

Architecturally, services are the lowest level, although they can in turn rely on utility classes. For example, the solution has a layer of code that serializes and deserializes SOAP data packets for different versions of the SMEV protocol. In general terms, the description above can be summarized in a class diagram:

The IWorkflow interface and the StepBodyAsync abstract class (or its synchronous counterpart, StepBody) belong to the engine itself. Below them, the diagram shows the "building blocks": concrete classes that describe business processes (Workflow) and the steps used in them (Step). At the lowest level are the services, which are specific to this particular implementation and, unlike processes and steps, are not mandatory.

Services, like steps, must be registered in the dependency container so that the steps that depend on them can receive the necessary instances through constructor injection.
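The sketch below shows one way this could look with Microsoft.Extensions.DependencyInjection: a service contract, an asynchronous step that receives the service through its constructor, and an extension method that registers both. IAttachmentStore, InMemoryAttachmentStore, CountAttachmentsStep and AddAttachmentSteps are hypothetical names, and the chosen lifetimes (singleton service, transient step) are an assumption rather than the article's actual configuration.

```csharp
using System.Threading.Tasks;
using Microsoft.Extensions.DependencyInjection;
using WorkflowCore.Interface;
using WorkflowCore.Models;

// Hypothetical service contract used by the step.
public interface IAttachmentStore
{
    Task<int> CountAsync(string requestId);
}

// Stand-in implementation; a real one would talk to the file storage.
public class InMemoryAttachmentStore : IAttachmentStore
{
    public Task<int> CountAsync(string requestId) => Task.FromResult(0);
}

// Asynchronous step that gets its service through the constructor.
public class CountAttachmentsStep : StepBodyAsync
{
    private readonly IAttachmentStore _store;

    public CountAttachmentsStep(IAttachmentStore store)
    {
        _store = store;
    }

    public string RequestId { get; set; }
    public int Count { get; set; }

    public override async Task<ExecutionResult> RunAsync(IStepExecutionContext context)
    {
        Count = await _store.CountAsync(RequestId);
        return ExecutionResult.Next();
    }
}

public static class AttachmentRegistration
{
    // Registers the service and the step so the container can build the step.
    public static IServiceCollection AddAttachmentSteps(this IServiceCollection services)
    {
        services.AddSingleton<IAttachmentStore, InMemoryAttachmentStore>();
        services.AddTransient<CountAttachmentsStep>();
        return services;
    }
}
```

Calling services.AddAttachmentSteps() next to the engine registration would then be enough for the container to construct the step together with its dependency.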

Embedding the engine in the solution

When we started building the integration with the portal, version 2.1.2 of the engine was available in the NuGet repository. It is added to the dependency container in the standard way, in the ConfigureServices method of the Startup class:

    public void ConfigureServices(IServiceCollection services)
    {
        // ...
        services.AddWorkflow(opts =>
            opts.UsePostgreSQL(connectionString, false, false, schemaName));
        // ...
    }

The engine can be configured for one of the supported data stores (others include MySQL, MS SQL, SQLite, and MongoDB). With PostgreSQL, the engine uses Entity Framework Core in Code First mode to work with processes. Accordingly, given an empty database, it can apply migrations and produce the required table structure. Applying migrations is optional and is controlled by the arguments of the UsePostgreSQL method: the second (canCreateDB) and third (canMigrateDB) Boolean arguments tell the engine whether it may create the database if it does not exist and whether it may apply migrations.

Because the next engine update may well change its data model, and applying the corresponding migration could damage data already accumulated, we decided not to use this option and to maintain the database structure ourselves, using the database component mechanism that we use in our other projects.

With data storage and registration of the engine in the dependency container settled, let's move on to starting the engine. A hosted service proved suitable for this task. An existing example of a base class for such a service was taken as the basis and slightly modified to preserve modularity, that is, the division of the integration solution (called "Onyx") into a common part, which initializes the engine and runs some service procedures, and a part specific to each customer (the integration modules).

Each module contains process descriptions, infrastructure for executing business logic, as well as some unified code to enable the developed integration system to recognize and dynamically load process descriptions into an instance of the Workflow Core engine:

Registration and launch of business processes

Now that we have ready-made descriptions of business processes and an engine connected to the solution, it's time to tell the engine about what processes it will work with.

This is done using the following code, which can be located within the previously mentioned service (the code that initiates the registration of processes in the connected modules can also be placed here):

    public async Task RunWorkflowsAsync(IWorkflowHost host,
        CancellationToken token)
    {
        host.RegisterWorkflow<LoadRequestWf, LoadRequestWfData>();
        // ...
        await host.StartAsync(token);
        token.WaitHandle.WaitOne();
        host.Stop();
    }
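Registering a description and starting the host does not by itself create a process instance; an instance is started explicitly by identifier and version. The following self-contained sketch shows the full sequence with the engine's default in-memory persistence. PollData, PollWorkflow, the "PollSketch" identifier and the Launcher class are assumptions made for the example; in the solution described here PostgreSQL would be configured instead.

```csharp
using System.Threading.Tasks;
using Microsoft.Extensions.DependencyInjection;
using WorkflowCore.Interface;
using WorkflowCore.Models;

// Hypothetical process data.
public class PollData
{
    public int Attempts { get; set; }
}

// Hypothetical one-step process.
public class PollWorkflow : IWorkflow<PollData>
{
    public const string DefinitionId = "PollSketch";

    public string Id => DefinitionId;
    public int Version => 1;

    public void Build(IWorkflowBuilder<PollData> builder)
    {
        builder
            .StartWith(context => ExecutionResult.Next())
                .Output((step, data) => data.Attempts++);
    }
}

public static class Launcher
{
    // Registers the process, starts the host, launches one instance,
    // and returns the identifier the engine assigned to that instance.
    public static async Task<string> RunOnceAsync()
    {
        var services = new ServiceCollection();
        services.AddLogging();
        services.AddWorkflow(); // no options: in-memory persistence
        var provider = services.BuildServiceProvider();

        var host = provider.GetRequiredService<IWorkflowHost>();
        host.RegisterWorkflow<PollWorkflow, PollData>();
        host.Start();
        try
        {
            return await host.StartWorkflow(PollWorkflow.DefinitionId, 1, new PollData());
        }
        finally
        {
            host.Stop();
        }
    }
}
```

The returned string identifies the new instance and can be kept to look it up later.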

Conclusion

In general terms, we have covered the steps needed to use Workflow Core in an integration solution. The engine lets you describe business processes flexibly and conveniently. Since we are integrating with the Gosuslugi portal through SMEV, the business processes being designed will cover a fairly diverse range of tasks (polling the queue, uploading and downloading files, complying with the exchange protocol and confirming receipt of data, handling errors at different stages, and so on). It is therefore natural to expect implementation details that are not obvious at first glance, and the next, final article of the series is devoted to them.
