Foreword

The Mir Plat.Form payment ecosystem includes several dozen systems, most of which interact with each other using various protocols and formats. We, the Integration Testing team, verify that these interactions meet the established requirements.

At the moment, the team works with 13 mission-critical and business-critical systems. Mission-critical systems carry out the main functions of Mir Plat.Form, which keep the bank card system of the Russian Federation stable and continuously available. Business-critical systems support the additional services provided to Mir Plat.Form clients, on which the company's day-to-day operations depend. Releases reach PROD anywhere from once a week to once a quarter, depending on the system and on how ready the participants are for frequent updates. In total, about 200 releases went through our team last year.

The math is simple: the number of chains to test is N systems * M integrations between them * K releases. Even for 13 systems * 11 integrations * 27 release versions, that gives 3,861 possible compatibility combinations. The answer seems obvious: autotests. But the problem is more serious than that, and autotests alone won't save you. With a growing number of systems and integrations, and with differing release frequencies, there is always a risk of testing the wrong chain of system versions. That in turn means a risk of missing a defect in intersystem interaction, for example one that affects the correct operation of the Mir payment system (PS).
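The arithmetic is easy to check. The sketch below simply multiplies the three counts from the example; the function name is illustrative and not part of SMIT.

```python
def chain_count(systems: int, integrations: int, releases: int) -> int:
    """Number of version chains: N systems * M integrations * K releases."""
    return systems * integrations * releases


if __name__ == "__main__":
    print(chain_count(13, 11, 27))  # 3861
```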

Naturally, such bugs are unacceptable in PROD, and our team's task is to reduce this risk to zero. As noted above, any “sneeze” affects not only the internal systems of Mir Plat.Form but also market participants: banks, merchants, individuals and even other payment systems. To eliminate these risks, we did the following:

- Introduced a unified release base. A release calendar in Confluence, listing the versions of the systems installed in PROD, was enough for this;

- Started tracking integration chains against release dates. Here, too, we did not reinvent the wheel; that comes later. We used Epic structures in Jira for the integration testing of releases. An example structure for release 1.111.0 of System3:

On the one hand, all these actions helped to improve the team's understanding of the tested integrations, system versions and the sequence of their release to PROD. On the other hand, there is still the possibility of incorrect testing due to the human factor:

- If the release date of any system is moved, a team member has to manually correct the calendar and the entire structure in Jira, including task deadlines and, possibly, the versions of the tested systems;

- Before testing the integration, you need to make sure that the testing environment consists of the correct versions of the systems. To do this, you need to manually go over the test benches and execute a couple of console commands.

This also added routine work that sometimes took up a significant share of our time.

It became obvious that preparing for the integration testing of releases had to be automated and, if possible, brought together in one interface. This is where our own reinvented wheel comes to the rescue: the Integration Testing Monitoring System, or simply SMIT.

What did we want the system to do?

1. A clear release calendar with the ability to display versions of all systems for a specific date;

2. Monitoring environments for integration testing:

- list of environments;

- visual display of test benches and systems that are part of a separate environment;

- version control of systems deployed on test benches.

3. Automated work with tasks in Jira:

- creating an Epic release structure;

- lifecycle management of testing tasks;

- updating tasks in case of a shift in the release date;

- putting allure reports into testing tasks.

4. Automated work with branches in Bitbucket, namely the creation of release branches in projects:

- integration autotests;

- automatic warm-up of the integration environment.

5. Intuitive UI for running autotests and updating system versions.

What is SMIT

Since the system is not complicated, we kept the technology simple. The backend is written in Java with Spring Boot, and the frontend in React. There were no special requirements for the database, so we chose MySQL. Since we are used to working with containers, all of these components are packaged in Docker and assembled with Docker Compose. SMIT works quickly and as reliably as the other Mir Plat.Form systems.

Integration

- Atlassian Jira. Tasks for testing each specific integration are created, opened, taken into work and closed in Jira; if all tests pass, a link to the Allure report is attached in a comment.

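- Atlassian Bitbucket. SMIT creates release branches in the autotest projects and reads the environment configuration file from the master branch.

- Jenkins. SMIT starts Jenkins jobs that deploy systems and run the autotests; run parameters, such as the Cucumber glue, are passed to the job.

- Test benches. SMIT connects to them over ssh to find out the versions of the deployed systems.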

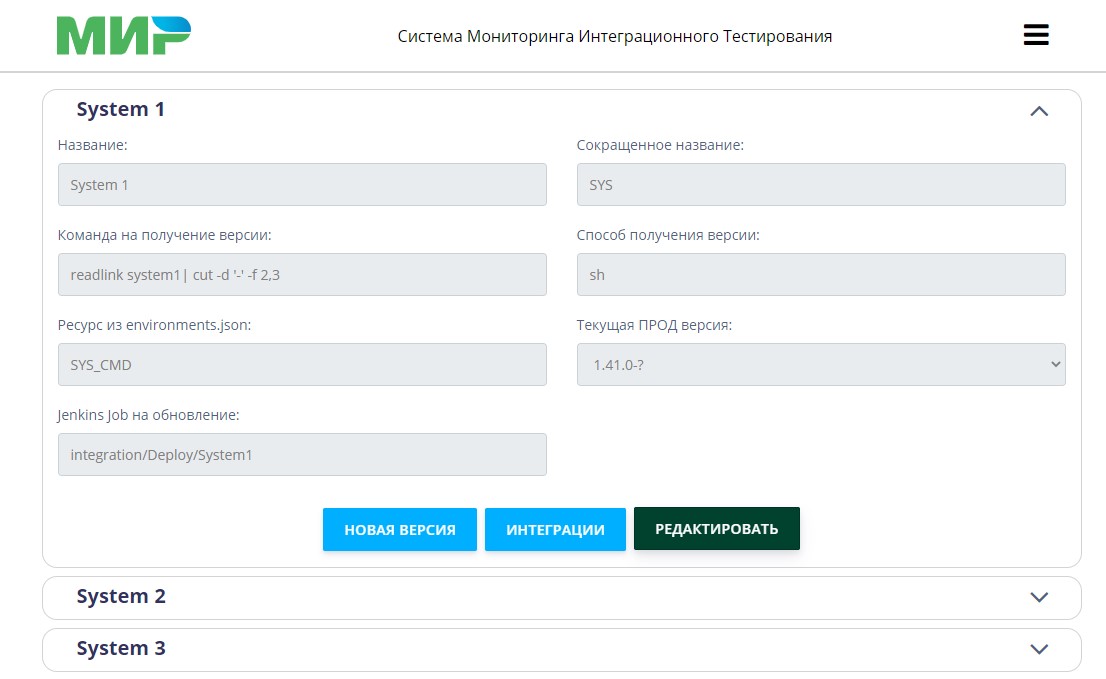

Before you can maintain a calendar and monitor the state of environments in SMIT, you need to create a list of systems under test and the relationships between them. All settings can be made via the web interface:

After a system under test is added to the list, SMIT:

- “knocks” on all hosts of systems named SYS_CMD in the list of environments;

- finds out the version of the system using the command specified in the configuration;

- writes the current version of the system and the environment in which it is deployed to its database.

As a result, SMIT will contain information about all systems deployed on the used environments, including their version numbers. Based on this information, you can visualize the release calendar.
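The polling step can be sketched roughly as follows. This is a minimal illustration, not SMIT's actual code: `collect_versions`, the `run_command` callable and the version command are hypothetical names, and the ssh call is injected as a function so that the sketch runs without network access.

```python
from typing import Callable, Dict, List

# Hypothetical signature: takes a host and a command, returns the command's stdout.
# A real implementation would wrap an ssh client here.
RunCommand = Callable[[str, str], str]


def collect_versions(
    environments: List[dict],
    version_command: str,
    run_command: RunCommand,
) -> List[Dict[str, str]]:
    """Ask every SYS_CMD host for its version; return one record per environment."""
    records = []
    for env in environments:
        resource = env.get("SYS_CMD")
        if resource is None:
            continue  # this environment has no remote host configured
        output = run_command(resource["host"], version_command)
        records.append({
            "environment": env["name"],
            "host": resource["host"],
            "version": output.strip(),
        })
    return records


if __name__ == "__main__":
    envs = [
        {"name": "env_1", "SYS_CMD": {"type": "remote", "host": "10.111.111.111"}},
        {"name": "env_2"},
    ]
    fake_ssh = lambda host, command: "1.111.0\n"  # stand-in for a real ssh call
    # The command below is an invented example of a version query.
    print(collect_versions(envs, "cat /opt/system3/VERSION", fake_ssh))
```

Injecting the command runner keeps the polling logic testable without a real test bench.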

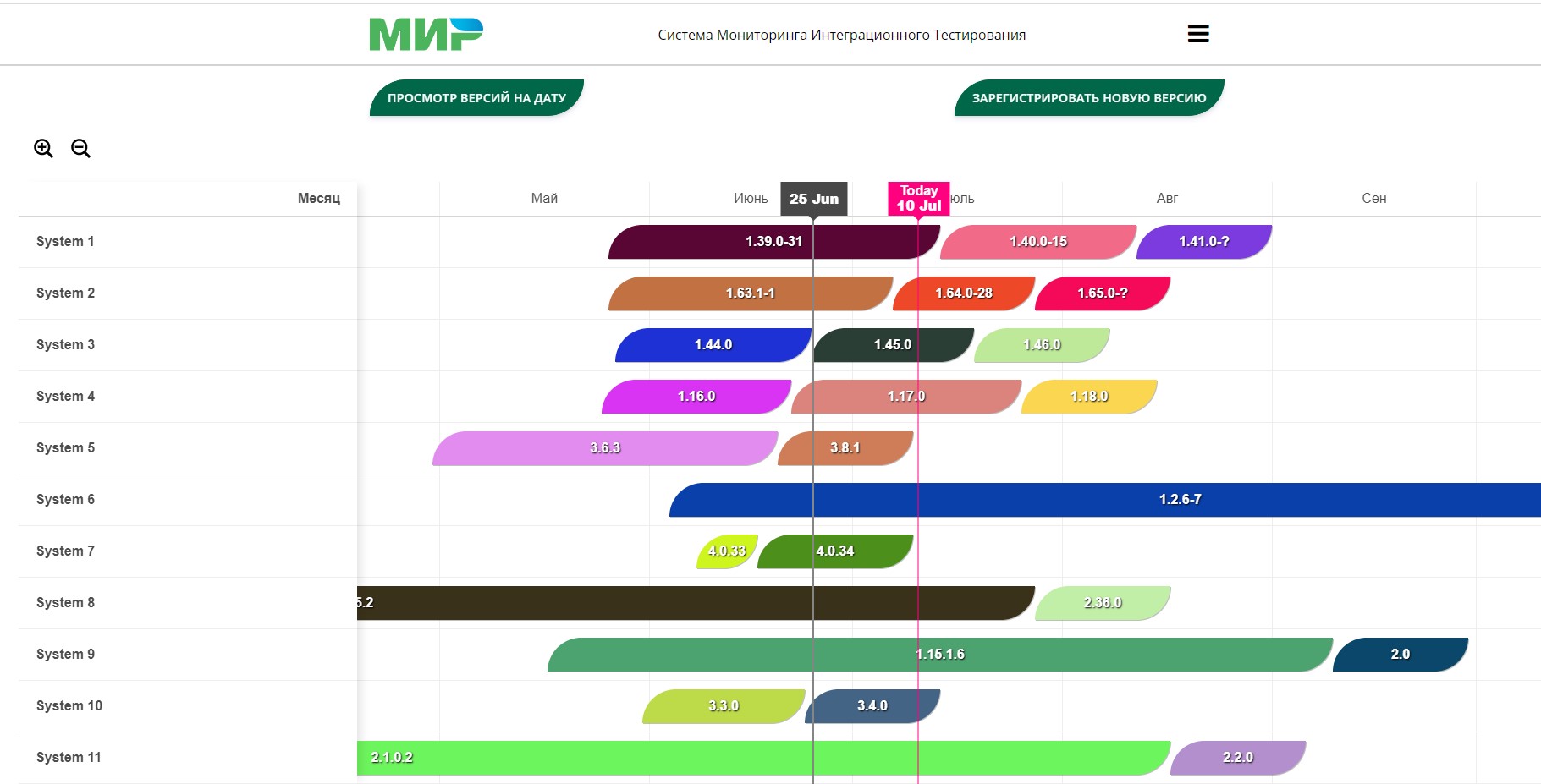

Release calendar

After system owners or the team leads of product development teams tell us the date on which a new release will be installed in PROD, we register the release in the calendar. The result looks like this:

Conflicts are easy to spot: several releases installed within a few days of each other can lead to a crunch. Product owners are notified of such conflicts, because installing new versions of several systems on the same day is genuinely dangerous.
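Conflict detection of this kind can be approximated in a few lines. The sketch below is an assumption about how such a check might look: the `Release` type, the function name and the two-day window are invented for illustration, since the article only says "a few days".

```python
from datetime import date
from itertools import combinations
from typing import List, NamedTuple, Tuple


class Release(NamedTuple):
    system: str
    version: str
    prod_date: date


def find_conflicts(
    releases: List[Release], window_days: int = 2
) -> List[Tuple[Release, Release]]:
    """Return pairs of releases of different systems scheduled within window_days."""
    ordered = sorted(releases, key=lambda r: r.prod_date)
    return [
        (a, b)
        for a, b in combinations(ordered, 2)  # a is never later than b
        if a.system != b.system and (b.prod_date - a.prod_date).days <= window_days
    ]


if __name__ == "__main__":
    calendar = [
        Release("System1", "3.2.0", date(2021, 3, 1)),
        Release("System3", "1.111.0", date(2021, 3, 2)),
        Release("System4", "2.5.0", date(2021, 3, 10)),
    ]
    for first, second in find_conflicts(calendar):
        print(f"{first.system} and {second.system} are scheduled too close together")
```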

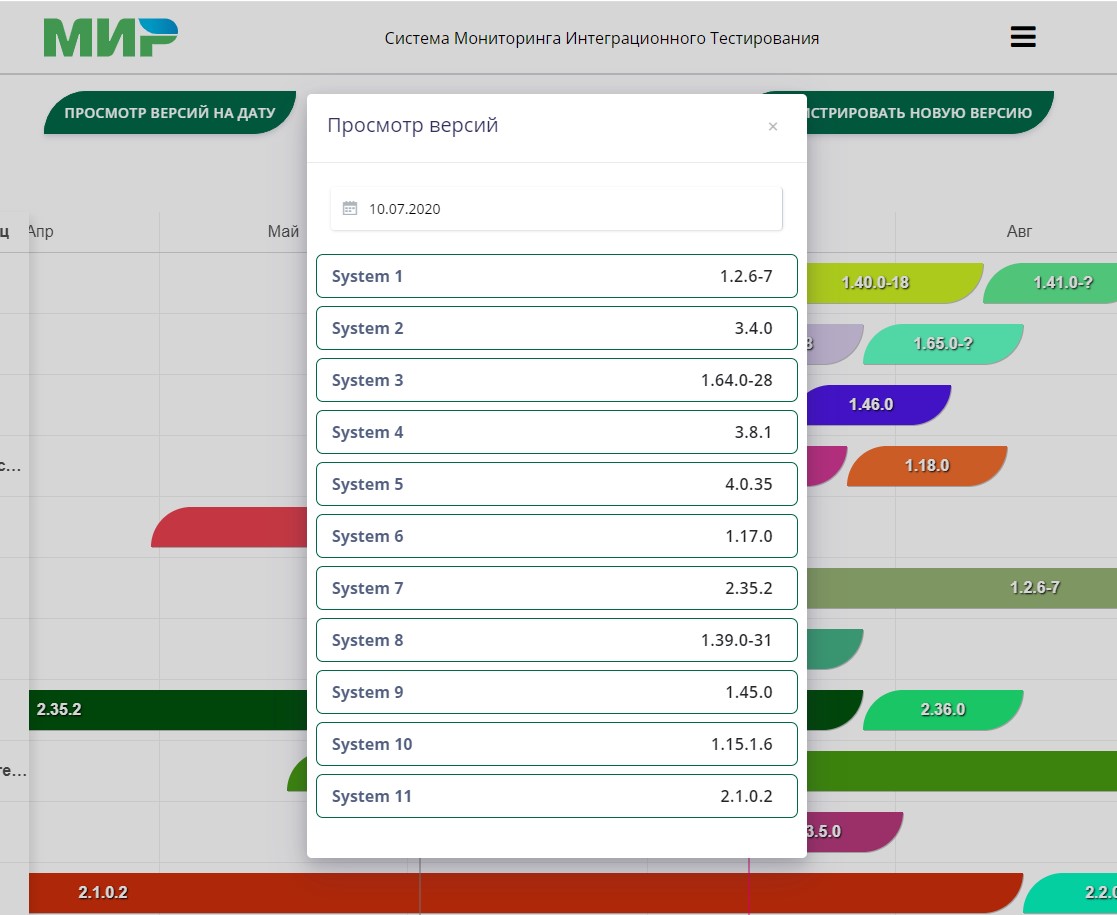

The calendar page can also display the versions of all systems as of a specific date.
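One way to compute such a snapshot is to take, for each system, the latest release installed on or before the requested date. The sketch below assumes the calendar is available as simple (system, version, date) rows; the function name and sample data are invented.

```python
from datetime import date
from typing import Dict, List, Tuple

# (system, version, date installed in PROD); an assumed row layout.
ReleaseRow = Tuple[str, str, date]


def versions_on(releases: List[ReleaseRow], day: date) -> Dict[str, str]:
    """For each system, pick the latest release installed on or before the given day."""
    snapshot: Dict[str, Tuple[date, str]] = {}
    for system, version, installed in releases:
        if installed > day:
            continue  # not yet in PROD on that day
        current = snapshot.get(system)
        if current is None or installed > current[0]:
            snapshot[system] = (installed, version)
    return {system: version for system, (_, version) in snapshot.items()}


if __name__ == "__main__":
    rows = [
        ("System3", "1.110.0", date(2021, 1, 10)),
        ("System3", "1.111.0", date(2021, 2, 10)),
        ("System4", "2.5.0", date(2021, 1, 20)),
    ]
    print(versions_on(rows, date(2021, 2, 1)))
```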

It is worth noting that when registering a new release in the calendar, SMIT automatically creates an Epic structure in Jira and release branches in projects in Bitbucket.
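The shape of such an Epic structure can be illustrated without touching the Jira API. The sketch below only builds an in-memory plan; the class, the summary format and the rule that every task is due on the release date are assumptions, not a description of SMIT's real data model.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class IssuePlan:
    issue_type: str  # "Epic" or "Task"
    summary: str
    due_date: date
    children: List["IssuePlan"] = field(default_factory=list)


def plan_release_epic(
    system: str, version: str, prod_date: date, integrations: List[str]
) -> IssuePlan:
    """Build one Epic for the release with one testing Task per integrated system."""
    epic = IssuePlan("Epic", f"Integration testing of {system} {version}", prod_date)
    for other in integrations:
        epic.children.append(
            IssuePlan("Task", f"{system} {version} <-> {other}", prod_date)
        )
    return epic


def shift_release(epic: IssuePlan, new_date: date) -> None:
    """Propagate a moved release date to the Epic and all of its Tasks."""
    epic.due_date = new_date
    for task in epic.children:
        task.due_date = new_date


if __name__ == "__main__":
    epic = plan_release_epic("System3", "1.111.0", date(2021, 3, 1), ["System1", "System4"])
    shift_release(epic, date(2021, 3, 8))
    for task in epic.children:
        print(task.summary, task.due_date)
```

Keeping the plan separate from the API calls makes a release date shift a single operation on the plan rather than a series of manual edits.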

Environment state

Another very convenient function of SMIT is viewing the current state of a particular environment. This page lists the systems included in the environment and shows whether their versions are up to date.

As you can see in the screenshot, SMIT found an out-of-date version of System 4 on host-4.nspk.ru and offers to update it. Pressing the red button with the white arrow makes SMIT call a Jenkins job that deploys the current version of the system to this environment. All systems can also be updated at once with the corresponding button.
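The check behind this page boils down to comparing two version maps. The sketch below is a simplified assumption: it treats the "current" version as an expected version per system (for example, taken from the release calendar) and reports every system whose deployed version differs or is missing.

```python
from typing import Dict, List


def outdated_systems(deployed: Dict[str, str], expected: Dict[str, str]) -> List[str]:
    """List systems whose deployed version differs from the expected one."""
    return sorted(
        system
        for system, version in expected.items()
        if deployed.get(system) != version  # a missing system also counts as outdated
    )


if __name__ == "__main__":
    deployed = {"System3": "1.111.0", "System4": "2.4.0"}
    expected = {"System3": "1.111.0", "System4": "2.5.0"}
    print(outdated_systems(deployed, expected))  # ['System4']
```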

Integration Testing Environments

It is worth saying a little about how we define test environments. An environment is a set of test benches with deployed Mir Plat.Form systems and configured integrations (one system per test bench). In total, we have 70 test benches divided into 12 environments.

In the integration autotest project, we keep a configuration file in which testers describe the test environments. The file structure looks like this:

    {
      "properties": {
        "comment": "Global system properties shared by all Environments; each property is available via System.getProperties()",
        "common.property": "some global property"
      },
      "environments": [
        {
          "comment": "Environment name, e.g. common1",
          "name": "env_1",
          "properties": {
            "comment": "System properties of this Environment only; each property is available via System.getProperties()",
            "env1.property": "some personal property"
          },
          "DB": {
            "comment": "TestResource of type DbTestResource; the key (DB) is the resource id",
            "url": "jdbc:mysql://11.111.111.111:3306/erouter?useUnicode=yes&characterEncoding=UTF-8&useSSL=false",
            "driver": "com.mysql.jdbc.Driver",
            "user": "fo",
            "password": "somepass"
          },
          "SYS_CMD": {
            "comment": "TestResource of type RemoteExecCmd; requires type = remote",
            "type": "remote",
            "host": "10.111.111.111",
            "username": "user",
            "password": "somepass"
          }
        }
      ]
    }

This file is required for the integration autotest project to work, and it also serves as an additional configuration file for SMIT. When you ask SMIT to refresh the information about environments, it sends an HTTP request to the API of our Bitbucket, where the integration autotest project is stored. This is how SMIT gets the current contents of the configuration file from the master branch.
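Once the file contents have been fetched, reading them is straightforward. The sketch below leaves out the HTTP request to Bitbucket and works on the JSON text directly; the function name and the rule that environment properties override global ones are assumptions.

```python
import json
from typing import Dict, List


def load_environments(config_text: str) -> List[Dict[str, object]]:
    """Return name, merged properties and SYS_CMD host for each environment."""
    config = json.loads(config_text)
    global_props = {
        k: v for k, v in config.get("properties", {}).items() if k != "comment"
    }
    result = []
    for env in config.get("environments", []):
        env_props = {
            k: v for k, v in env.get("properties", {}).items() if k != "comment"
        }
        result.append({
            "name": env["name"],
            # Assumption: environment-level properties win on a key clash.
            "properties": {**global_props, **env_props},
            "host": env.get("SYS_CMD", {}).get("host"),
        })
    return result


if __name__ == "__main__":
    sample = json.dumps({
        "properties": {"comment": "global", "common.property": "some global property"},
        "environments": [{
            "name": "env_1",
            "properties": {"env1.property": "some personal property"},
            "SYS_CMD": {"type": "remote", "host": "10.111.111.111"},
        }],
    })
    print(load_environments(sample))
```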

Running tests

One of the goals of creating SMIT was to simplify the procedure for launching integration autotests as much as possible. Let's consider what we ended up with using an example:

On the testing page of a system (System 3 in this example), you can select the systems whose integration you want to check. After you select the required integrations and click the “Start Testing” button, SMIT:

1. forms a queue and launches the corresponding Jenkins jobs one after another;

2. monitors job execution;

3. changes the status of the corresponding issues in Jira:

- If the job completes successfully, the task in Jira is closed automatically, with a link to the Allure report and a comment stating that no defects were found in this integration.

- If the job fails, the task in Jira stays open and waits for a decision from the employee responsible for the integration, who can determine why the tests failed. The person responsible is shown in the integration card.
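The flow above can be sketched as a simple sequential queue. This is an illustration under stated assumptions: `run_job` and `update_issue` are hypothetical callables standing in for the Jenkins and Jira clients, and the status names "Closed" and "Open" are placeholders for whatever the real workflow uses.

```python
from collections import deque
from typing import Callable, Dict, Iterable

# Hypothetical callables standing in for the Jenkins and Jira clients.
RunJob = Callable[[str], bool]            # integration name -> True if the job passed
UpdateIssue = Callable[[str, str], None]  # integration name, new status


def run_integration_tests(
    integrations: Iterable[str], run_job: RunJob, update_issue: UpdateIssue
) -> Dict[str, bool]:
    """Run one job per integration, strictly one after another, and record the outcome."""
    queue = deque(integrations)
    results: Dict[str, bool] = {}
    while queue:
        integration = queue.popleft()
        passed = run_job(integration)  # blocks until the job finishes
        results[integration] = passed
        # A passed job closes the task; a failed one leaves it open for the responsible person.
        update_issue(integration, "Closed" if passed else "Open")
    return results


if __name__ == "__main__":
    statuses: Dict[str, str] = {}
    outcome = run_integration_tests(
        ["System1", "System4"],
        run_job=lambda name: name == "System1",  # pretend only System1 passes
        update_issue=lambda name, status: statuses.update({name: status}),
    )
    print(outcome, statuses)
```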

Conclusion

SMIT was created to minimize the risks of integration testing, but as a team we wanted more. In particular, we wanted a single click to launch autotests against the correct test environment, check everything in the required integration combinations, and open and close the Jira tasks together with their reports. An autotester's utopia: tell the system what to check, then go and have a coffee :)

Let's summarize what we managed to implement:

- Visual release calendar with the ability to display versions of all systems on a specific date;

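- A UI for monitoring environments, test benches and system versions;

- Updating system versions on test benches;

- A UI for running autotests;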

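- Automatic creation of the Epic and Task structure in Jira, with Allure reports attached;

- Automatic creation of release branches in Bitbucket.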

At the moment, the system is undergoing closed beta testing among direct members of the integration testing team. When all the defects found are eliminated and the system stably performs its functions, we will open access to employees of related teams and product owners so that they can independently run our tests and study the result.

Thus, in an ideal scenario, verifying that a system meets the integration requirements takes only a few steps: open the SMIT web interface, update the system through it, tick all the checkboxes, run the tests, and check that they have all passed. The tasks are created automatically, the Allure reports are filled in, and the tasks receive the corresponding statuses.