Hello. This article is for those who are still torn between virtualization platforms, perhaps after reading yet another article in the spirit of "We installed Proxmox and everything is fine, 6 years of uptime without a single outage." After installing one boxed solution or another, questions come up: how do I tweak this so that monitoring is clearer, and that so that backups are under control? Then the time comes when you realize you want something more functional, or you want your system to be transparent rather than a black box, or you want more than a hypervisor and a bunch of virtual machines. This article offers some thoughts and practice based on the OpenNebula platform. I chose it because it is not demanding on resources and its architecture is not that complicated.

As we can see, many cloud providers run on KVM and build their own external tooling to manage the machines. Large hosters clearly write their own tooling for their cloud infrastructure, Yandex for example. Others use OpenStack and build their tooling on top of it: Selectel, Mail.ru. But if you have your own hardware and a small team, you usually pick something ready-made, such as VMware or Hyper-V; there are free and paid licenses, but that is not the topic here. Let's talk about enthusiasts: people who are not afraid to propose and try something new, even though the company has made its position clear: "Who will maintain it after you?" "And then we roll this out to production? Scary." But you can first try these solutions on a test bench, and if everyone likes it, raise the question of further development and use in more serious environments.

Here is also a link to a talk, www.youtube.com/watch?v=47Mht_uoX3A, by an active contributor to this platform.

Some of this article may be superfluous and already obvious to an experienced specialist, and in some places I will not describe everything, since the commands and explanations are easy to find online. This is just my experience with the platform. I hope active users will add in the comments what could be done better and what mistakes I made. Everything was done on a home stand of 3 PCs with different specifications. I also deliberately do not explain how the software works or how to install it. This is only my administration experience and the problems I ran into. Perhaps someone will find it useful when choosing.

So, let's get started. As a system administrator, I care about the following points; without them I am unlikely to use a solution.

1. Installation repeatability

There are plenty of instructions for installing OpenNebula, so that should not be a problem. New features appear from version to version, and they do not always work right away after an upgrade.

2. Monitoring

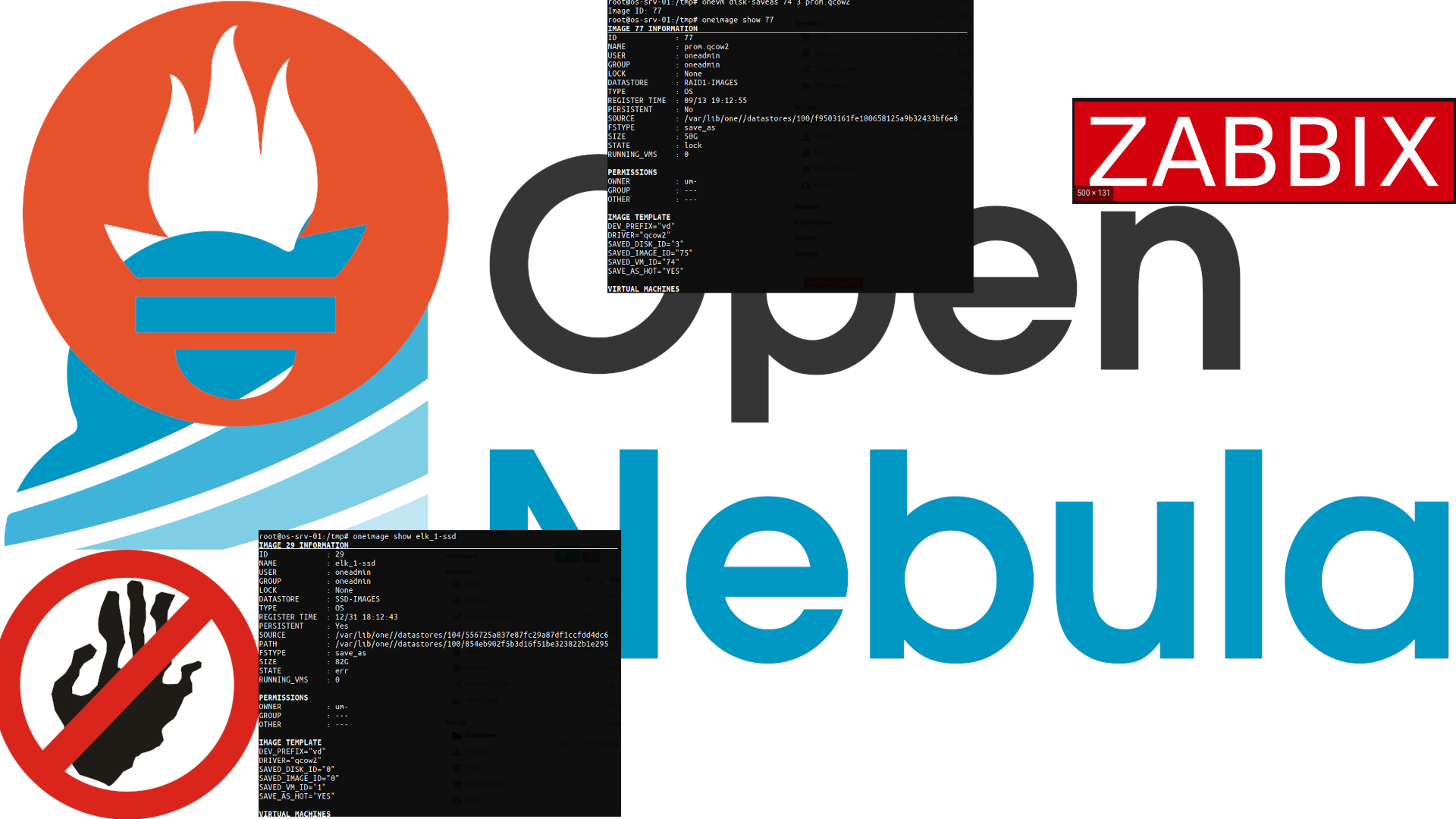

We will monitor the node itself, KVM, and OpenNebula. Fortunately, the tooling already exists. There are many options for monitoring Linux hosts, such as Zabbix or node exporter, whichever you prefer. For now I split it like this: system metrics (temperature where it can be measured, disk array consistency) go through Zabbix, and application metrics go through exporters to Prometheus. For KVM monitoring you can take, for example, the project github.com/zhangjianweibj/prometheus-libvirt-exporter.git and run it under systemd; it works quite well and exposes KVM metrics, and there is a ready-made dashboard at grafana.com/grafana/dashboards/12538 .

For example, here's my file:

/etc/systemd/system/libvirtd_exporter.service

[Unit]

Description=Node Exporter

[Service]

User=node_exporter

ExecStart=/usr/sbin/prometheus-libvirt-exporter --web.listen-address=":9101"

[Install]

WantedBy=multi-user.target

So we have one exporter. We need a second one to monitor OpenNebula itself; I used this one: github.com/kvaps/opennebula-exporter/blob/master/opennebula_exporter

The OpenNebula exporter's output can be exposed through a regular node_exporter using its textfile collector.

In the unit file for node_exporter, change the start command like this:

ExecStart=/usr/sbin/node_exporter --web.listen-address=":9102" --collector.textfile.directory=/var/lib/opennebula_exporter/textfile_collector

Create the directory that the collector reads from:

mkdir -p /var/lib/opennebula_exporter/textfile_collector

Take the bash script linked above and first run it from the console to check that it prints what is needed (if it fails with an error, install xmlstarlet), then copy it to /usr/local/bin/opennebula_exporter.sh

Add a cron job that runs every minute:

*/1 * * * * (/usr/local/bin/opennebula_exporter.sh > /var/lib/opennebula_exporter/textfile_collector/opennebula.prom)

Metrics start to appear; you can scrape them with Prometheus, build graphs, and set up alerts. For example, you can draw a simple dashboard like this in Grafana.

(you can see that here I overcommitted CPU and RAM)
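One detail of the cron approach: when output is redirected straight into the .prom file, node_exporter can scrape it while it is still empty or half-written. A minimal sketch of a workaround, assuming nothing beyond standard shell tools: write to a temporary file in the same directory and rename it into place. The function name `write_prom` is hypothetical and is not part of the exporter script.

```shell
# Write stdin to "$1/$2" atomically: fill a temporary file in the same
# directory, then rename it, so a reader never sees a partial file.
# The temporary name does not end in .prom, so the collector ignores it.
write_prom() {
    dir=$1
    name=$2
    tmp=$(mktemp "$dir/.$name.XXXXXX") || return 1
    if cat > "$tmp"; then
        chmod 644 "$tmp"          # mktemp creates the file as 0600
        mv -f "$tmp" "$dir/$name"
    else
        rm -f "$tmp"
        return 1
    fi
}

# Possible use in the cron job (paths as in the article):
# /usr/local/bin/opennebula_exporter.sh | write_prom /var/lib/opennebula_exporter/textfile_collector opennebula.prom
```

Note that in a pipeline like the one in the comment, a failure of the exporter script itself is not detected; the sketch only protects against partial reads.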

For those who love and use Zabbix, there is github.com/OpenNebula/addon-zabbix

That is all for monitoring; the main thing is that it exists. Of course, you can additionally use the built-in monitoring tools for virtual machines and export data to billing; everyone has their own vision here, and I have not yet looked into it closely.

I have not set up logging yet. The simplest option is to add td-agent to parse the /var/log/one directory with regular expressions. For example, the sunstone.log file fits the nginx regexp, and the other files there show the history of calls to the platform. What is the benefit? For example, we can explicitly track the number of "Error, error" entries and quickly see where and at what level a malfunction occurs.
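Until proper log shipping is in place, the error count mentioned above can be approximated with grep. This is only a rough sketch: the function name `count_errors` and the `ONE_LOG_DIR` variable are hypothetical, and a plain substring match will also count lines where "error" appears in a harmless context.

```shell
# Print the number of lines that mention "error" (case-insensitive).
count_errors() {
    [ -r "$1" ] || { echo "cannot read $1" >&2; return 1; }
    # grep -c prints 0 but exits non-zero when nothing matches,
    # so the exit status is neutralized here.
    grep -ci 'error' "$1" || true
}

# Possible use, with ONE_LOG_DIR pointing at the OpenNebula log directory:
# count_errors "$ONE_LOG_DIR/sunstone.log"
```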

3. Backups

There are also paid, polished projects, for example SEP: wiki.sepsoftware.com/wiki/index.php/4_4_3_Tigon: OpenNebula_Backup. Here we must understand that simply backing up a machine image is not enough, because our virtual machines must work with full integration (the context file, which describes the network settings, the VM name, and custom settings for your applications). So we have to decide what to back up and how. In some cases it is better to copy what is inside the VM itself. And maybe you only need to back up one disk of the machine.

For example, suppose we decided that all machines run on persistent images. Then, after reading docs.opennebula.io/5.12/operation/vm_management/img_guide.html,

we see that we can first export a disk image from our VM:

onevm disk-saveas 74 3 prom.qcow2

Image ID: 77

Then we check where it was saved:

oneimage show 77

/var/lib/one//datastores/100/f9503161fe180658125a9b32433bf6e8

From that path the image file can be copied wherever it is needed.

Also, online I found an interesting talk, and there is an open project as well, but it works only with qcow2 storage.
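The export steps above could be wrapped in a small script. The sketch below is an illustration, not a tested backup tool: the function names are hypothetical, and it assumes that `oneimage show` prints the file location on a line starting with `SOURCE`, which should be checked against your version. It also does not wait for the new image to finish being written before reporting its path.

```shell
# Pull the numeric ID out of the "Image ID: N" line printed by disk-saveas.
parse_image_id() {
    sed -n 's/^Image ID:[[:space:]]*\([0-9][0-9]*\).*$/\1/p'
}

# Pull the path out of the SOURCE line of `oneimage show` output.
# Assumption: the line looks like "SOURCE         : /path".
parse_image_source() {
    sed -n 's/^SOURCE[[:space:]]*:[[:space:]]*//p'
}

# Export one disk of a VM and print where the resulting image file lives.
# Arguments: VM ID, disk ID, name for the new image.
export_disk() {
    image_id=$(onevm disk-saveas "$1" "$2" "$3" | parse_image_id) || return 1
    [ -n "$image_id" ] || return 1
    oneimage show "$image_id" | parse_image_source
}

# Possible use, with the IDs from the article:
# export_disk 74 3 prom.qcow2
```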

But as we all know, sooner or later you want incremental backups. That is harder: perhaps management will allocate money for a paid solution, or you go the other way, accept that the cloud is only there to carve up resources, and build redundancy at the application level by adding new nodes and virtual machines. Yes, I am saying that the cloud should be used purely for running application clusters, while the database runs on another platform or is taken ready-made from a provider, if possible.

4. Ease of use

In this section I will describe the problems I ran into. Take images, for example. As we know, an image can be persistent: when it is attached to a VM, all data is written to that image. If it is non-persistent, the image is copied to the storage and data is written to the copy; this is how templates work. More than once I made trouble for myself by forgetting to set persistent, so a 200 GB image started copying. The problem is that this procedure cannot be reliably canceled; you have to go to the node and kill the running "cp" process.

One of the major drawbacks is that you cannot cancel actions simply through the GUI. Or rather, you cancel them, see that nothing happens, start again, cancel again, and in fact there are now 2 cp processes copying the image.
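Before killing anything on a node, it helps to see which copies are actually running. A minimal sketch, assuming the copies show up as plain `cp` processes as described above; the function name `copy_pids` is hypothetical. It filters `ps` output rather than killing anything itself.

```shell
# Read `ps -eo pid,args` style lines on stdin and print the PIDs of cp
# processes whose command line contains the given string.
copy_pids() {
    awk -v needle="$1" '
        { cmd = $2; sub(/^.*\//, "", cmd) }   # strip any directory from the command
        cmd == "cp" && index($0, needle) > 0 { print $1 }
    '
}

# Possible use on a node:
# ps -eo pid,args | copy_pids /datastores/
```

The printed PIDs can then be inspected and, if they really are the stuck copies, passed to kill by hand.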

Then you understand why OpenNebula gives each new instance a new ID. In Proxmox, for example, you create a VM with ID 101, delete it, and then create ID 101 again. That will not happen in OpenNebula: each new instance gets a new ID, and there is logic to it, for example when cleaning up old data or failed installations.

The same goes for storage: this platform is aimed mostly at centralized storage. There are addons for using local storage, but that is not the topic here. I think someone will eventually write an article about how they managed to use local storage on the nodes successfully in production.

5. Maximum simplicity

Of course, the further you go, the fewer those who will understand you.

On my stand (3 nodes with NFS storage) everything works fine. But if we experiment with cutting the power, for example starting a snapshot and then powering off the node, the database keeps a record that the snapshot exists when in fact it does not (the action was written to the SQL database first, but the operation itself did not succeed). The upside is that creating a snapshot produces a separate file that has a "parent", so if there are problems, and even if nothing works through the GUI, we can take the qcow2 file and recover it separately: docs.opennebula.io/5.8/operation/vm_management/vm_instances.html
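When recovering a snapshot file by hand, it is worth confirming which "parent" it points to. `qemu-img info` reports this on a `backing file:` line; the sketch below only extracts that line from its output. The function name `backing_file` is hypothetical, and the file names in the usage comment are placeholders.

```shell
# Read `qemu-img info` text on stdin and print the backing file, if any.
# The optional "(actual path: ...)" suffix is stripped.
backing_file() {
    sed -n 's/^backing file:[[:space:]]*//p' |
        sed 's/[[:space:]]*(actual path:.*$//'
}

# Possible use:
# qemu-img info snapshot.qcow2 | backing_file
```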

Unfortunately, networking is not so simple. Well, at least it is easier than in OpenStack. I used only VLAN (802.1Q), and it works fine, but if you change the settings in the network template, they are not applied to machines that are already running: you need to remove and re-add the network card, and then the new settings are applied.
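The detach and re-attach step can be done from the CLI with `onevm nic-detach` and `onevm nic-attach`. The sketch below wraps them with a dry-run switch so the commands can be reviewed first. The `run` and `reattach_nic` names, the `DRY_RUN` variable, and the example IDs are all hypothetical, and a real script would likely need to wait for the detach to finish before attaching.

```shell
# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Detach a NIC and attach a fresh one from the same network so the VM
# picks up the network's current settings.
# Arguments: VM ID, NIC ID, network name.
reattach_nic() {
    vm_id=$1
    nic_id=$2
    network=$3
    run onevm nic-detach "$vm_id" "$nic_id" || return 1
    run onevm nic-attach "$vm_id" --network "$network"
}

# Possible use: DRY_RUN=1 reattach_nic 74 0 vlan100
```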

If you still want a comparison with OpenStack, it can be put this way: OpenNebula does not prescribe which technologies to use for data storage, networking, or resource management; each administrator decides what is most convenient.

6. Additional plugins and installations

As we know, the cloud platform can manage not only KVM but also VMware ESXi. Unfortunately, I did not have a pool with vCenter; if anyone has tried it, please write.

Other cloud providers are supported as well (docs.opennebula.io/5.12/advanced_components/cloud_bursting/index.html): AWS, Azure.

I also tried to hook up VMware Cloud from Selectel, but nothing worked. In the end I gave up, because too many factors are involved and there is no point in writing to the hosting provider's technical support.

Also, the new version includes Firecracker, which launches microVMs, something like a KVM wrapper for Docker. It gives even more versatility, security, and performance, since no resources are spent on emulating hardware. The only advantage I see over Docker is that it does not spawn extra processes and does not occupy sockets when this emulation is used, so it could well be used as a load balancer (but that probably deserves a separate article once I have finished all the tests).

7. Positive experience of use and debugging of errors

I want to share my observations about day-to-day work; I described some of them above and want to add more. I am probably not the only one who at first thinks that this is the wrong system and that everything here is held together with crutches: how do people even work with this? But then comes the understanding that everything is quite logical. Of course, you cannot please everyone, and some things need improvement.

Take a simple operation: copying a disk image from one datastore to another. In my case there are 2 nodes with NFS. When I send the image, the copy goes through the OpenNebula frontend, although we are all used to data being copied directly between hosts, as in VMware or Hyper-V. Here the approach and the ideology are different, and in version 5.12 the "migrate to datastore" button was removed: only the machine itself is moved, not the storage, because centralized storage is assumed.

Next, there is a popular error with various causes: "Error deploying virtual machine: Could not create domain from /var/lib/one//datastores/103/10/deployment.5". Below are the top things to check.

- Permissions on the image for the oneadmin user;

- Permissions for the oneadmin user to run libvirtd;

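- Whether the datastore is accessible from the node;

- The network: the bridge name must match between the frontend and the node, for example the vlan is configured with br0 on the frontend while the node has bridge0.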

The system datastore stores the metadata for your VM. If you start a VM with a persistent image, the VM needs access to the configuration originally created on the storage where you created the VM; this is very important. So when moving a VM to another datastore, double-check everything.
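Two of the checks from the list above are easy to script: whether the expected bridge exists on the node and whether a given file is readable by the user running the check. The sketch below is only a starting point; the function names and the `SYS_NET_DIR` variable are hypothetical, and it would need to be run as oneadmin on the node for the read check to be meaningful.

```shell
# Directory where Linux exposes network interfaces; overridable for testing.
SYS_NET_DIR=${SYS_NET_DIR:-/sys/class/net}

# Succeeds if a network interface (for example a bridge) with this name exists.
bridge_exists() {
    [ -e "$SYS_NET_DIR/$1" ]
}

# Succeeds if the current user can read the given file.
can_read() {
    [ -r "$1" ]
}

# Report every failed check instead of stopping at the first one.
# Arguments: bridge name, path to an image or deployment file.
preflight() {
    rc=0
    bridge_exists "$1" || { echo "missing bridge: $1"; rc=1; }
    can_read "$2"      || { echo "cannot read: $2"; rc=1; }
    return $rc
}

# Possible use on a node: preflight br0 /var/lib/one//datastores/103/10/deployment.5
```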

8. Documentation, community. Further development

What remains is good documentation, a community, and most importantly that the project stays alive in the future.

Here, in general, everything is quite well documented, and even with only the official source it will not be difficult to install the platform and find answers to questions.

The community is active and publishes many ready-made solutions that you can use in your installations.

At the moment, some of the company's policies have changed since 5.12 (forum.opennebula.io/t/towards-a-stronger-opennebula-community/8506/14), and it will be interesting to see how the project develops. At the beginning I deliberately mentioned some of the vendors, the solutions they use, and what the industry offers. Of course, there is no clear answer on what to use. But for small organizations, maintaining a small private cloud may not be as expensive as it sounds. The main thing is to know exactly why you need it.

In the end, whatever you choose as a cloud system, you should not stop at one product. If you have time, it is worth looking at other, more open solutions.

There is a good chat, t.me/opennebula, where people actively help and do not send you off to Google for the answer. Join us.