Photo source

The pygmy white-toothed shrew, the smallest mammal by mass. Inside is a small, complete, complex brain that can, in principle, be mapped.

The short answer: it is possible, but not completely and not very accurately. That is, we still cannot copy its consciousness, but we are closer to that than ever. Live another twenty years and perhaps your brain can be backed up too.

To get closer to digitizing consciousness and to this exotic kind of immortality, we first need to understand living neural networks. Reverse engineering them shows how thinking (computation) can be organized in well-optimized systems.

Sixty years ago, on September 13, 1960, scientists convened the first symposium of biologists and engineers to figure out what distinguishes a complex machine from an organism, and whether there is any difference at all. The new science was called bionics, and its goal was to apply the methods of biological systems to engineering and new technologies. Biosystems were seen as highly efficient prototypes for new technology.

Military neuroanatomist Jack Steele was one of the people who markedly influenced later progress in technology, including in AI, where fields such as neuromorphic engineering and bio-inspired computing emerged. Steele was a physician versed in psychiatry, was fond of architecture, could fly an airplane and repaired his own equipment; in other words, he was a rather good applied engineer. Steele's scientific work served as the prototype for the script of the movie Cyborg. So, with some stretch, you could call him the great-grandfather of the Terminator. And where there is a Terminator, there is Skynet, as you know.

This post is based on materials from the forthcoming book by our colleague Sergei Markov, "Hunting the Electric Sheep: A Big Book of Artificial Intelligence."

In general, the relationship between the physiological processes in the human nervous system and mental phenomena is one of the most intriguing questions of modern science. Imagine that a secret computer processor has fallen into your hands and you want to copy it. You can slice it into thin layers and copy it carefully, layer by layer. But how exact does the copy need to be in order to be fully or at least partially functional? It is extremely difficult to answer such a question without experiments.

Bionics, or biomimetics, copied the principles of biosystems or took them as a starting point. Just as Leonardo da Vinci watched birds in flight and designed an ornithopter (unfortunately, no suitable materials or energy sources existed at the time), so in the twentieth century we copied ever more complex systems. RFID chips, medical adhesives, hydrophobic structures, nanosensors: all of this and much more was created from biological prototypes. In some cases, the very existence of a prototype in nature showed that a technology was possible in principle. If plants can synthesize sugars and starch from carbon dioxide and water, then a device that performs the same function can be built.

And if evolution optimizes systems for adaptation to the environment, we can optimize them for our own tasks. From the point of view of evolution, the human brain must consume little energy, must be resistant to physical impact (you would hardly enjoy losing your memory completely because an apple fell on your head), the baby's head must pass freely through the birth canal, and so on.

When we build a device on the same principles, these constraints do not apply to us, and neither do hundreds of others.

By the way, our ancestors often held a "terminal" theory of thinking: they assumed that thought takes place somewhere remote (in the soul) and is transmitted as commands through some organ. Aristotle and his colleagues believed that the soul's terminal was the heart. But the experiments of ancient physicians were limited by the technical level of their civilization. This continued roughly until 1791, when Luigi Galvani discovered that electric current makes muscles contract. His experiments gave rise to research into bioelectric phenomena. At some point, Richard Caton decided to measure the potentials of everything around him and began opening up animals for his measurements. He found that the outer surface of the gray matter was charged more positively than the deep structures of the brain. He also noted that electrical currents in the brain appear to be related to its underlying function: "When I showed the raisins to the monkey, but did not give them, there was a slight decrease in the current." Thanks to him, non-invasive electroencephalography (that is, one that does not require penetrating the body's external barriers) eventually came into being. In 1890, the Polish physiologist Adolf Beck discovered low-voltage, high-frequency fluctuations of the electrical potential between two electrodes placed on the occipital cortex of a rabbit's brain.

At that point it became clear to many scientists that the brain is fundamentally knowable. Perhaps it is not a "terminal" for a divine soul at all, but an entirely understandable electrical machine, only a very complex one. Or at least it contains such an engineering component and can be studied. Caton laid the groundwork for the later appearance of the EEG. Modern electroencephalography was created by Berger, although he had predecessors such as Pravdich-Neminsky and others.

Two years before Caton's experiments, in 1873, the Golgi method (named after its author, the Italian physiologist Camillo Golgi) was developed. It makes it possible to stain individual neurons (although the word "neuron" was not used until 1891).

Before Golgi's discovery, a concept proposed by the German histologist Joseph von Gerlach was popular in biology. He believed that the fibers emerging from different cell bodies were joined into a single network called the "reticulum". Gerlach's ideas were popular because, unlike the heart or the liver, the brain and nervous system could not be divided into separate structural units: although many researchers of the time had described nerve cells in tissue, the relationship between nerve cells and the axons and dendrites connecting them was unclear. The main reason was the limitations of microscopy. Thanks to his discovery, Golgi saw that the branched processes of one cell body do not merge with those of others. He did not, however, reject Gerlach's concept, suggesting that the long, slender processes were probably connected into one continuous network.

This resembled what mechanics and electricians already knew. The mechanistic approach triumphed. True, it was still decidedly unclear how it all worked, or at least how it might work.

Fourteen years later, in 1887, the Spanish neuroanatomist Santiago Ramon y Cajal proved that the long, thin processes emerging from the cell bodies are not joined into a single network at all. The nervous system, like all other living tissues, consists of separate elements. In 1906, Ramon y Cajal and Camillo Golgi received the Nobel Prize in Physiology or Medicine for their work on the structure of the nervous system. Ramon y Cajal's sketches, about 3,000 of which have survived, remain among the most detailed descriptions of the structural diversity of the brain and nervous system.

Sketch by Santiago Ramon y Cajal

Further research showed, in ever greater detail, that we can in principle figure out how we think at an engineering level. This means that we can do applied biomimetics.

Although it had been known since Galvani's day that nerves could be excited electrically, the stimuli used to excite them were quite difficult to control. What strength and duration should the signal have? And how can the relationship between stimulus and excitability be explained by the underlying biophysics? These questions were asked at the turn of the 19th and 20th centuries by the pioneers of research into nervous excitability: Jan Leendert Hoorweg (1841-1919; his surname is sometimes transliterated inaccurately), Jules Adolphe Georges Weiss (1851-1931) and Louis Lapicque (1866-1952). In his first study, published in 1907, Lapicque presented a model of the nerve and compared it with data obtained by stimulating a frog's nerve. This model, based on a simple capacitor circuit, served as the basis for later models of the neuron's cell membrane.

To give you a sense of how hard science was in those years, a couple of examples are worth mentioning. The stimulus Lapicque used was a short electrical impulse delivered through two electrodes designed and built specifically for this purpose. Ideally, stimulation experiments would use current pulses, but suitable current sources were not easy to build. Instead, Lapicque used a voltage source: a battery. The voltage was regulated with a voltage divider, which was a long wire with a slider, similar to a modern potentiometer. It was also difficult to obtain accurate pulses lasting only a few milliseconds. The instrument invented for this a little earlier was called a "rheotome". The device consisted of a pistol with a percussion-cap lock whose bullet first cut one jumper, switching on the current in the stimulating circuit, and then cut a second jumper further along its path, breaking the circuit.

The 1907 work led Lapicque to a number of theoretical considerations. He postulated that the activation of a chain of nerve cells depends on the sequential electrical stimulation of each cell by the impulse, or action potential, of the previous one. Lapicque proposed a theory of neural processes that resembled tuning or resonance between oscillating radio circuits.

In 1943, Lapicque's book La machine nerveuse [The Nervous Machine] was published, summarizing the scientist's many years of research.

Publisher Paris: Maison parisienne Neurdein (ND. Phot.), Sd

In discussions of the significance of Lapicque's results for computational neurobiology, one often encounters the claim that Lapicque created and studied the first neuron model, called "integrate-and-fire". In this model, the algorithm of a neuron can be described as follows: when a current is applied to the neuron's input, the potential difference (voltage) across the membrane increases over time until it reaches a certain threshold, at which point the output potential changes abruptly and the voltage is reset to the resting potential, after which the process can repeat over and over. In fact, in Lapicque's time the connection between nerve excitation and the generation of a nerve impulse was still unclear, and the scientist put forward no hypotheses either about this or about how the membrane returns to its original state after the impulse is emitted.
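The algorithm described above can be sketched in a few lines of Python. This is only an illustration of the textbook integrate-and-fire idea, not Lapicque's own formulation (as noted, he did not describe the reset). The function name and all parameter values (a 1 nF membrane capacitance, a resting potential of -70 mV, a threshold of -50 mV) are assumptions chosen to keep the arithmetic simple.

```python
def integrate_and_fire(currents_na, dt_ms=1.0, capacitance_nf=1.0,
                       v_rest_mv=-70.0, v_threshold_mv=-50.0):
    """Simulate a non-leaky integrate-and-fire neuron (illustrative values).

    currents_na: input current in nanoamperes, one value per time step.
    Returns (voltages_mv, spike_times_ms).
    """
    v = v_rest_mv
    voltages_mv, spike_times_ms = [], []
    for step, current in enumerate(currents_na):
        # The membrane behaves like a capacitor: dV = I * dt / C
        # (nA * ms / nF gives millivolts).
        v += current * dt_ms / capacitance_nf
        if v >= v_threshold_mv:
            # Threshold reached: record a spike and reset to the resting potential.
            spike_times_ms.append((step + 1) * dt_ms)
            v = v_rest_mv
        voltages_mv.append(v)
    return voltages_mv, spike_times_ms


# A constant 0.5 nA input for 200 ms.
voltages, spikes = integrate_and_fire([0.5] * 200)
print(spikes)
```

With a constant input, the neuron fires at regular intervals: the stronger the current, the sooner the threshold is reached and the higher the firing rate.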

Further development of Lapicque's ideas within computational neurobiology led to many more accurate and complete models of biological neurons. These include the leaky integrate-and-fire model, the fractional-order leaky integrate-and-fire model, the Galves-Löcherbach model, the exponential integrate-and-fire model, and many others. The research of Sir Alan Lloyd Hodgkin (1914-1998) and Sir Andrew Fielding Huxley (1917-2012, not to be confused with the writer) was recognized with the 1963 Nobel Prize.

Source

The longfin inshore squid (Doryteuthis pealeii), like other squids, is an extremely convenient model organism for neurophysiologists because of its giant axons. The squid giant axon is a very large axon (usually about 0.5 mm in diameter, but sometimes up to 1.5 mm) that controls part of the squid's jet propulsion system, which the animal uses mainly for short but very fast movements through the water. Between the squid's tentacles is a siphon through which water can be rapidly expelled by contractions of the muscles of the body wall. This contraction is triggered by action potentials in the giant axon. Since electrical resistance is inversely proportional to a conductor's cross-sectional area, action potentials propagate faster in a larger axon than in a smaller one. The increased diameter of the giant axon was therefore preserved by evolution, because it made faster muscle responses possible. This was a real gift for Hodgkin and Huxley, who were interested in the ionic mechanism of action potentials: thanks to the axon's large diameter, it was possible to insert voltage-clamp electrodes into its lumen!

Source

The Hodgkin-Huxley model is a system of nonlinear differential equations that approximately describes the electrical characteristics of excitable cells. It served as the basis for more detailed research and was a major breakthrough in twentieth-century neurophysiology.
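For reference, the system is usually written in the following standard textbook form. The notation is the conventional one and is not taken from this post: $V$ is the membrane potential, $C_m$ the membrane capacitance, $I_{\mathrm{ext}}$ the applied current, $\bar{g}$ the maximum conductances, $E$ the reversal potentials, and $m$, $h$, $n$ the gating variables with voltage-dependent rates $\alpha$ and $\beta$.

```latex
C_m \frac{dV}{dt} = I_{\mathrm{ext}}
  - \bar{g}_{\mathrm{Na}}\, m^3 h \,(V - E_{\mathrm{Na}})
  - \bar{g}_{\mathrm{K}}\, n^4 \,(V - E_{\mathrm{K}})
  - \bar{g}_{L}\,(V - E_{L})

\frac{dx}{dt} = \alpha_x(V)\,(1 - x) - \beta_x(V)\,x,
  \qquad x \in \{m, h, n\}
```

The first equation treats the membrane as a capacitor charged by the sodium, potassium and leak currents. The remaining three describe how the ion channels open and close as the voltage changes, which is what makes the system nonlinear.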

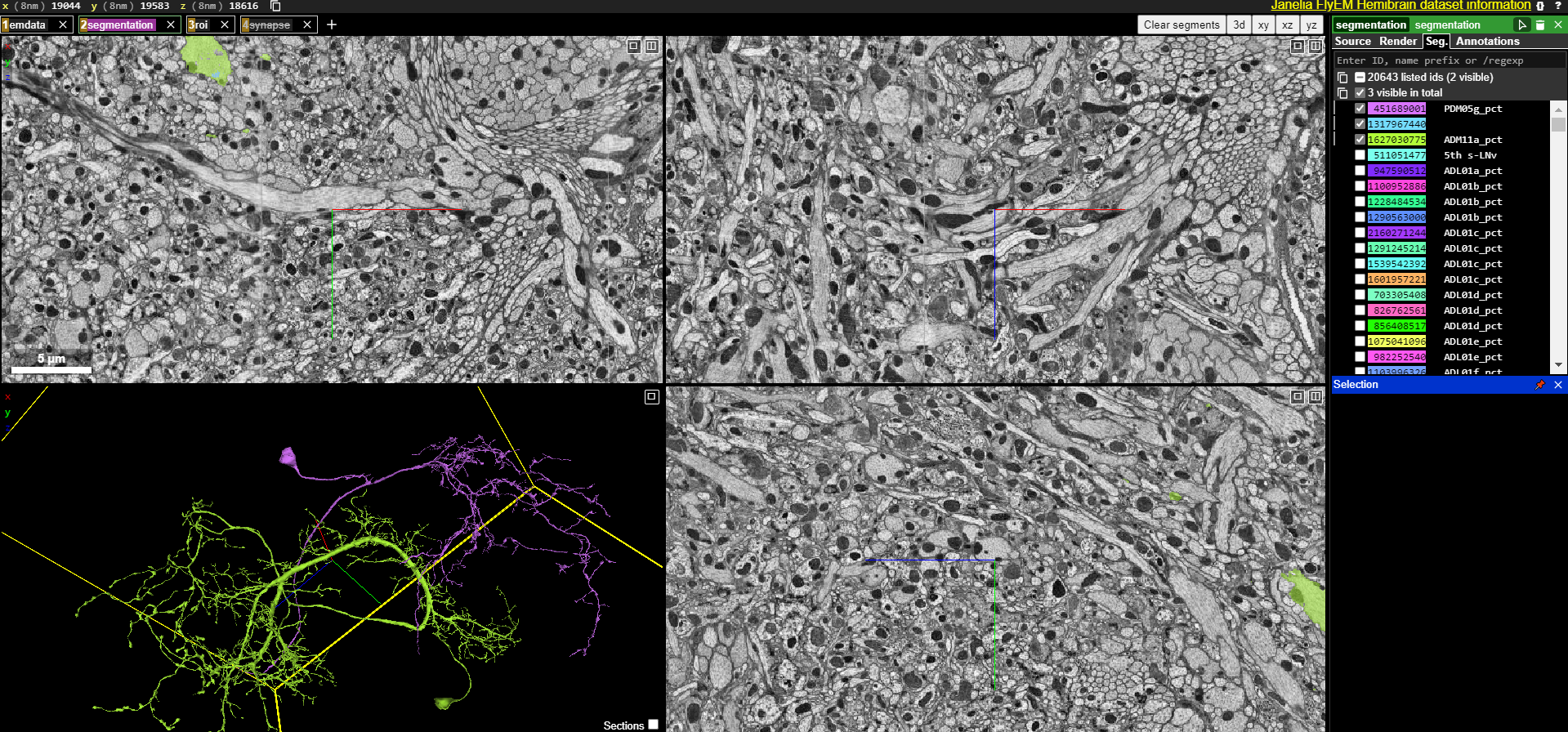

One of the more interesting projects is being carried out by scientists from Sebastian Seung's laboratory. Its immediate goal was to map the connections between neurons in the retina of a mouse named Harold. The retina was chosen as a model object for testing the technologies needed to reach a long-term scientific goal: a complete description of the human brain connectome. The mouse's brain was removed from the skull and sliced into thin layers.

The resulting sections were imaged with an electron microscope. When the laboratory staff realized that reconstructing the connection map of a single neuron takes about fifty hours of a specialist's time, and that mapping a mouse retina would take a group of one hundred scientists almost two hundred years, it became clear that a fundamentally different solution was needed. And one was found. The laboratory created the online game EyeWire, in which players compete to color in photographs of slices of mouse brain.

In 2014, two years after the launch of EyeWire, the laboratory staff made their first discovery and reported it in the journal Nature. The scientists worked out exactly how mammals detect motion. When light hits the photoreceptor cells, they pass a signal to bipolar cells, then to amacrine cells and finally to ganglion cells. The scientists analyzed 80 starburst amacrine cells (29 of which EyeWire players helped describe) and the bipolar cells connected to them. They noticed that different types of bipolar cells connect to amacrine cells differently: bipolar cells of one type are located far from the soma (body) of the starburst cell and transmit the signal quickly, while cells of another type are located close to it but transmit the signal with a delay.

If a stimulus in the field of vision moves away from the soma of the starburst amacrine cell, the "slow" bipolar cell is activated first and the "fast" one second. Because of the delay, the signals from both types of cells then reach the starburst amacrine cell at the same time, and it emits a strong signal and passes it on to the ganglion cells. If the stimulus moves toward the soma, the signals from the different types of bipolar cells do not "meet", and the signal from the amacrine cell is weak.
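The timing argument above can be illustrated with a toy model. This is a sketch under invented assumptions, not the model from the Nature paper: the positions of the two bipolar inputs, the stimulus speed, the 60 ms delay of the "slow" cell and the Gaussian shape of each input are all hypothetical values, chosen so that the delay exactly compensates for the travel time between the two inputs.

```python
import math


def summed_peak(arrival_a, arrival_b, sigma=10.0, t_max=200):
    """Peak of two summed Gaussian-shaped inputs, sampled once per millisecond."""
    def bump(t, center):
        return math.exp(-((t - center) ** 2) / (2 * sigma ** 2))
    return max(bump(t, arrival_a) + bump(t, arrival_b) for t in range(t_max + 1))


def amacrine_response(direction, x_near=20.0, x_far=80.0, speed=1.0,
                      delay_slow=60.0, delay_fast=0.0):
    """Toy response of a starburst amacrine cell to a moving stimulus.

    The "slow" bipolar input sits near the soma (x_near, in micrometers),
    the "fast" one far from it (x_far). Speed is in micrometers per
    millisecond, delays in milliseconds. All values are hypothetical.
    """
    if direction == "outward":
        # The stimulus starts at the soma and passes the near input first.
        t_near, t_far = x_near / speed, x_far / speed
    else:
        # The stimulus starts beyond the far input and moves toward the soma.
        start = x_near + x_far
        t_far, t_near = (start - x_far) / speed, (start - x_near) / speed
    return summed_peak(t_near + delay_slow, t_far + delay_fast)


outward = amacrine_response("outward")
inward = amacrine_response("inward")
print(f"outward: {outward:.2f}, inward: {inward:.2f}")
```

For outward motion the two inputs arrive together and add up; for inward motion they arrive far apart and the peak is only as high as a single input. The same "delay and compare" idea underlies many classical models of motion detection.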

The data labeled by the players was used to train machine learning models that can then do the coloring on their own. There is a certain irony in the fact that these models are based on convolutional neural networks (we will discuss them in detail a little later), which were themselves created under the influence of scientific data obtained from studying the visual cortex of the brain.

On April 2, 2013, the BRAIN Initiative was launched. The first brick in its foundation was an article by Paul Alivisatos that outlined experimental plans for a more modest project, described methods that could be used to build a "functional connectome", and listed the technologies that would need to be developed along the way. The plan was to move from worms and flies to larger biosystems, in particular the pygmy white-toothed shrew. It is the smallest known mammal by body mass, and its brain contains only about a million neurons. From shrews it will be possible to move on to primates and, at the final stage, to humans.

The first connectome of a living creature, the nematode C. elegans, was built back in 1986 by a group of researchers led by the biologist Sydney Brenner (1927-2019) from Cambridge. Brenner and his colleagues carefully cut the millimeter-long worms into thin slices, photographed each section with a film camera mounted on an electron microscope, and then manually traced all the connections between neurons in the images. However, C. elegans has only 302 neurons and about 7,600 synapses. In 2016, a team of scientists from Dalhousie University in Canada repeated their colleagues' feat for the larva of the tunicate Ciona intestinalis, whose central nervous system turned out to consist of 177 neurons and 6,618 synaptic connections. It should be noted, however, that the methods used to construct these connectomes do not scale to large nervous systems. Researchers did not seriously consider much larger projects until 2004, when the physicist Winfried Denk and the neuroanatomist Heinz Horstmann proposed using an automated microscope to section and image the brain, and software to collect and combine the resulting images.

In 2019, the journal Nature published a paper by Dr. Scott Emmons with a detailed report on reconstructing the connectome of the nematode Caenorhabditis elegans using a new method. A year earlier, a group of scientists led by Zhihao Zheng from Princeton University completed a scan of the Drosophila brain, which consists of approximately 100,000 neurons. The system developed by Zheng and his colleagues made it possible to pass more than 7,000 ultrathin sections of the fly's brain, each about 40 nm thick, through a transmission scanning electron microscope; the resulting images totaled 40 trillion pixels.

In April 2019, staff at the Allen Institute for Brain Science in Seattle celebrated reaching the final milestone in a project to map one cubic millimeter of the mouse brain, with its 100,000 neurons and one billion connections between them. To process a sample the size of a mustard seed, the microscopes ran continuously for five months, collecting over 100 million images from 25,000 sections of the visual cortex. The software developed by the institute's scientists then took about three months to combine the images into a single three-dimensional array of 2 petabytes. All the images of our planet collected over more than 30 years by the Landsat missions occupy only about 1.3 petabytes, which makes the mouse brain scans practically "the whole world in a grain of sand."

The ultimate goal, a nanoscale connectome of the human brain, is still a long way off. The number of neurons in it is comparable to the number of stars in the Milky Way (about 10^11). With today's imaging technology, dozens of microscopes working around the clock would need thousands of years to collect the necessary data. But advances in microscopy, together with more powerful computers and image analysis algorithms, have pushed connectomics forward so quickly that it surprises the researchers themselves. “Five years ago, it was too ambitious to think about a cubic millimeter,” says Reid. Today, many researchers believe that a complete map of the mouse brain, which is about 500 cubic millimeters in volume, will be possible within the next decade. “Today, mapping the human brain at the synaptic level may seem incredible. But if progress continues at the same pace, both in computing power and in scientific methods, another thousandfold increase in capabilities no longer seems inconceivable to us.”
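To get a feel for these numbers, here is a back-of-the-envelope calculation in Python. It uses only the figures quoted above and rests on one loud assumption: that the amount of data grows linearly with the volume of tissue (for the mouse) or with the number of neurons (for the human brain). Real projects will differ, so treat the results as orders of magnitude only.

```python
PETABYTE = 10 ** 15  # bytes

# Figures quoted in the text for the one-cubic-millimeter sample.
sample_bytes = 2 * PETABYTE
sample_neurons = 100_000
sample_images = 100_000_000
sample_sections = 25_000

mouse_brain_volume_mm3 = 500   # the sample itself is 1 cubic millimeter
human_brain_neurons = 10 ** 11

# Average number of images taken per section.
images_per_section = sample_images // sample_sections

# ASSUMPTION: data volume scales linearly with tissue volume.
mouse_brain_bytes = sample_bytes * mouse_brain_volume_mm3

# ASSUMPTION: data volume scales linearly with the number of neurons.
human_scale_factor = human_brain_neurons // sample_neurons
human_brain_bytes = sample_bytes * human_scale_factor

print(f"images per section: {images_per_section}")
print(f"whole mouse brain:  {mouse_brain_bytes // PETABYTE} PB")
print(f"human brain:        {human_brain_bytes // PETABYTE} PB")
```

Under these assumptions, a whole mouse brain comes to about 1,000 petabytes (one exabyte), and a human brain to about two million petabytes, a million times more than the sample that took five months to image.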

Source

The BRAIN Initiative is not the only large-scale program in this area. Scientists from the Blue Brain Project and the Human Brain Project are also working on a functional model of the rat brain (with an eye to the human brain). The China Brain Project is also moving forward.

Now that you understand how complex these biological prototypes are, we can move on to the engineering approach and gradually begin discussing how their principles are applied in modern computing. But more on that next time. Or, in much more detail, both this part and the ones that follow can be found in Sergei Markov's book "Hunting the Electric Sheep: A Big Book of Artificial Intelligence", which the publishing house "Alpina Non-Fiction" is preparing for publication. The book cannot be bought yet, but posts based on its materials can already be read. And in general, oulenspiegel is a very cool specialist.