At first glance the story is simple: a storage system works well for its first three years, the fourth year, on extended warranty, is more or less normal, and in the fifth the outdated system is replaced with a new one. Vendors squeeze money out of you by raising support prices and charging for all sorts of extra features, such as VDI support. Can this cycle be broken? Possibly.

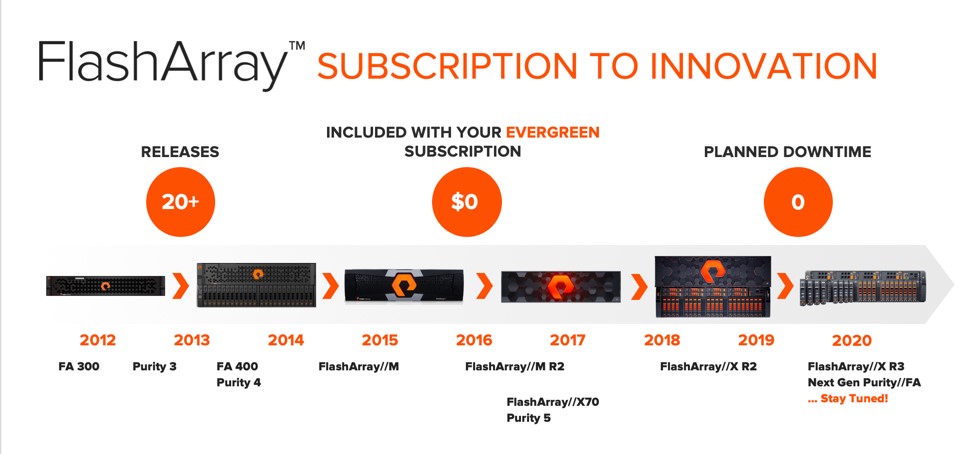

The company entered the market with an intriguing proposal: the hardware always works, it always works fast, support costs the same every year, and all features are available from day one. In other words, they took the box and periodically replace the components inside it, at roughly the rate those components become obsolete. The controllers are replaced every three years, and old disks can be swapped for newer ones. As a result, the rack space the storage system occupies can not only grow but also shrink, while capacity and performance increase.

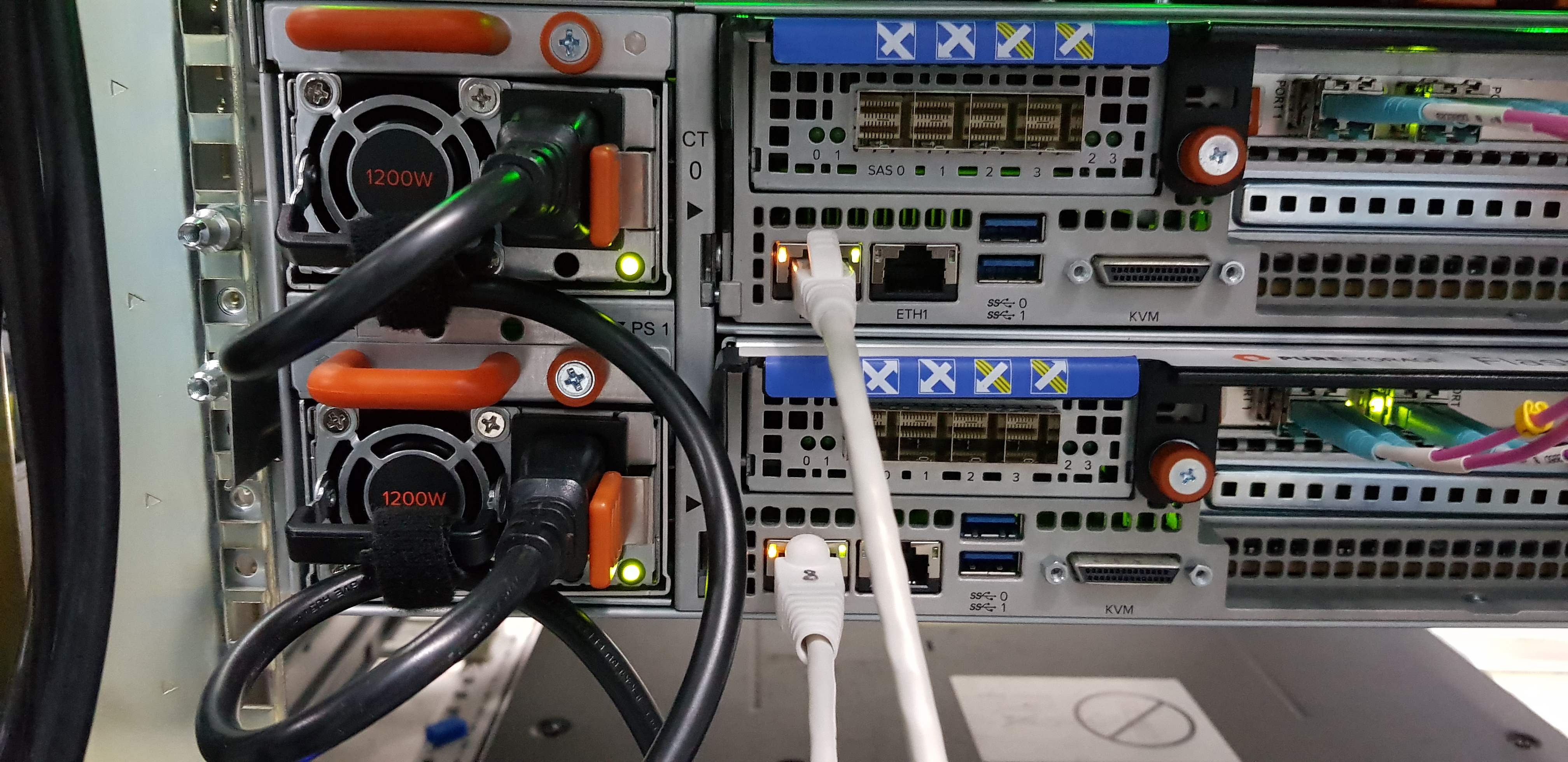

The first thing you see in the rack is the handles bearing the model name. These are what you can, and are meant to, use to pull the controllers out of the array.

This is done without shutting the system down, while it keeps making money, and the performance headroom is large enough that banking systems do not slow down during the replacement. To achieve this, they had to write their own file system (more precisely, an analogue of RAID), build a cluster inside the box and make a few other improvements, throwing out the overhead inherited from hard drives along the way.

Let's see what came of it. We'll start with the architecture.

To begin with, the procedure for working with the array does not involve power buttons at all. You won't need them. To shut the array down, you simply pull the cables out of the PDU.

Pure Storage architecture

The company started by developing, from scratch, a very good architecture tailored to flash (NVMe since 2017), along with efficient deduplication and compression algorithms. The reasoning was as follows: at the time, the market offered hard-drive arrays, hybrid systems and all-flash SSD arrays. Flash was expensive and disk was slow. So they broke into the market with flash arrays priced at the total cost of ownership of disk arrays.

Here is what they did:

- They wrote their own operating system for the disks. Its main feature is fast data compression before writing, followed by post-processing with powerful deduplication, which packs the data even more densely and precisely.

- They used only flash drives (now strictly NVMe) and powerful compute hardware.
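The write path described above (compress inline, deduplicate afterwards) can be sketched as a toy model. This is not Pure Storage's actual algorithm, which is proprietary; it is a minimal illustration under stated assumptions: `zlib` stands in for the inline compressor, SHA-256 content hashes stand in for the dedup fingerprint, and the `Volume` class and its methods are hypothetical names.

```python
import hashlib
import zlib


class Volume:
    """Hypothetical toy write path: compress inline, deduplicate later in a background pass."""

    def __init__(self):
        self.log = []  # physical segments: (sha256 of uncompressed data, compressed bytes)
        self.map = {}  # logical block address -> index into self.log

    def write(self, lba, data):
        # Inline step: a fast compression level and no duplicate lookup on the write path.
        digest = hashlib.sha256(data).digest()
        self.log.append((digest, zlib.compress(data, 1)))
        self.map[lba] = len(self.log) - 1

    def read(self, lba):
        return zlib.decompress(self.log[self.map[lba]][1])

    def dedup_pass(self):
        # Post-processing: rewrite the log keeping one copy per distinct content hash;
        # segments no longer referenced by any LBA (overwritten data) are dropped as well.
        new_log, by_digest = [], {}
        for lba, idx in list(self.map.items()):
            digest, blob = self.log[idx]
            if digest not in by_digest:
                by_digest[digest] = len(new_log)
                new_log.append((digest, blob))
            self.map[lba] = by_digest[digest]
        self.log = new_log

    def physical_bytes(self):
        return sum(len(blob) for _, blob in self.log)


vol = Volume()
page = b"customer record " * 256  # 4 KiB of repetitive data
for lba in range(100):
    vol.write(lba, page)
print(vol.physical_bytes())  # 100 compressed copies
vol.dedup_pass()
print(vol.physical_bytes())  # a single compressed copy
```

Deferring the dedup merge keeps the write path short, which matches the idea of doing cheap work inline and the expensive work on spare CPU later.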

The first deployments were for VDI environments, since that data compresses very well. Deduplication and compression reduced the space used six- to ninefold; that is, with all the advantages of all-flash, prices dropped by roughly an order of magnitude. The economic model also won me over: a fixed support cost and no need to replace the hardware. Then I saw the first cases where two racks were replaced with a three- or six-unit system, but I still did not believe this hardware would be used anywhere beyond VDI.
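The price argument is simple arithmetic: the cost per logical (host-visible) terabyte is the cost per physical terabyte divided by the data-reduction ratio. The sketch below uses hypothetical prices in arbitrary units, not real quotes. It assumes disk data is stored without reduction, and the function names are illustrative.

```python
def cost_per_logical_tb(cost_per_physical_tb, reduction_ratio):
    """Price of one TB of host-visible data after compression and deduplication."""
    if reduction_ratio <= 0:
        raise ValueError("reduction ratio must be positive")
    return cost_per_physical_tb / reduction_ratio


def break_even_ratio(flash_cost_per_tb, disk_cost_per_tb):
    """Reduction ratio at which flash matches disk per logical TB (disk data stored as-is)."""
    return flash_cost_per_tb / disk_cost_per_tb


# Hypothetical prices in arbitrary units.
flash, disk = 900.0, 150.0
for ratio in (6, 9):
    print(ratio, cost_per_logical_tb(flash, ratio))
print("break-even:", break_even_ratio(flash, disk))
```

With these made-up inputs, a 6:1 ratio already puts flash on par with disk per logical terabyte, and 9:1 makes it cheaper.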

And then LinkedIn started storing data on this hardware. AT&T joined. Top US banks and telecoms also bought it for production.

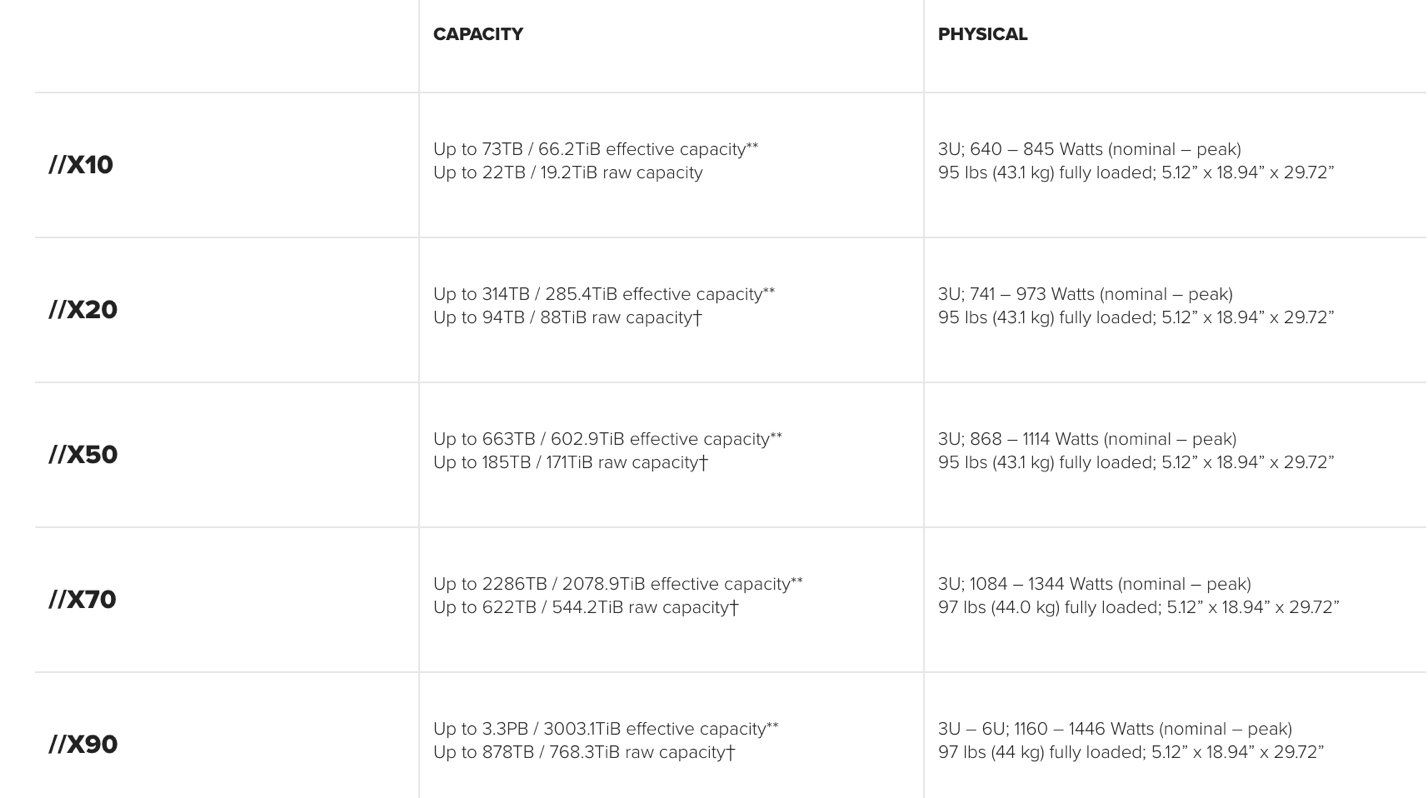

It turned out that the compression algorithms suit development and testing environments quite well. After SSDs were replaced with NVMe, the array unexpectedly began competing for conventional transactional databases in the banking segment. The reason is that the array proved fast and reliable thanks to its "at any moment we can lose any two flash modules" architecture. Then a flash array on cheaper QLC chips came out, with a response time of 2-4 ms instead of the 1 ms of the top models, and I began to see it displacing the likes of VNX and Compellent. It became clear that this hardware is quite competitive.
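The "lose any two flash modules" property is what an N+2 layout provides. Pure's actual on-flash layout is not public, so the following is only a hedged illustration using a textbook dual-parity scheme: a plain sum parity P and a position-weighted parity Q, as in RAID 6. For simplicity it does arithmetic modulo the prime 257 instead of the GF(2^8) arithmetic that real RAID 6 uses. It rebuilds any two lost data symbols in a stripe. A lost parity symbol would simply be recomputed with `encode`. It requires Python 3.8+ for `pow(x, -1, m)`.

```python
from typing import List, Optional, Tuple

MOD = 257  # prime modulus: byte values 0..255 fit, a parity symbol may reach 256
G = 3      # primitive root mod 257, so G**i differs for every stripe position i < 256


def encode(data: List[int]) -> Tuple[int, int]:
    """Compute the two parity symbols (P, Q) for one stripe of data symbols."""
    p = sum(data) % MOD
    q = sum(pow(G, i, MOD) * d for i, d in enumerate(data)) % MOD
    return p, q


def recover(data: List[Optional[int]], p: int, q: int) -> List[int]:
    """Rebuild up to two lost data symbols (marked None) from P and Q."""
    lost = [i for i, d in enumerate(data) if d is None]
    if len(lost) > 2:
        raise ValueError("an N+2 stripe survives at most two lost symbols")
    # What the lost symbols must still contribute to each parity.
    p_res = (p - sum(d for d in data if d is not None)) % MOD
    q_res = (q - sum(pow(G, i, MOD) * d for i, d in enumerate(data) if d is not None)) % MOD
    out = list(data)
    if len(lost) == 1:
        out[lost[0]] = p_res  # one loss: the sum parity alone is enough
    elif len(lost) == 2:
        a, b = lost
        ga, gb = pow(G, a, MOD), pow(G, b, MOD)
        # Solve d_a + d_b = p_res and ga*d_a + gb*d_b = q_res (mod 257).
        d_a = (q_res - gb * p_res) * pow((ga - gb) % MOD, -1, MOD) % MOD
        out[a] = d_a
        out[b] = (p_res - d_a) % MOD
    return out


stripe = [10, 200, 255, 0, 77, 3]  # six data symbols, e.g. one from each module
p, q = encode(stripe)
damaged = list(stripe)
damaged[1] = damaged[4] = None  # two modules pulled at once
print(recover(damaged, p, q) == stripe)
```

The cost of this tolerance is two parity symbols per stripe, so a wide stripe keeps the capacity overhead small.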

Naturally, the cost per TB will remain high where data is incompressible: encrypted data, archives, video streams (video surveillance) and image libraries. Still, such deployments do sometimes happen when a client needs high performance. I know of a case where video (seemingly already-compressed data) was compressed by a further 10%.
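Why encrypted or already-compressed data gains nothing can be shown with any general-purpose compressor. In this sketch, `zlib` stands in for the array's compressor and random bytes stand in for encrypted data. The exact ratios will differ on a real array.

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Original size divided by zlib-compressed size (higher is better)."""
    return len(data) / len(zlib.compress(data, 6))


text_like = b"INSERT INTO accounts VALUES (42, 'ACTIVE', 0.00);\n" * 2000
random_like = os.urandom(len(text_like))  # stands in for encrypted or already-compressed data

print(f"repetitive: {compression_ratio(text_like):.1f}:1")
print(f"random:     {compression_ratio(random_like):.2f}:1")  # slightly below 1:1
```

High-entropy input comes out slightly larger than it went in, because the compressor adds framing overhead without finding any redundancy.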

But even for conventional databases, the price per gigabyte proved quite workable.

And it was here that the "evergreen" storage model began to win people over.

Continuous upgrades

Over five years, practically only the chassis and power supplies remain from the original hardware. You can upgrade in big jumps with data migrations, or you can replace components one by one, as in a cluster. In fact, it is a cluster, just assembled in a single three-unit (or six-unit) box. The hardware was designed from scratch in-house. Let's first look at the architecture, and then at why it is convenient to replace it piece by piece.

Notable design decisions:

- There is always twice as much compute as needed, so that a controller can be replaced without performance degradation. Both controllers serve the front end, while only one controller writes to the flash modules on the back end.

- RAID- , N + 2, . , — , . .

- N + 2, , . , . RAID, , , , ( ) , .

- ! , , . , - .

- , ! , , ( ), - . , , , . . , RAID 10.

- — NVMe-, — NVRAM. Optane. — , ( SCM-), .

- . - , , . , .

- 3:1, . 512 , 8 . — , . . HDD, .

- ( ). , .
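The first point above (twice the compute, both controllers on the front end, one back-end writer) boils down to a simple rule: a controller may be pulled only if the remaining one can carry the whole load. The sketch is a hypothetical model, not Pure's failover logic. The `Controller` class, the IOPS figures and the `CT0`/`CT1` names are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Controller:
    name: str
    max_iops: int  # hypothetical per-controller limit
    online: bool = True


def can_serve(controllers, demand_iops):
    """True if the controllers that are still online can carry the current load."""
    return sum(c.max_iops for c in controllers if c.online) >= demand_iops


def safe_to_pull(controllers, name, demand_iops):
    """Would the array still carry the load if controller `name` were removed?"""
    remaining = [c for c in controllers if c.name != name]
    return can_serve(remaining, demand_iops)


def backend_writer(controllers):
    """Both controllers take host I/O; the first online one writes to flash."""
    for c in controllers:
        if c.online:
            return c.name
    raise RuntimeError("no controller online")


ct0, ct1 = Controller("CT0", 300_000), Controller("CT1", 300_000)
pair = [ct0, ct1]
print(safe_to_pull(pair, "CT0", 250_000))  # load fits on one controller
print(safe_to_pull(pair, "CT0", 400_000))  # load needs both: pulling one would degrade it
ct0.online = False
print(backend_writer(pair))  # CT1 takes over the back end
```

Sizing each controller for the full load is exactly what "twice as much compute" buys: the check passes no matter which controller is being replaced.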

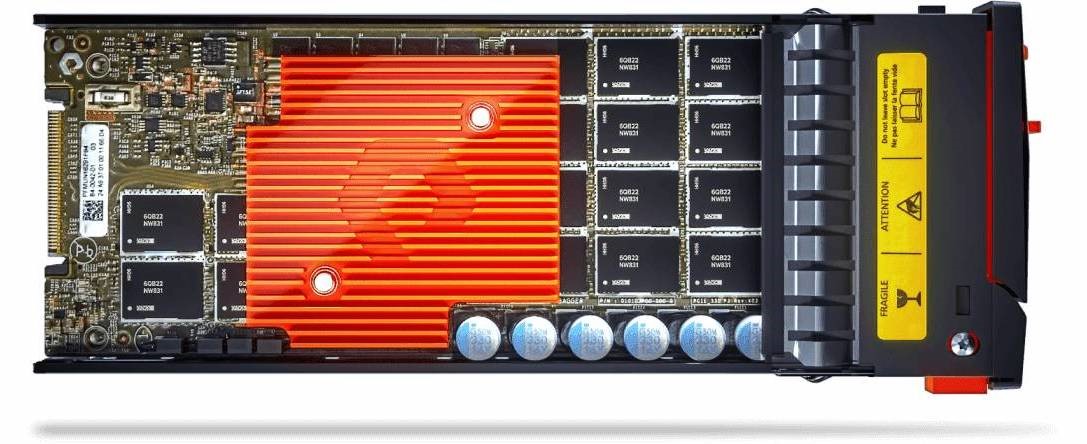

But building its own architecture and writing an operating system for it was not enough for the company. They went down to the level of the flash chips themselves and released their own, while remaining standards-compliant: on the outside is an NVMe interface, inside are chips of their own design.

Violin took this path, which once gave them blazingly fast arrays. But Violin created its own proprietary standard, whereas here an open, publicly available one is used. What is the point? The chip firmware is part of the controller firmware, so the storage system knows exactly what is happening on each individual module.

In a regular disk shelf, every SSD or NVMe module is a small black box to the controller; here, the controller sees everything. This required solving the problem of a very large address space, because all flash arrays face the same problems: wear management, garbage collection and so on. Here these tasks are handled by the controller firmware.
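Controller-level wear management means the controller can level wear across every module at once instead of each SSD leveling only itself. The sketch below is a hypothetical global allocator, not Pure's firmware: free erase blocks from all modules sit in one min-heap keyed by erase count. New writes always take the least-worn block, and garbage collection returns erased blocks to the pool.

```python
import heapq


class FlashPool:
    """Hypothetical pool of erase blocks from all modules, visible to one controller."""

    def __init__(self, modules: int, blocks_per_module: int):
        # Min-heap of (erase_count, module, block): the least-worn free block is on top.
        self.free = [(0, m, b) for m in range(modules) for b in range(blocks_per_module)]
        heapq.heapify(self.free)

    def allocate(self):
        """Take the least-worn free block across every module."""
        return heapq.heappop(self.free)

    def release(self, erase_count, module, block):
        """Garbage collection erased this block; return it with one more erase cycle."""
        heapq.heappush(self.free, (erase_count + 1, module, block))


pool = FlashPool(modules=4, blocks_per_module=8)
for _ in range(1000):
    count, module, block = pool.allocate()  # write new data into this block
    pool.release(count, module, block)      # later: GC erases it and frees it
counts = [c for c, _, _ in pool.free]
print(min(counts), max(counts))  # 31 32
```

Because the pool spans all modules, wear stays within one erase cycle across the whole array. Per-drive leveling cannot guarantee that when the host writes unevenly to different drives.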

So, as you can see, the puzzle fits together like this: cheap capacity is obtained by spending performance on it. High performance comes from constant CPU redundancy and RAID. Surplus processors allow powerful compression post-processing and the ability to lose any component without losing performance. The RAID design fits this same idea. Taken together, these advantages provide the key feature almost for free: any part of the system can be pulled out.

Then marketing steps in with the bold claim of "ageless storage": a fixed support price, all software included, no extra bundles. With a separate service level (Evergreen GOLD), you can replace the controllers free of charge every three years. Upgrades are also possible as requirements grow: I saw an XR2 replaced with an XR3. The system had worked for a year, then the business came and said it needed a new one. The vendor offers the option to trade in old controllers and get new ones ahead of schedule. A nice upgrade. The controllers are simply swapped one at a time.

Upgrading disks is more interesting. A temporary service shelf with disks is shipped from the factory. All data on the media to be replaced is migrated to this shelf without downtime. The shelf works through the array's main controllers. In effect, it is a data pack, a temporary storage unit. When the migration completes, the old disks are marked as safe to remove, and the engineer pulls them from the chassis. The engineer inserts new ones in their place and starts the reverse migration. It takes a day or more, but applications and servers do not notice. Since these storage systems are often used by service providers, replacement and upgrade can be combined: under Evergreen GOLD, you can exchange old disks for new, higher-capacity and faster ones, and also buy more of the same.
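The two-phase disk swap (evacuate to the temporary shelf, replace the modules, migrate back) can be modeled as moving data segments between module sets one at a time. Each segment is copied and then its pointer is flipped, so reads always resolve. This is a hypothetical sketch; the `evacuate` helper and the module names are illustrative, not Pure's procedure.

```python
from itertools import cycle


def evacuate(placement, sources, targets):
    """Move every segment stored on `sources` to `targets`, one segment at a time."""
    dest = cycle(sorted(targets))
    moved = 0
    for seg, module in sorted(placement.items()):
        if module in sources:
            placement[seg] = next(dest)  # copy the segment, then flip the pointer
            moved += 1
    return moved


# Hypothetical layout: 20 segments spread over four old modules.
placement = {f"seg{i}": f"old{i % 4}" for i in range(20)}
old = {"old0", "old1", "old2", "old3"}
shelf = {"shelf0", "shelf1"}
new = {"new0", "new1"}  # e.g. fewer, larger modules

print(evacuate(placement, old, shelf))  # phase 1: old modules drained, safe to pull
print(evacuate(placement, shelf, new))  # phase 2: reverse migration onto the new modules
```

Between the two phases the old modules hold no data, which is what lets the engineer pull them while the array keeps serving I/O.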

All well and good, you might say, but the weak point is always compression!

We are used to hearing this from users of disk storage. There the story is standard: the feature was not designed into the architecture from the start, so they turned on compression, the application stalled, and then they spent a long time restoring everything while management berated them. As already mentioned, Pure Storage took a different path: deduplication and compression are basic functionality that cannot be turned off. Pure Storage now has more than 15,000 installations. During initialization, you can tick the "provide anonymized statistics" box, and your storage system will then report to the Pure1 monitoring system. The guaranteed ratio for databases, for example, is 3.5:1. Some workloads do better: VDI, for instance, achieves 7:1 and higher. Arrays are sold not by raw capacity but by usable capacity, with a guarantee of additional delivery: if after migration your compression ratio turns out lower than guaranteed, the vendor supplies more physical disks free of charge. The vendor says extra drives are shipped in about 9-10% of cases, and the shortfall rarely exceeds a couple of drives. In Russia I have not seen this happen: the ratios matched the guarantee on every installation, except for one case where the data turned out to be encrypted and the customer had not mentioned it.
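The guarantee turns into straightforward arithmetic: the physical capacity needed equals the sold effective capacity divided by the ratio actually achieved, and any shortfall is covered with extra drives. The sketch is hypothetical. It ignores RAID and spare overhead and treats each drive's capacity as directly usable. The drive size and capacities in the example are made-up inputs.

```python
import math


def extra_drives_needed(sold_effective_tb, usable_physical_tb, actual_ratio, drive_tb):
    """Drives to add so that physical capacity times the real ratio covers what was sold."""
    required_physical = sold_effective_tb / actual_ratio
    shortfall = max(0.0, required_physical - usable_physical_tb)
    return math.ceil(shortfall / drive_tb)


# Hypothetical: 350 TB effective sold at the 3.5:1 database guarantee -> 100 TB physical.
print(extra_drives_needed(350, 100, 3.5, 5))  # guarantee met
print(extra_drives_needed(350, 100, 3.2, 5))  # real ratio fell short
```

A modest miss on the ratio translates into only a drive or two, which is consistent with the vendor's claim that the shortfall rarely exceeds a couple of drives.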

Thanks to the way snapshots work, test environments are very efficient. One client sized the system for 7:1 and got 14:1.
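Test environments benefit because a snapshot or clone copies only pointers, not data, and new writes go to new blocks (redirect-on-write). The following is a minimal hypothetical model of that idea, not Pure's metadata design. The `BlockStore`/`Volume` names are illustrative, and unreferenced blocks are never garbage-collected here.

```python
class BlockStore:
    """Shared physical store: block id -> data."""

    def __init__(self):
        self.blocks = {}
        self._next = 0

    def put(self, data):
        bid = self._next
        self._next += 1
        self.blocks[bid] = data
        return bid


class Volume:
    def __init__(self, store, table=None):
        self.store = store
        self.table = dict(table or {})  # logical block address -> block id

    def write(self, lba, data):
        # Redirect-on-write: new data lands in a new block; shared blocks stay untouched.
        self.table[lba] = self.store.put(data)

    def read(self, lba):
        return self.store.blocks[self.table[lba]]

    def clone(self):
        # A snapshot/clone copies only the pointer table, not the data.
        return Volume(self.store, self.table)


store = BlockStore()
prod = Volume(store)
for i in range(1000):
    prod.write(i, b"row %d" % i)
clones = [prod.clone() for _ in range(5)]  # five test environments
for c in clones:
    for i in range(10):
        c.write(i, b"test data")
logical = sum(len(v.table) for v in [prod, *clones])
print(logical, len(store.blocks))  # logical blocks vs physical blocks
```

Six full copies of the volume cost barely more physical space than one, which is how test environments can push the effective ratio well above the sizing estimate.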

The vendor cites the following ratios:

- 3.5:1 for databases (Oracle, MS SQL).

- 4.2:1 for server virtualization (VMware, Hyper-V).

- 7.1:1 for VDI (Citrix, VMware).

- 5:1 average across the entire installed base.
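For an array hosting a mix of workloads, the overall ratio is total logical data divided by total physical space. This is not the arithmetic mean of the per-workload ratios. The sketch plugs in the vendor figures listed above as inputs. The workload mix is a hypothetical example, and real ratios vary per installation.

```python
VENDOR_RATIOS = {"databases": 3.5, "server_virtualization": 4.2, "vdi": 7.1}


def blended_ratio(logical_tb_by_workload, ratios=VENDOR_RATIOS):
    """Overall reduction = total logical / total physical, not the mean of the ratios."""
    logical = sum(logical_tb_by_workload.values())
    physical = sum(tb / ratios[w] for w, tb in logical_tb_by_workload.items())
    return logical / physical


mix = {"databases": 100, "vdi": 100}  # hypothetical TB of logical data per workload
print(round(blended_ratio(mix), 2))   # about 4.69, below the 5.3 mean of 3.5 and 7.1
```

The least compressible workload dominates physical consumption, so sizing from an averaged ratio overestimates effective capacity.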

Other interesting functionality includes automation and integration with trendy tools like Kubernetes, as well as full support for VMware vVols. The reason is simple: most of Pure Storage's Western clients are cloud providers such as ServiceNow (whose case study, incidentally, is posted on the website). They are used to automating everything as much as possible.

Summing up

It turned out to be an interesting product: at first it looks strange, and then it looks better and better. Five years in Gartner:

Of course, the Evergreen economic model is not exactly cheap, but it spares you a number of headaches and looks quite competitive when you calculate the total cost of ownership over several years.
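A multi-year cost-of-ownership comparison can be framed with two toy formulas. In the traditional model, you repurchase every few years and support grows annually. In the subscription model, you pay one purchase plus a flat support fee. All inputs are hypothetical parameters. The model ignores migration labor, discounting and capacity growth, so it only shows the shape of the calculation, not a verdict.

```python
def traditional_tco(years, purchase, support_y1, support_growth, refresh_every=5):
    """Buy a new array every `refresh_every` years; support rises each year until refresh."""
    total = 0.0
    for y in range(years):
        if y % refresh_every == 0:
            total += purchase          # new array (migration costs not modeled)
            support = support_y1       # support price resets with the new array
        total += support
        support *= 1 + support_growth  # yearly support increase
    return total


def subscription_tco(years, purchase, flat_support):
    """One purchase, then a fixed yearly support fee; component refreshes included."""
    return purchase + flat_support * years


# Hypothetical inputs in arbitrary units.
print(traditional_tco(10, purchase=100, support_y1=10, support_growth=0.2))
print(subscription_tco(10, purchase=100, flat_support=15))
```

The comparison depends heavily on the refresh interval and the support growth rate you assume, which is why it is worth running with your own quotes.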

P.S. There is also a recording of an online meetup, "Data storage systems by subscription: truth or fiction."