Movie doubles

You've probably seen actors' doubles on screen more than once and most likely never noticed the substitution. Where makeup artists used to be responsible for the resemblance, directors now increasingly bring in teams from the game development industry.

Gladiator, which I cited as an example, was one of the first films to feature a digital twin. The scenes of Oliver Reed's character that had not been shot before the actor's death were assembled from partially filmed material, with frames added using 3D graphics. For 1999, the result was absolutely fantastic: even CG specialists often fail to notice the substitution.

Another famous example of recreating a film character after the actor's death is Bryan Singer's "Superman Returns" (2006). Casting Marlon Brando as Superman's father was a matter of principle for the director, because back in 1978 Brando had played the same role, Jor-El, in Superman.

A 3D model of the actor's face was built from photographs, and its animation (facial expressions and eye movements) was created from footage of the actor that never made it into the final cut. All in all, a very time-consuming process.

Ten years ago, many of the complex operations needed to create a photorealistic model were done by hand, whereas today's film industry has a huge technology stack for the same purposes, and there is no need to "reinvent the wheel".

Perhaps the most telling example of technological progress is how skin is created in 3D. Human skin transmits light: a ray entering it is scattered and redistributed internally. In computer graphics this is called subsurface scattering, the distribution of light beneath the surface. Modern render engines that implement subsurface scattering materials can compute this effect in a physically correct way. Ten years ago, you had to program it yourself or fake it in post-processing.
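To make "light distributed under the surface" more concrete, here is a minimal Python sketch of one classic approximation: a diffusion profile written as a weighted sum of Gaussians, which says how much light re-emerges at distance r from the point where it entered. The weights and variances in `PROFILE` are hypothetical illustrative values, not measured skin data, and this is not a description of how any renderer mentioned in this article implements subsurface scattering.

```python
import math

# Hypothetical (variance in mm^2, (R, G, B) weights) pairs for a
# sum-of-Gaussians diffusion profile. Illustrative values, NOT measured skin
# data: red gets more weight on the wide Gaussians, so red light spreads farther.
# Each channel's weights sum to 1, so the profile conserves energy.
PROFILE = [
    (0.01, (0.30, 0.40, 0.60)),
    (0.20, (0.30, 0.35, 0.30)),
    (1.50, (0.40, 0.25, 0.10)),
]


def gaussian_2d(variance, r):
    """Normalized radial 2D Gaussian: integrates to 1 over the plane."""
    return math.exp(-r * r / (2.0 * variance)) / (2.0 * math.pi * variance)


def diffusion_profile(r):
    """Light re-emitted at distance r (mm) from the entry point, per RGB channel."""
    return tuple(
        sum(weights[c] * gaussian_2d(v, r) for v, weights in PROFILE)
        for c in range(3)
    )
```

Near the entry point the narrow lobe (weighted toward blue here) dominates, while at 1 mm the wide, red-weighted lobe takes over, which mimics why light bleeding through thin skin looks reddish.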

Now the process is automated, and you only need to adjust the color and texture of the skin by hand. Moreover, color can be controlled through a biologically correct mechanism: changing the melanin content of the skin material. Modern digital twins are so realistic that you can hardly tell that a particular frame shows a Digital Human and not your favorite actor.
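A minimal sketch of what "changing the melanin content" can mean in practice, assuming a simple model in which melanin absorbs each color channel exponentially. The `MELANIN_ABSORPTION` coefficients and the `skin_albedo` function are hypothetical; production skin shaders use their own, more detailed melanin models.

```python
import math

# Hypothetical per-channel (R, G, B) absorption strengths of melanin.
# Illustrative numbers only; they encode the qualitative fact that melanin
# absorbs blue and green light more strongly than red.
MELANIN_ABSORPTION = (0.6, 1.2, 2.0)


def skin_albedo(base_rgb, melanin):
    """Tint a base skin colour by a melanin amount in [0, 1].

    Each channel is attenuated exponentially (Beer-Lambert style), so a single
    scalar moves the colour from pale to dark in a plausible direction.
    """
    if not 0.0 <= melanin <= 1.0:
        raise ValueError("melanin must be in [0, 1]")
    return tuple(
        c * math.exp(-melanin * k) for c, k in zip(base_rgb, MELANIN_ABSORPTION)
    )


pale = skin_albedo((0.95, 0.80, 0.70), melanin=0.1)
dark = skin_albedo((0.95, 0.80, 0.70), melanin=0.9)
```

The appeal of such a parameter is that an artist turns one physically meaningful knob instead of repainting three color channels by hand.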

Want proof? Watch the SuperBobrovs films starring Vladimir Tolokonnikov, the star of Heart of a Dog. Sadly, the actor, known to everyone for his role as Poligraf Poligrafovich Sharikov, died of cardiac arrest before shooting was finished, so all his missing scenes were played by his avatar.

Progress in digital twins is also driven by growing computing power. Where calculating a sequence with a digital character once required entire farms of processors and RAM, today everything can be rendered on a home gaming computer, slowly but surely. So I think the three of us could recreate Antonius Proximo in about a month. If we also count the creation of the 3D model (the most time-consuming and expensive part of the work), the whole project would take 2 to 2.5 months, and the budget could stay within $100,000.
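The "slowly but surely" trade-off is easy to estimate with back-of-the-envelope arithmetic. The sketch below assumes a hypothetical shot length, frame rate and per-frame render time; none of these numbers come from the Proximo estimate above, and `render_days` is an illustrative helper.

```python
import math


def render_days(duration_s, fps, minutes_per_frame, machines=1):
    """Wall-clock days needed to render a shot on identical machines.

    Assumes frames are split evenly between machines and that render time
    per frame is constant, which real shots rarely are.
    """
    frames = math.ceil(duration_s * fps)
    total_minutes = frames * minutes_per_frame / machines
    return total_minutes / (60 * 24)


# Hypothetical shot: 5 minutes of screen time at 24 fps, 10 min per frame.
one_pc = render_days(300, 24, 10)               # 7,200 frames -> 50 days
two_pcs = render_days(300, 24, 10, machines=2)  # 25 days
```

This kind of estimate is how a small team can check whether rendering on a couple of home machines fits a schedule of about a month.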

A content factory for business

Advances in technologies for creating photorealistic human models are accompanied by a leap in neural networks that can control 3D models. Together, they lay the groundwork for wider use of Digital Human. By combining a 3D model with neural networks or a chatbot, you can set up a whole video content factory: you “feed” it text, and your avatar reads it out with facial expressions and emotions. Products built on this scenario already exist on the market, although they use photographs of real people rather than 3D models.
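To illustrate the "feed it text, get a talking avatar" idea, here is a toy Python sketch of one stage of such a factory: turning text into timed mouth-shape (viseme) keyframes for lip sync. Everything here is hypothetical: `fake_tts` stands in for a real speech synthesizer that would report phoneme timings and simply treats each letter as one phoneme, and `PHONEME_TO_VISEME` is a tiny made-up mapping, not the viseme set of any real engine.

```python
from dataclasses import dataclass

# Hypothetical phoneme -> viseme (mouth shape) table. Real TTS engines and
# face rigs define their own, much larger sets; this is a tiny made-up subset.
PHONEME_TO_VISEME = {
    "p": "closed", "b": "closed", "m": "closed",
    "f": "lip_teeth", "v": "lip_teeth",
    "a": "open", "o": "round", "u": "round",
    "e": "wide", "i": "wide",
}


@dataclass
class Phoneme:
    symbol: str
    start: float  # seconds from the start of the clip
    end: float


@dataclass
class Keyframe:
    time: float
    viseme: str


def fake_tts(text, seconds_per_letter=0.08):
    """Stand-in for a TTS engine: one 'phoneme' per letter, fixed duration."""
    phonemes, t = [], 0.0
    for ch in text.lower():
        if ch.isalpha():
            phonemes.append(Phoneme(ch, t, t + seconds_per_letter))
        # Spaces and punctuation just advance time, acting as short pauses.
        t += seconds_per_letter
    return phonemes


def visemes_to_keyframes(phonemes):
    """Map phonemes to mouth shapes, keeping a key only when the shape changes."""
    keys = []
    for ph in phonemes:
        viseme = PHONEME_TO_VISEME.get(ph.symbol, "neutral")
        if not keys or keys[-1].viseme != viseme:
            keys.append(Keyframe(ph.start, viseme))
    return keys


keys = visemes_to_keyframes(fake_tts("Mama"))
```

Dropping consecutive duplicates keeps the animation sparse; a real pipeline would also blend between shapes rather than switch them instantly.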

Looking at news anchors on federal channels, many people think this is how they work. In fact, it isn't: presenters not only read the text with facial expressions and emotion but also write it themselves. In the future, of course, neural networks will be able to "write" texts for TV and video blogs, and avatars will voice them. Reporting can become cheaper too: send only a camera operator to the location, then overlay an avatar stand-up, reading text written by the editor, onto the footage already shot.

We at LANIT-Integration believe that two applications of Digital Human technologies are the most promising.

The first is replacing a person's face in video. You have probably already seen the Elon Musk clones that joined Zoom conferences. This scenario is called Deep Fake and, as the name implies, is used for all sorts of fakes. Technologically, the same thing is also called Face Swap, but here it is used not for black PR but for commercial purposes that raise no ethical controversy. For example, it can streamline the production of educational content.

A bank with a nationwide branch network has many training videos of varying quality. Some of them are just recordings of Zoom conferences. Poor video quality and settings that do not match the corporate standard make it impossible to combine them into a single training course. With digital avatars and neural networks, you can fix all of this by changing both the background and the speaker's appearance.

A similar video production scenario is of interest to TV and bloggers. Recently, the producer of a themed channel asked us to estimate the cost of producing regular episodes with a digital avatar as the presenter. Of course, Digital Human will not solve every problem. There is still no technology that lets an avatar reproduce emotions when its text is processed by a neural network, so you still need a living person whose facial expressions and movements the avatar will use. You also need a specialist to write scripts and voice-over texts. However, it no longer matters at all what the people producing the content look like, what gender they are, what their voice sounds like, or where they are. To shoot, you need a motion capture suit, a helmet with a camera pointed at the face (to "capture" facial expressions), and the avatar itself, which reproduces all the movements of the off-screen performer on screen in real time. According to our calculations, this technology will cut video production costs tenfold.

The second application of Digital Human is combining a digital avatar with a chatbot and a speech synthesis system, which could be in high demand for customer communication.

Chatbots are now used in many call centers. But not all clients are comfortable communicating with a robot. Perhaps communication would be more enjoyable if the client saw their digital interlocutor.

By connecting a chatbot, a microphone, and speech recognition and synthesis systems to a digital avatar, you can create virtual hostesses, sales consultants, consultants in government institutions (Soul Machines has a similar case: its virtual assistant Ella talks to visitors at New Zealand Police headquarters), sommeliers; in short, any employees whose main job is simply answering customers' questions. In theory, this saves on payroll and lets employees focus on what neural networks cannot do yet.
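The chain described above (microphone, speech recognition, chatbot, speech synthesis, avatar) can be sketched as a single conversational turn. This is a minimal Python illustration under stated assumptions: the component signatures and the `handle_turn` function are hypothetical, and the lambdas are stubs standing in for real services.

```python
from typing import Callable

# Hypothetical component signatures. In a real deployment each callable
# would wrap a speech recognizer, a chatbot and a speech synthesizer.
Recognize = Callable[[bytes], str]    # audio in -> recognized text
Answer = Callable[[str], str]         # question -> chatbot reply
Synthesize = Callable[[str], bytes]   # reply text -> audio
Animate = Callable[[bytes], None]     # audio -> drive the avatar's face


def handle_turn(audio_in: bytes, recognize: Recognize, answer: Answer,
                synthesize: Synthesize, animate: Animate) -> str:
    """One conversational turn: hear, think, speak, and move the avatar."""
    question = recognize(audio_in)
    reply = answer(question)
    audio_out = synthesize(reply)
    animate(audio_out)  # e.g. lip-sync the avatar to the synthesized audio
    return reply


# Usage with stub components that just pass text around as bytes.
played = []
reply = handle_turn(
    b"where is the passport office",
    recognize=lambda audio: audio.decode(),
    answer=lambda q: "Second floor, room 12." if "office" in q else "Sorry?",
    synthesize=lambda text: text.encode(),
    animate=played.append,
)
```

Keeping each component behind a plain callable makes it easy to swap speech engines or chatbots without touching the avatar side.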

Experiments with appearance

So, the basis for all the scenarios above is a digital avatar. As I said, creating one is a very laborious process. In essence, it is a virtual sculpture of a person made by a 3D sculptor, who works out every detail of the appearance and then "grows" the hair with simulation tools.

The question of appearance does not arise if you are creating a copy of a celebrity or model. But what if you need to create a virtual person from scratch? What traits will you give him?

As an experiment, we decided to create our own digital avatar: a LANIT ambassador. Of course, we could have asked all our colleagues what he should look like. But, first, surveying several thousand people is too much, and second, based on such data we would have ended up with a second Ken for Barbie: a pleasant, generic image completely devoid of individuality. We did not want that kind of ambassador at all, so we took a different path.

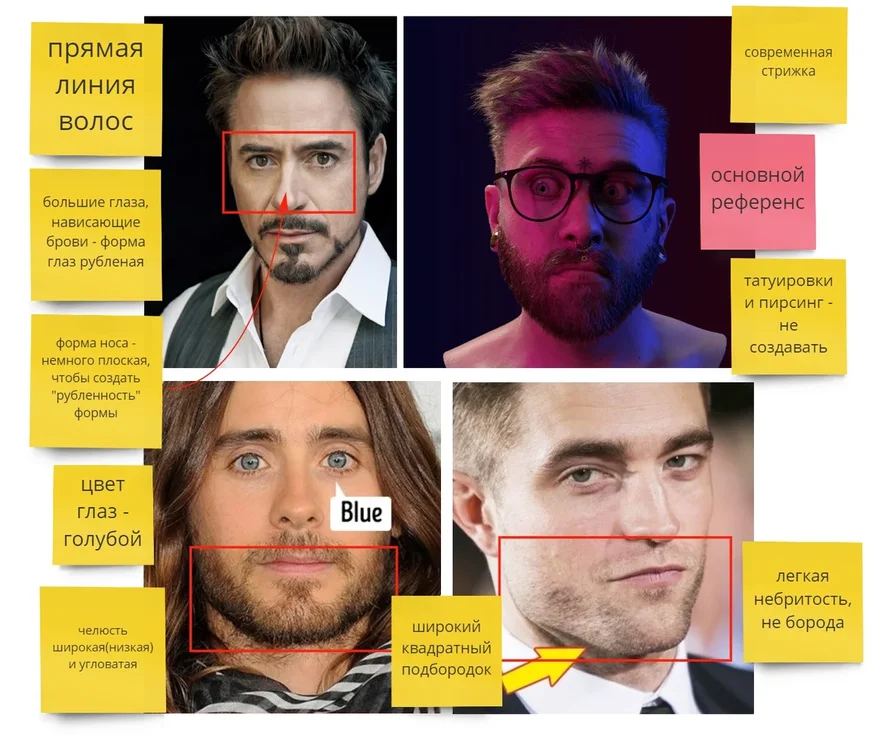

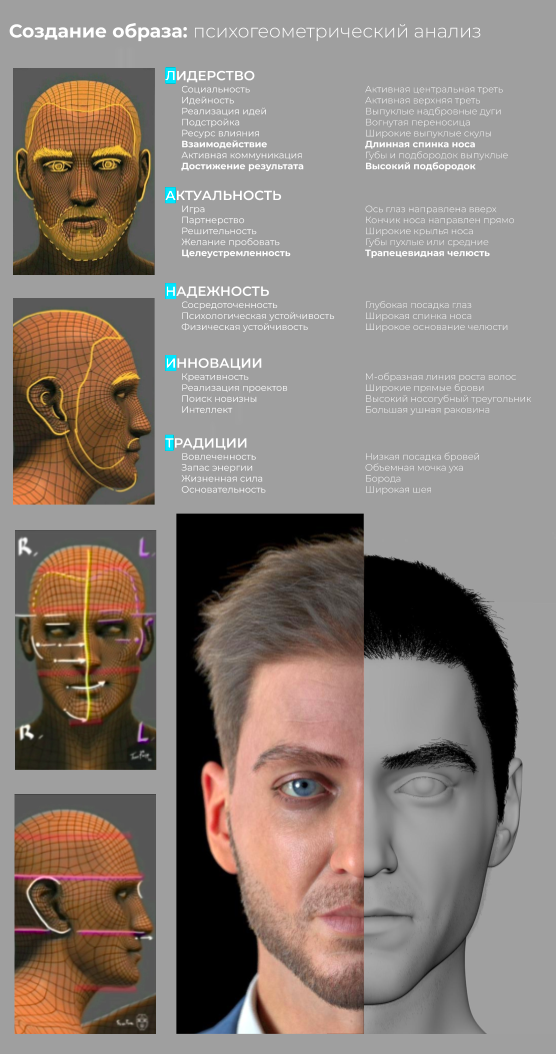

Over the years, each of us has formed a set of stereotypes. For example, a thick gray beard is associated with a kind disposition (like Santa Claus), while wide, straight eyebrows suggest directness and a domineering character, and so on.

We have built expertise in facial psychogeometry and trained a neural network to identify, based on perception patterns, the relationship between a person's appearance and the impression they make. The network analyzes facial features and outputs a set of words describing how a person may seem to others, for example kind, vulnerable, insecure, calm, etc. We took this work and ran the process in reverse: we gave the neural network a description of our future character (how the target audience should perceive him) and got a set of facial features.
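One way to picture running a trait model "in reverse" is to keep the model fixed and optimize its input until the predicted impression matches the target. The sketch below assumes a hypothetical linear model with three made-up facial features and two traits and uses projected gradient descent; the actual network and features are not described here, so treat this only as an illustration of the inversion idea.

```python
# Hypothetical linear "appearance -> impression" model.
# Rows are impression traits; columns are normalized facial features in [0, 1].
FEATURES = ["brow_width", "jaw_width", "eye_size"]
TRAITS = ["reliable", "approachable"]
W = [
    [0.8, 0.6, 0.1],   # reliable
    [-0.2, 0.1, 0.9],  # approachable
]


def predict(x):
    """Forward direction: facial features -> trait scores."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def invert(target, steps=2000, lr=0.1):
    """Reverse direction: find features whose predicted traits match target.

    Minimizes 0.5 * ||W x - target||^2 by gradient descent, clamping each
    feature to [0, 1] after every step (projected gradient descent).
    """
    x = [0.5] * len(FEATURES)  # start from a neutral face
    for _ in range(steps):
        err = [p - t for p, t in zip(predict(x), target)]
        # Gradient of the loss with respect to x is W^T @ err.
        grad = [sum(W[i][j] * err[i] for i in range(len(W))) for j in range(len(x))]
        x = [min(1.0, max(0.0, xj - lr * gj)) for xj, gj in zip(x, grad)]
    return dict(zip(FEATURES, x))


features = invert([0.86, 0.44])
```

Because there are more features than traits, many different faces satisfy the same target; this sketch simply returns one of them near its neutral starting point, so the result still needs an artist's judgment.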

Let me make an important reservation right away: we in no way claim scientific rigor for our experiment. Moreover, a lot of research shows that it is dangerous to look for links between facial features and, say, character or (God forbid) intelligence. So we are exploring not the science but the possibilities of the technology.

So, we “fed” the neural networks human qualities that reflect our corporate culture: leadership, innovation, reliability, dedication, etc.

Yet even this careful selection of qualities produced a completely neutral character that evoked no emotion at all, so we had to correct the neural network's results by hand.

The face, and computer graphics in general, consists of three components:

- 3D-, ;

- , , ;

- ( , ).

1. Modeling

Head

To create Maxim (as we named our avatar), we took a simplified human head shape as the base and sculpted the details in ZBrush. First, we built a high-poly model with the finest details worked out, including skin pores (textures were developed for it).

We use 4K textures. 8K textures give better results in close-ups, but they are rarely needed, so we decided against them for the sake of performance. Once the high-poly model is ready, we create a low-poly copy and transfer the fine details to it using normal maps (surface "bump" maps).
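Two quick calculations behind this paragraph, as a Python sketch. The first gives the uncompressed memory of one square RGBA texture at 8 bits per channel: doubling the resolution from 4K to 8K quadruples it. The second shows how a unit tangent-space normal is packed into an 8-bit RGB normal map, which is why flat areas of a normal map look light blue. Real engines usually compress textures, so these figures are an upper bound, and the function names are illustrative.

```python
def texture_mib(size_px, channels=4, bytes_per_channel=1, mipmaps=False):
    """Uncompressed memory of one square texture, in MiB."""
    size_bytes = size_px * size_px * channels * bytes_per_channel
    if mipmaps:
        # A full mip chain adds about one third on top of the base level.
        size_bytes = size_bytes * 4 / 3
    return size_bytes / (1024 * 1024)


def encode_normal(nx, ny, nz):
    """Map a unit normal from [-1, 1] per axis to 8-bit RGB [0, 255]."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in (nx, ny, nz))


print(texture_mib(4096))             # 64.0
print(texture_mib(8192))             # 256.0
print(encode_normal(0.0, 0.0, 1.0))  # (128, 128, 255): a flat surface
```

Multiply the per-texture cost by several maps (color, normal, roughness, subsurface) per material, and the performance case for staying at 4K becomes clear.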

Hair

There are many tools for creating hair. We chose GroomBear for Houdini to avoid bloating the software stack, since most of the technical work is done in Houdini.

Clothes

We used Marvelous Designer to model the clothes and finished the folds and small distinctive details in Blender.

2. Texturing

We do texturing in Substance Painter: in our opinion, its tools make texturing the simplest and fastest. One important point: to change the avatar's look, it is enough to change only the skin texture, without touching the geometry at all. This is no secret to makeup lovers: Chinese women use cosmetics every day to achieve an effect comparable to plastic surgery. For avatars, such a simple change of appearance means big savings in video content production: three clicks, and your character's look changes so dramatically that it becomes a completely different person.

3. Animation

Our character is set up to work with motion capture systems: the Xsens body motion capture suit and the Dynamixyz facial motion capture system. We did not use optical motion capture systems because they are bulky and not mobile, which would impose far more restrictions on content production.

Xsens drives the movements of the torso, head, and limbs. The animation system is hybrid: large masses of geometry are controlled by bones, which in turn are driven by the motion capture data, while clothing folds around the joints and in other characteristic places are handled by auxiliary blendshapes (states of the model with characteristic folds around the joints). These blendshapes keep the geometry behaving correctly where bone animation alone cannot produce the right result.
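A minimal 2D sketch of the hybrid idea, assuming a single joint at the origin: a corrective "fold" blendshape is faded in by the joint angle and applied in the rest pose, and the result is then deformed by linear blend skinning between a fixed bone and a rotating one. The linear driver `corrective_weight` and all other names are hypothetical; production rigs often use more elaborate pose-space drivers.

```python
import math


def rotate(p, angle):
    """Rotate a 2D point around the origin (the joint) by angle radians."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * p[0] - s * p[1], s * p[0] + c * p[1])


def corrective_weight(angle, full_angle=math.radians(90)):
    """Driver: 0 at rest, ramps linearly to 1 when the joint reaches full_angle."""
    return max(0.0, min(1.0, angle / full_angle))


def deform(rest, skin_weights, corrective_delta, angle):
    """Hybrid deformation of 2D vertices around a joint at the origin.

    rest: list of (x, y) rest positions
    skin_weights: per-vertex weight of the rotating bone (the other bone is fixed)
    corrective_delta: per-vertex offset of a hypothetical 'fold' blendshape
    """
    w_fix = corrective_weight(angle)
    out = []
    for p, w, d in zip(rest, skin_weights, corrective_delta):
        # 1) Corrective blendshape applied in rest pose, scaled by its driver.
        q = (p[0] + w_fix * d[0], p[1] + w_fix * d[1])
        # 2) Linear blend skinning: mix of the fixed bone and the rotated bone.
        r = rotate(q, angle)
        out.append(((1 - w) * q[0] + w * r[0], (1 - w) * q[1] + w * r[1]))
    return out
```

At rest the corrective has no effect; at a full 90-degree bend it is fully applied, which is exactly where plain skinning tends to collapse or crease geometry around a joint.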

Creating blendshapes is the main problem when building a character's wardrobe, because it is laborious: for each new garment, the artist has to create dozens of them. We are actively looking for ways to automate this routine, and if you have ideas or ready-made solutions, I will be happy to discuss them with you in the comments.

Dynamixyz drives the facial animation, and setting it up is the hardest and most time-consuming part of the pipeline. The reason is that 57 muscles lie under the skin of the face, and the movement of each of them affects the facial expression.

Recognizing faces and emotions is a vital ability for life in society, so people instantly notice implausible facial expressions. That is why the avatar's facial animation has to be 100% realistic.

Each face can have an infinite variety of expressions, but as practice has shown, 150 blendshapes are enough to create realistic animation. We went a little further and created 300 blendshapes (and continue to create new ones if we find situations where 300 is not enough).

Dynamixyz works as follows: the actor wears a helmet with specialized high-data-rate cameras mounted on it. Video from these cameras is streamed to a workstation, where a neural network finds anchor points on the actor's face and, in every frame, matches them to anchor points on a virtual face mask, making it move. A system of blendshapes is tied to the movements of the face mask and "switches" according to how the mask moves. Each blendshape is created for the whole face, but during animation it can be switched locally, for example only around the mouth or the right eye, independently of the rest of the face. These transitions are smooth and completely invisible to the eye.
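Blendshape mechanics like these are commonly expressed as a delta model: the final face is the neutral mesh plus a weighted sum of each shape's offset from neutral. The Python sketch below also multiplies each shape by an optional per-vertex mask, which is one simple way to apply a whole-face shape only in one region such as the mouth. The names and data layout are hypothetical and not the Dynamixyz API.

```python
def blend_face(neutral, shapes, weights, masks=None):
    """Delta blendshapes: neutral + sum_i w_i * mask_i * (shape_i - neutral).

    neutral: list of vertex positions (x, y, z)
    shapes: dict name -> list of vertex positions, same length as neutral
    weights: dict name -> weight in [0, 1]
    masks: optional dict name -> per-vertex factor in [0, 1] that limits a
           whole-face shape to one region (e.g. the mouth or the right eye)
    """
    result = [list(v) for v in neutral]
    for name, w in weights.items():
        shape = shapes[name]
        mask = (masks or {}).get(name)
        for i, (n, s) in enumerate(zip(neutral, shape)):
            m = 1.0 if mask is None else mask[i]
            for axis in range(3):
                result[i][axis] += w * m * (s[axis] - n[axis])
    return [tuple(v) for v in result]


neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
shapes = {"smile": [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]}
half_smile = blend_face(neutral, shapes, {"smile": 0.5})
left_only = blend_face(neutral, shapes, {"smile": 1.0}, masks={"smile": [1.0, 0.0]})
```

Animating the weights smoothly over time, rather than jumping between values, is what keeps the switches invisible.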

There are alternative, less labor intensive facial animation systems, such as the one recently patented by Sberbank. But reducing labor costs comes at the price of quality and flexibility, which is why we abandoned the use of such technologies.

4. Render

Our Maxim lives in Unreal Engine and Houdini.

In Unreal Engine (UE), we render animations that do not require complex post-processing, because UE does not output the full set of channels and masks that post-processing needs and has a number of other limitations (for example, it cannot produce a correct hair mask because it cannot make masks translucent). We experimented with real-time ray tracing (RTX) for a while but, seeing no significant improvement in image quality, stopped using it.
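Why a non-translucent mask fails on hair can be shown with the standard Porter-Duff "over" operation. A thin strand covers only part of a pixel, so its alpha should be fractional; a binary mask forces that pixel to be either fully hair or fully background. The colors, coverage value and 0.5 threshold below are hypothetical.

```python
def over(fg, alpha, bg):
    """Porter-Duff 'over' with straight (non-premultiplied) colour."""
    return tuple(alpha * f + (1.0 - alpha) * b for f, b in zip(fg, bg))


def binary_mask(coverage, threshold=0.5):
    """A mask that cannot be translucent: each pixel is either in or out."""
    return 1.0 if coverage >= threshold else 0.0


hair = (0.9, 0.8, 0.6)    # hypothetical strand colour
new_bg = (0.0, 0.2, 0.4)  # hypothetical replacement background
coverage = 0.3            # the strand covers 30% of this pixel

soft = over(hair, coverage, new_bg)               # strand blends into the new background
hard = over(hair, binary_mask(coverage), new_bg)  # strand vanishes: pure background
```

Across the thousands of strand-edge pixels around a head, this is the difference between soft, natural hair edges and a hard cut-out, which fits the decision to send compositing-heavy shots to another renderer.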

In Houdini, we render with Arnold. We use it as a CPU renderer, which is quite slow compared to GPU and real-time engines, but we chose it because comparative tests showed that subsurface scattering materials (which is exactly what skin is) and hair materials look significantly better in Arnold than in Redshift and Octane, while V-Ray, unfortunately, sporadically produces artifacts on subsurface scattering materials.

5. Interactive

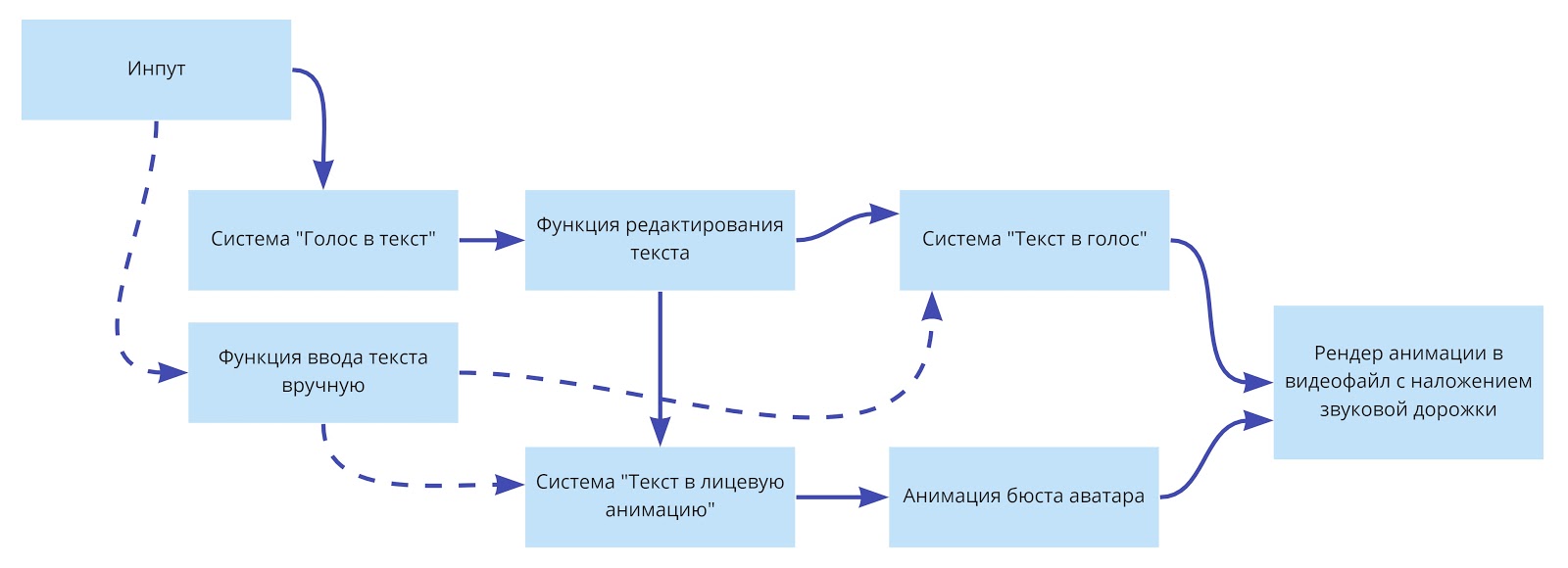

In our view, the most promising (but also the most difficult) direction for digital avatars is combining them with software products: voice assistants, chatbots, speech-to-text systems, etc. These integrations open the door to building scalable products. We are currently actively working in this direction, building hypotheses and prototypes. If you have any ideas for similar uses of digital avatars, I would be happy to discuss them.

Cute or repulsive? Movement amplifies the effect.

Maybe Maxim reminds you of someone you know. Or maybe you negotiated with a similar person just yesterday. Was he charming or irritating in the way he communicated? Either way, Maxim's image evokes emotions.

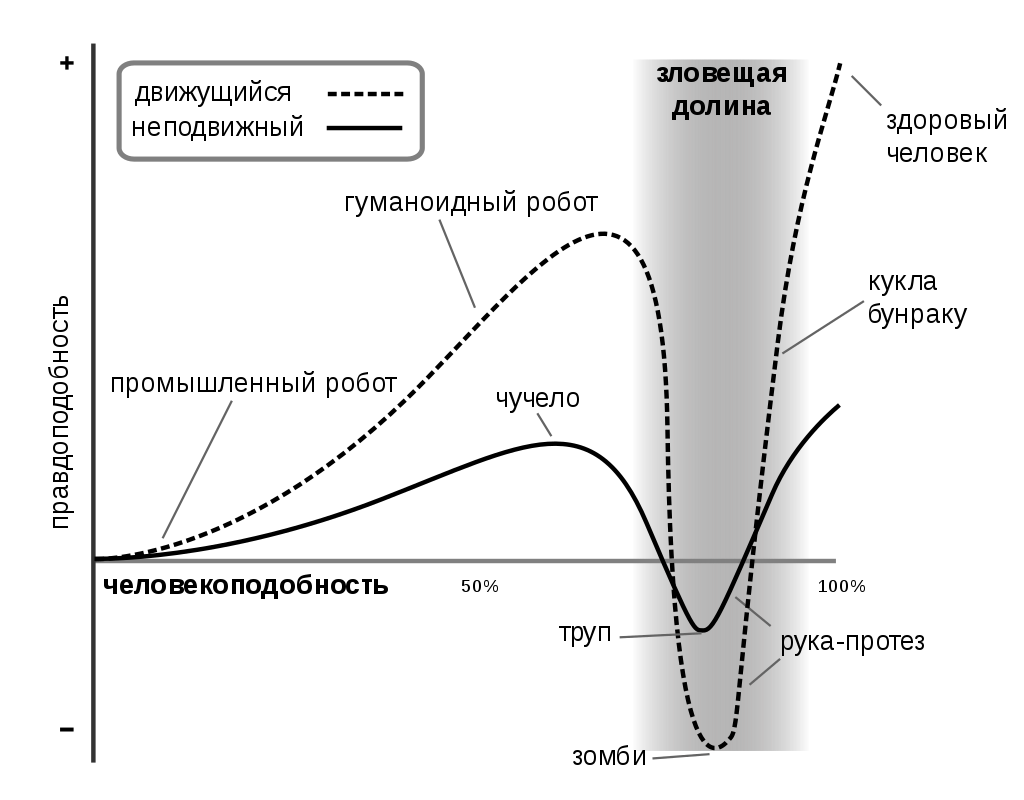

While creating him, we experienced firsthand the "uncanny valley" effect described by Japanese roboticist Masahiro Mori, who studied how people perceive humanoid robots. Wikipedia covers it in detail, but in short: the more a robot resembles a human, the more sympathy we feel for it. This holds up to a certain point. When a robot becomes almost indistinguishable from a human, looking at it starts to cause discomfort and even fear, because the robot is given away by tiny details that we usually cannot even identify or name. This abrupt change in our reaction (the dip in the graph) is called the "uncanny valley". Animation amplifies both the negative and the positive effects; however, as the graph shows, full resemblance to a human can only be achieved with animation.

So, our Maxim has crossed the "uncanny valley", but one nuance hurts how he is perceived: without animation he has no facial expression at all. All his facial muscles are relaxed, which never happens in a living person. As a result, he looks very detached and seems to look not at you but through you, which is quite unpleasant.

Maxim seems to us a worthy member of the avatar family. As Digital Human becomes more and more popular, we hope our clients and partners will soon decide to get avatars for marketing purposes. Then Maxim will have brothers and sisters in mind, artificial ones, of course.

In the meantime, Maxim is exploring on his own the opportunities that the B2B market opens up for digital avatars: in October 2020, he will take part in the conference “Smart Solutions - Smart Country: Innovative Technologies for a New Reality” and the Disartive digital art exhibition, promote LANIT's products and services on social media, and possibly give a few interviews.

The emerging market for Digital Human

There are companies in Russia working in a direction similar to ours, but not all of them are public. Many work with gamedev studios and create characters for games, and that world lives by its own rules: studios very often follow the Hollywood model and do not disclose their contractors.

Perhaps the best-known company is Malivar, in which Sberbank invested 10 million rubles. It owns the virtual character Aliona Pole, an artist, model, and author of "digital clothing" collections.

In a split second, the digital model swaps a red blouse for a blue one and tries on new looks without pausing her movement. Viewers raised on Instagram Stories and TikTok happily watch short videos with a fantastic number of transformations that no living model could manage.

Aliona is gradually moving beyond being just a model and acquiring more and more human traits on her Instagram: she promotes an environmentally friendly attitude to the world, philosophizes about personal boundaries, mixes ordinary and virtual reality, and champions individuality and body positivity.

There is also an interesting digital avatar project on the global market: Samsung's Neon. Of course, its developers are still far from creating a new life form, but they have taught their 3D models to move well. Thanks to an interface that converts voice to text, the model analyzes incoming information, turns it into decisions, and issues internal commands to move its hands and other body parts. At CES 2020, the company showcased avatars of a nurse, a TV presenter, a national park scout, a fitness trainer, and several others.

I wrote about the New Zealand project Soul Machines and its assistants above. There are probably other projects worth attention, but there is little significant news about them, even though serious funds are now investing in the development of digital avatars.

What do you think about avatars? I would love a digital clone to attend Zoom meetings and talk to my boss for me and, of course, a set of digital clothes: always an ironed shirt, jacket, tie, the works.