Over the past six months, Recursive Cactus (the name he chose when registering on our site) has been preparing for future interviews. He spends 20-30 hours every week on LeetCode exercises, algorithm tutorials and, of course, practice interviews on our platform to gauge his progress.

A typical workday for Recursive Cactus:

| Time | Activity |
|---|---|
| 6:30 - 7:00 | Wake up |
| 7:00 - 7:30 | Meditation |
| 7:30 - 9:30 | Algorithm practice |
| 9:30 - 10:00 | Commute to work |
| 10:00 - 18:30 | Work |
| 18:30 - 19:00 | Commute home |
| 19:00 - 19:30 | Time with his wife |
| 19:30 - 20:00 | Meditation |
| 20:00 - 22:00 | Algorithm practice |

A typical day off for Recursive Cactus:

| Time | Activity |
|---|---|
| 8:00 - 10:00 | Algorithm practice |
| 10:00 - 12:00 | Exercise |
| 12:00 - 14:00 | Free time |
| 14:00 - 16:00 | Algorithm practice |
| 16:00 - 19:00 | Dinner with his wife and friends |
| 19:00 - 21:00 | Algorithm practice |

But this intense preparation has taken an emotional toll on him, his friends and his family. Studying has eaten up so much of his personal time that he has practically no life outside of work and interview prep.

One thought keeps him up at night: “What if I don't pass the interview? What if all this time has been wasted?”

Most of us have looked for work at some point, and many of us know this feeling. But why does Recursive Cactus spend so much time preparing, and what lies behind this frustration?

He feels that he does not meet the hiring bar for engineers. By that he means the generally accepted minimum level of competence that every engineer must demonstrate to get hired.

To clear the bar, he chose a specific tactic. He would conform to the generally accepted expectations of what an engineer should be, rather than simply be the professional he actually is.

It seems silly to deliberately pretend to be someone you are not. But to understand why Recursive Cactus behaves this way, we need to figure out what this bar actually is. And the more you think about it, the less clear its definition becomes.

Defining the "bar"

Let's look at how the FAANG companies (Facebook, Amazon, Apple, Netflix, Google) define the bar. After all, these are the companies that get the most attention from almost everyone, job seekers included.

Few of them share specifics about their hiring process:

- Apple shares nothing publicly.
- Facebook describes the stages of its interviews, but not its evaluation criteria.
- Netflix and Amazon say they hire candidates who fit their culture and leadership principles, but neither describes exactly how it measures those principles.
- Amazon does, however, explain how its interviews are run and lists topics that may come up in developer interviews.

Google is the most transparent of the big companies. It publicly describes its interview process in great detail, and Laszlo Bock's book "Work Rules!" adds an insider's perspective.

For historical perspective, Alina (our founder) mentioned the 2003 book "How Would You Move Mount Fuji?" in our last post. The book describes the interview process at Microsoft back when it was the most prominent tech giant.

To learn more about how companies evaluate candidates, I also looked at two other sources:

- "Cracking the Coding Interview" by Gayle Laakmann McDowell, which is effectively the interview bible for job seekers.
- "The Guerrilla Guide to Interviewing (version 3.0)" by Joel Spolsky, an influential and well-known figure in tech circles.

Definitions of the bar

| Source | Evaluation criteria |
|---|---|
| Apple | Not published |
| Amazon | Adherence to Amazon's Leadership Principles |
| Facebook | Not published |
| Netflix | Not published |
| Google | 1. General cognitive ability 2. Leadership 3. "Googleyness" 4. Role-related knowledge |
| "Cracking the Coding Interview" by Gayle Laakmann McDowell | Analytical skills; coding skills; technical knowledge / computer science fundamentals; experience; culture fit |
| Joel Spolsky | Smart; gets things done |
| Microsoft (circa 2003) | “The purpose of the Microsoft interview is to assess general problem-solving ability, not specific competence.”; “Thinking speed, resourcefulness, creative problem-solving ability, thinking outside the box”; “Hire for what people can do, not for what they have done”; motivation |

Defining "intelligence"

Unsurprisingly, coding ability and technical knowledge are among the criteria for hiring a developer at any company. After all, that is the job.

But beyond that, many sources also cite general intelligence as a criterion. They use different words and define the terms slightly differently, but they all point to some notion of what psychologists call "cognitive ability."

| Source | Definition of cognitive ability |
|---|---|
| […] | “[…] GPA […] SAT” |
| Microsoft (circa 2003) | “[…] Microsoft […]” |
| […] | “[…]” |
| […] | “[…]” |

All of these definitions recall the work of Charles Spearman, an early 20th-century psychologist. His theory of intelligence remains the most widely accepted. In a series of cognitive tests on schoolchildren, Spearman found that children who did well on one type of test tended to do well on the others too. This observation led him to propose a single general ability factor ("g", or the "g factor") that affects performance on every measure. It operates alongside abilities specific to each task ("s").

Many people believe in "g", though some do not, and there are competing theories of intelligence. If you do believe in it, then looking for candidates with high "g" fits neatly with the intelligence criteria companies describe.

Companies consider other criteria as well, such as leadership and culture fit, but the bar is usually not defined in those terms. It is defined by technical skills and also, perhaps even more, by general intelligence. After all, candidates don't usually practice leadership or culture fit.

This raises the question of how to measure it. Measuring technical skills seems hard but doable. But how do you measure "g"?

Measuring general intelligence

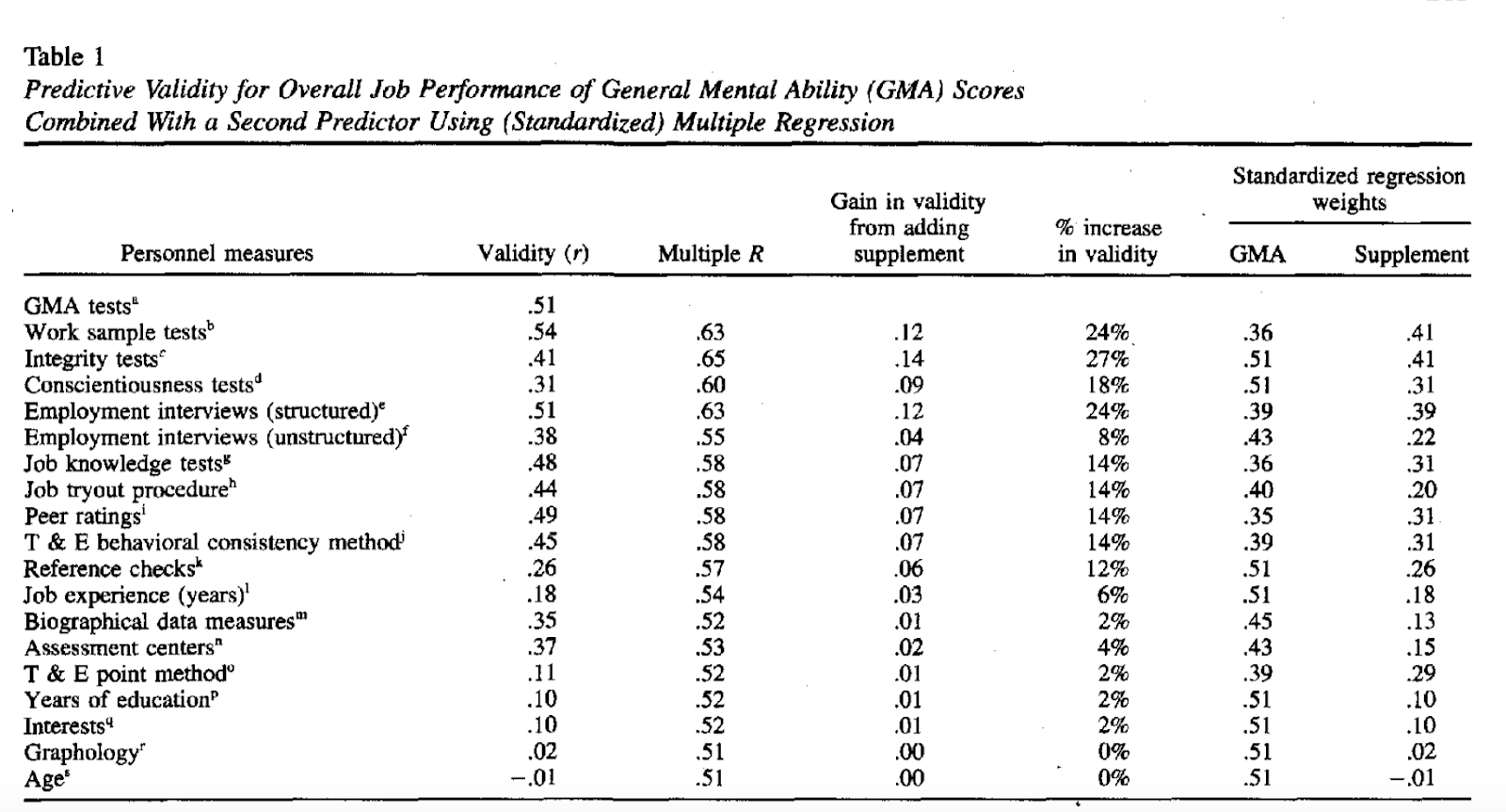

Bock's book mentions Frank Schmidt and John Hunter's 1998 article "The validity and utility of selection methods in personnel psychology." The article tries to answer this question by analyzing 19 candidate selection methods to determine which ones best predict future job performance. The authors concluded that general mental ability, measured with a GMA test, is the best predictor of job performance in terms of "predictive validity."

In this study, a GMA test is essentially an IQ test. Around 2003, by contrast, Microsoft used puzzles like "How many piano tuners are there in the world?" Their explanation:

“[…] Microsoft […]”

- How Would You Move Mount Fuji? […] 20

Fast-forward to today. Google now rejects this practice, concluding that "performance on these kinds of questions is at best a discrete skill that can be improved through practice, eliminating their utility for assessing candidates."

So we have two companies that test general intelligence but fundamentally disagree on how to measure it.

Are we measuring specific abilities or general intelligence?

But maybe, as Spolsky and McDowell have argued, traditional algorithm and computer science interview questions are themselves effective tests of general intelligence. Hunter and Schmidt's research lends this theory some support. Among all single-method selection tools, work sample tests had the highest predictive validity. The highest-validity two-method combination was a GMA test plus a work sample test. In that combination, the standardized regression weight of the work sample was larger than that of the GMA test, indicating a stronger relationship with a candidate's future job performance.
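Here is how the two-predictor comparison works. For two standardized predictors, the regression weights follow directly from the three pairwise correlations. The correlations below are hypothetical values chosen for illustration, not figures quoted from Schmidt and Hunter. `standardized_betas` is a helper name introduced for this sketch.

```python
import math

def standardized_betas(r_y1, r_y2, r_12):
    """Standardized OLS weights for y regressed on two predictors, computed from
    r_y1, r_y2 (each predictor's correlation with y) and r_12 (their intercorrelation)."""
    denom = 1 - r_12 ** 2
    beta1 = (r_y1 - r_y2 * r_12) / denom
    beta2 = (r_y2 - r_y1 * r_12) / denom
    r_squared = beta1 * r_y1 + beta2 * r_y2  # share of variance in y explained by both
    return beta1, beta2, math.sqrt(r_squared)

# Hypothetical correlations: predictor 1 = GMA test, predictor 2 = work sample test
beta_gma, beta_ws, multiple_r = standardized_betas(r_y1=0.51, r_y2=0.54, r_12=0.38)
print(f"GMA weight: {beta_gma:.2f}, work sample weight: {beta_ws:.2f}, combined R: {multiple_r:.2f}")
# prints: GMA weight: 0.36, work sample weight: 0.40, combined R: 0.63
```

When the two predictors overlap (r_12 > 0), each weight is reduced by the part of its correlation that the other predictor already explains. That adjustment is why the weights differ from the raw correlations.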

If algorithmic interviews count as work sample tests, this research suggests that they predict future performance, perhaps even better than a GMA/IQ test.

Recursive Cactus does not believe there is such a connection:

“There is little overlap between what you do at work and solving algorithm problems. Most engineers rarely deal with graphs or dynamic programming. In application programming, the most common data structures are lists and dictionaries. But interview questions about those are often seen as trivial, so the focus shifts to other categories of problems.”

In his view, algorithm questions are like Microsoft's puzzle questions: you study problems for interviews that you will never encounter in real work. If that is true, it does not fit well with Hunter and Schmidt's findings.

Recursive Cactus may not be convinced, but interviewers like Spolsky still believe these skills are essential for a productive programmer.

“[…] ‘[…]?’ […]

[…] Ruby on Rails 2.0 […]”

- Joel Spolsky

Spolsky admits that traditional technical interview questions cannot simulate real work problems. Instead, they test computer science aptitude, which is general in some ways but domain-specific in others. You could call it general intelligence within a particular domain.

And if you don't accept that computer science intelligence is general intelligence, McDowell offers another argument:

“There's another reason why data structure and algorithm knowledge comes up: it's hard to find problem-solving questions that don't involve them. It turns out that the vast majority of problem-solving questions involve some of these fundamentals.”

- Gayle Laakmann McDowell

This may be true if you look at the world through the lens of computer science. But it would be unfair to conclude that non-programmers are worse at solving problems.

So at this point, we are no longer talking about general intelligence as Spearman originally defined it. We are talking about a specific kind of intelligence, combined with general intelligence. It is defined and promoted by people who came up through traditional computer science education. Spolsky, McDowell, Microsoft's Bill Gates and four of the five FAANG founders all studied computer science at an Ivy League university or Stanford.

Perhaps when we talk about the bar, we really mean something subjective. It would depend on who is doing the measuring and differ from person to person.

This hypothesis is supported by the ratings that candidates receive from interviewers on our platform.

The bar is subjective

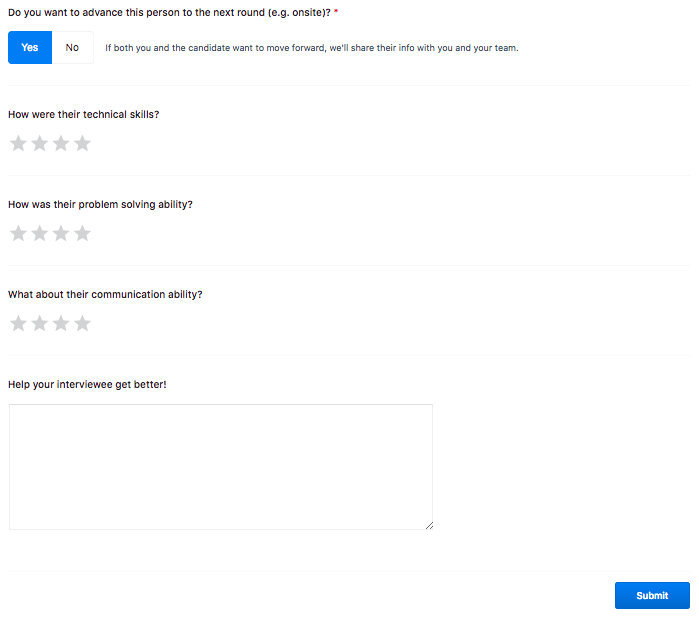

On interviewing.io, people practice technical interviews online and anonymously with interviewers from top companies. The questions are similar to what you might hear in a phone screen for a backend developer role. Interviewers usually come from companies like Google, Facebook, Dropbox and Airbnb. Here are some examples of such interviews.

After each interview, interviewers rate candidates on several dimensions on a scale from 1 to 4, where 1 is “bad” and 4 is “amazing!”:

- technical skills
- communication skills
- problem-solving skills

Here is what the feedback form looks like:

If you feel confident, you can skip the training and apply for a real interview directly with our partner companies (more on this later).

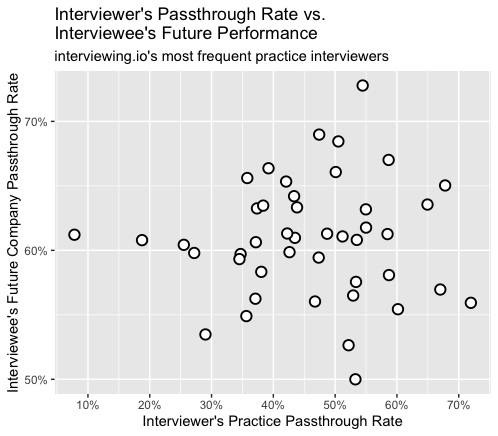

Looking at our most active interviewers, we noticed that they differ in the percentage of candidates they would hire (their "pass rate"). This rate ranges from 30% to 60%. Some interviewers seem much tougher than others.

Interviewees and interviewers are anonymous and matched at random [1], so we do not expect candidate quality to differ much between interviewers. That means candidate quality should not explain the difference. Moreover, even after controlling for candidate attributes such as experience, pass rates still differ between interviewers [2].
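A minimal sketch shows why different personal thresholds alone can produce this spread. It is a toy model, not our actual data or methodology. Every interviewer receives the same randomly matched candidate pool, and the only thing that differs is a hypothetical personal threshold. The thresholds, the noise level and `simulate_pass_rates` are all made up for illustration.

```python
import random

def simulate_pass_rates(thresholds, n_candidates=10_000, noise_sd=0.5, seed=1):
    """Toy model: each candidate has latent skill ~ N(0, 1) and is matched to a
    random interviewer, who observes skill plus noise and says "hire" when the
    observation exceeds that interviewer's personal threshold."""
    rng = random.Random(seed)
    hires = [0] * len(thresholds)
    seen = [0] * len(thresholds)
    for _ in range(n_candidates):
        skill = rng.gauss(0, 1)
        i = rng.randrange(len(thresholds))  # random matching: same pool for everyone
        seen[i] += 1
        if skill + rng.gauss(0, noise_sd) > thresholds[i]:
            hires[i] += 1
    return [h / s for h, s in zip(hires, seen)]

thresholds = [-0.3, 0.0, 0.6]  # hypothetical: lenient, neutral, strict
for t, rate in zip(thresholds, simulate_pass_rates(thresholds)):
    print(f"threshold {t:+.1f}: pass rate {rate:.0%}")
```

In this setup, the pass rates come out near 60%, 50% and 30%. Yet every interviewer sees statistically identical candidates.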

Perhaps some interviewers are stricter simply because their bar is higher. Candidates who land a stricter interviewer do receive lower ratings. But they tend to do better in their next interview.

This result can be interpreted in several ways:

- Stricter interviewers systematically underestimate candidates

- Candidates are so stung by tough interviewers that they improve between interviews in an effort to meet that interviewer's higher bar

If the latter is true, candidates who practiced with stricter interviewers should do better in real interviews. However, we found no correlation between interviewer strictness and candidates' pass rates in later real interviews on our platform [3].

The interviewers on our platform are representative of the people a candidate would meet in a real interview. They are the same people who conduct phone screens and onsite interviews at real tech companies. And since we don't dictate how they interview, these graphs also reflect the spread of opinions about your performance. That is the spread at the moment you hang up the phone or leave the office.

This suggests that, regardless of your actual answers, your chances of getting the job depend heavily on who interviews you. In other words, the bar is subjective.

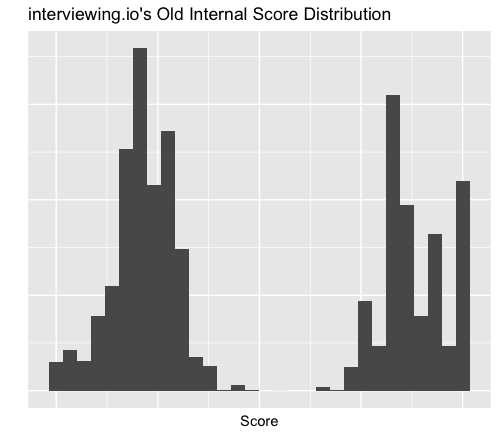

This variation between interviewers forced us to rethink our own bar, the one we use to decide which candidates may interview with our partner companies. Our definition closely resembled Spolsky's binary criterion ("smart"). It overweighted the interviewer's hire/no-hire opinion and underweighted the other three criteria. The result was a bimodal, camel-shaped distribution, shown in the chart.

Our scoring system already correlated reasonably well with future interview results. But we found that the interviewer's hire/no-hire vote was less strongly correlated with future results than our other criteria. We reduced its weight, which ultimately improved our predictive accuracy [4]. In "Talladega Nights," Ricky Bobby learned that there are other places in a race besides first and last. In the same way, we learned that it helps to move beyond the binary of "hire/no hire" or, if you prefer, "smart/not smart."

Of course, we could not eliminate subjectivity entirely, since the other criteria are also scored by the interviewer. This is what makes assessment hard: the interviewer is the measuring instrument for the candidate's ability.

In this situation, the accuracy of any single measurement becomes uncertain. It is as if interviewers were measuring with sticks of different lengths while each assumed their own stick was exactly one meter long.

When we talked to our interviewers about how they assess candidates, the different-length sticks theory held up. Here are some of the approaches they described:

- Ask two questions. Pass if the candidate answers both.

- Ask questions of increasing difficulty (easy, medium, hard). Pass if the candidate solves the medium one.

- Speed matters a lot. Pass if the answers come quickly (with "quickly" never clearly defined).

- Speed doesn't really matter. Pass if there is a working solution.

- Candidates start with the top score and lose points for every mistake.

Having different evaluation criteria is not necessarily bad, and in fact it is completely normal. It just introduces a lot of variance into our measurements, which means candidates' ratings are not very precise.
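To see how much these rubrics can disagree, here is a sketch that encodes each approach described by our interviewers as a function. It applies all of them to the same interview. The `Answer` record, the 20-minute "fast" cutoff and the point values are hypothetical choices made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Answer:
    difficulty: str  # "easy", "medium" or "hard"
    solved: bool     # did the candidate reach a working solution?
    minutes: float   # time spent on the question
    mistakes: int    # bugs or false starts the interviewer noticed

def two_questions(answers):
    # Ask two questions; pass if both are solved
    return len(answers) >= 2 and all(a.solved for a in answers[:2])

def solves_medium(answers):
    # Easy, medium, hard; pass if a medium (or harder) question is solved
    return any(a.solved and a.difficulty in ("medium", "hard") for a in answers)

def fast_answers(answers, limit_minutes=20):
    # Speed matters; "fast" is never defined, so 20 minutes is an assumption
    return all(a.solved and a.minutes <= limit_minutes for a in answers)

def working_solution(answers):
    # Speed doesn't matter; pass with any working solution
    return any(a.solved for a in answers)

def deduct_points(answers, top_score=4, pass_score=3):
    # Start at the top score and lose a point per mistake
    return top_score - sum(a.mistakes for a in answers) >= pass_score

rubrics = [two_questions, solves_medium, fast_answers, working_solution, deduct_points]

# One hypothetical interview: an easy question solved quickly, a medium one solved slowly
interview = [Answer("easy", True, 12, 0), Answer("medium", True, 35, 2)]
for rubric in rubrics:
    verdict = "pass" if rubric(interview) else "fail"
    print(f"{rubric.__name__:>16}: {verdict}")
```

For this one performance, three rubrics say pass and two say fail.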

The problem is that when someone talks about the bar, they usually ignore the uncertainty in the measurements.

The common advice is to hire only top-tier candidates.

“A good rule of thumb is to hire only people who are better than you. No compromises. Ever.”

- Laszlo Bock

“Don't lower your standards, no matter how difficult it is to find these great candidates.”

- Joel Spolsky

“In the Macintosh Division, we had a saying: ‘A players hire A players; B players hire C players,’ meaning that great people hire great people.”

- Guy Kawasaki

"Every employee hired should be better than 50% of those currently in similar roles - this raises the bar"

- Amazon blog post on the Bar Raiser program

This is all good advice. But it assumes that "quality" can be measured reliably, and as we have seen, that is not always the case.

Even when uncertainty is acknowledged, the variance is attributed to the candidate's ability rather than to the measurement process or the interviewer.

“In the middle, there are a large number of ‘maybes’ who seem like they might be able to contribute something. The trick is telling the superstars apart from the maybes, because you don't want to hire any of the maybes. Ever.

…

If you're having trouble deciding, there's a very simple solution: NO HIRE. Just don't hire people you aren't sure about.”

- Joel Spolsky

Evaluating candidates is not a deterministic process, yet many people treat it as one.

Why is the bar so high?

The phrase "don't compromise on quality" is not really about compromise. It is about making decisions under uncertainty. As the quotes above show, the usual strategy is to hire only when you are completely certain.

Whatever stick you measure with, this strategy pushes the bar very high. Being completely confident in a candidate means minimizing the chance of a bad hire (a "false positive"), and companies do everything they can to avoid one.
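The trade-off between false positives and false negatives can be sketched under a simple, assumed model:

- True skill is normally distributed.
- A candidate counts as "good" if their skill is above average.
- The interview observes skill plus noise.

The threshold values, the noise level and `hiring_errors` are hypothetical.

```python
import random

def hiring_errors(threshold, n=20_000, noise_sd=0.7, seed=3):
    """Return (share of hires who are 'bad', share of 'good' candidates rejected).

    Assumptions: true skill ~ N(0, 1); 'good' means skill > 0; the interview
    observes skill plus N(0, noise_sd) noise; we hire if that exceeds `threshold`.
    """
    rng = random.Random(seed)
    hired = hired_bad = good = rejected_good = 0
    for _ in range(n):
        skill = rng.gauss(0, 1)
        is_good = skill > 0
        is_hired = skill + rng.gauss(0, noise_sd) > threshold
        hired += int(is_hired)
        good += int(is_good)
        hired_bad += int(is_hired and not is_good)      # false positive
        rejected_good += int(is_good and not is_hired)  # false negative
    return hired_bad / hired, rejected_good / good

for threshold in (0.0, 0.5, 1.0):
    bad_share, missed_good = hiring_errors(threshold)
    print(f"threshold {threshold:.1f}: {bad_share:.0%} of hires are bad, "
          f"{missed_good:.0%} of good candidates rejected")
```

Raising the threshold lowers the share of bad hires but rejects a growing fraction of good candidates. With noisy measurement, you cannot reduce one kind of error without increasing the other.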

“A bad hire is very expensive, given the time it takes to clean up after their mistakes. Firing someone you hired by mistake can take months and be a nightmare, especially if they decide to sue.”

- Joel Spolsky

Hunter and Schmidt estimated the cost of a bad hire: "The standard deviation... is at least 40% of the average annual salary." Assuming an average engineering salary of $100,000, that comes to $40,000 today.

But set the bar too high, and you will likely reject some good candidates (false negatives). McDowell explains why companies don't mind many false negatives:

“From the company's perspective, it's actually acceptable that some good candidates are rejected... they're willing to live with that. Of course, they would prefer not to, since it raises their recruiting costs. But it is an acceptable tradeoff, provided they can still hire enough good candidates.”

In other words, it is worth holding out for the best candidate if the difference in expected performance is large compared to the recruiting cost of continuing the search. On top of that, the HR and legal costs of potentially problematic employees push the bar as high as possible.

This looks like a very rational cost-benefit calculation. But has anyone actually run the numbers? If so, we'd love to hear from you. It seems very hard to do in practice.

Since these calculations are all done by gut feel, we can do the same and argue that the bar should not be so high.

As discussed earlier, candidate ability is not binary. Not every supposedly "bad" hire will turn into Spolsky's nightmare scenario. So the expected performance difference between "good" and "bad" employees may be smaller than assumed.

Meanwhile, recruiting costs may be higher than assumed, because candidates get harder to find as the required qualifications rise. By definition, the higher the bar, the fewer people clear it.

Schmidt and Hunter's calculation of the cost of a bad hire compares candidates only within an existing applicant pool. It does not account for the cost of attracting high-quality candidates into the pool in the first place, which is a major challenge for many tech recruiting teams today.

And if other tech companies use the same hiring strategy, the competition raises the odds that a candidate will turn down an offer. That, in turn, increases the time it takes to fill a position.

To sum up, suppose the expected performance difference between "good" and "bad" candidates is smaller than assumed and recruiting costs are higher than assumed. Then it makes sense to lower the bar.
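None of the sources above publishes this calculation, so here is a back-of-the-envelope sketch of the reasoning. Every number is hypothetical except the 40%-of-$100,000 figure. The sketch makes three assumptions:

- It treats that figure as a rough stand-in for the performance gap between a good and a bad hire.
- It models expected time-to-fill as 1 / (candidates per month × pass rate × acceptance rate).
- It charges a made-up monthly cost for an open position.

The function names are illustrative.

```python
def expected_months_to_fill(candidates_per_month, pass_rate, accept_rate):
    """Expected months until a hire, assuming each candidate independently passes
    and then accepts, so hires arrive at a constant average rate."""
    return 1 / (candidates_per_month * pass_rate * accept_rate)

def net_value_of_higher_bar(perf_gain_per_year, tenure_years, vacancy_cost_per_month,
                            low_bar_pass, high_bar_pass, candidates_per_month, accept_rate):
    """Value gained by hiring at the higher bar minus the cost of the longer search."""
    extra_months = (expected_months_to_fill(candidates_per_month, high_bar_pass, accept_rate)
                    - expected_months_to_fill(candidates_per_month, low_bar_pass, accept_rate))
    return perf_gain_per_year * tenure_years - vacancy_cost_per_month * extra_months

sd_output = 0.40 * 100_000  # 40% of a $100,000 salary, as quoted above

# Scenario A: large performance gap, little competition for candidates
net_a = net_value_of_higher_bar(sd_output, 2, 20_000, 0.3, 0.1, 10, 0.5)
# Scenario B: smaller gap, and offers are declined more often because rivals use the same bar
net_b = net_value_of_higher_bar(sd_output / 4, 2, 20_000, 0.3, 0.1, 10, 0.3)
print(f"A: {net_a:+,.0f}  B: {net_b:+,.0f}")
```

With a large gap and little competition, the higher bar pays for itself (about +$53,000 in scenario A). With a smaller gap and acceptance rates pushed down by competitors, the same higher bar costs more than it returns (about -$24,000 in scenario B).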

Even if a company does hire an underperforming employee, it can use training and management tools to limit the damage. After all, people's productivity grows over time as they acquire new skills and knowledge.

However, people rarely think about employee development when they think about hiring. Laszlo Bock touches on it in places, but the two topics are mostly discussed separately. If you do consider them together, hiring and development become two ways of raising productivity: paying to train existing employees, or paying to hire new ones.

You can even think of it as a tradeoff. Instead of developing employees internally, why not outsource development? Let others figure out how to develop raw talent, and later pay recruiters to find ready-made professionals. Why shop at Whole Foods and cook at home when you can pay for ready-made meals to be delivered? Why spend time on management and training when you could be doing "real work" (that is, engineering)?

Perhaps the bar is set so high because companies don't know how to develop people effectively.

So companies reduce their risk by shifting the burden of career growth onto candidates. In turn, candidates like Recursive Cactus are left with little choice but to practice interviewing.

At first I thought Recursive Cactus was an exception. It turned out he was not alone.

Candidates practice before the interview

Last year, we asked candidates how many hours they had spent preparing for interviews. Almost half of respondents said they spent 100 hours or more [5].

We were curious how the people on the hiring side see this. Alina asked a similar question on Twitter. The results showed that they greatly underestimate how much effort candidates put into interview preparation.

This gap seems to confirm an unspoken rule of hiring: if you're not one of the smartest (whatever that means), that's not our problem.

Rethinking the bar

So this is what the bar is: a high standard that companies set to avoid false positives. It is unclear, though, whether companies have actually done a proper cost-benefit analysis. The high bar may partly reflect a reluctance to invest in employee development.

The bar is largely meant to measure general intelligence. But the tools actually used don't necessarily match the scientific literature, and even that literature is open to question [6]. In practice, the bar measures a domain-specific, computer-science kind of intelligence, and the measurement varies depending on who interviews you.

Despite all this variation, we talk about the bar as if it had a clear meaning. That lets hiring managers make clean binary decisions. But it also keeps them from asking critically whether their company's definition of the bar could be improved.

It also helps explain why Recursive Cactus spends so much time practicing. Part of the reason is that his current company is not developing his skills. Because hiring criteria vary so widely, he prepares for a huge range of possible questions and interviewers. He studies topics he won't necessarily use in his daily work, all to pass as one of the people considered "smart."

This is the system as it stands, and it has taken a significant toll on his personal life.

“My wife has told me more than once that she misses me. I have a full, happy life, but I feel I have to throw myself into preparation for months to be competitive in interviews. No single mother could prepare like this.”

- Recursive Cactus

It also affects his current job and his colleagues.

“The process takes so much effort that I can no longer give 100% at work. I wish I could do better, but I can't look after my future by practicing algorithms four hours a day and also do my job well.

It's not a pleasant feeling. I like my colleagues, and I feel responsible. I know I won't be fired, but I realize it puts an extra burden on them.”

- Recursive Cactus

It's worth remembering how all these small decisions add up. Choices about false positives, interview structure, puzzles, hiring criteria and employee development form a system that ultimately shapes people's personal lives. That includes not only the candidates themselves but everyone around them.

Hiring is far from a solved problem. And even as we improve it, it is unclear whether we can ever eliminate all of this uncertainty. After all, it is hard to predict how someone will perform at work after spending an hour or two with them in an artificial setting. We should certainly try to minimize uncertainty, but it also helps to accept it as a natural part of the process.

The system can be improved. That requires not only new ideas but also revisiting ideas and assumptions made decades ago. We should build on earlier work and move forward rather than cling to it.

We are confident that everyone in tech can help improve how our industry hires. We know you can do it, if only because you're smart.

[1] There is some potential for bias here, particularly in when candidates choose to practice. A cursory analysis suggests the effect is not significant, but we are still looking into it (we may write about it on the blog in the future). Candidates can also choose between a traditional algorithmic interview and a system design interview, but the vast majority choose the traditional one. The pass rates shown are for traditional interviews.

[2] You may be wondering how strong the candidates on interviewing.io are. Their true level is hard to determine, which is the main theme of this post. However, our interviewers, who are practicing engineers, say the average interviewing.io candidate is on par with the candidates they see at their own companies, especially at the phone screen stage.

[3] This includes only candidates who met our internal hiring bar and went on to interview in person. The graph does not reflect the entire population of candidates who were interviewed.

[4] You may remember that we previously used an algorithm that adjusted scores for interviewer strictness. On closer examination, we found that it introduced variance into candidates' scores in unexpected ways, so we no longer rely on it as heavily.

[5] The spikes at 100 and 200 hours are artifacts of how the survey was worded and capped. We asked three questions:

1) During your last job search, how many hours did you spend preparing for interviews?
2) How many hours did you spend preparing for interviews before signing up for interviewing.io?
3) How many hours did you spend preparing for interviews after signing up for interviewing.io (not counting time on the site)?

Answers to each question were capped at 100 hours, but for many respondents the sum of answers 2 and 3 exceeded 100. The median answer to question 1 was 94, almost identical to the median of the sum of answers 2 and 3. So we used that sum for the part of the distribution above 100 hours. Lessons learned: set the maximum higher than you expect, and double-check your survey.
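The capping artifact is easy to reproduce. In this sketch, with made-up responses, anyone whose true hours exceed the cap on both questions 2 and 3 lands at exactly 200 once the answers are summed. That is where the spike comes from. The same mechanism piles single capped answers up at 100.

```python
from collections import Counter

CAP = 100  # each survey answer was capped at 100 hours

def capped(hours, cap=CAP):
    """What a respondent could actually enter."""
    return min(hours, cap)

# Hypothetical true prep hours for five respondents
true_before = [30, 80, 150, 250, 60]   # question 2: before signing up
true_after = [10, 40, 120, 300, 20]    # question 3: after signing up

q2 = [capped(h) for h in true_before]
q3 = [capped(h) for h in true_after]
totals = [a + b for a, b in zip(q2, q3)]

print(Counter(totals))  # both answers capped -> the total sits exactly at 200
```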

[6] I find this study somewhat hard to evaluate. I am not a psychologist, and methods like meta-analysis are a bit foreign to me, although they are built on familiar statistical tools. The question is not whether those tools are sound, but how hard it is to reason about the research inputs. Like spaghetti code, the validation of the underlying datasets is spread across decades of earlier scientific work, which makes analysis difficult. This is probably in the nature of psychology, where useful data is harder to obtain than in the natural sciences. Other methodological questions also arise; they are discussed in more detail in this article.