See also:

Machine Learning. Neural Networks (Part 1): The Perceptron Learning Process

Machine Learning. Neural Networks (Part 2): Modeling OR, XOR with TensorFlow.js

In the previous articles, only one type of neural network layer was used: the dense (fully connected) layer, in which each neuron is connected to every neuron of the previous layer.

To process, for example, a 24x24 black-and-white image with such a layer, we would have to turn the image matrix into a vector of 24x24 = 576 elements. This transformation loses an important property: the relative position of pixels along the vertical and horizontal axes. Moreover, in most cases a pixel in the upper-left corner of the image has hardly any meaningful effect on a pixel in the lower-right corner, yet a dense layer connects every input pixel to every neuron.
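Here is a minimal plain-JavaScript sketch of that flattening. The `flatten` helper and the row-major order are illustrative assumptions, not code from the article. A 24x24 matrix becomes a 576-element vector, and two pixels that are vertical neighbors in the image end up 24 positions apart.

```javascript
// Flatten a 2D image (an array of rows) into a 1D vector, row by row.
function flatten(image) {
  const vector = [];
  for (const row of image) {
    for (const pixel of row) vector.push(pixel);
  }
  return vector;
}

const SIZE = 24;
// Made-up 24x24 image; each pixel stores its own (row, col) position
const image = Array.from({ length: SIZE }, (_, row) =>
  Array.from({ length: SIZE }, (_, col) => ({ row, col }))
);

const vector = flatten(image);
console.log(vector.length); // 576

// Position in the vector of pixel (5, 7) and of the pixel right below it, (6, 7)
const indexOf = (r, c) => vector.findIndex((p) => p.row === r && p.col === c);
console.log(indexOf(6, 7) - indexOf(5, 7)); // 24: neighbors in the image, far apart in the vector
```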

To overcome these shortcomings, image processing uses convolutional layers, the building blocks of convolutional neural networks (CNN).

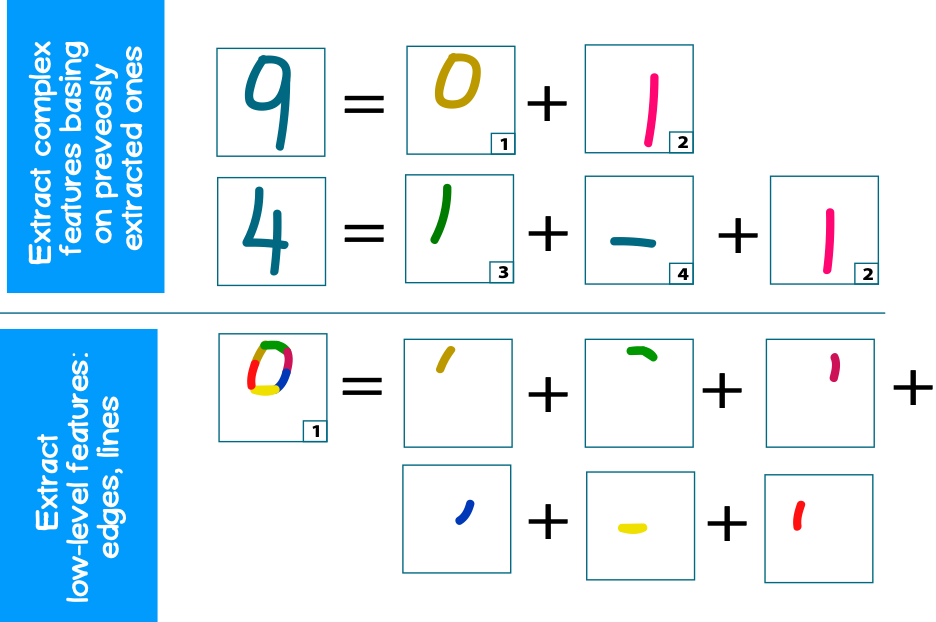

The main purpose of a CNN is to extract small fragments of the original image that contain characteristic features, such as edges, contours, arcs, or facets. At the following processing levels, more complex repeating texture fragments (circles, square shapes, etc.) can be recognized from these edges, and these in turn can be combined into even more complex textures (part of a face, a car wheel, etc.).

For example, consider a classic problem: recognizing images of digits. Each digit has its own set of characteristic shapes (circles, lines), and each circle or line can in turn be composed of smaller edges (Figure 1).

1. Convolutional layer

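The core element of a CNN is the filter (kernel): a small matrix of weights that slides over the image and performs the convolution operation at each position. The filter values are the weights of the CNN, that is, its trainable parameters.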

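For example, if we have a 2x2 filter (matrix K) and a 2x2 image (matrix N), the result of the convolution is a single number:

$$
y = k_{11} n_{11} + k_{12} n_{12} + k_{21} n_{21} + k_{22} n_{22}
$$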


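A similar expression describes the output of a neuron in a fully connected (dense) layer, here with four inputs:

$$
y = w_1 x_1 + w_2 x_2 + w_3 x_3 + w_4 x_4
$$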

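In other words, a convolution computes the same weighted sum as a neuron. The difference is that the filter is applied to a small local region of the image, and the same weights are reused at every position as the filter slides over it.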

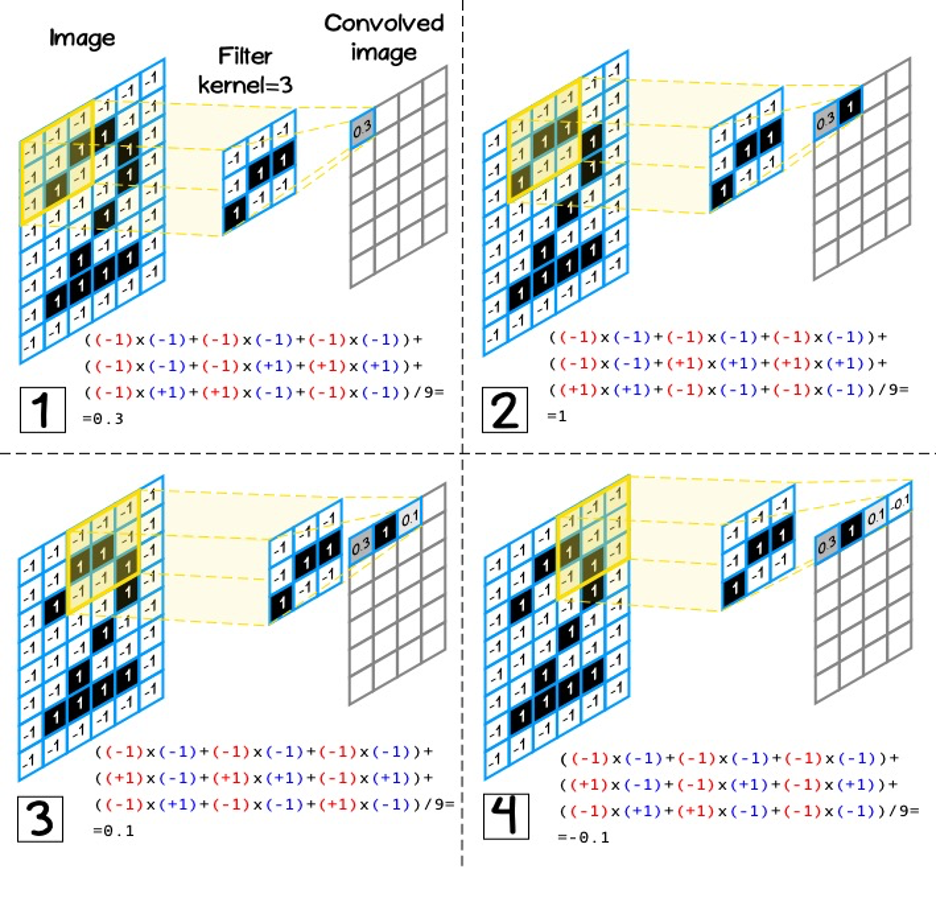

Figure 2.
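The following small sketch makes the sliding-window idea concrete. It uses plain JavaScript rather than TensorFlow.js, and the `convolve2d` helper is hypothetical. It uses stride 1 and no padding. Like most deep learning libraries, it computes cross-correlation, meaning the kernel is not flipped. A 2x2 kernel on a 2x2 image yields one number, while on a larger image the same weights are reused at every position.

```javascript
// Slide a kh x kw kernel over an nh x nw image (stride 1, no padding).
// At every position, multiply the overlapping elements and sum them up.
function convolve2d(image, kernel) {
  const nh = image.length, nw = image[0].length;
  const kh = kernel.length, kw = kernel[0].length;
  const out = [];
  for (let i = 0; i <= nh - kh; i++) {
    const row = [];
    for (let j = 0; j <= nw - kw; j++) {
      let sum = 0;
      for (let a = 0; a < kh; a++) {
        for (let b = 0; b < kw; b++) {
          sum += image[i + a][j + b] * kernel[a][b];
        }
      }
      row.push(sum);
    }
    out.push(row);
  }
  return out;
}

// A 2x2 kernel K applied to a 2x2 image N gives a single number
const K = [[1, 2], [3, 4]];
const N = [[5, 6], [7, 8]];
console.log(convolve2d(N, K)); // [ [ 70 ] ]  (1*5 + 2*6 + 3*7 + 4*8)

// The same kernel on a 3x3 image: the window moves, the weights stay the same
const image3 = [[1, 0, 2], [0, 1, 0], [2, 0, 1]];
console.log(convolve2d(image3, K)); // [ [ 5, 7 ], [ 8, 5 ] ]
```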

The filter size (kernel size) is usually odd: 3, 5, 7.

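When a filter (kernel) of size [kh, kw] is applied to an image of size [nh, nw], the output has size (Figure 3):

$$
(n_h - k_h + 1) \times (n_w - k_w + 1)
$$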

![Figure 3: convolution with a [3,3] filter](https://habrastorage.org/getpro/habr/upload_files/ebc/66a/8ef/ebc66a8ef2e7f8268951b9d3bcaf08ba.png)

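Each convolution therefore shrinks the image. In addition, pixels at the border take part in fewer convolutions than pixels in the center, so information near the edges of the image is partly lost.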

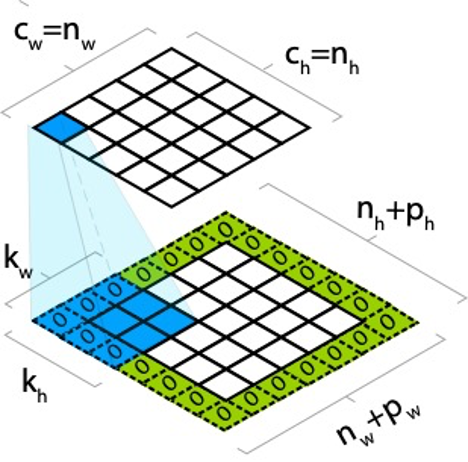

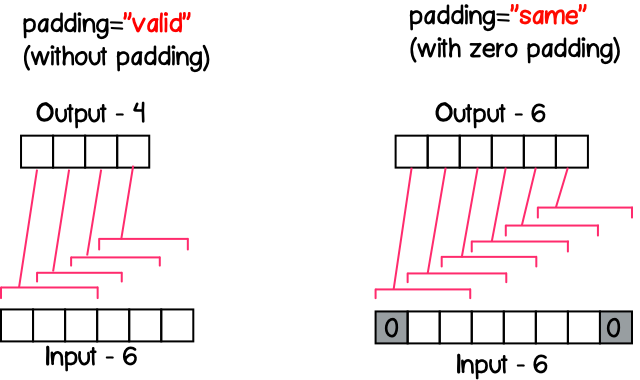

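To solve this problem, padding is used: rows and columns of zeros are added around the image. If a total of ph rows and pw columns are added, the output size becomes:

$$
(n_h - k_h + p_h + 1) \times (n_w - k_w + p_w + 1)
$$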

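A common choice is ph = kh - 1 and pw = kw - 1, which gives the output the same height and width as the input. With an odd kernel size, the padding can then be split evenly between the two sides: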

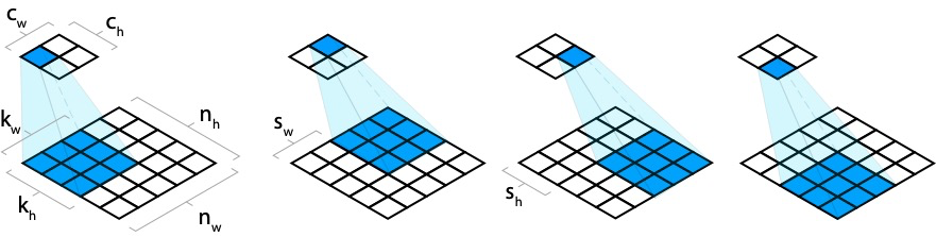
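The following sketch shows zero padding under the convention that ph and pw count all added rows and columns (top plus bottom, left plus right). The `zeroPad` and `outputSize` helpers are hypothetical names used only for illustration.

```javascript
// Surround the image with p rows/columns of zeros on every side.
function zeroPad(image, p) {
  const width = image[0].length + 2 * p;
  const zeros = (n) => new Array(n).fill(0);
  const padded = [];
  for (let i = 0; i < p; i++) padded.push(zeros(width));
  for (const row of image) padded.push([...zeros(p), ...row, ...zeros(p)]);
  for (let i = 0; i < p; i++) padded.push(zeros(width));
  return padded;
}

// Output size along one axis: n - k + pTotal + 1, where pTotal counts
// the zero rows (or columns) added on both sides together.
const outputSize = (n, k, pTotal) => n - k + pTotal + 1;

const image = Array.from({ length: 5 }, () => new Array(5).fill(1)); // 5x5 image of ones
const padded = zeroPad(image, 1); // one zero row/column on each side, so ph = pw = 2

console.log(padded.length, padded[0].length); // 7 7
console.log(outputSize(5, 3, 0)); // 3: without padding the image shrinks
console.log(outputSize(5, 3, 2)); // 5: ph = kh - 1 keeps the original size
```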

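So far the filter has moved one element at a time. To reduce computation or to downsample the image, it can instead move several elements at once. The number of elements by which the filter moves at each step is called the stride.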

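With a vertical stride sh and a horizontal stride sw, the output size is:

$$
\left\lfloor \frac{n_h - k_h + p_h + s_h}{s_h} \right\rfloor \times \left\lfloor \frac{n_w - k_w + p_w + s_w}{s_w} \right\rfloor
$$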
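When padding and stride are combined, the output size along one axis is floor((n - k + p + s) / s), where p is the total padding. The following small sketch uses hypothetical helpers to check this against a direct count of window positions.

```javascript
// Output size along one axis: input size n, kernel size k,
// total padding p (both sides together) and stride s.
function convOutputSize(n, k, p, s) {
  return Math.floor((n - k + p + s) / s);
}

// Cross-check: count the positions where the window still fits
// inside the padded input when moving by s elements at a time.
function countPositions(n, k, p, s) {
  let count = 0;
  for (let start = 0; start + k <= n + p; start += s) count++;
  return count;
}

// Hypothetical 8x8 image, 3x3 filter, one zero row/column on each side (p = 2)
console.log(convOutputSize(8, 3, 2, 1)); // 8
console.log(convOutputSize(8, 3, 2, 2)); // 4
console.log(countPositions(8, 3, 2, 2)); // 4
```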

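A convolutional layer usually applies several filters, and each filter produces its own output channel (feature map). The input can also have several channels. Suppose the first convolutional layer (CONV1) receives a 9x9x1 image (a single channel, that is, a black-and-white image). It applies 2 filters with a stride of 1x1 and padding chosen so that the output keeps the height and width of the input. The output is then 9x9x2, where 2 is the number of filters (Figure 6). CONV2 uses 2x2 filters, but since its input has 2 channels, each filter actually has size 2x2x2. The output of CONV2 is 9x9x4, where 4 is the number of filters.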

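In general, when a filter of size kw x kh is applied to an input of size nw x nh x nd, where nd is the number of input channels (depth), the filter actually has size kw x kh x nd (see Figure 6, CONV2).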
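The number of trainable parameters follows directly from this. The sketch below assumes one bias per filter, which is the default for tf.layers.conv2d. It lists the kernel shape in the [kh, kw, nd, filters] order that the TensorFlow.js example later in the article also uses ([3, 3, 1, 1]). `convParams` is a hypothetical helper.

```javascript
// Parameters of one convolutional layer with `filters` filters of size kh x kw
// applied to an input with nd channels (assumes one bias per filter).
function convParams(kh, kw, nd, filters) {
  const weights = kh * kw * nd * filters;
  return {
    kernelShape: [kh, kw, nd, filters],
    weights,
    biases: filters,
    total: weights + filters,
  };
}

// CONV2 from the example: 2x2 filters, 2 input channels, 4 filters
console.log(convParams(2, 2, 2, 4)); // kernelShape [2, 2, 2, 4], 32 weights + 4 biases = 36
// One 3x3 filter over an RGB image: 3x3x3 = 27 weights + 1 bias = 28
console.log(convParams(3, 3, 3, 1).total); // 28
```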

Figure 7 shows a 3x3 filter applied to an RGB image. Since the input has 3 channels, the filter has size 3x3x3.
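The following is a plain-JavaScript sketch of a multi-channel convolution, using a hypothetical helper with stride 1 and no padding. The products are summed over height, width, and all channels, so one 3x3x3 filter turns an RGB image into a single-channel output.

```javascript
// Convolve an nh x nw x nd input with one kh x kw x nd filter (stride 1, no padding).
// Indexing: input[row][col][channel], kernel[row][col][channel].
function convolveMultiChannel(input, kernel) {
  const nh = input.length, nw = input[0].length, nd = input[0][0].length;
  const kh = kernel.length, kw = kernel[0].length;
  const out = [];
  for (let i = 0; i + kh <= nh; i++) {
    const row = [];
    for (let j = 0; j + kw <= nw; j++) {
      let sum = 0;
      for (let a = 0; a < kh; a++) {
        for (let b = 0; b < kw; b++) {
          for (let c = 0; c < nd; c++) {
            sum += input[i + a][j + b][c] * kernel[a][b][c]; // sum over channels too
          }
        }
      }
      row.push(sum);
    }
    out.push(row);
  }
  return out;
}

// Made-up 4x4 RGB image in which every pixel is [R, G, B] = [1, 2, 3]
const rgb = Array.from({ length: 4 }, () => Array.from({ length: 4 }, () => [1, 2, 3]));
// A 3x3x3 filter of ones
const filter = Array.from({ length: 3 }, () => Array.from({ length: 3 }, () => [1, 1, 1]));

const result = convolveMultiChannel(rgb, filter);
console.log(result.length, result[0].length); // 2 2 (a single output channel)
console.log(result[0][0]); // 54 = 9 pixels in the window * (1 + 2 + 3)
```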

Convolutional layer in TensorFlow.js

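In TensorFlow.js, a convolutional layer is created with tf.layers.conv2d, which takes a configuration object. Its main parameters are: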

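- filters – number – the number of filters (output channels)

- kernelSize – number | number[] – the filter size; if a number is given, the filter is square; if an array, its height and width may differ

- strides – number | number[] – the stride of the filter; optional, defaults to [1,1]

- padding – 'same' | 'valid' – the padding mode; optional, defaults to 'valid'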

Let's look at the two padding modes in more detail.

'same'

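With 'same', zeros are added around the input as needed, so that the output size equals the input size divided by the stride, rounded up. For example, with an input size of 13 and a stride of 5: 13/5 = 2.6, which is rounded up to 3 (Figure 8).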

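With stride=1, the output has the same size as the input (Figure 9), which is where the name 'same' comes from. With a larger stride, the output is smaller (Figure 8).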

'valid'

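With 'valid', no zeros are added. The filter is applied only where it fits entirely inside the input, and elements that the filter, moving by strides, can no longer cover are ignored (Figure 8).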
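The two modes can be summarized as output size = ceil(n / s) for 'same' and ceil((n - k + 1) / s) for 'valid', which matches how TensorFlow computes them. The sketch below is plain JavaScript, not TensorFlow.js. Its parameter names only mirror the tf.layers.conv2d config. The kernel size of 6 in the example is a hypothetical value chosen to pair with the 13 / 5 example above.

```javascript
// Output shape [height, width, filters] of a 2D convolution for an input of
// shape [height, width, channels], using the 'same' / 'valid' rules.
// Parameter names mirror tf.layers.conv2d, but this is plain JavaScript.
function conv2dOutputShape([h, w], { filters, kernelSize, strides = 1, padding = 'valid' }) {
  const pair = (v) => (Array.isArray(v) ? v : [v, v]); // a number means a square
  const [kh, kw] = pair(kernelSize);
  const [sh, sw] = pair(strides);
  const size = (n, k, s) =>
    padding === 'same'
      ? Math.ceil(n / s)            // zeros are added as needed
      : Math.ceil((n - k + 1) / s); // only positions where the filter fits
  return [size(h, kh, sh), size(w, kw, sw), filters];
}

console.log(conv2dOutputShape([13, 13, 1], { filters: 1, kernelSize: 6, strides: 5, padding: 'same' }));  // [3, 3, 1]
console.log(conv2dOutputShape([13, 13, 1], { filters: 1, kernelSize: 6, strides: 5, padding: 'valid' })); // [2, 2, 1]
console.log(conv2dOutputShape([9, 9, 1], { filters: 2, kernelSize: 3, padding: 'same' }));               // [9, 9, 2]
```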

Applying a convolution filter to an image in TensorFlow.js

Let's apply a single convolution filter to an image and look at the result.

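First, load the image and convert it to a tensor with tf.browser.fromPixels. The page contains an img element with the source image and a canvas element for the result.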

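```html
<img src="./sources/itechart.png" alt="Init image" id="target-image"/>

<canvas id="output-image-01"></canvas>

<script>
  const imgSource = document.getElementById('target-image');
  // Read the image as a tensor of shape [height, width, 1] (numChannels = 1: grayscale).
  // The image must already be loaded when this runs.
  const image = tf.browser.fromPixels(imgSource, 1);
</script>
```

Next, create a model with a single convolutional layer: one 3x3 filter, 'same' padding, and the 'relu' activation: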

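```javascript
const model = tf.sequential({
  layers: [
    tf.layers.conv2d({
      inputShape: image.shape, // [height, width, 1]
      filters: 1,              // a single filter
      kernelSize: 3,           // 3x3 filter
      padding: 'same',         // output keeps the input height and width
      activation: 'relu'
    })
  ]
});
```

The model expects input of shape [NUM_SAMPLES, HEIGHT, WIDTH, CHANNEL], while tf.browser.fromPixels returns a tensor of shape [HEIGHT, WIDTH, CHANNEL]. We therefore add a leading dimension for the number of samples (here, a single image):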

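```javascript
const input = image.reshape([1].concat(image.shape)); // [1, height, width, 1]
```

Now set the filter weights manually with the layer's setWeights method. The kernel has shape [kernelHeight, kernelWidth, inputChannels, filters] = [3, 3, 1, 1], and the second tensor is the bias: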

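```javascript
// model.layers[0] is the convolutional layer; setWeights takes [kernel, bias]
model.layers[0].setWeights([
  tf.tensor([
     1,  1,  1,
     0,  0,  0,
    -1, -1, -1
  ], [3, 3, 1, 1]),
  tf.tensor([0])
]);
```

Run the model, then rescale the result to the 0-255 range and remove the NUM_SAMPLES dimension: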

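```javascript
const output = model.predict(input);
const max = output.max().arraySync();
const min = output.min().arraySync();
// Min-max scaling to 0-255 and back to the [height, width, 1] shape
// (assumes the output is not constant, i.e. max > min)
const outputImage = output.reshape(image.shape)
  .sub(min)
  .div(max - min)
  .mul(255)
  .cast('int32');
```

Finally, draw the result on the canvas with tf.browser.toPixels: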

```javascript
tf.browser.toPixels(outputImage, document.getElementById('output-image-01'));
```
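To see what this filter does, the same pipeline can be reproduced in plain JavaScript on a tiny made-up grayscale image. The helpers below are hypothetical and only approximate the TensorFlow.js code: 'same' zero padding, ReLU, then min-max scaling to 0-255 and truncation to integers. The kernel subtracts the row below from the row above, so after ReLU only horizontal edges where the image goes from bright (top) to dark (bottom) remain.

```javascript
// Hypothetical helpers mirroring the TensorFlow.js example on a tiny grayscale image.
const kernel = [
  [1, 1, 1],
  [0, 0, 0],
  [-1, -1, -1],
];

// 3x3 convolution with 'same' zero padding (stride 1), followed by ReLU
function convSameRelu(image, k) {
  const h = image.length, w = image[0].length;
  const pixel = (i, j) => (i < 0 || j < 0 || i >= h || j >= w ? 0 : image[i][j]); // zero padding
  return image.map((row, i) =>
    row.map((_, j) => {
      let sum = 0;
      for (let a = -1; a <= 1; a++) {
        for (let b = -1; b <= 1; b++) {
          sum += pixel(i + a, j + b) * k[a + 1][b + 1];
        }
      }
      return Math.max(0, sum); // ReLU
    })
  );
}

// Min-max scaling to 0-255, truncated to integers (assumes max > min)
function toByteRange(values) {
  const flat = values.flat();
  const min = Math.min(...flat);
  const max = Math.max(...flat);
  return values.map((row) => row.map((v) => Math.trunc(((v - min) * 255) / (max - min))));
}

// Bright upper half, dark lower half: one horizontal edge in the middle
const picture = [
  [9, 9, 9, 9],
  [9, 9, 9, 9],
  [0, 0, 0, 0],
  [0, 0, 0, 0],
];
console.log(toByteRange(convSameRelu(picture, kernel)));
// [[0, 0, 0, 0], [170, 255, 255, 170], [170, 255, 255, 170], [0, 0, 0, 0]]
```

The smaller values in the first and last columns come from the zero padding: at the border, only two of the three pixels in each filter row belong to the image.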

2. Pooling layer

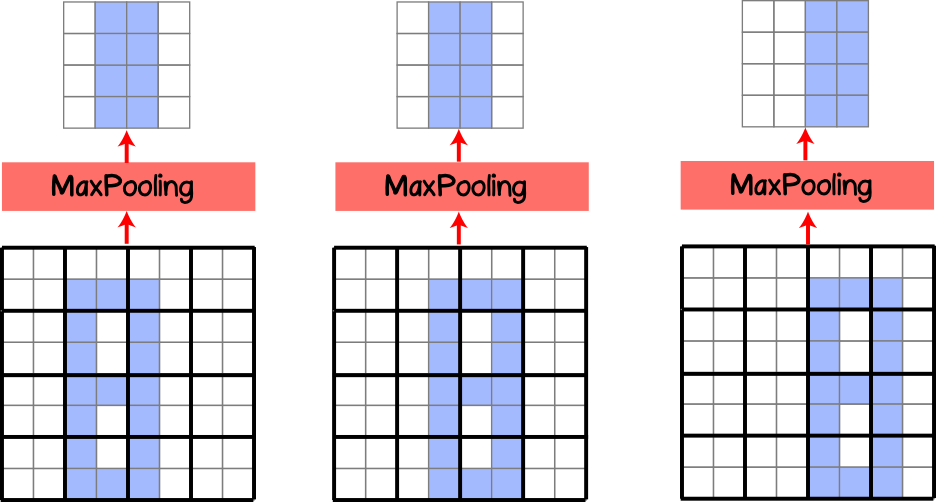

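After convolutional layers, the feature maps are still large (especially when there are many filters), which increases computation and memory use. To reduce their size, a pooling layer (also called a subsample layer) is used. The most common type is MaxPooling.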


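Like a convolutional layer, a pooling layer slides a window (kernel) over the input, but usually with a stride larger than 1x1. Instead of a weighted sum, MaxPooling takes the maximum value in each window (Figure 10).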

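For example, for a 4x4 input, a 2x2 window, and a stride of 2x2, the output is 2x2, and each output element is the maximum of its window.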
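The same example as a plain-JavaScript sketch, using a hypothetical `maxPool2d` helper with stride equal to the window size and no padding:

```javascript
// Max pooling with a square window of size `size` and stride equal to `size`
// (no padding; rows/columns that do not fill a whole window are dropped).
function maxPool2d(input, size) {
  const out = [];
  for (let i = 0; i + size <= input.length; i += size) {
    const row = [];
    for (let j = 0; j + size <= input[0].length; j += size) {
      let max = -Infinity;
      for (let a = 0; a < size; a++) {
        for (let b = 0; b < size; b++) max = Math.max(max, input[i + a][j + b]);
      }
      row.push(max);
    }
    out.push(row);
  }
  return out;
}

const input = [
  [1, 3, 2, 1],
  [4, 6, 5, 0],
  [7, 2, 9, 8],
  [1, 0, 3, 4],
];
console.log(maxPool2d(input, 2)); // [ [ 6, 5 ], [ 7, 9 ] ]
```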

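Small shifts of the input (Figure 11) often do not change the output of MaxPooling. This property is called translation invariance. With a larger shift the output changes, but part of it (50% in the example in Figure 11) can still stay the same. MaxPooling therefore makes the network less sensitive to small shifts of features in the image.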
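The following one-dimensional sketch illustrates this effect with a window of 2, a stride of 2, and made-up signals. Whether a shift is absorbed depends on where the feature falls relative to the window boundaries.

```javascript
// 1D max pooling with window 2 and stride 2
function maxPool1d(xs) {
  const out = [];
  for (let i = 0; i + 2 <= xs.length; i += 2) out.push(Math.max(xs[i], xs[i + 1]));
  return out;
}

const original = [5, 0, 0, 0, 3, 0, 0, 0];
const shiftedBy1 = [0, 5, 0, 0, 0, 3, 0, 0]; // every value moved one step to the right
const shiftedBy2 = [0, 0, 5, 0, 0, 0, 3, 0]; // moved two steps to the right

console.log(maxPool1d(original));   // [ 5, 0, 3, 0 ]
console.log(maxPool1d(shiftedBy1)); // [ 5, 0, 3, 0 ]  same output: the shift is absorbed
console.log(maxPool1d(shiftedBy2)); // [ 0, 5, 0, 3 ]  output shifted by one position
```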


The main parameters of a pooling layer are the window size and the stride.

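Besides MaxPooling, there is AveragePooling, which takes the average of the values in the window instead of the maximum. In practice MaxPooling is used more often. AveragePooling loses less information, but MaxPooling keeps only the strongest features, which usually gives the next layers a cleaner signal.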
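The following sketch compares the two reductions on the same input, using a hypothetical `pool2d` helper with a window and stride of 2. The single strong activation survives MaxPooling but is diluted by AveragePooling.

```javascript
// Pool a 2D input with a square window; stride equals the window size.
// mode: 'max' keeps the largest value, 'avg' takes the mean of the window.
function pool2d(input, size, mode) {
  const out = [];
  for (let i = 0; i + size <= input.length; i += size) {
    const row = [];
    for (let j = 0; j + size <= input[0].length; j += size) {
      const windowValues = [];
      for (let a = 0; a < size; a++) {
        for (let b = 0; b < size; b++) windowValues.push(input[i + a][j + b]);
      }
      row.push(
        mode === 'max'
          ? Math.max(...windowValues)
          : windowValues.reduce((sum, v) => sum + v, 0) / windowValues.length
      );
    }
    out.push(row);
  }
  return out;
}

// Left window: one strong activation; right window: uniformly weak activations
const featureMap = [
  [8, 0, 1, 1],
  [0, 0, 1, 1],
];
console.log(pool2d(featureMap, 2, 'max')); // [ [ 8, 1 ] ]
console.log(pool2d(featureMap, 2, 'avg')); // [ [ 2, 1 ] ]
```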

Pooling layer in TensorFlow.js

Pooling layers are created with tf.layers.maxPooling2d and tf.layers.averagePooling2d. Both take a configuration object whose main parameters are:

- poolSize - number | number[] - the window size; if a number is given, the window is square; if an array, its height and width may differ

- strides - number | number[] - the stride of the window; optional, defaults to poolSize

- padding - 'same' | 'valid' - the padding mode; optional, defaults to 'valid'
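The following plain-JavaScript sketch shows how these parameters determine the output size. It uses a hypothetical helper that assumes the same 'same'/'valid' rules as for convolution, with strides falling back to poolSize when omitted.

```javascript
// Output [height, width] of a 2D pooling layer. As in the parameter list above,
// strides default to poolSize and padding defaults to 'valid'.
function pool2dOutputSize([h, w], { poolSize, strides, padding = 'valid' }) {
  const pair = (v) => (Array.isArray(v) ? v : [v, v]);
  const [kh, kw] = pair(poolSize); // window height and width
  const [sh, sw] = strides === undefined ? [kh, kw] : pair(strides);
  const size = (n, k, s) => (padding === 'same' ? Math.ceil(n / s) : Math.ceil((n - k + 1) / s));
  return [size(h, kh, sh), size(w, kw, sw)];
}

console.log(pool2dOutputSize([4, 4], { poolSize: 2 }));                  // [ 2, 2 ]
console.log(pool2dOutputSize([5, 5], { poolSize: 2 }));                  // [ 2, 2 ]  last row and column dropped
console.log(pool2dOutputSize([5, 5], { poolSize: 2, padding: 'same' })); // [ 3, 3 ]
console.log(pool2dOutputSize([4, 4], { poolSize: 2, strides: 1 }));      // [ 3, 3 ]  overlapping windows
```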