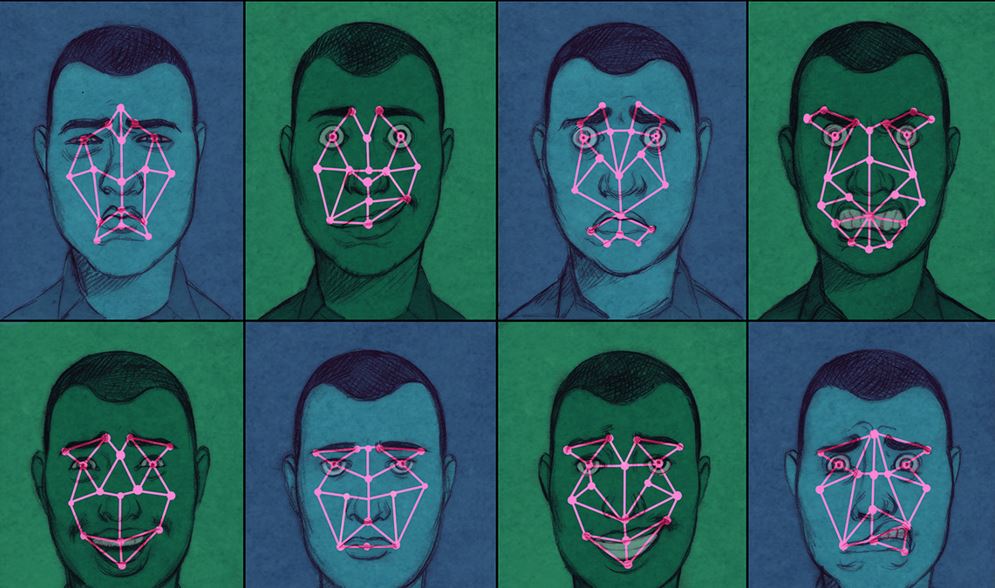

We estimate a person's age and gender solely from a photograph of their face. Accordingly, the task splits into two main stages:

- Detecting faces in the input image.

- Extracting the face region of interest (ROI) and passing it to the age and gender classifiers.
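The two stages can be sketched as a small skeleton. This is a minimal illustration, not the code used later in the article: `detect` and `classify` are hypothetical callables standing in for a face detector (such as MTCNN) and the age/gender networks, and the image is a plain list of pixel rows so the sketch runs without OpenCV.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height), the box layout MTCNN and OpenCV use


def crop_roi(image: Sequence[Sequence], box: Box) -> List[list]:
    """Stage 2a: cut the face region out of a row-major image, clamped to the image borders."""
    x, y, w, h = box
    img_h, img_w = len(image), len(image[0])
    x0, y0 = max(x, 0), max(y, 0)                 # detectors can return slightly negative corners
    x1, y1 = min(x + w, img_w), min(y + h, img_h)
    return [list(row[x0:x1]) for row in image[y0:y1]]


def run_pipeline(image: Sequence[Sequence], detect: Callable, classify: Callable) -> list:
    """Stage 1 finds face boxes; stage 2 crops each face and classifies it."""
    results = []
    for box in detect(image):                     # stage 1: face detection
        roi = crop_roi(image, box)                # stage 2: ROI extraction...
        if roi and roi[0]:                        # skip boxes lying entirely outside the image
            results.append((box, classify(roi)))  # ...and age/gender prediction
    return results
```

With MTCNN, `detect` would return the `box` field of each detection and `classify` would wrap the two Caffe networks; on a real image the crop would be a NumPy slice rather than a list of lists.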

For stage 1 there are many ready-made solutions, for example Haar cascades, HOG (Histogram of Oriented Gradients), and deep learning models. Let's look at the main distinguishing features of each:

- Haar cascades are very fast and can run in real time on embedded devices; the problem is that they are less accurate and highly prone to false positives.

- HOG-based detectors are more accurate than Haar cascades, but slower. They also tolerate occlusion poorly (i.e., when part of the face is hidden).

- Deep learning face detectors are the most robust and give the best accuracy, but they require even more computational resources than Haar cascades and HOG.
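To make the HOG idea concrete: the image is split into small cells, and each cell is described by a histogram of gradient directions weighted by gradient magnitude. The pure-Python sketch below computes that histogram for a single cell. It is a simplified illustration (unsigned gradients, no interpolation between bins, no block normalization, border pixels skipped), not what OpenCV or other libraries implement verbatim.

```python
import math
from typing import List, Sequence


def hog_cell_histogram(cell: Sequence[Sequence[float]], n_bins: int = 9) -> List[float]:
    """Magnitude-weighted histogram of gradient orientations for one cell.

    Simplified: unsigned orientations in [0, 180) degrees, central differences,
    hard binning, and only interior pixels (so both neighbours exist).
    """
    h, w = len(cell), len(cell[0])
    bin_width = 180.0 / n_bins
    hist = [0.0] * n_bins
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]   # horizontal gradient
            gy = cell[y + 1][x] - cell[y - 1][x]   # vertical gradient
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0  # fold into [0, 180)
            hist[int(angle // bin_width) % n_bins] += magnitude  # weighted vote
    return hist
```

An intensity ramp running left to right puts all its votes into the 0° bin, a top-to-bottom ramp into the 90° bin. A full HOG descriptor concatenates such histograms over a grid of cells, normalizes them over overlapping blocks, and feeds the result to a linear classifier (typically an SVM) that decides "face" or "not face".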

To choose a method suitable for our task, we compared two algorithms: Haar cascades and MTCNN (Multi-Task Cascaded Convolutional Neural Network). For the former, OpenCV already ships many pre-trained classifiers for faces, eyes, smiles, etc. (the XML files are stored in the opencv/data/haarcascades/ folder); for the latter, you need to install the library with the `pip install mtcnn` command.
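A Haar cascade slides a fixed-size window over the image and repeats the scan on an image pyramid, so faces of different sizes are found at different levels. In OpenCV's `detectMultiScale`, the `scaleFactor` argument (1.3 in the Haar test code) sets how much the image shrinks between levels. The pure-Python sketch below lists the face sizes effectively covered; the 24-pixel base window is an assumption matching the stock frontal-face cascade, and the function is an illustration, not OpenCV's actual implementation.

```python
from typing import List


def haar_window_sizes(image_w: int, image_h: int, base: int = 24,
                      scale_factor: float = 1.3) -> List[int]:
    """Face sizes (pixels) a cascade effectively scans for, one per pyramid level.

    Shrinking the image by scale_factor at each level is equivalent to growing
    the fixed base window by scale_factor, until the window no longer fits.
    """
    if scale_factor <= 1.0:
        raise ValueError("scale_factor must be greater than 1")
    sizes, factor = [], 1.0
    while base * factor <= min(image_w, image_h):
        sizes.append(round(base * factor))
        factor *= scale_factor
    return sizes
```

For a 100×100 image this gives windows of 24, 31, 41, 53, 69 and 89 pixels. A smaller `scaleFactor` such as 1.1 scans many more levels (slower, but fewer faces fall between sizes), which is part of the speed/accuracy trade-off described above.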

Importing the required libraries and loading a test image:

```python
import cv2
import matplotlib.pyplot as plt
from mtcnn import MTCNN

# OpenCV loads images as BGR; MTCNN and matplotlib expect RGB
image = cv2.cvtColor(cv2.imread('f.jpg'), cv2.COLOR_BGR2RGB)
```

Below is the code to test the MTCNN algorithm:

```python
detector = MTCNN()                      # create the MTCNN face detector
result = detector.detect_faces(image)   # list of dicts with 'box', 'confidence', 'keypoints'

mtcnn_image = image.copy()              # draw on a copy to keep the source image clean
for detection in result:
    x, y, w, h = detection['box']       # box is [x, y, width, height]
    cv2.rectangle(mtcnn_image, (x, y), (x + w, y + h), (0, 155, 255), 2)

plt.imshow(mtcnn_image)
plt.show()
```

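The same test with a Haar cascade:

```python
# Pre-trained frontal-face cascade (XML file) shipped with OpenCV
face_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')
gray = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)  # cascades work on grayscale input
# scaleFactor=1.3: each pyramid level is 1.3x smaller; minNeighbors=5: overlapping hits needed to keep a box
faces = face_cascade.detectMultiScale(gray, scaleFactor=1.3, minNeighbors=5)

haar_image = image.copy()
for (x, y, w, h) in faces:              # each detection is (x, y, width, height)
    cv2.rectangle(haar_image, (x, y), (x + w, y + h), (255, 0, 0), 2)
plt.imshow(haar_image)
plt.show()
```

Let's run a series of experiments to check the quality of the algorithms: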

- A single photo of one person

- Several people in the photo

- Several people in the photo who are not looking into the camera

- Several people in the photo with part of their faces hidden, for example by masks

We can conclude that under ideal conditions (a high-resolution photo with the face turned straight toward the camera) both algorithms work flawlessly, but at the slightest deviation from these conditions the Haar cascades begin to fail. We therefore chose the MTCNN model.
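With MTCNN chosen, one refinement worth considering: each detection it returns carries a `confidence` score next to its `box`. The sketch below discards weak or tiny detections before they reach the age and gender models. The 0.9 confidence threshold and 20-pixel minimum face size are assumed values to tune, not figures from these experiments.

```python
from typing import Dict, List


def filter_detections(detections: List[Dict], min_confidence: float = 0.9,
                      min_size: int = 20) -> List[Dict]:
    """Keep MTCNN-style detections that are confident and large enough to classify.

    Each detection is a dict with 'box' = [x, y, width, height] and a 'confidence'
    score, as returned by MTCNN.detect_faces. Both thresholds are illustrative
    assumptions.
    """
    kept = []
    for det in detections:
        _, _, w, h = det['box']
        if det['confidence'] >= min_confidence and min(w, h) >= min_size:
            kept.append(det)
    return kept
```

Very small faces carry little detail once they are resized to the classifier's input size, so filtering them out tends to remove unreliable predictions rather than useful ones.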

Now that we have chosen a method for detecting faces in a photo, let's move on to the task itself. To determine age and gender, we used two different models trained on large amounts of data. The approach goes back to Levi and Hassner, whose first paper on it, "Age and Gender Classification Using Convolutional Neural Networks", was published in 2015. In that work they demonstrated that deep convolutional neural networks (CNNs) can achieve significant performance gains on age and gender prediction tasks.

A model trained on the Adience dataset for age prediction can be downloaded from link 1 (*.caffemodel) and link 2 (*.prototxt). This model solves a classification problem, not a regression one: the age range is divided into fixed intervals ["(0-2)", "(4-6)", "(8-12)", "(15-20)", "(25-32)", "(38-43)", "(48-53)", "(60-100)"], each of which is a separate class that the model can predict.
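Because age is treated as classification, the model can only ever answer with one of these eight labels, and the intervals are not contiguous: ages such as 3, 13 or 35 fall between classes. The pure-Python sketch below (the helper names are hypothetical) maps an exact age to its class and makes those gaps visible.

```python
import re
from typing import List, Optional, Tuple

AGE_BUCKETS = ["(0-2)", "(4-6)", "(8-12)", "(15-20)",
               "(25-32)", "(38-43)", "(48-53)", "(60-100)"]


def parse_buckets(labels: List[str]) -> List[Tuple[int, int]]:
    """Turn labels such as "(25-32)" into integer (low, high) pairs."""
    pairs = []
    for label in labels:
        low, high = re.fullmatch(r"\((\d+)-(\d+)\)", label).groups()
        pairs.append((int(low), int(high)))
    return pairs


def bucket_for_age(age: int, labels: List[str] = AGE_BUCKETS) -> Optional[str]:
    """Return the class whose interval contains `age`, or None if it falls in a gap."""
    for label, (low, high) in zip(labels, parse_buckets(labels)):
        if low <= age <= high:
            return label
    return None
```

For example, `bucket_for_age(30)` returns `"(25-32)"`, while `bucket_for_age(35)` returns `None`: a 35-year-old can only be predicted as one of the neighbouring classes.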

To determine gender, we took a model from the repository for the article "Understanding and Comparing Deep Neural Networks for Age and Gender Classification". The model can be downloaded from link 1 (*.caffemodel) and link 2 (*.prototxt). It uses the GoogLeNet architecture and was pre-trained on the ImageNet dataset.

As you can see, each model consists of two files, which means it was trained with Caffe (a deep learning framework built with ease of use, speed, and modularity in mind). The .prototxt file describes the network architecture, and the .caffemodel file stores the model's trained weights.

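The core code is shown below in condensed form. The input to both models is the face region found by MTCNN in the previous step.

```python
import cv2
from mtcnn import MTCNN

AGE_BUCKETS = ["(0-2)", "(4-6)", "(8-12)", "(15-20)",
               "(25-32)", "(38-43)", "(48-53)", "(60-100)"]
gender_list = ['Male', 'Female']

# Placeholder file names: substitute the .prototxt/.caffemodel pairs downloaded above
ageNet = cv2.dnn.readNetFromCaffe('age_deploy.prototxt', 'age_net.caffemodel')
genderNet = cv2.dnn.readNetFromCaffe('gender_deploy.prototxt', 'gender_net.caffemodel')

image = cv2.cvtColor(cv2.imread('f.jpg'), cv2.COLOR_BGR2RGB)  # MTCNN expects RGB
annotated = image.copy()  # draw here so later crops are not polluted by earlier boxes
for detection in MTCNN().detect_faces(image):
    x1, y1, width, height = detection['box']
    # Face ROI; MTCNN boxes can start at slightly negative coordinates
    face = image[max(y1, 0):y1 + height, max(x1, 0):x1 + width]

    # Resize to 227x227 and subtract per-channel means; swapRB=True turns the
    # RGB crop back into the BGR order the Caffe models were trained on
    faceBlob = cv2.dnn.blobFromImage(face, 1.0, (227, 227), (78.4, 87.7, 114.8), swapRB=True)

    ageNet.setInput(faceBlob)
    age_preds = ageNet.forward()             # one probability per age bucket
    age_i = age_preds[0].argmax()
    age_text = "{}: {:.2f}%".format(AGE_BUCKETS[age_i], age_preds[0][age_i] * 100)

    genderNet.setInput(faceBlob)
    gender_preds = genderNet.forward()       # one probability per gender
    gender_i = gender_preds[0].argmax()
    gender_text = "{}: {:.2f}%".format(gender_list[gender_i], gender_preds[0][gender_i] * 100)

    display_text = "{}, {}".format(gender_text, age_text)
    y = y1 - 10 if y1 - 10 > 10 else y1 + 10  # put the label above the box unless it would leave the image
    cv2.rectangle(annotated, (x1, y1), (x1 + width, y1 + height), (0, 155, 255), 2)
    cv2.putText(annotated, display_text, (x1, y), cv2.FONT_HERSHEY_SIMPLEX, 0.45, (0, 0, 255), 2)
```

The results obtained during the development of the algorithm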

As you can see, the model does not always produce the correct result: it sometimes labels photographs of women as male. This may be due to an unbalanced dataset used to train the model. If you have the necessary computing resources, you can try training the model yourself; it takes time, but with a balanced dataset, a well-chosen network architecture, and properly tuned training parameters, accuracy may improve.
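Before retraining, it helps to check how skewed the labels actually are. One common remedy, shown here as a hedged sketch rather than anything the original models used, is to weight each class's loss by its inverse frequency (the same "balanced" formula scikit-learn uses). The label list in the example is invented for illustration; in practice it would come from your own dataset.

```python
from collections import Counter
from typing import Dict, Iterable


def inverse_frequency_weights(labels: Iterable[str]) -> Dict[str, float]:
    """Per-class loss weights n_samples / (n_classes * count), so rarer classes weigh more.

    With perfectly balanced data every weight equals 1.0.
    """
    counts = Counter(labels)
    total, n_classes = sum(counts.values()), len(counts)
    return {cls: total / (n_classes * cnt) for cls, cnt in counts.items()}
```

For a hypothetical training set of 300 male and 100 female faces this yields weights of about 0.67 for `Male` and 2.0 for `Female`, so each misclassified female face costs three times as much as a male one. The resulting dictionary would be passed to the loss function of whichever training framework you use.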