At first I wanted to write a comment on the article "I suffered from terrible architectures in C# for ten years...", but I realized two things:

- There are too many thoughts to share.

- For a text this long, the comment format is inconvenient both to write and to read.

- I have been reading Habr for a long time and sometimes comment, but I have never written an article.

- I'm not good at numbered lists.

Disclaimer: I am not criticizing @pnovikov or his idea in general. The text is of high quality (I feel like an experienced editor saying that); I'm just sharing some of my thoughts. There are many architectures, and that's okay (yes, it sounds like the title of a Korean movie).

But let's take things in order. First, my view of what shapes an architecture; then the controversial points in the article about "fixing architectures". I will also describe what works well for us; maybe it will be useful to someone.

And, of course, everything said here is my personal opinion, based on my experience and my cognitive biases.

About my opinion

I often make architectural decisions, sometimes big, sometimes small. Occasionally I design an architecture from scratch. Well, "from scratch": surely everything has been invented before us, but we don't know about all of it, so we have to invent. And not out of love for reinventing the wheel (let's say, not only out of love for it), but because for some tasks there was no ready-made solution that met all the requirements.

Why do I believe that completely different architectures have the right to exist? One could argue that programming is an art, not a craft, but I won't. My view: sometimes it's an art, sometimes a craft. That's not the point. The main thing is that the tasks differ. And so do the people. To clarify: by tasks I mean business requirements.

If my tasks ever become all the same, I will write (or ask someone to write) a neural network, or maybe just a script, to replace me, and go do something less dreary myself. Until my (and, I hope, your) personal apocalypse arrives, let's think about how tasks and other conditions affect the variety of architectures. TL;DR: in many different ways.

Performance versus scalability

This is perhaps the most legitimate reason to change an architecture, unless, of course, it is easier to adapt the old architecture to the new requirements. But it is hard to say anything useful about this briefly.

Timing

Say the deadlines (I checked the spelling twice) are very tight. Then there is no time to choose an architecture, let alone invent one: take familiar tools and start digging. But there is a nuance: sometimes a complex project can be delivered on time only by applying (and perhaps inventing) something fundamentally new. Someone might say that inviting the customer to a bathhouse is an old trick too, but right now I'm talking about architecture...

When the deadlines are comfortable, a paradoxical situation often arises: it seems you could come up with something new, but why bother? True, many successfully give in to the temptation to take on another, more urgent project, which brings the situation back to the previous case.

In my practice, deadlines rarely lead to revolutions in architecture, but it happens. And that's great.

Development speed and quality

It goes like this: the team (or someone from management) notices that development has slowed down, or that a lot of bugs have piled up in an iteration. The "wrong architecture" often gets the blame. Sometimes deservedly. More often it is simply the most convenient culprit (especially if the architecture's "parent" is no longer on the team).

In some cases it all comes down to the time factor; in others, to maintainability, which is the next topic.

Maintainability

An ambiguous topic, because it is very subjective and depends on many things: the team, the programming language, the company's processes, the number of customizations for different clients. Let's talk about the last factor; it seems the most interesting to me.

Say you have delivered a custom project: successfully, on time and on budget, and the customer is happy with everything. I've had that too. Now you look at what you used and think: here it is, a gold mine! We'll reuse all these components, quickly build a B2B product, and... At first everything is fine. The product is built and sold a couple of times. More salespeople and developers are hired ("more gold needed"). Customers are satisfied, they pay for support, new sales come in...

And then one of the customers suddenly speaks up: "I would have done this thing completely differently; how much would that cost?" Well, no big deal: stick in a few little ifs with customer-specific code (let's say there was no time to wire up DI). What could possibly go wrong?

And the first time, nothing bad really happens. I wouldn't even advise building anything special in that situation: premature complication of architecture is akin to premature optimization. But when it happens a second and a third time, it is a reason to remember things like DI, the Strategy pattern, feature toggles and the like. And, for a while, they will help.
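Here is a minimal sketch of the difference. It is in Python for brevity; the discussion is about C#, but the idea carries over. It assumes a hypothetical per-customer discount rule, and the names `OrderService`, `STRATEGIES` and the config key `discount` are illustrative only. Each customer variant becomes a strategy. A configuration toggle selects it, and the constructor injects it. The business code no longer needs one more `if` per customer.

```python
from typing import Callable, Dict

# Hypothetical per-customer variants of one feature (illustration only).
# A strategy is a plain function: order total -> final total.
DiscountStrategy = Callable[[float], float]

def no_discount(total: float) -> float:
    return total

def flat_ten_percent(total: float) -> float:
    return round(total * 0.9, 2)

def bulk_discount(total: float) -> float:
    # 15% off, but only for large orders
    return round(total * 0.85, 2) if total >= 1000 else total

# Registry: the configured name selects the strategy,
# instead of an if-branch per customer inside the business code.
STRATEGIES: Dict[str, DiscountStrategy] = {
    "default": no_discount,
    "flat10": flat_ten_percent,
    "bulk": bulk_discount,
}

class OrderService:
    """Business code depends only on the strategy it is given."""

    def __init__(self, discount: DiscountStrategy) -> None:
        self._discount = discount

    def final_total(self, total: float) -> float:
        return self._discount(total)

def build_order_service(customer_config: Dict[str, str]) -> OrderService:
    # Feature toggle read from the customer's configuration;
    # unknown or missing values fall back to the default behavior.
    name = customer_config.get("discount", "default")
    return OrderService(STRATEGIES.get(name, no_discount))
```

With this layout, a fourth customer variant means one new function and one registry entry. The cost is the one described next: every toggle adds another dimension to test.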

And then the day comes when you look at the project settings (just a few hundred options) for a specific test environment... You remember how to count combinations and think: how on earth is this supposed to be tested? Of course, in an ideal world it is simple: each feature is designed and implemented so that it doesn't affect the others in any way, and where it does, all of that has been anticipated; and in general, our developers never make mistakes.
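This back-of-the-envelope sketch puts a number on "how is this supposed to be tested?". It makes one simplifying assumption: every setting is an independent on/off flag. Real settings often take more than two values, which only makes the numbers worse.

```python
from math import comb

def configuration_count(n_flags: int) -> int:
    """Distinct configurations of n independent on/off options: 2**n."""
    return 2 ** n_flags

def option_pairs(n_flags: int) -> int:
    """Pairs of options that could, in principle, interact: n choose 2."""
    return comb(n_flags, 2)

for n in (10, 20, 300):
    total = configuration_count(n)
    print(f"{n} flags: {option_pairs(n)} pairs, "
          f"a {len(str(total))}-digit number of configurations")
```

With 300 flags there are roughly 2 × 10^90 configurations. Even the 44,850 pairs are far more than anyone can check by hand. That is why, in practice, testing focuses on the feature sets real customers actually use.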

Of course, I'm exaggerating: you can single out the feature sets that real customers actually use, write more tests (how and which ones is a topic for another conversation) and simplify the task a little. But think about it: every major release has to be tested for every customer. Let me remind you that this is not B2C, where you can say "roll out the feature to 5% of users and collect feedback"; in B2B, the feedback may start arriving through the courts...

Solutions? For example, split the product into modules with separate life cycles (without forgetting to test how they interact). This reduces the complexity of maintenance, although it complicates development. And I'm not talking about that fertile holy-war topic "monolith vs. microservices": something similar can be arranged in a monolith too (although, in my opinion, it is harder).

And, mind you, from a pragmatic point of view, at every stage, we had a good architecture.

And what is all this for?

I don't want to tire you (or myself) by listing other reasons for architectural change. Let's just agree that architectures tend to change over time, depending on many factors. This means there is no ideal architecture that solves "absolutely all the problems".

If I haven't convinced you yet, look at the variety of programming languages and frameworks (just not in the frontend; let's not open that topic). If someone says this is bad, I suggest a thought experiment: imagine a world with exactly one programming language. With one important condition: you don't like it. For example, because you have never used it and never intended to.

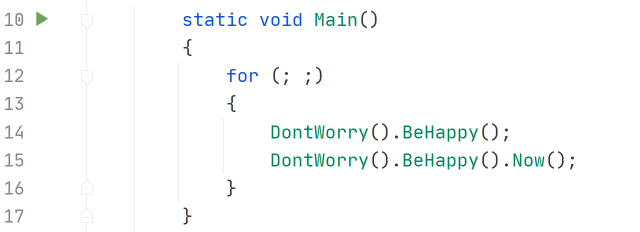

And, I admit, there is another good reason: coming up with something new, optimizing a few parameters, playing with trade-offs is damn fun. Now that we all (right?) agree that architectural diversity is okay...

Discussion of the article about "fixing architectures"

What about IoC?

About IoC: I agree that "footcloths" (Russian slang for endless walls of configuration) belong in the army, and that modules are a universal good. But as for the rest...

Of course, if you listen to some "clean code" apologists, you can churn out a mountain of services with, on average, one and a half methods each and two and a half lines per method. But why? Honestly, do you really want to follow principles that will help you cope with unlikely problems in the distant future at the cost of smearing even simple logic across dozens of files? Or is it enough for you to write decent, working code now?

By the way, I'm currently working on a module that will definitely be used in different products and will most likely be heavily customized. Even there I try not to split things too finely: it doesn't pay off. Although in this module I use interfaces with a single implementation more often than usual.

So, if we have modules and don't split things too finely, where would IoC performance problems or unmaintainable "footcloths" of IoC configuration come from? I haven't run into them.

However, let me clarify our working conditions:

- Our modules are not the kind that "almost any IoC framework provides", but "real" modules that communicate with each other remotely via an API (sometimes, for performance reasons, several of them can be hosted in one process, but the interaction scheme stays the same).

- IoC is used as simply as possible: dependencies are passed as constructor parameters.

- Yes, we now have a microservice architecture, but here too we try not to make the services too small.
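This is a minimal sketch of "IoC as simply as possible". Everything in it is hypothetical: the module contract `UserDirectory`, both implementations and the URL. The dependency is just a constructor parameter. A single composition function decides whether the other module is reached remotely or hosted in-process, and the calling code does not change.

```python
from typing import Dict, Protocol

class UserDirectory(Protocol):
    """Contract of another module, as seen by its consumers."""
    def display_name(self, user_id: int) -> str: ...

class HttpUserDirectory:
    """Hypothetical remote client; the actual HTTP call is omitted in this sketch."""
    def __init__(self, base_url: str) -> None:
        self.base_url = base_url

    def display_name(self, user_id: int) -> str:
        raise NotImplementedError(f"would call GET {self.base_url}/users/{user_id}")

class InProcessUserDirectory:
    """Same contract, hosted in the same process (e.g. for performance)."""
    def __init__(self, names: Dict[int, str]) -> None:
        self._names = names

    def display_name(self, user_id: int) -> str:
        return self._names.get(user_id, f"user#{user_id}")

class GreetingService:
    # The whole IoC "mechanism": the dependency arrives as a constructor parameter.
    def __init__(self, users: UserDirectory) -> None:
        self._users = users

    def greet(self, user_id: int) -> str:
        return f"Hello, {self._users.display_name(user_id)}!"

def compose(in_process: bool) -> GreetingService:
    # Composition root: the only place that knows which implementation is used.
    users: UserDirectory = (
        InProcessUserDirectory({1: "Alice"})
        if in_process
        else HttpUserDirectory("https://users.example.internal")
    )
    return GreetingService(users)
```

No container or configuration file is involved here. Wiring is ordinary code, so there is nothing to grow into a "footcloth".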

Tip: an interface can live in the same file as its class. It's convenient (provided you use a proper IDE rather than Notepad). I make an exception when the interfaces (or their comments) grow large. But this is all a matter of taste, of course.

What's wrong with ORMs, and why access the database directly?

I'll tell you myself what's wrong: many of them are too far removed from SQL. But not all of them. So instead of "putting up with it while the O/RM deletes 3000 objects" or inventing yet another one, find one that suits you.

Tip: try LINQ to DB. It is well balanced and has Update/Delete methods that affect multiple rows at once. Careful, though: it's addictive. Yes, it lacks some EF features and has a slightly different concept, but I liked it much more than EF.

By the way, it's nice that it was developed by our compatriots. Respect to Igor Tkachev (I couldn't find him on Habr).

UPD: RouR noted in the comments that there is an extension for EF Core that enables bulk operations. I won't give up LINQ to DB anyway, because it's good.
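LINQ to DB is a .NET library, so this sketch does not use its API. It uses plain SQL through Python's built-in `sqlite3` to show the difference behind the "O/RM deletes 3000 objects" complaint. The first function deletes entity by entity; the second does a set-based bulk delete. The `orders` table and its data are invented for the example.

```python
import sqlite3

def make_db(n_rows: int) -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT NOT NULL)")
    conn.executemany(
        "INSERT INTO orders (id, status) VALUES (?, ?)",
        [(i, "cancelled" if i % 2 else "active") for i in range(n_rows)],
    )
    conn.commit()
    return conn

def delete_row_by_row(conn: sqlite3.Connection) -> int:
    # Entity-by-entity style: load the keys, then one DELETE per entity.
    ids = [row[0] for row in conn.execute(
        "SELECT id FROM orders WHERE status = ?", ("cancelled",))]
    for order_id in ids:
        conn.execute("DELETE FROM orders WHERE id = ?", (order_id,))
    conn.commit()
    return len(ids)

def delete_set_based(conn: sqlite3.Connection) -> int:
    # Bulk style: a single statement, no entities loaded into the application.
    cur = conn.execute("DELETE FROM orders WHERE status = ?", ("cancelled",))
    conn.commit()
    return cur.rowcount
```

With 6000 rows, both functions remove the same 3000 rows. The first sends 3001 statements to the database (one SELECT plus 3000 DELETEs); the second sends one.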

What's wrong with the database tests?

Yes, they are slower than tests on in-memory data. Is that fatal? Of course not. How do you solve the problem? Here are two recipes, best used together.

Recipe number 1. Take a cool developer who loves building all sorts of cool things and discuss with him how to solve this problem elegantly. I was lucky: force solved the problem faster than it appeared (I don't even remember whether we discussed it). How? In a day or so, he built a test factory for the ORM that replaces the main subset of operations with operations on in-memory arrays.

Perfect for simple unit tests. An alternative is to use SQLite or something similar instead of "big" databases.
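Both ideas fit in one minimal sketch. It assumes a hypothetical `UserStore` contract and is not the actual test factory mentioned above, whose API the article does not describe. One implementation is backed by a plain list for fast unit tests, the other by in-memory SQLite, and the same scenario runs against both.

```python
import sqlite3
from dataclasses import dataclass
from typing import List, Protocol

@dataclass(frozen=True)
class User:
    id: int
    name: str

class UserStore(Protocol):
    def add(self, user: User) -> None: ...
    def find_by_prefix(self, prefix: str) -> List[User]: ...

class InMemoryUserStore:
    """Test double: the main subset of operations backed by a plain list."""
    def __init__(self) -> None:
        self._rows: List[User] = []

    def add(self, user: User) -> None:
        self._rows.append(user)

    def find_by_prefix(self, prefix: str) -> List[User]:
        matches = (u for u in self._rows if u.name.startswith(prefix))
        return sorted(matches, key=lambda u: u.id)

class SqliteUserStore:
    """Same contract on an in-memory SQLite database."""
    def __init__(self) -> None:
        self._conn = sqlite3.connect(":memory:")
        self._conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")

    def add(self, user: User) -> None:
        self._conn.execute("INSERT INTO users (id, name) VALUES (?, ?)", (user.id, user.name))

    def find_by_prefix(self, prefix: str) -> List[User]:
        # Case-sensitive prefix match, to mirror str.startswith exactly.
        rows = self._conn.execute(
            "SELECT id, name FROM users WHERE substr(name, 1, ?) = ? ORDER BY id",
            (len(prefix), prefix),
        )
        return [User(*row) for row in rows]

def business_scenario(store: UserStore) -> List[str]:
    store.add(User(1, "Anna"))
    store.add(User(2, "Boris"))
    store.add(User(3, "Andrey"))
    return [u.name for u in store.find_by_prefix("An")]
```

The SQLite version deliberately avoids `LIKE`. In SQLite, `LIKE` is case-insensitive for ASCII letters by default, so `LIKE 'An%'` would also match "anton", while `str.startswith` would not. Small semantic gaps like this between a fake and a real engine are one argument for the second recipe.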

Comment by force: . -, , ORM, , SQL . -, , , , , .. . .

Recipe number 2. I prefer to test business scenarios against real databases. And if the project claims to support multiple DBMSs, the tests run against several of them. Why? It's simple. The statement "I don't want to test the database server" is, alas, a substitution of concepts. I am not, you know, testing whether JOIN or ORDER BY works.

I am testing my code that works with the database. And since even different versions of the same DBMS can return different results for the same queries (proof), I want to check the main scenarios on the databases this code will actually run against.

My tests of this kind usually look like this:

- For each group of tests (fixture), the database is generated from scratch from the database metadata. If needed, the required reference tables are filled in.

- Each scenario adds the data it needs as it runs (just as users do). Performance tests are different, but that's a completely different story...

- After each test, the extra data (everything except the reference tables) is deleted.
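This minimal sketch shows that lifecycle with Python's `unittest`. It uses an in-memory SQLite database as a stand-in; the article's point is to run such tests against the real DBMSs. The schema, table names and data are hypothetical. `setUpClass` builds the schema and fills the reference tables once per fixture. Each test inserts its own data, and `tearDown` deletes everything except reference data.

```python
import sqlite3
import unittest

# Hypothetical schema metadata: table name -> DDL, in dependency order.
# A real project would generate this from its own model definitions.
SCHEMA = {
    "countries": "CREATE TABLE countries (code TEXT PRIMARY KEY, name TEXT NOT NULL)",
    "customers": (
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL, "
        "country TEXT NOT NULL REFERENCES countries(code))"
    ),
}
# Reference tables: filled once per fixture and never cleaned up.
REFERENCE_DATA = {"countries": [("DE", "Germany"), ("FR", "France")]}

class CustomerScenarios(unittest.TestCase):
    @classmethod
    def setUpClass(cls) -> None:
        # Once per fixture: create the database from scratch from the metadata.
        cls.conn = sqlite3.connect(":memory:")
        cls.conn.execute("PRAGMA foreign_keys = ON")
        for ddl in SCHEMA.values():
            cls.conn.execute(ddl)
        for table, rows in REFERENCE_DATA.items():
            placeholders = ", ".join("?" * len(rows[0]))
            cls.conn.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)
        cls.conn.commit()

    @classmethod
    def tearDownClass(cls) -> None:
        cls.conn.close()

    def tearDown(self) -> None:
        # After each test: delete everything except reference data,
        # child tables first so foreign keys are not violated.
        for table in reversed(list(SCHEMA)):
            if table not in REFERENCE_DATA:
                self.conn.execute(f"DELETE FROM {table}")
        self.conn.commit()

    def add_customer(self, name: str, country: str) -> None:
        # Each scenario inserts the data it needs, the way a user would.
        self.conn.execute(
            "INSERT INTO customers (name, country) VALUES (?, ?)", (name, country)
        )

    def count(self, table: str) -> int:
        return self.conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]

    def test_register_customer(self) -> None:
        self.add_customer("ACME", "DE")
        self.assertEqual(self.count("customers"), 1)

    def test_register_two_customers(self) -> None:
        # unittest runs test methods in name order, so this runs after
        # test_register_customer; without tearDown it would see 3 rows.
        self.add_customer("Globex", "FR")
        self.add_customer("Initech", "DE")
        self.assertEqual(self.count("customers"), 2)

    def test_unknown_country_rejected(self) -> None:
        with self.assertRaises(sqlite3.IntegrityError):
            self.add_customer("Nowhere Ltd", "ZZ")
        # Reference data survives the cleanup between tests.
        self.assertEqual(self.count("countries"), 2)
```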

Tip: if such tests genuinely take a long time (and not because it's time to optimize your database queries), set up a build that runs them less often (test categories or a separate project will help). Otherwise, developers won't want to run even the rest of the tests, the quick ones, themselves.
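One way to express such a test category in Python's `unittest` is a skip condition driven by an environment variable. The variable name `RUN_DB_TESTS` and the test classes are hypothetical. The quick build leaves the variable unset, and a separate, less frequent build sets it. With pytest, the same idea is usually expressed with custom markers and `-m` selection.

```python
import os
import unittest

# Hypothetical switch: the quick build leaves it unset; a separate (e.g. nightly)
# build sets RUN_DB_TESTS=1 so the slow database tests run there.
RUN_DB_TESTS = os.environ.get("RUN_DB_TESTS") == "1"

def database_test(test_item):
    """Puts a test method or class into the 'slow database tests' category."""
    reason = "database tests run in the slow build"
    return unittest.skipUnless(RUN_DB_TESTS, reason)(test_item)

class PricingTests(unittest.TestCase):
    def test_total(self) -> None:
        # Fast test: pure logic, always runs.
        self.assertEqual(sum([10, 20, 12]), 42)

@database_test
class OrderRepositoryTests(unittest.TestCase):
    def test_round_trip(self) -> None:
        # In the slow build this would talk to a real DBMS; trivial here.
        self.assertTrue(RUN_DB_TESTS)
```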

Transaction and email

I'll just add to the story "the database transaction failed for some reason, but the e-mail had already gone out". Imagine the fun when a transaction waits on an unavailable mail server and brings the entire system down because of some notification that the user then sends to the trash without reading...

True, I always believed that only juniors
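One common way out is the transactional outbox pattern. The article does not say which approach the author's team uses. The notification is written to the database in the same transaction as the business data and sent later, outside the transaction. A minimal sketch with Python's `sqlite3` follows; the tables and the `send` callback are hypothetical.

```python
import sqlite3
from typing import Callable

def open_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL NOT NULL);
        CREATE TABLE outbox (id INTEGER PRIMARY KEY, recipient TEXT NOT NULL,
                             body TEXT NOT NULL, sent INTEGER NOT NULL DEFAULT 0);
    """)
    return conn

def place_order(conn: sqlite3.Connection, total: float, email: str) -> None:
    # The order and its notification commit (or roll back) together,
    # and no network call happens while the transaction is open.
    with conn:
        cur = conn.execute("INSERT INTO orders (total) VALUES (?)", (total,))
        conn.execute("INSERT INTO outbox (recipient, body) VALUES (?, ?)",
                     (email, f"Order {cur.lastrowid} accepted"))

def dispatch_outbox(conn: sqlite3.Connection,
                    send: Callable[[str, str], None]) -> int:
    """Runs after commit (e.g. in a background job); returns messages delivered."""
    pending = conn.execute(
        "SELECT id, recipient, body FROM outbox WHERE sent = 0 ORDER BY id").fetchall()
    delivered = 0
    for msg_id, recipient, body in pending:
        try:
            send(recipient, body)
        except OSError:
            continue  # mail server down: keep the message pending, block nothing
        with conn:
            conn.execute("UPDATE outbox SET sent = 1 WHERE id = ?", (msg_id,))
        delivered += 1
    return delivered
```

If the mail server is down, the order is still saved and the message waits for the next dispatch run. If the order transaction fails, no message is ever queued.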

Outcome

In general, if @pnovikov has no plans to conquer the world with the help of the only true

I'm unlikely to use the proposed framework. The reason is simple: we already have the ideal architecture...

P.S. If you'd like to discuss anything in the comments, I'll be glad to take part.