Hosting large numbers of users securely on a single Kubernetes cluster has always been difficult. The main reason is that every organization uses Kubernetes differently, so a single multi-tenancy model is unlikely to suit everyone. Instead, Kubernetes offers components for building your own solution, such as Role-Based Access Control (RBAC) and NetworkPolicies; the better these components are, the easier it is to build a secure multi-tenant cluster.

Namespaces to the rescue

By far the most important of these components is the namespace. Namespaces are the basis for almost all control plane security and sharing policies in Kubernetes. For example, RBAC, NetworkPolicies, and ResourceQuotas all respect namespaces by default, while objects such as Secrets, ServiceAccounts, and Ingresses are freely usable within one namespace but completely isolated from other namespaces.

Namespaces have two key properties that make them ideal for policy enforcement. First, they can be used to represent ownership. Most Kubernetes objects must belong to a namespace, so if you use namespaces to represent ownership, you can always count on those objects having an owner.

Second, only users with the appropriate rights can create and use namespaces. Creating a namespace requires elevated privileges, and other users need explicit permission to work with it, that is, to create, view, or modify objects in it. This allows a namespace to be set up with a carefully designed set of policies before unprivileged users can create "regular" objects such as pods and services in it.

The limits of namespaces

Unfortunately, in practice, namespaces are not flexible enough and fit poorly with some common use cases. For example, suppose a team owns several microservices with different secrets and quotas. Ideally, the team would put these services into separate namespaces to isolate them from each other, but this presents two problems.

First, these namespaces have no common concept of ownership, even though they are all owned by the same team. Not only does Kubernetes have no record that these namespaces share an owner, it also has no way to apply a policy to all of the team's namespaces at once.

Second, autonomy is key to effective teamwork. Since creating namespaces requires elevated privileges, it is unlikely that anyone on the development team will be able to do it. In other words, whenever the team wants a new namespace, it has to ask the cluster administrator. This may be perfectly acceptable in a small company, but the overhead becomes more pronounced as the organization grows.

Introducing Hierarchical Namespaces

Hierarchical namespaces are a new concept developed by the Kubernetes Working Group for Multi-Tenancy (wg-multitenancy) to address these issues. In simplified form, a hierarchical namespace is a regular Kubernetes namespace that contains a small custom resource identifying a single, optional parent namespace. This extends the concept of ownership to the namespaces themselves, not just the objects within them.

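This concept of ownership also enables two additional types of behavior:

- Policy inheritance: if one namespace is a child of another, policy objects such as RBAC RoleBindings are copied from the parent to the child.

- Delegated creation: creating a namespace normally requires cluster-level privileges, but hierarchical namespaces add an alternative: subnamespaces, which can be managed with only limited permissions in the parent namespace.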

This solves both problems for our dev team. The cluster administrator can create a single "root" namespace for the team, along with the necessary policies, and then delegate permission to create subnamespaces to members of that team. Developers can then create subnamespaces for their own use without violating the policies set by the cluster administrators.

A little practice

Hierarchical namespaces are implemented by a Kubernetes extension called the Hierarchical Namespace Controller, or HNC. HNC consists of two components:

- The manager, which runs on the cluster, manages subnamespaces, propagates policy objects, ensures that hierarchies are valid, and manages extension points.

- A kubectl plugin called kubectl-hns, which allows users to interact with the manager.

The component installation guide can be found on the releases page in the project repository.

Let's take a look at how HNC works. Suppose I have no privileges to create namespaces, but I can view the namespace team-a and create subnamespaces in it*. The plugin lets me run the following command:

$ kubectl hns create svc1-team-a -n team-a

* Technically, you create a small object called a "subnamespace anchor" in the parent namespace, and HNC then creates the subnamespace.

This creates a namespace called svc1-team-a. Note that subnamespaces are no different from regular Kubernetes namespaces, so their names must still be unique.

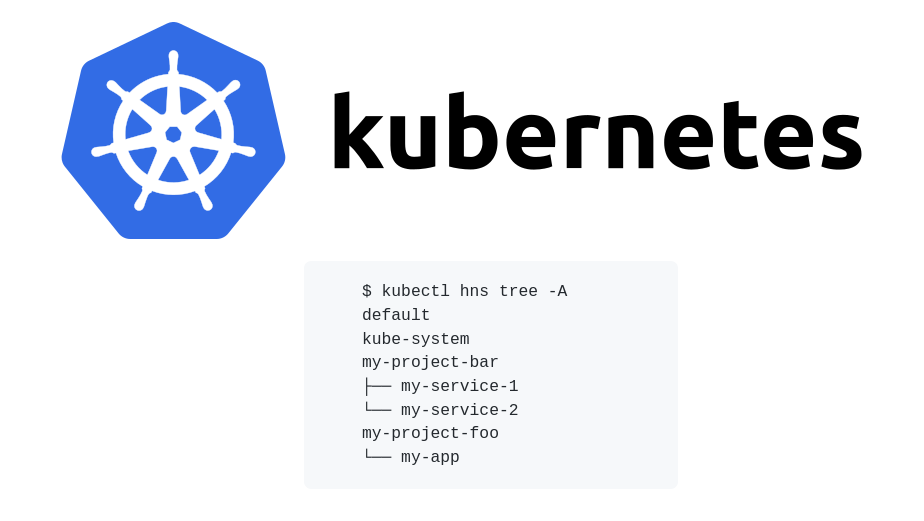
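The rules described above (permission is needed only in the parent, and names must remain unique across the cluster) can be sketched as a toy in-memory model. This is only an illustration, not HNC's implementation: the `Cluster` class, its methods, and the user name `dev` are all hypothetical.

```python
class Cluster:
    """Toy model: every namespace has at most one parent (hypothetical, not HNC code)."""

    def __init__(self):
        self.parents = {}        # namespace name -> parent name, or None for a root
        self.can_create_in = {}  # user -> namespaces where the user may create subnamespaces

    def create_root(self, name):
        # Stands in for a cluster administrator creating a regular namespace.
        if name in self.parents:
            raise ValueError(f"namespace {name!r} already exists")
        self.parents[name] = None

    def grant(self, user, namespace):
        # Delegate the right to create subnamespaces under `namespace`.
        self.can_create_in.setdefault(user, set()).add(namespace)

    def create_subnamespace(self, user, parent, name):
        if parent not in self.parents:
            raise KeyError(f"parent namespace {parent!r} does not exist")
        if parent not in self.can_create_in.get(user, set()):
            raise PermissionError(f"{user!r} may not create subnamespaces in {parent!r}")
        # Subnamespaces are ordinary namespaces, so names are unique cluster-wide.
        if name in self.parents:
            raise ValueError(f"namespace {name!r} already exists")
        self.parents[name] = parent


cluster = Cluster()
cluster.create_root("team-a")
cluster.grant("dev", "team-a")
cluster.create_subnamespace("dev", "team-a", "svc1-team-a")
print(cluster.parents["svc1-team-a"])  # team-a
```

In this sketch, a second attempt to create svc1-team-a fails with `ValueError`, even under a different parent, which mirrors the uniqueness requirement.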

You can view the resulting structure using the tree command:

$ kubectl hns tree team-a
# Output:
team-a
└── svc1-team-a
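To make the shape of this output concrete, here is a minimal sketch that renders a similar tree from a parent-to-children mapping. It is not how the plugin is implemented; `render_tree` and the extra namespaces svc2-team-a and db-svc1-team-a are hypothetical.

```python
def render_tree(root, children):
    """Return the lines of a tree view for `root`.

    `children` maps a namespace name to a list of its direct subnamespaces.
    """
    lines = [root]

    def walk(node, prefix):
        kids = sorted(children.get(node, []))
        for i, kid in enumerate(kids):
            last = i == len(kids) - 1
            lines.append(prefix + ("└── " if last else "├── ") + kid)
            # Descendants of the last child need no vertical bar to their left.
            walk(kid, prefix + ("    " if last else "│   "))

    walk(root, "")
    return lines


children = {
    "team-a": ["svc1-team-a", "svc2-team-a"],
    "svc1-team-a": ["db-svc1-team-a"],
}
print("\n".join(render_tree("team-a", children)))
# team-a
# ├── svc1-team-a
# │   └── db-svc1-team-a
# └── svc2-team-a
```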

If there are any policies in the parent namespace, they are copied to the child*. For example, suppose team-a has an RBAC RoleBinding called sres. This RoleBinding will also appear in the subnamespace:

$ kubectl describe rolebinding sres -n svc1-team-a
# Output:
Name:         sres
Labels:       hnc.x-k8s.io/inheritedFrom=team-a  # inserted by HNC
Annotations:  <none>
Role:
  Kind:  ClusterRole
  Name:  admin
Subjects: …

* By default, only RBAC Roles and RoleBindings are propagated, but you can configure HNC to propagate any Kubernetes object.
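The propagation behavior can be modeled in a few lines. The sketch below assumes an acyclic hierarchy and copies each RoleBinding name into every descendant namespace, tagging the copy with the `hnc.x-k8s.io/inheritedFrom` label shown in the output above. The `propagate` function is hypothetical, and its conflict rule (an object defined locally keeps precedence) is a simplification chosen for the example, not a statement about HNC.

```python
INHERITED_FROM = "hnc.x-k8s.io/inheritedFrom"


def propagate(parents, rolebindings):
    """Return namespace -> {object name -> labels} after propagation.

    parents:      namespace -> parent namespace (None for a root), assumed acyclic
    rolebindings: namespace -> names of RoleBindings defined directly in it
    """
    result = {}
    for ns in parents:
        # Objects defined in the namespace itself carry no inheritance label.
        objs = {name: {} for name in rolebindings.get(ns, [])}
        ancestor = parents[ns]
        while ancestor is not None:
            for name in rolebindings.get(ancestor, []):
                # Simplification: keep whichever copy was recorded first.
                objs.setdefault(name, {INHERITED_FROM: ancestor})
            ancestor = parents[ancestor]
        result[ns] = objs
    return result


parents = {"team-a": None, "svc1-team-a": "team-a"}
rolebindings = {"team-a": ["sres"]}
result = propagate(parents, rolebindings)
print(result["svc1-team-a"])  # {'sres': {'hnc.x-k8s.io/inheritedFrom': 'team-a'}}
```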

Finally, HNC adds labels to these namespaces with useful information about the hierarchy. They can be used to apply other policies. For example, you can create the following NetworkPolicy:

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: allow-team-a
  namespace: team-a
spec:
  podSelector: {}  # applies to every pod in the namespace
  ingress:
  - from:
    - namespaceSelector:
        matchExpressions:
        - key: 'team-a.tree.hnc.x-k8s.io/depth'  # Label created by HNC
          operator: Exists

This policy will be propagated to all descendants of team-a and will also allow ingress traffic between all of these namespaces. Only HNC can assign the "tree" label, and it is guaranteed to reflect the current hierarchy.
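To see why an `Exists` selector on this label matches exactly the subtree rooted at team-a, consider the following sketch. It assumes each namespace carries one `<ancestor>.tree.hnc.x-k8s.io/depth` label per ancestor, including itself, and that a namespace is at depth 0 relative to itself; that depth convention, the helper functions, and the namespace team-b are assumptions made for illustration.

```python
def tree_labels(namespace, parents):
    """Build the tree labels for `namespace` by walking up to the root.

    Assumption: depth 0 is the namespace itself, 1 its parent, and so on.
    """
    labels = {}
    depth = 0
    current = namespace
    while current is not None:
        labels[f"{current}.tree.hnc.x-k8s.io/depth"] = str(depth)
        current = parents.get(current)
        depth += 1
    return labels


def matches_exists(labels, key):
    # An Exists match expression only checks that the key is present.
    return key in labels


parents = {"team-a": None, "svc1-team-a": "team-a", "team-b": None}
key = "team-a.tree.hnc.x-k8s.io/depth"
for ns in parents:
    print(ns, matches_exists(tree_labels(ns, parents), key))
# team-a True
# svc1-team-a True
# team-b False
```

Because the selector tests only for the presence of the key, it needs no updating when new subnamespaces are added under team-a.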

Details on HNC's functionality can be found in the user guide.

Next steps and participation in the process

If you think hierarchical namespaces could be useful in your organization, HNC v0.5.1 is available on GitHub (translator's note: v0.5.2 has been available since 28 August). We would like to know what you think of it, which problems you are using it to solve, and which features you would most like to see added. As with all early-stage software, you should be cautious about using HNC in production, but the more feedback we get, the sooner we can reach HNC 1.0.

We also welcome outside contributions, whether bug fixes, bug reports, or help prototyping new features such as exceptions, improved monitoring, hierarchical resource quotas, or configuration tuning.

You can contact us via the repository, the mailing list, or Slack. We look forward to your comments!

The original announcement was made by Adrian Ludwin, Software Engineer and Technical Lead for the Hierarchical Namespace Controller.

Bonus! Roadmap and issues

Please file issues: the more, the merrier! Bugs are triaged first; feature requests are prioritized and then assigned to a milestone or to the backlog.

HNC has not yet achieved GA status, so be careful when using it on clusters with configuration objects that you cannot afford to lose (for example, those that are not stored in a Git repository).

Every HNC issue is assigned to a milestone in the roadmap. The following major milestones have been completed or are planned:

- v1.0: late Q1 or early Q2 2021; HNC is recommended for production use.

- v0.8: early 2021; new critical features may be added.

- v0.7: end of 2020; the v1beta1 API will most likely be introduced.

- v0.6: 2020; v1alpha2 API.

- v0.5: 2020.

- v0.4: 2020; API and production-readiness work.

- v0.3: 2020; subnamespace UX.

- v0.2: 2019; non-production use.

- v0.1: 2019.
