World champion Garry Kasparov defeated the IBM Deep Blue computer in 1996, but was defeated in 1997, losing 4-2.

The 1997 victory of the IBM Deep Blue computer over the grandmaster and world champion Garry Kasparov is considered a triumphant watershed in the history of technology, comparable to the landing on the moon. She seems to have demonstrated that computers can beat humans in what was thought to be unique to us: thinking 1 . The symbolic AI technologies used by the DeepBlue computer are considered obsolete today, especially for more complex games like go, which was invented in China two and a half thousand years ago. But in 2016, World Go Champion Lee Sedol was defeated by Google's DeepMind AlphaGo AI system. Researcher and venture capitalist Li Kaifu called this event "Sputnik Moment" for China 2: He believes that it was this that prompted China to invest billions of dollars in AI research in order to catch up and perhaps even surpass the United States. AlphaGo's victory illustrates the flourishing of a new AI paradigm - deep learning and neural networks - that is at the heart of the modern AI revolution.

Why were games like chess and go so important in the history of AI? The pioneers of artificial intelligence research, including Herbert Simon, Allen Newell, John McCarthy and Marvin Minsky, viewed human intelligence through the traditional prism of Western philosophy, the foundations of which were laid by Aristotle. This masculine, Eurocentric view of intelligence, rooted in the Cartesian division of mind and body, prioritized brain skills - logic, mathematics, and the ability to solve problems at the expense of bodily, emotional, social and cultural forms of intelligence. They believed that if Reason (i.e. Logic) distinguishes Man from the Beast, then it is Logic that should be the basis of intelligence.

Blaise Pascal was a philosopher and mathematician. In the 1640s, he invented a machine capable of performing addition to help his father, a tax collector.

Many Western philosophers and mathematicians, from Blaise Pascal to George Boole and Bertrand Russell, have sought to either make computation / logic, which they equated with thought itself, more rigorous mathematically (more "formal"), or to take the next step - to mechanize it. Pascal himself made a computing machine for this purpose, and the culmination of this impulse of Western thought was the invention of the digital computer in the 20th century. The pioneers of AI research in the 1950s and 1960s saw games as another way for humans to demonstrate intelligence by solving problems. If AI researchers could simulate how players do it, they could automate this process. The branch of mathematics called game theory, applied to economics and military affairs, was founded by mathematician and computer pioneer John von Neumann;it provided optimization of strategies and algorithms widely used in computer science. AI research pioneer Herbert Simon has applied these theories to both computer science and economics (in which he won the Nobel Prize). Hence, the idea that games can seriously model aspects of the real world was central to the early days of computer science. In particular, because early computers had difficulty simulating the complexity of the real world, games were considered a simplified “microworld,” whose limitations and rules were well understood by computers that made rapid progress possible in the 1960s.Hence, the idea that games can seriously model aspects of the real world was central to the early days of computer science. In particular, because early computers had difficulty simulating the complexity of the real world, games were considered a simplified "microworld" whose limitations and rules were well understood by the computers that made rapid progress possible in the 1960s.Hence, the idea that games can seriously model aspects of the real world was central to the early days of computer science. In particular, because early computers had difficulty simulating the complexity of the real world, games were considered a simplified “microworld,” whose limitations and rules were well understood by computers that made rapid progress possible in the 1960s.

Wolfgang von Kempelen's Turok chess machine, controlled by a living player hidden inside.

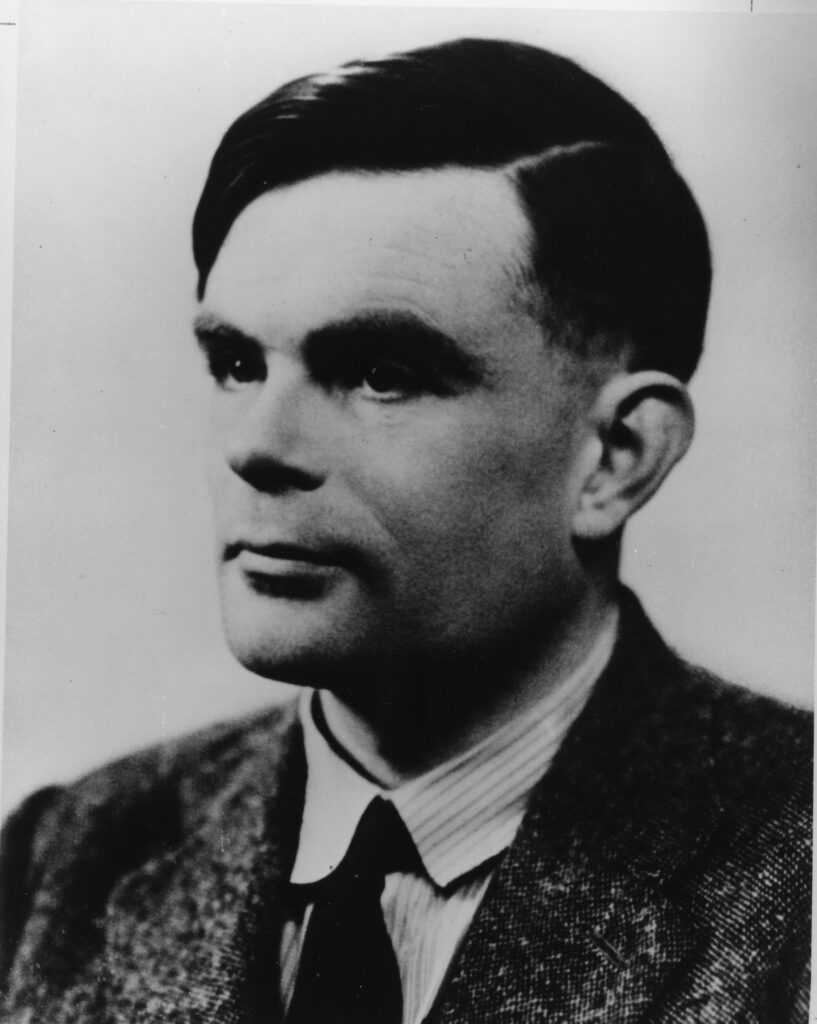

Chess, in particular, has historically been considered the pinnacle of intellectual activity in the West. It was an intellectual game associated with logic and strategy. Think of Mr. Spock from Star Trekdefeating human players in 3D chess. Even in the 18th century, the European elite was fascinated by the idea of machines that could play chess. Wolfgang von Kempelen became famous for his "Mechanical Turk" - a chess machine made for the Austrian Empress Maria Theresa and defeating Benjamin Franklin and Napoleon. Later it turned out that the "Turk" was a fake and a live player was hiding inside it; nevertheless, he struck the imagination of Edgar Allan Poe and Charles Babbage. Interest in chess as an indicator of intelligence extended to the mathematicians who laid down the theory of computation in the 20th century: Alan Turing, Claude Shannon, John von Neumann, Norbert Wiener, and, of course, the pioneers of AI Herbert Simon, Allen Newell and John McCarthy. In particular, Newell and Simon considered chess to be a model challenge for AI,perfect for their preferred solution: search.

1947 .

MIT Bell Labs () 1950 , . MIT ( ). (. 1950 ).

, , 1980 .

What is search and how can you use it when playing chess? In the context of AI, search does not mean searching for text on the web using Google (although a web search engine may use the concept of search in an AI context). In AI, search refers to the process of trial and error in traversing possible solutions to a problem. Search is one of the fundamental methods of classical AI, also known as "symbolic" AI, because such methods involve manipulating lists of symbols, for example, as in solving algebraic problems. All kinds of problem-solving processes, such as theorem proving, puzzle solving, games, and maze-solving, involve deciding what to try first. These choices can be modeled as a branching decision tree.

.

-. , , - «».

Let's say we need to create a robot mouse looking for a way out of a maze (roughly what Claude Shannon did in 1950). If she hits an intersection with four doors, she can move to the right, forward and left, but she is forbidden to go back. This gives us three possible choices. Computer scientists would say that a mouse has a “branching factor” of 3. The simplest way to program a computer to walk through (solve) a maze would be to check each option, or branch, in turn. This is called brute-force search: we test every variation. However, our mouse will, of course, go to one more intersection before it is given the opportunity to return to check all other options at the first intersection. Every time she reaches a new intersectionthe mouse can choose between three more new paths. We can set the number of intersections that the mouse can search in depth before going back and trying a different path.

Claude Shannon moves his electric mouse in a maze (c. 1952).

This is referred to as search depth, and in the context of gaming, “look ahead”. As you can see, the number of paths that need to be searched by the mouse grows very quickly: like 3 (the branching factor), multiplied by itself as many times as we proactively check in the decision tree. In other words, the problem is growing exponentially. In the AI industry, this is often referred to as the "combinatorial explosion" problem.

The chessboard in early theories

A similar method can be used in chess as well. On each player's move, we have a choice of a maximum of 38 possible allowed moves, that is, the chess problem has a branching factor of 38. To select the best of these 38 moves, a quantitative method is used to assess the relative benefit of one chess position over another. This is called the “scoring function”. The average chess game takes 42 moves, and since there are two players, this needs to be multiplied by two, which gives us approximately 38 84 - more than the number of stars in the universe. Even in the early stages of the history of AI, it became clear that such a search by brute-force search for chess and other tasks simply would not work on the equipment of that time; there are too many options and computers are too weak. Claude Shannon was one of the first to use the algorithm "minimax "in a computer chess program (this algorithm is still the basis of most chess programs today), having noticed that thanks to human knowledge and experience in the game, you can quickly cut off many branches without looking at them. Herbert Simon and Allen Newell proposed using" heuristics ", or rules of thumb that humans partly use when solving problems, most often they work, but this does not always happen Heuristics are the kind of human knowledge that can be programmed into a computer.

Chess

Chess has many more branches in its decision tree than tic-tac-toe. It has been calculated that the number of variants of chess games is approximately equal to 10 120 , which is more than the number of atoms in the universe.

Bounded lookahead

Given the huge number of branches, chess programs can look forward through the search tree only to a finite depth, otherwise the search will last forever.

One such heuristic that has proven useful in chess is " alpha-beta cutoff". This means that if the program has determined that one of the moves can easily be counteracted by the enemy, then there is no need to look for other ways in which he can counteract the same move. Further search along this path can be ignored, cutting off this entire branch from the tree This can significantly reduce the branching factor, from 38 to 6, and sometimes to 3. In addition, given the limitations of computers at the time, most programs could only look 4 moves ahead One of the first chess programs capable of competently playing against amateurs , was created around 1959-1962 by MIT student Alan Kotok under the direction of John McCarthy The Kotoka-McCarthy program used alpha-beta clipping.

, IBM 7090 . , - . 1967 , , 3-1 .

1959 MIT , . , , . . 1962 .

Newell and Simon believed that all AI problems, such as chess, could be solved by searching in combination with heuristics, or "heuristic searching." Heuristic search was the central idea behind Newell and Simon's early breakthroughs, the Logic Theorist and General Problem Solver, and became a critical pillar in their theory that intelligence for both humans and machines lies in the simple manipulation of symbols, the fundamental building blocks. mathematics and language. This "physical system of symbols" hypothesis became the premise on which the entire project of symbolic artificial intelligence was based, from its inception in the 1950s to the early 2000s. This theory, which postulated the equivalence of the "brain" of computers and humans, has become extremely influential in cognitive psychology.and later even got into popular culture thanks to works in the cyberpunk genre, in which people could upload their brains to the Internet or replace them with chips.

() , -, Logic Theorist, General Problem Solver NSS ( --). JOHNNIAC RAND.

Computers got faster, and computer scientists who were themselves experienced chess players, such as Richard Greenblatt and Hans Berliner, created their own chess programs. They found that the first chess programs (such as the one written by Kotok) played extremely poorly and added their own knowledge of how real players approach the game to their programs; this knowledge took the form of additional heuristics to improve the estimation of the position of pieces, databases of opening moves and endgames, and recognizers of patterns of the playing field. Over time, however, it became clear that chess programs run on faster computers or specialized equipment can outperform programs that have a large amount of human knowledge built into them. This happened because no heuristic is perfect and cannot account for all situations.Sometimes ingenious moves arise because the player is trying to do something that most people would consider a bad move. Most heuristics would cut off such a move without further search, which means that programs using human knowledge would never make such a move.

Hans Berliner (background), Murray Campbell (left) and Feng Xiong Xu at the 20th Annual ACM Computer Chess Championships in Reno, NV. First place was shared by two teams - HiTech (Berliner's team) and Deep Thought (Campbell's and Xu's team); both of them represented Carnegie Mellon University. Three members of the Deep Thought team (including Campbell and Xu) were later hired by IBM to create Deep Blue.

As computers got faster, they were able to look ahead more deeply, 6, 7, 8 moves, easily defeating programs that predicted only 4 moves ahead. A more efficient search algorithm was discovered called "iterative deepening search"; he could gradually increase the depth of search along the path that looked the most promising. It was first used in Chess 4.5.3 David Slate and Larry Atkins - the first program to win in 1976 in a human chess tournament. The increased memory capacity also allowed programs to retain previously reviewed positions, further reducing the amount of searches required. All these innovations (alpha-beta pruning, iterative deepening, storing verified positions and a database of openings and endgames) were freely exchanged by the developers of chess programs in computer chess tournaments, so they became standard techniques.

In 1977, Ken Thompson (better known as the co-inventor of the Unix operating system) and Joe Condon of Bell Laboratories designed the Belle, a specialized chess machine. Belle's specialized chess equipment and endgame database have revolutionized computer chess.

Belle 13- . Belle , Cray Blitz. 1970 1994 (Association for Computing Machinery, ACM) .

1980- Belle, Bell Labs, . Belle CHAOS WCCC 1980 , , . Belle CHAOS .

Belle Chess 4.0 4- (WCCC), - 1983 . : , . : Chess . Cray Blitz, Bebe.

Despite the advances in software, with the increasing speed of computers in the 1970s, chess programs automatically got better without any software innovation. By the 1980s, the dominant factor in advances in computer chess was the use of hardware to speed up search. They have become a computer design challenge, not an AI challenge. In 1997, Deep Blue still largely used the same programming techniques as chess programs 20 years before; however, he managed to defeat Kasparov mainly because he was a fast computer with many specialized parallel processors. In a sense, as computers grew in speed, chess programs became less intelligent.

Deep Thought I, 1988 . Deep Thought, — , Deep Blue.

Deep Blue.

IBM Deep Blue ( , , , , . . ).

1997 Deep Blue (-).

In the 1980s, depth-first search as the dominant topic in AI research was already in decline. Beginning in the 1960s, researchers such as Ed Feigenbaum at Stanford have created so-called “expert systems,” in which large amounts of human expert knowledge were poured into AI programs in the form of if-then rules. As in the case of the first heuristic programs, these rules were programmed into the software code, but unlike heuristic systems, the "knowledge base" was separated from the logical parts of the program ("inference machines"). Feigenbaum and other adherents of expert systems argued that "knowledge is power." In other words, they believed that a large knowledge base compensates for the lack of complex reasoning: the more knowledge, the less search, and vice versa.

Tiger in a Cage: Applying Knowledge Base Systems, Lecture by Edward Feigenbaum, 1993

Discussion of the history of AI at AAAI-17: expert systems, 2017

In the 1980s, expert systems spawned many commercial companies. All this activity almost did not affect chess programs, which at that time were unfolding in a different direction: back to brute-force searching with the help of specialized equipment. The leading chess machines of this type were Ken Thompson's Belle of Bell Labs and two separate Carnegie Mellon University projects: Hans Berliner's HiTech with Feng Xiong Xu and Murray Campbell's Deep Thought, which later evolved into IBM's Deep Blue. That is, by the time the machine defeated Kasparov, chess programs had practically ceased to be associated with the general field of AI research, although they provided good advertising.

More troubling, however, was the attack on a symbolic AI project based on Newell and Simon's physical symbol hypothesis by the early 1990s. His critics, in particular the philosopher Hubert Dreyfus, began to question the project of symbolic AI back in the 1960s, arguing that the philosophical assumption of the separation of the brain and body was incorrect and outdated. 20th century philosophers such as Martin Heidegger argued that human thought cannot be separated from bodily experience and the immediate cultural environment of the subject.

AI researchers reacted very sharply to criticism of Dreyfus (although he himself was not particularly diplomatic): leading authorities in the field of AI threatened journals when they published Dreyfus's work. They gloated when Dreyfus, who was not very good at chess, was defeated by Richard Greenblatt's MacHack chess program. However, the success of chess programs did not prove that Dreyfus's criticism was wrong. In fact, the very fact that chess programs like Deep Blue used brute-force search meant that they played little role in a larger general-purpose AI project. The drama of Kasparov's thunderous defeat was hailed as a milestone for the victory of Machines over Man, but in reality it was a triumph of Deep Blue engineers over one chess player. And the creators of Deep Blue did not claimthat their computer was intelligent. They said: if a fire started in the building, Kasparov would be smart enough to escape, and the car would remain in place. And although previously AI pioneer John McCarthy considered chess to be the main goal of AI, after the victory of Deep Blue, he criticized chess for the fact that it failed to develop a single new theory on how to mimic human intelligence.

The media portrayed the 1997 replay between world chess champion Garry Kasparov and the specialized supercomputer IBM Deep Blue as a battle between man and machine. On the cover of Newsweek, she was dubbed "The Brain's Last Line of Defense." Such views exaggerated the power of the computer and minimized the labor of the people who built the machine itself.

By the early 1990s, researchers were beginning to take Dreyfus criticism seriously and began to come up with new kinds of AI, such as those that emphatically have a body, such as Rodney Brooks robots 4, or those that deal with emotions. As we'll see in the second part of this article, in the 2000s, a completely different tradition of AI called machine learning began to replace symbolic AI. Machine learning is capable of performing tasks that symbolic AI has never done better than humans, such as recognizing faces or understanding human speech. The same was true of a game that machines could not play competitively with heuristic search, namely go.

However, while search has lost its greatness as a primary AI technique, it has never lost its usefulness in the broader field of computer science. Significant progress has been made in improving search algorithms for optimal and efficient problem solving. This technique is so fundamental that the creation and search of decision trees is extremely widespread; it is almost impossible to list all the programs that use it.

Search plays a role in any task of obtaining information, from executing queries against databases to searching the web. A * search algorithm first invented for the Shakey robotSRI, is widely used for autonomous vehicle routing and GPS applications. And even today, AI programs that play games using machine learning use different types of search, even if it is no longer the most interesting component of them. However, like other techniques that used to be considered "artificial intelligence", modern search is considered just another basic computer technique, no more intelligent than a regular program. This illustrates the historical pattern of AI development: once it becomes standard and automatic, people no longer regard it as "intelligence." Previously, when we talked about "AI", they most likely meant search. When “AI” is mentioned today, it is usually meant to refer to the successor to symbolic AI — machine learning.

In the second part of this article, we'll explore the machine learning revolution in artificial intelligence, the depth of the difference between deep learning versus search and symbolic AI, and how DeepMind's AlphaGo used deep learning to defeat World Go Champion Lee Sedol.

Notes

1. Nathan Ensmenger, “Is Chess the Drosophila of Artificial Intelligence? A Social History of an Algorithm, ” Social Studies of Science 42, no. 1 (February 2012): 22, https://doi.org/10.1177/0306312711424596 .

2. Kai-Fu Lee, AI Superpowers: China, Silicon Valley, and the New World Order. (Boston; New York: Houghton Mifflin Harcourt, 2019), 1-5.

3. Stuart J. Russell and Peter Norvig, Artificial Intelligence: A Modern Approach , 3rd ed., Prentice Hall Series in Artificial Intelligence (Upper Saddle River, NJ: Pentice Hall, 2010), 110.

4. Rodney A. Brooks, “ Elephants Don't Play Chess, ” Robotics and Autonomous Systems, Designing Autonomous Agents, 6, no. 1 (June 1, 1990): 3-15, https://doi.org/10.1016/S0921-8890(05)80025-9 .

Advertising

If you need servers with instant activation on Linux or Windows to work , then you definitely come to us - the server is ready to work in a minute after payment!