Hello, Habr.

I once came across a description of an Android app that measured heart rate with the phone's camera, using nothing but the overall image. The finger was not pressed against the lens, and the LED flash was not used. Interestingly, the reviewers did not believe the pulse could be measured this way, and the app was rejected. I don't know how things turned out for the author, but I became curious whether this is possible at all.

For those interested in how it turned out, details are under the cut.

Of course, I will not write an Android app; it is much easier to test the idea in Python.

Getting data from the camera

First, we need to get a video stream from the webcam; we will use OpenCV for this. The code is cross-platform and runs on Windows, Linux and OS X.

```python
import time

import cv2

cap = cv2.VideoCapture(0)
cap.set(cv2.CAP_PROP_FRAME_WIDTH, 1920)
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 1080)
cap.set(cv2.CAP_PROP_FPS, 30)

while True:
    ret, frame = cap.read()
    if not ret:  # no frame: camera unavailable or stream ended
        break

    # Our operations on the frame come here
    img = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # Display the frame
    cv2.imshow('Frame', img)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```

The idea behind measuring the pulse is that skin tone changes slightly as blood flows through the vessels, so we need a crop of the image that contains only a patch of skin.

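```python
# Region of interest: a 100x100 patch of skin (adjust to your camera and position)
x, y, w, h = 800, 500, 100, 100

# Inside the loop, after converting the frame to grayscale:
crop_img = img[y:y + h, x:x + w]
cv2.imshow('Crop', crop_img)
```

If everything is done correctly, running the program should show the camera image (blurred here for privacy reasons) and the crop.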
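The crop coordinates are fixed, while cap.set only requests 1920x1080. A camera that does not support that resolution may silently deliver a smaller frame, and then img[500:600, 800:900] is an empty array whose average is NaN. A small defensive sketch, assuming you want to keep a fixed-size rectangle, shifts it back inside the frame; safe_crop is a hypothetical helper, not part of OpenCV.

```python
import numpy as np


def safe_crop(img: np.ndarray, x: int, y: int, w: int, h: int) -> np.ndarray:
    """Return img[y:y+h, x:x+w], moved (and shrunk if needed) to fit inside img.

    Hypothetical helper: protects against frames smaller than the
    resolution requested with cap.set().
    """
    rows, cols = img.shape[:2]
    # Shrink the rectangle if the frame itself is smaller than it
    w = min(w, cols)
    h = min(h, rows)
    # Shift the top-left corner so the rectangle ends inside the frame
    x = max(0, min(x, cols - w))
    y = max(0, min(y, rows - h))
    return img[y:y + h, x:x + w]
```

On a 1920x1080 frame this returns exactly the same patch as the plain slice; on a 640x480 frame it returns the bottom-right 100x100 patch instead of an empty array.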

Processing

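Next, we accumulate the measurements: the average brightness of the crop together with the time it was taken, keeping the last 128 values.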

```python
import time

import numpy as np

heartbeat_count = 128
heartbeat_values = [0] * heartbeat_count
heartbeat_times = [time.time()] * heartbeat_count

while True:
    ...
    # Update the list: drop the oldest value, append the newest one
    heartbeat_values = heartbeat_values[1:] + [np.average(crop_img)]
    heartbeat_times = heartbeat_times[1:] + [time.time()]
```

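numpy.average computes the mean brightness of all pixels in the crop; each new value is appended to the end of the list, and the oldest one is discarded.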
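The list slicing above builds a new list on every frame. An alternative sketch, not what the author used, is collections.deque with maxlen, which drops the oldest element automatically on append. The helper name add_sample is hypothetical.

```python
import time
from collections import deque

HEARTBEAT_COUNT = 128

# maxlen makes each deque discard its oldest element when a new one is appended
heartbeat_values = deque([0.0] * HEARTBEAT_COUNT, maxlen=HEARTBEAT_COUNT)
heartbeat_times = deque([time.time()] * HEARTBEAT_COUNT, maxlen=HEARTBEAT_COUNT)


def add_sample(value: float, timestamp: float) -> None:
    """Store one brightness measurement and the time it was taken."""
    heartbeat_values.append(value)
    heartbeat_times.append(timestamp)
```

In the loop this would be called as add_sample(float(np.average(crop_img)), time.time()), and the data passed to matplotlib as list(heartbeat_times), list(heartbeat_values). With only 128 elements the speed difference is negligible; the main benefit is that the container itself enforces the fixed length.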

Now let's draw the values as a graph with matplotlib:

fig = plt.figure()

ax = fig.add_subplot(111)

while(True):

...

ax.plot(heartbeat_times, heartbeat_values)

fig.canvas.draw()

plot_img_np = np.fromstring(fig.canvas.tostring_rgb(), dtype=np.uint8, sep='')

plot_img_np = plot_img_np.reshape(fig.canvas.get_width_height()[::-1] + (3,))

plt.cla()

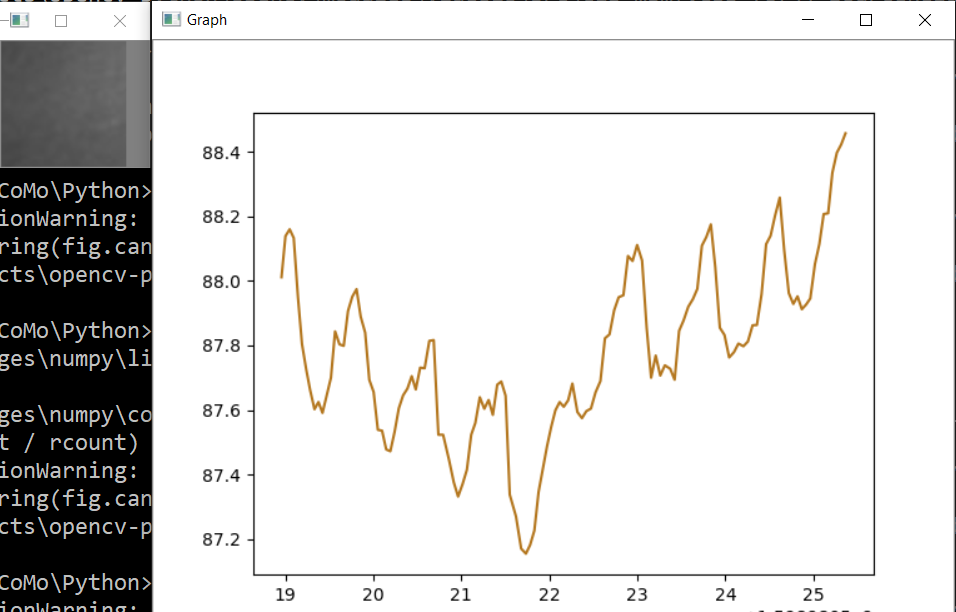

cv2.imshow('Graph', plot_img_np): OpenCV numpy, matplotlib , numpy.fromstring.
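The graph shows raw brightness. To turn such a curve into beats per minute, one common approach (a sketch, not necessarily the method used in this article) is to find the strongest frequency in the typical heart-rate range. The function estimate_bpm and its defaults are hypothetical: it assumes the buffer already holds real samples, resamples them onto a uniform grid (the time.time() stamps are not evenly spaced), removes the mean and any linear lighting drift, and picks the FFT peak between 45 and 180 bpm.

```python
import numpy as np


def estimate_bpm(times, values, min_bpm=45.0, max_bpm=180.0, fs=30.0):
    """Estimate pulse rate (beats per minute) from brightness samples.

    Sketch under assumptions: the pulse is the strongest spectral component
    between min_bpm and max_bpm after resampling to fs Hz.
    """
    t = np.asarray(times, dtype=float)
    v = np.asarray(values, dtype=float)
    # Uniform time grid, since per-frame timestamps jitter
    grid = np.arange(t[0], t[-1], 1.0 / fs)
    if grid.size < 2:
        raise ValueError("not enough samples")
    u = np.interp(grid, t, v)
    # Remove the constant brightness level and linear drift
    rel = grid - grid[0]
    u = u - np.polyval(np.polyfit(rel, u, 1), rel)
    # Hann window reduces spectral leakage from the finite record
    u = u * np.hanning(u.size)
    spectrum = np.abs(np.fft.rfft(u))
    freqs = np.fft.rfftfreq(u.size, d=1.0 / fs)
    band = (freqs >= min_bpm / 60.0) & (freqs <= max_bpm / 60.0)
    if not band.any():
        raise ValueError("record too short for the requested band")
    peak_hz = freqs[band][np.argmax(spectrum[band])]
    return peak_hz * 60.0
```

Note the resolution: 128 frames at 30 fps cover only about 4.3 s, so the FFT bins are roughly 14 bpm apart. A longer buffer (for example 20 s, giving bins about 3 bpm apart) is needed for a usable estimate.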

.

, , , " ", - . - !

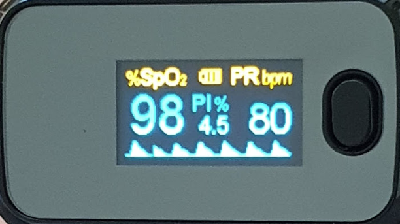

, , , . , ! , 0.5% , " ", . , , 75bpm. , :

, .. , , .

, . , . , , OpenCV . , .

What if you want to try this on a recorded video rather than a live webcam? Simply replace cap = cv2.VideoCapture(0) with cap = cv2.VideoCapture("video.mp4").
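One caveat with files: the loop stamps each sample with time.time(), which reflects how fast frames are processed rather than the video's own timeline, so the time axis can be stretched or compressed. A minimal sketch, assuming the file reports a correct nominal frame rate, stamps each frame as frame_index / fps instead. The function sample_video is hypothetical; read_frame is anything that behaves like cv2.VideoCapture.read, and the gray value uses the BT.601 weights that OpenCV's BGR-to-gray conversion is based on, so it matches cv2.cvtColor up to rounding.

```python
import numpy as np

# BGR weights of Y = 0.299 R + 0.587 G + 0.114 B (OpenCV's BGR2GRAY formula)
BGR_TO_GRAY = np.array([0.114, 0.587, 0.299])


def sample_video(read_frame, fps, x, y, w, h):
    """Read frames until the source is exhausted; return (times, values).

    read_frame: callable returning (ok, frame), e.g. cap.read
    fps: nominal frame rate, e.g. cap.get(cv2.CAP_PROP_FPS)
    Timestamps are frame_index / fps, i.e. video time, not wall-clock time.
    """
    if fps <= 0:
        raise ValueError("unknown frame rate")
    times, values = [], []
    index = 0
    while True:
        ok, frame = read_frame()
        if not ok:
            break
        crop = frame[y:y + h, x:x + w].reshape(-1, 3).astype(float)
        # Mean gray level of the crop (gray is linear in B, G, R)
        values.append(float(crop.mean(axis=0) @ BGR_TO_GRAY))
        times.append(index / fps)
        index += 1
    return times, values
```

Usage would look like times, values = sample_video(cap.read, cap.get(cv2.CAP_PROP_FPS), 800, 500, 100, 100).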

The complete source code is given below.


```python
import time

import cv2
import numpy as np
from matplotlib import pyplot as plt

# Camera stream
cap = cv2.VideoCapture(0)
cap.set(cv2.CAP_PROP_FRAME_WIDTH, 1920)
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 1080)
cap.set(cv2.CAP_PROP_FPS, 30)

# Video stream (optional)
# cap = cv2.VideoCapture("videoplayback.mp4")

# Image crop
x, y, w, h = 800, 500, 100, 100

heartbeat_count = 128
heartbeat_values = [0] * heartbeat_count
heartbeat_times = [time.time()] * heartbeat_count

# Matplotlib graph surface
fig = plt.figure()
ax = fig.add_subplot(111)

while True:
    # Capture frame-by-frame
    ret, frame = cap.read()
    if not ret:  # camera error or end of the video file
        break

    # Our operations on the frame come here
    img = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    crop_img = img[y:y + h, x:x + w]

    # Update the data
    heartbeat_values = heartbeat_values[1:] + [np.average(crop_img)]
    heartbeat_times = heartbeat_times[1:] + [time.time()]

    # Draw matplotlib graph to numpy array
    ax.plot(heartbeat_times, heartbeat_values)
    fig.canvas.draw()
    plot_img_np = np.asarray(fig.canvas.buffer_rgba())  # H x W x 4, RGBA
    plot_img_np = cv2.cvtColor(plot_img_np, cv2.COLOR_RGBA2BGR)
    plt.cla()

    # Display the frames
    cv2.imshow('Crop', crop_img)
    cv2.imshow('Graph', plot_img_np)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```

And, as usual, good luck with your experiments.