The Chromium browser, the sprawling open-source parent of Google Chrome and the new Microsoft Edge, has drawn serious criticism for a well-intentioned feature that checks whether a user's ISP is hijacking responses for non-existent domains.

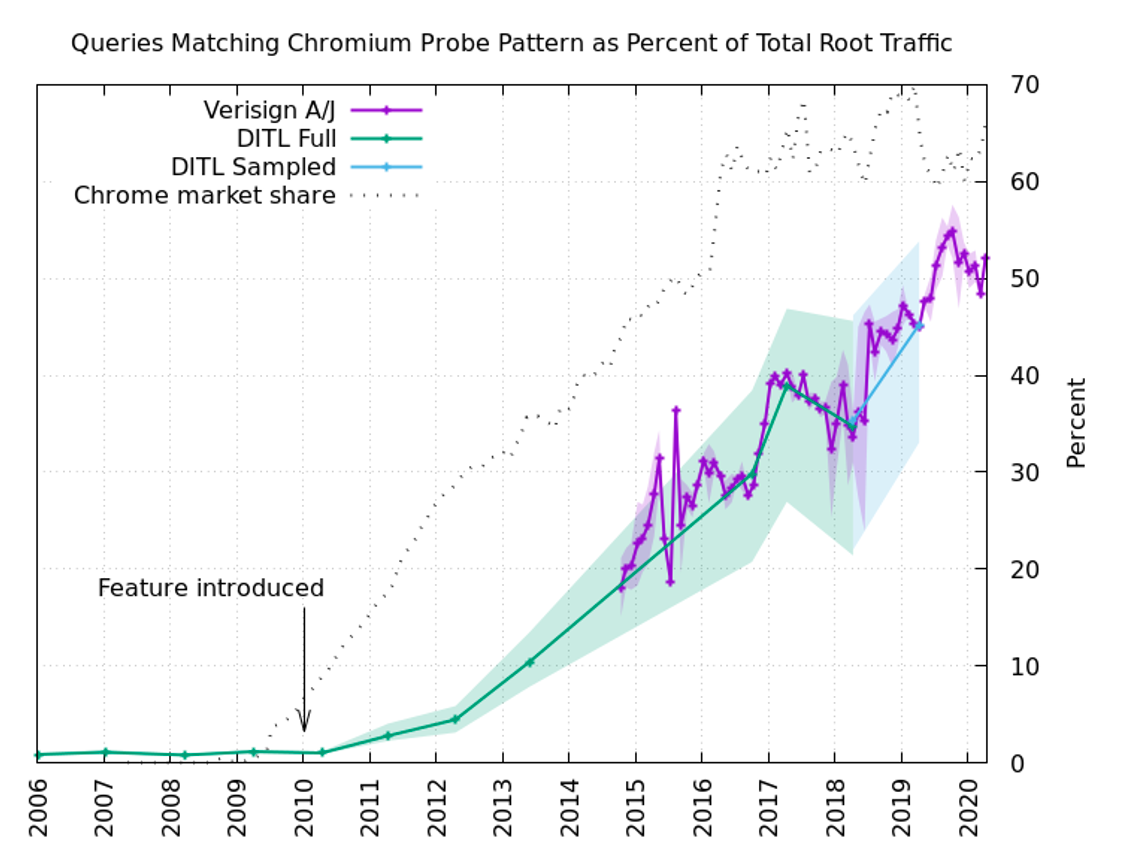

The Intranet Redirect Detector, which generates fake queries for random "domains" that are statistically unlikely to exist, is responsible for roughly half of all traffic received by the world's root DNS servers. Verisign engineer Matt Thomas wrote a lengthy post on the APNIC blog describing the problem and assessing its scale.

How DNS lookups are usually done

The root servers are the ultimate authority to consult when resolving .com, .net, and so on, which is also how they can tell you that frglxrtmpuf is not a top-level domain (TLD).

DNS, or Domain Name System, is a system by which computers can translate memorable domain names like arstechnica.com into much less convenient IP addresses such as 3.128.236.93. Without DNS, the Internet would not be able to exist in a human-friendly form, which means that unnecessary load on top-level infrastructure is a real problem.

Loading a single modern web page can take a surprising number of DNS lookups. For example, when we analyzed the ESPN homepage, we counted 93 separate domain names, ranging from a.espncdn.com to z.motads.com. All of them are required for a full page load!

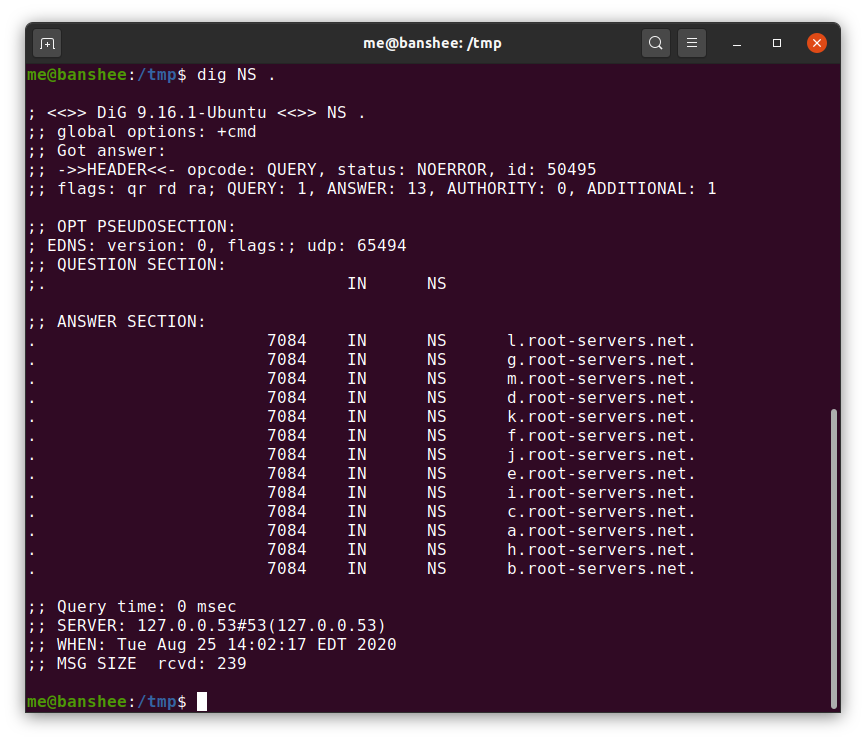

DNS is designed as a multi-level hierarchy so that it can handle this kind of worldwide workload. Near the top of this pyramid are the top-level domain servers: each top-level domain, such as .com, has its own family of servers that are the ultimate authority for every domain beneath it. One step above those are the root servers themselves, a.root-servers.net through m.root-servers.net.
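A minimal sketch of how a query walks down this hierarchy, written in Python purely for illustration. The server labels (`root`, `gtld`, `ns1.domain.com`), the zone contents, and the address 192.0.2.10 (from a documentation-only range) are all hypothetical; a real resolver speaks the DNS wire protocol, uses glue records, and handles far more record types.

```python
# Toy model of DNS delegation. All zone data below is hypothetical.
ROOT = "root"

# Each "server" maps names it knows to either a referral ("NS", next_server)
# or a final answer ("A", ip_address).
ZONES = {
    "root": {"com": ("NS", "gtld")},
    "gtld": {"domain.com": ("NS", "ns1.domain.com")},
    "ns1.domain.com": {"domain.com": ("A", "192.0.2.10")},
}


def resolve(name, query_log):
    """Start at the root and follow referrals until an answer or NXDOMAIN (None)."""
    server = ROOT
    while True:
        query_log.append(server)  # record every server we had to ask
        zone = ZONES[server]
        # Entries in this server's zone that are the name itself or a suffix of it.
        matches = [key for key in zone if name == key or name.endswith("." + key)]
        if not matches:
            return None  # NXDOMAIN: this server knows nothing about the name
        record_type, value = zone[max(matches, key=len)]  # most specific match
        if record_type == "A":
            return value
        server = value  # follow the referral one level down


log = []
print(resolve("domain.com", log), log)  # 192.0.2.10 ['root', 'gtld', 'ns1.domain.com']
```

Asking for `domain.com` touches all three levels, while a made-up single label such as `qwajuixk` is turned away by the root on the very first hop, because no TLD by that name exists.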

How often does this happen?

Due to the tiered caching hierarchy of the DNS infrastructure, a very small percentage of the world's DNS queries reach the root servers. Most people get their DNS resolver information directly from their ISP. When a user's device needs to know how to get to a specific site, a request is first sent to a DNS server managed by that local provider. If the local DNS server does not know the answer, it forwards the request to its own "forwarders" (if specified).

If neither the local ISP's DNS server nor its configured "forwarders" have a cached response, the request goes directly to the authoritative servers for the domain one level above the one you are trying to resolve. For domain.com, that means the query is sent to the authoritative servers for the com domain itself, located at gtld-servers.net.

The gtld-servers system responds with a list of authoritative nameservers for domain.com, along with at least one "glue" record containing the IP address of one of those nameservers. The answers then travel back down the chain: each forwarder passes them to the server that asked, until they finally reach the local ISP's server and the user's computer. Along the way, every one of these servers caches the response so that higher-level systems are not bothered unnecessarily.

In most cases, the nameserver records for domain.com will already be cached by one of these forwarders, so the root servers are never bothered. So far, though, we have been talking about the usual kind of URL, one that resolves to an ordinary website. Chromium's queries land a level above that, at the root-servers.net clusters themselves.
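A rough sketch of why caching shields the root from ordinary lookups but not from random probe names. It assumes, as a simplification, a resolver that caches the root's answer per TLD label with no expiry; real resolvers cache individual records, honor TTLs, and follow negative-caching rules. All class and function names here are hypothetical.

```python
import random
import string

KNOWN_TLDS = {"com", "net", "org"}  # hypothetical contents of our toy root zone


class RootServer:
    """Toy root server that counts how many queries reach it."""

    def __init__(self):
        self.queries = 0

    def query(self, tld):
        self.queries += 1
        # A referral to the TLD's servers, or None meaning NXDOMAIN.
        return f"referral:{tld}" if tld in KNOWN_TLDS else None


class CachingResolver:
    """Toy ISP resolver that caches the root's answer for each TLD label forever."""

    def __init__(self, root):
        self.root = root
        self.tld_cache = {}

    def lookup(self, name):
        tld = name.rsplit(".", 1)[-1]  # "www.domain.com" -> "com"; "qwajuixk" -> "qwajuixk"
        if tld not in self.tld_cache:
            self.tld_cache[tld] = self.root.query(tld)  # only cache misses reach the root
        return self.tld_cache[tld]


def random_label(rng):
    """A random 7-15 letter lowercase label, like the probes described in this article."""
    return "".join(rng.choices(string.ascii_lowercase, k=rng.randint(7, 15)))


root = RootServer()
resolver = CachingResolver(root)
for _ in range(1000):
    resolver.lookup("domain.com")
    resolver.lookup("www.arstechnica.com")
print(root.queries)  # 1: every .com lookup after the first is served from cache

rng = random.Random(0)
for _ in range(3):
    resolver.lookup(random_label(rng))
print(root.queries)  # 4: each never-before-seen random label is a cache miss
```

Two thousand ordinary lookups cost the root a single query, while just three random probes cost it three more.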

Chromium and the NXDOMAIN hijack test

Chromium's "Is this DNS server messing with me?" checks account for nearly half of all traffic reaching Verisign's root DNS server cluster.

The Chromium browser, the parent project of Google Chrome, the new Microsoft Edge, and countless lesser-known browsers, wants to give users the convenience of a single search field, sometimes called the "Omnibox." In other words, the user types both real URLs and search-engine queries into the same text box at the top of the browser window. Going one step further in the name of simplicity, it also doesn't force the user to type the http:// or https:// part of a URL.

As convenient as this is, the approach requires the browser to work out what counts as a URL and what counts as a search query. Most of the time this is fairly obvious: a string containing spaces, for example, cannot be a URL. But things get trickier once you consider intranets, private networks that may also use private top-level domains to resolve real websites.

If a user on a company intranet types "marketing" and the intranet has an internal website by that name, Chromium displays an infobar asking whether the user wanted to search for "marketing" or go to https://marketing. So far, so good, but many ISPs and public Wi-Fi providers "hijack" every mistyped URL, redirecting the user to some page full of banner ads.
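The URL-versus-search decision described above might be sketched like this. The function name and its rules are hypothetical simplifications for illustration, not Chromium's actual Omnibox logic; the point is that a lone word such as "marketing" genuinely cannot be classified without more information.

```python
def classify_omnibox_input(text):
    """Guess whether Omnibox text is a URL, a search query, or ambiguous.

    Hypothetical, simplified heuristic for illustration only.
    """
    text = text.strip()
    if any(ch.isspace() for ch in text):
        return "search"  # a string containing spaces cannot be a URL
    if text.startswith(("http://", "https://")):
        return "url"  # an explicit scheme settles it
    if "." in text.strip("."):
        return "url"  # looks like a hostname such as arstechnica.com
    return "ambiguous"  # a single word: a search, or an intranet host?


for entry in ["marketing plan", "arstechnica.com", "https://marketing", "marketing"]:
    print(entry, "->", classify_omnibox_input(entry))
```

Only the last entry needs the intranet question; on a network known to hijack NXDOMAIN answers, a browser could simply treat such entries as searches.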

Random generation

The Chromium developers did not want users on ordinary networks to see an infobar asking what they meant every time they searched for a single word, so they implemented a test: whenever the browser starts or the network changes, Chromium performs DNS lookups for three randomly generated top-level "domains," each seven to fifteen characters long. If any two of these lookups come back with the same IP address, Chromium assumes that the local network is hijacking the NXDOMAIN errors it should be receiving, so the browser treats every single-word entry as a search query until further notice.
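A rough Python sketch of the probe logic as described above. The `resolve` callable, the function names, and the restriction to lowercase ASCII letters are assumptions for illustration; Chromium's actual implementation is written in C++ and differs in its details.

```python
import random
import string
from typing import Callable, Optional

# Hypothetical resolver interface: returns an IP address, or None for NXDOMAIN.
Resolver = Callable[[str], Optional[str]]


def random_probe_name(rng: random.Random) -> str:
    """Seven to fifteen random lowercase letters (assumed alphabet)."""
    length = rng.randint(7, 15)
    return "".join(rng.choices(string.ascii_lowercase, k=length))


def network_hijacks_nxdomain(resolve: Resolver, rng: Optional[random.Random] = None) -> bool:
    """Return True if at least two of three random names resolve to the same IP."""
    rng = rng or random.Random()
    answers = [resolve(random_probe_name(rng)) for _ in range(3)]
    ips = [ip for ip in answers if ip is not None]  # NXDOMAIN answers are ignored
    return len(ips) != len(set(ips))  # a repeated IP means two probes "resolved" alike


# An honest network answers NXDOMAIN; a hijacking one sends everything to its ad page.
print(network_hijacks_nxdomain(lambda name: None))            # False
print(network_hijacks_nxdomain(lambda name: "198.51.100.7"))  # True
```

Requiring two matching answers, rather than any answer at all, avoids flagging a network where a single random name happens to exist.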

Unfortunately, on networks that do not hijack DNS results, these three lookups usually propagate all the way up to the root nameservers themselves: the local server doesn't know how to resolve qwajuixk, so it forwards the query to its forwarder, which does the same, until finally a.root-servers.net or one of its siblings has to say "Sorry, that's not a domain."

Since there are roughly 1.7 × 10^21 possible fake domain names between seven and fifteen characters long, the chance that any given probe name is already cached somewhere is negligible, so on an honest network almost every one of these tests makes it all the way to a root server. According to statistics from the part of the root-servers.net clusters operated by Verisign, this amounts to as much as half of the total load on the root DNS.
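The size of that name space is easy to check, assuming the probe labels use only the 26 lowercase ASCII letters (an assumption for this sketch). Summing 26^n for n from 7 to 15 gives about 1.74 × 10^21, most of which comes from the 15-letter names alone (about 1.68 × 10^21).

```python
import string

ALPHABET = len(string.ascii_lowercase)  # assumed alphabet: 26 lowercase letters

# Every possible label of length 7 through 15.
total = sum(ALPHABET ** n for n in range(7, 16))
print(f"{total:.3e}")  # 1.744e+21

# The 15-letter names alone dominate the sum.
print(f"{ALPHABET ** 15:.3e}")  # 1.677e+21
```

With a space that large, the odds that a probe name was looked up before, and is therefore sitting in some resolver's cache, are effectively zero.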

History repeats itself

This is not the first time a well-intentioned project has flooded, or nearly flooded, a public resource with unnecessary traffic. It immediately reminded us of the long, sad saga of D-Link and Poul-Henning Kamp's NTP server in the mid-2000s.

In 2005, FreeBSD developer Poul-Henning Kamp, who also ran Denmark's only Stratum 1 Network Time Protocol server, received an unexpected and large bandwidth bill. In short, D-Link's developers had hardcoded the addresses of Stratum 1 NTP servers, including Kamp's, into the firmware of the company's line of switches, routers, and access points. This immediately increased the traffic to Kamp's server ninefold, which led the Danish Internet Exchange (Denmark's Internet exchange point) to change its rate from "free" to "$9,000 per year."

The problem was not that there were too many D-Link routers, but that they "violated the chain of command." Much like DNS, NTP is meant to work hierarchically: Stratum 0 reference clocks feed Stratum 1 servers, which feed Stratum 2 servers, and so on down the hierarchy. A typical home router, switch, or access point, like the ones D-Link hardcoded these NTP server addresses into, should have been querying Stratum 2 or Stratum 3 servers instead.

The Chromium project, no doubt with the best of intentions, has repeated the NTP mistake with DNS, burdening the Internet's root servers with queries they should never have to handle.

There is hope for a quick solution

There is an open bug in the Chromium project requesting that the Intranet Redirect Detector be disabled by default to fix this problem. In fairness to the Chromium project, the bug was found before Verisign's Matt Thomas drew a great deal of attention to it with his post on the APNIC blog. It was opened in June but languished in obscurity until Thomas's post; since then, it has been closely watched.

Hopefully, the problem will be resolved soon, and the root DNS servers will no longer have to answer roughly 60 billion bogus queries every day.
