"Are you living in a computer simulation?"

by Nick Bostrom [Published in Philosophical Quarterly (2003) Vol. 53, No. 211, pp. 243-255. (First version: 2001)]

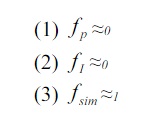

This paper argues that at least one of the following three propositions is true:

- (1) the human species is very likely to go extinct before reaching a "posthuman" stage;

- (2) any posthuman civilization is extremely unlikely to run a significant number of simulations of its evolutionary history (or variations thereof); and

- (3) we are almost certainly living in a computer simulation.

It follows that the belief that there is a significant chance that we will one day become posthumans who run ancestor simulations is false, unless we are currently living in a simulation. A number of other consequences of this result are also discussed.

1. Introduction

Many works of science fiction, as well as forecasts by serious technologists and futurologists, predict that enormous amounts of computing power will be available in the future. Let us suppose for a moment that these predictions are correct. One thing that later generations might do with their super-powerful computers is run detailed simulations of their forebears or of people like their forebears. Because their computers would be so powerful, they could run a great many such simulations. Suppose that these simulated people are conscious (as they would be if the simulations were sufficiently fine-grained and if a certain quite widely accepted position in the philosophy of mind is correct). Then it could be the case that the vast majority of minds like ours do not belong to the original race but rather to people simulated by the advanced descendants of an original race. It is then possible to argue that, if this were the case, we would be rational to think that we are likely among the simulated minds rather than among the original biological ones. Therefore, if we do not think that we are currently living in a computer simulation, we are not entitled to believe that our descendants will run many such simulations of their forebears. That is the basic idea. The rest of this paper spells it out more carefully.

Apart from the interest this thesis may hold for those engaged in futuristic speculation, there are also more purely theoretical rewards. The argument provides a stimulus for formulating some methodological and metaphysical questions, and it suggests naturalistic analogies to certain traditional religious conceptions, which some may find surprising or suggestive.

The structure of this paper is as follows. First, we formulate an assumption that we need to import from the philosophy of mind in order to get the argument started. Second, we consider some empirical reasons for thinking that running vastly many simulations of human minds would be within the capability of a future civilization that has developed many of the technologies that can already be shown to be compatible with known physical laws and engineering constraints.

This part is not philosophically necessary, but it provides an incentive for paying attention to the rest. Then follows the core of the argument, which makes use of some simple probability theory, and a section providing support for the weak principle of equivalence that the argument employs. Lastly, we discuss some interpretations of the disjunction, mentioned at the beginning, that forms the conclusion of the simulation argument.

2. The assumption of substrate independence

A common assumption in the philosophy of mind is that of substrate independence. The idea is that mental states can arise in any of a broad class of physical substrates. Provided a system implements the right sort of computational structures and processes, it can be associated with conscious experiences. It is not an essential property of consciousness that it is implemented on carbon-based biological neural networks inside a cranium: silicon-based processors inside a computer could in principle do the trick as well. Arguments for this thesis have been given in the literature, and although it is not entirely uncontroversial, we shall here take it as a given.

The argument we present here does not, however, depend on any very strong version of functionalism or computationalism. For example, we need not assume that the thesis of substrate independence is necessarily true (either analytically or metaphysically); we need only assume that, in fact, a computer running a suitable program would be conscious. Moreover, we need not assume that in order to create a mind on a computer it would be sufficient to program it so that it behaves like a human in all situations, passes the Turing test, and so on. We need only the weaker assumption that it would suffice for the generation of subjective experiences that the computational processes of a human brain are structurally replicated in suitably fine-grained detail, for example at the level of individual synapses. This attenuated version of substrate independence is quite widely accepted.

Neurotransmitters, nerve growth factors, and other chemicals that are smaller than a synapse clearly play a role in human cognition and learning. The substrate-independence thesis is not that the effects of these chemicals are small or negligible, but that they affect subjective experience only through their direct or indirect influence on computational activity. For example, if there can be no difference in subjective experience without there also being a difference in synaptic discharges, then the required level of simulation detail is the synaptic level (or higher).

3. The technological limits of computation

At our current stage of technological development, we have neither sufficiently powerful hardware nor the requisite software to create conscious minds in computers. But persuasive arguments have been given to the effect that, if technological progress continues unabated, these shortcomings will eventually be overcome. Some authors argue that this stage may be only a few decades away. For present purposes, however, no assumptions about the time scale are required. The simulation argument works equally well for those who think that it will take hundreds of thousands of years to reach a "posthuman" stage of development, where humankind has acquired most of the technological capabilities that one can currently show to be consistent with physical laws and with material and energy constraints.

Such a mature stage of technological development will make it possible to convert planets and other astronomical resources into enormously powerful computers. It is currently hard to be confident in any upper bound on the computing power that may be available to posthuman civilizations. Since we still lack a "theory of everything", we cannot rule out the possibility that novel physical phenomena, not allowed for in current physical theories, may be used to transcend the constraints that, on our current understanding, impose theoretical limits on the information processing attainable in a given piece of matter. We can establish lower bounds on posthuman computation with much greater confidence by assuming only mechanisms that are already understood. For example, Eric Drexler has outlined a system the size of a sugar cube (excluding cooling and power supply) that would perform $10^{21}$ operations per second. Another author gives a rough estimate of $10^{42}$ operations per second for a computer the size of a planet. (If we learn to build quantum computers, or computers made of nuclear matter or plasma, we could get even closer to the theoretical limits. Seth Lloyd calculates an upper bound for a 1 kg computer of $5 \times 10^{50}$ logical operations per second carried out on about $10^{31}$ bits. For our purposes, however, it suffices to use the more conservative estimates, which presuppose only currently known design principles.)

The amount of computing power needed to emulate a human brain can likewise be roughly estimated. One estimate, based on how computationally expensive it is to replicate the functionality of a piece of nervous tissue that we already understand and whose functionality has already been replicated in silicon (namely, contrast enhancement in the retina), gives a figure of about $10^{14}$ operations per second for the whole brain. An alternative estimate, based on the number of synapses in the brain and their firing frequency, gives a figure of $10^{16}$ to $10^{17}$ operations per second. Even more computing power might be needed if we wanted to simulate in detail the internal workings of synapses and dendritic trees. However, it is likely that the human central nervous system has a high degree of redundancy at the microscale to compensate for the unreliability and noisiness of its neural components. One would therefore expect substantial efficiency gains when using more reliable and versatile non-biological processors.
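Setting the hardware figures from the previous paragraph against these per-brain estimates gives a feel for the margins involved. The sketch below simply divides Drexler's sugar-cube figure by each estimate; it ignores cooling, power, memory, and the possible efficiency gains just mentioned, so it is an order-of-magnitude illustration only.

```python
# Order-of-magnitude comparison using only the figures quoted in the text.
DREXLER_CUBE_OPS = 1e21  # sugar-cube nanocomputer, operations per second

BRAIN_OPS_ESTIMATES = {
    "retina-based estimate": 1e14,
    "synapse-based estimate (low)": 1e16,
    "synapse-based estimate (high)": 1e17,
}


def brains_per_device(device_ops: float, brain_ops: float) -> float:
    """Number of human brains a device could emulate in real time,
    counting raw operations per second and nothing else."""
    return device_ops / brain_ops


for label, ops in BRAIN_OPS_ESTIMATES.items():
    print(f"{label}: ~{brains_per_device(DREXLER_CUBE_OPS, ops):.0e} brains")
```

On these numbers a single sugar-cube device would cover somewhere between about $10^4$ and $10^7$ brains in real time.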

Memory seems to be no more stringent a constraint than processing power. Moreover, since the maximum human sensory bandwidth is on the order of $10^8$ bits per second, simulating all sensory events incurs a negligible cost compared with simulating cortical activity. We can therefore use the processing power required to simulate the central nervous system as an estimate of the total computational cost of simulating a human mind.

If the environment is included in the simulation, this will require additional computing power, the amount depending on the scope and granularity of the simulation. Simulating the entire universe down to the quantum level is obviously infeasible, unless radically new physics is discovered. But a realistic simulation of human experience requires much less: only enough to ensure that simulated humans, interacting in normal human ways with their simulated environment, do not notice any irregularities. The microscopic structure of the Earth's interior can be safely omitted. Distant astronomical objects can have highly compressed representations: the resemblance needs to be exact only in the narrow range of properties that we can observe from our planet or from spacecraft within the solar system. On the surface of the Earth, macroscopic objects in inhabited areas may need to be simulated continuously, but microscopic phenomena can be filled in ad hoc, that is, as needed. What you see through an electron microscope needs to look unsuspicious, but you usually have no way of confirming its consistency with unobservable parts of the microscopic world. Exceptions arise when we deliberately design systems that harness unobserved microscopic phenomena operating according to known principles to produce results that we can independently verify. The paradigmatic example is the computer. The simulation may therefore need to include a continuous representation of computers down to the level of individual logic gates. This presents no problem, since our current computing power is negligible by posthuman standards.
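The "fill in as needed" strategy is essentially lazy evaluation with caching: fine-grained detail is computed only when something observes it, then stored so that later observations stay consistent with earlier ones. The toy sketch below illustrates the idea; the `LazyWorld` class, its seeding scheme, and the four random numbers standing in for "microscopic detail" are all hypothetical and not taken from the text.

```python
from __future__ import annotations

import random


class LazyWorld:
    """Toy world that renders microscopic detail only when someone looks.

    Detail for a region is generated on first observation and cached, so
    repeated observations of the same region agree with each other.
    """

    def __init__(self, seed: int) -> None:
        self.seed = seed
        self._micro: dict[tuple[int, int], tuple[float, ...]] = {}
        self.renders = 0  # number of regions actually computed in detail

    def observe_micro(self, x: int, y: int) -> tuple[float, ...]:
        """Return fine-grained detail for region (x, y), generating it if needed."""
        key = (x, y)
        if key not in self._micro:
            # Seed from (world seed, location) so the detail does not depend
            # on the order in which regions happen to be observed.
            rng = random.Random(f"{self.seed}:{x}:{y}")
            self._micro[key] = tuple(rng.random() for _ in range(4))
            self.renders += 1
        return self._micro[key]


world = LazyWorld(seed=42)
first = world.observe_micro(3, 7)
again = world.observe_micro(3, 7)  # served from the cache; nothing new rendered
```

Regions nobody examines are never computed at all, which is where the savings come from.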

Moreover, a posthuman simulator would have enough computing power to keep track of the detailed belief states in all human brains at all times. Thus, when it saw that a person was about to make an observation of the microscopic world, it could fill in sufficient detail in the simulation in the appropriate domain on an as-needed basis. Should any error occur, the director could easily edit the states of any brains that have become aware of the anomaly before it spoils the simulation. Alternatively, the director could rewind the simulation a few seconds and rerun it in a way that avoids the problem.
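The rewind option corresponds to ordinary checkpoint-and-restore. A minimal sketch follows; the `Simulation` class, its dictionary state, and its trivial counting dynamics are hypothetical placeholders chosen only to show the mechanism.

```python
from __future__ import annotations

import copy


class Simulation:
    """Toy simulation that keeps snapshots so the director can rewind it."""

    def __init__(self, state: dict[str, int]) -> None:
        self.t = 0
        self.state = state
        self._snapshots: list[tuple[int, dict[str, int]]] = []

    def checkpoint(self) -> None:
        # Deep-copy so that later steps cannot alter the saved snapshot.
        self._snapshots.append((self.t, copy.deepcopy(self.state)))

    def step(self) -> None:
        # Placeholder dynamics: every counter grows by one per tick.
        for key in self.state:
            self.state[key] += 1
        self.t += 1

    def rewind(self, to_time: int) -> None:
        """Restore the most recent snapshot taken at or before `to_time`."""
        for t, snap in reversed(self._snapshots):
            if t <= to_time:
                self.t, self.state = t, copy.deepcopy(snap)
                # Forget snapshots from the abandoned future.
                self._snapshots = [s for s in self._snapshots if s[0] <= t]
                return
        raise ValueError(f"no snapshot at or before t={to_time}")


sim = Simulation({"x": 0})
for _ in range(10):
    if sim.t % 5 == 0:
        sim.checkpoint()  # snapshots at t = 0 and t = 5
    sim.step()
sim.rewind(7)  # anomaly noticed around t = 7: go back to the t = 5 snapshot
```

After the rewind the run simply continues from the restored state, with no trace of the discarded ticks.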

It follows that the most expensive part of creating a simulation that is indistinguishable from physical reality for the human minds within it would be simulating organic brains down to the neuronal or sub-neuronal level. While it is not possible to give a very exact estimate of the cost of a realistic simulation of human history, we can use $10^{33}$ to $10^{36}$ operations as a rough estimate.

As we gain more experience with virtual reality, we will get a better grasp of the computational requirements for making such worlds appear realistic to their visitors. But even if our estimate is off by several orders of magnitude, this does not matter much for our argument. We noted that a rough estimate of the computing power of a planet-sized computer is $10^{42}$ operations per second, and that this takes into account only already known nanotechnological designs, which are probably far from optimal. A single such computer could simulate the entire mental history of humankind (call this an ancestor simulation) by using about one millionth of its processing power for one second. A posthuman civilization could eventually build an astronomical number of such computers. We can conclude that a posthuman civilization could run a tremendous number of ancestor simulations even if it devoted only a tiny fraction of its resources to them. This conclusion holds even if we allow a substantial margin of error in all our estimates.
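The "one millionth" figure is a single division using the text's own numbers. The pessimistic line and the per-year count below are illustrations added here, assuming one machine devoted entirely to ancestor simulations.

```python
# Figures quoted in the text.
PLANET_OPS_PER_SEC = 1e42            # planet-sized computer, operations per second
ANCESTOR_SIM_OPS = (1e33, 1e36)      # rough cost range of one ancestor simulation
SECONDS_PER_YEAR = 365 * 24 * 3600   # 31,536,000


def seconds_needed(sim_ops: float, ops_per_sec: float = PLANET_OPS_PER_SEC) -> float:
    """Wall-clock seconds one machine needs for a simulation costing `sim_ops`."""
    return sim_ops / ops_per_sec


upper = seconds_needed(ANCESTOR_SIM_OPS[1])              # about a millionth of a second
pessimistic = seconds_needed(ANCESTOR_SIM_OPS[1] * 1e3)  # if the estimate is 1,000x too low
sims_per_year = SECONDS_PER_YEAR / upper                 # if the machine did nothing else
```

At the upper cost estimate one such machine needs about $10^{-6}$ seconds per ancestor simulation, on the order of $3 \times 10^{13}$ simulations per year; even a thousandfold underestimate leaves it at about a millisecond each.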


4. The core of the simulation argument

The basic idea of this paper can be expressed roughly as follows: if there were a substantial chance that our civilization will ever reach the posthuman stage and run many ancestor simulations, then how could we be sure that we are not living in one such simulation?

We will develop this idea into a rigorous argument. Let us introduce the following notation:

- $f_P$: the fraction of all human-level technological civilizations that survive to reach a posthuman stage;

- $\bar{N}$: the average number of ancestor simulations run by a posthuman civilization;

- $\bar{H}$: the average number of individuals who have lived in a civilization before it reaches a posthuman stage.

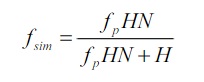

Then the actual fraction of all observers with human-type experiences who live in simulations is:

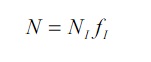

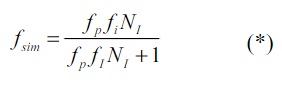

Let $f_I$ denote the fraction of posthuman civilizations that are interested in running ancestor simulations (or that contain at least some individuals who are interested in doing so and who have sufficient resources to run a significant number of them), and let $\bar{N}_I$ denote the average number of ancestor simulations run by such interested civilizations. Then we have:

$$\bar{N} = f_I \, \bar{N}_I$$

and therefore the fraction of all observers with human-type experiences who live in simulations is:

$$f_{sim} = \frac{f_P \, f_I \, \bar{N}_I}{f_P \, f_I \, \bar{N}_I + 1} \qquad (*)$$

Because of the colossal computing power of posthuman civilizations, $\bar{N}_I$ is extremely large, as we saw in the previous section. By inspecting formula (*) we can see that at least one of the following three propositions must be true:

- (1) $f_P \approx 0$

- (2) $f_I \approx 0$

- (3) $f_{sim} \approx 1$
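Formula (*) is easy to explore numerically. The sketch below checks that the unsimplified ratio of simulated observers to all observers agrees with (*), then evaluates (*) for a few illustrative parameter values. The value $\bar{N}_I = 10^6$ is an arbitrary stand-in for "extremely large", not a figure from the text.

```python
def f_sim_from_counts(f_p: float, n_bar: float, h_bar: float) -> float:
    """Simulated observers / all observers, before simplification:
    f_P * N * H / (f_P * N * H + H)."""
    simulated = f_p * n_bar * h_bar
    return simulated / (simulated + h_bar)


def f_sim(f_p: float, f_i: float, n_i: float) -> float:
    """Formula (*): f_sim = f_P f_I N_I / (f_P f_I N_I + 1)."""
    x = f_p * f_i * n_i
    return x / (x + 1)


N_I = 1e6  # hypothetical value; the text only says N_I is extremely large

for f_p, f_i in [(0.0, 0.5), (0.5, 0.0), (0.01, 0.01), (0.5, 0.5)]:
    print(f"f_P={f_p}, f_I={f_i}: f_sim = {f_sim(f_p, f_i, N_I):.4f}")
```

Unless $f_P$ or $f_I$ is close to zero, $f_{sim}$ ends up close to one: with $f_P = f_I = 0.01$ it is already about 0.99.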

5. The soft principle of equivalence

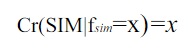

We can take a further step and conclude that, if (3) is true, one can be almost certain that one is in a simulation. More generally, if we know that the fraction $f_{sim}$ of all observers with human-type experiences who live in simulations equals x, and we have no information indicating that our own particular experiences are any more or less likely than other human experiences to be implemented in a machine rather than in vivo, then our credence that we are in a simulation should equal x:

$$\mathrm{Cr}(\mathrm{SIM} \mid f_{sim} = x) = x$$

This step is justified by a very weak principle of equivalence. Let us distinguish two cases. In the first, simpler case, all the minds in question are like your own in the sense that they are exactly qualitatively identical to yours: they have exactly the same information and the same experiences that you have. In the second case, the minds are alike only in the loose sense of being the sort of minds that are typical of human beings, but they are qualitatively distinct from one another and each has a different set of experiences. I maintain that even when the minds are qualitatively different, the simulation argument still works, provided that you have no information bearing on the question of which of the various minds are simulated and which are implemented biologically.

A more rigorous justification of the principle, which covers both of these cases as trivial special cases, has been given in the literature. Space does not permit a full account here, but we can bring out one of the underlying intuitions. Imagine that x% of the population has a certain genetic sequence S within the part of their DNA commonly designated "junk DNA". Suppose, further, that there are no manifestations of S (short of what would show up in a genetic test) and no correlation between having S and any observable characteristic. Then, quite clearly, before your DNA is sequenced, it is rational to assign a credence of x% to the hypothesis that you have S. And this is so quite irrespective of the fact that people who have S have minds and experiences that are qualitatively different from those of people who do not. (They are different simply because all people have different experiences, not because of any direct connection between S and the kind of experiences a person has.)

The same reasoning holds if S is not the property of having a certain genetic sequence but instead the property of being in a simulation, on the assumption that we have no information enabling us to predict any differences between the experiences of simulated minds and those of the original biological minds.

It should be stressed that the soft principle of equivalence prescribes equivalence only between hypotheses about which observer you are, when you have no information about which of these observers you are. It does not in general prescribe equivalence between hypotheses when you lack specific information about which of them is true. Unlike Laplace's principle of indifference and other stronger principles of equivalence, it is thus immune to Bertrand's paradox and similar difficulties that plague the unrestricted application of such principles.

Readers familiar with the Doomsday argument (DA) (J. Leslie, “Is the End of the World Nigh?” Philosophical Quarterly 40, 158: 65-72 (1990)) may worry that the principle of equivalence used here is the same assumption that gets the DA off the ground, and that the counterintuitiveness of some of the latter's implications casts doubt on the validity of the simulation argument. This worry is unfounded. The DA rests on the much stronger and more controversial premise that one should reason as if one were a random sample from the entire set of people who will ever have lived (past, present, and future), even though we know that we are living in the early twenty-first century rather than at some point in the distant future. The soft principle of equivalence, by contrast, applies only to cases in which we have no additional information about which group of people we belong to.

If betting odds provide some guide to rational belief, consider what would happen if everyone were to bet on whether they are in a simulation. If people use the soft principle of equivalence and bet that they are in a simulation, on the basis of the knowledge that most people are, then almost everyone will win their bets. If they bet that they are not in a simulation, almost everyone will lose. It seems more useful to follow the soft principle of equivalence. Further, one can imagine a sequence of possible situations in which an increasing fraction of all people live in simulations: 98%, 99%, 99.9%, 99.9999%, and so on. As one approaches the limiting case in which everybody is in a simulation (from which one can deduce with certainty that one is in a simulation oneself), it seems reasonable to require that one's credence in being in a simulation should approach the limiting case of complete certainty smoothly and continuously.
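The betting consideration can be made concrete with a simple count. The sketch assumes a hypothetical population in which a known share of observers is simulated, and every observer, lacking any distinguishing evidence, follows the same betting rule. The share of winners then equals the simulated share exactly, rising smoothly toward one through the sequence of cases above.

```python
def winning_share(n_simulated: int, n_biological: int, bet_simulated: bool) -> float:
    """Fraction of all observers who win when every observer places the same bet.

    Nobody knows which group they belong to, so everyone follows one rule.
    """
    total = n_simulated + n_biological
    winners = n_simulated if bet_simulated else n_biological
    return winners / total


TOTAL = 10_000_000  # hypothetical population size
for share in (0.98, 0.99, 0.999, 0.999999):
    n_sim = round(share * TOTAL)
    yes = winning_share(n_sim, TOTAL - n_sim, bet_simulated=True)
    no = winning_share(n_sim, TOTAL - n_sim, bet_simulated=False)
    print(f"{share:.6f} simulated: 'I am simulated' wins {yes:.6f}, 'I am not' wins {no:.6f}")
```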

6. Interpretation

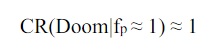

The possibility expressed by proposition (1) is fairly straightforward. If (1) is true, then humankind will almost certainly fail to reach a posthuman level, for virtually no species at our level of development becomes posthuman, and it is hard to see any justification for thinking that our own species will be especially privileged or protected from future disasters. Conditional on (1), therefore, we must assign a high credence to DOOM, the hypothesis that humankind will go extinct before reaching a posthuman level:

$$\mathrm{Cr}(\mathrm{DOOM} \mid f_P = x) \approx 1 - x$$

One can imagine hypothetical situations in which we have evidence that overrides our knowledge of $f_P$. For example, if we discovered that a giant asteroid was about to hit us, we might conclude that we had been exceptionally unlucky. We could then assign a higher credence to DOOM than our expectation of the fraction of human-level civilizations that fail to reach posthumanity. In the actual case, however, we seem to lack any reason to think that we are special in this respect, for better or worse.

Proposition (1) does not by itself imply that we are likely to go extinct. It suggests only that we are unlikely to reach a posthuman stage. This could mean, for example, that we remain at or somewhat above our current level of development for a long time before going extinct. Another way for (1) to be true is if technological civilization is likely to collapse, leaving primitive human societies on Earth.

There are many ways in which humanity could become extinct before reaching the posthuman stage. The most natural interpretation of (1) is that we will go extinct as a result of developing some powerful but dangerous technology. One candidate is molecular nanotechnology, whose mature stage would allow the construction of self-replicating nanorobots able to feed on dirt and organic matter, a kind of mechanical bacteria. Such nanorobots, if designed for malicious purposes, could cause the extinction of all life on the planet.

The second alternative in the conclusion of the simulation argument is that the fraction of posthuman civilizations interested in running ancestor simulations is negligibly small. For (2) to be true, there must be a strong convergence among the developmental paths of advanced civilizations. If the number of ancestor simulations created by the interested civilizations is extremely large, the rarity of such civilizations must be correspondingly extreme. Virtually no posthuman civilization decides to use its resources to run a large number of ancestor simulations. Moreover, virtually all posthuman civilizations lack individuals who have the resources and the interest to run ancestor simulations, or else they have reliably enforced laws that prevent such individuals from acting on their desires.
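The inverse relation between the number of simulations and the required rarity of interested civilizations follows directly from formula (*). The sketch below solves (*) for the largest $f_I$ that keeps simulated observers at or below a chosen share of all observers; the 50% target and the value $f_P = 0.1$ are illustrative assumptions, not figures from the text.

```python
def max_f_i(f_p: float, n_i: float, target_f_sim: float = 0.5) -> float:
    """Largest f_I that keeps f_sim at or below `target_f_sim`, using formula (*).

    Solving x / (x + 1) <= s for x = f_P * f_I * N_I gives
    f_I <= s / ((1 - s) * f_P * N_I); the result is capped at 1.
    """
    if not 0.0 <= target_f_sim < 1.0:
        raise ValueError("target_f_sim must lie in [0, 1)")
    if f_p * n_i == 0:
        return 1.0  # no simulations are run at all, whatever f_I is
    return min(1.0, target_f_sim / ((1.0 - target_f_sim) * f_p * n_i))


# Hypothetical inputs: f_P = 0.1 and a range of values for N_I.
for n_i in (1e3, 1e6, 1e9):
    print(f"N_I = {n_i:.0e}: f_I must be at most {max_f_i(0.1, n_i):.0e}")
```

Each thousandfold increase in $\bar{N}_I$ forces the permitted $f_I$ down by the same factor.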

What force could bring about such convergence? One might argue that advanced civilizations all develop along a trajectory that leads to the recognition of an ethical prohibition against running ancestor simulations because of the suffering that the inhabitants of the simulation experience. However, from our present point of view, it is not obvious that creating a human race is immoral. On the contrary, we tend to regard the existence of our race as having great ethical value. Moreover, convergence on the view that running ancestor simulations is immoral is not enough: it must be combined with convergence on a civilization-wide social structure that allows activities considered immoral to be effectively prohibited.

Another possible point of convergence is that almost all individual posthumans in virtually all posthuman civilizations develop in a direction in which they lose the desire to run ancestor simulations. This would require significant changes in the motivations that drove their human predecessors, for there are certainly many people who would like to run ancestor simulations if they had the chance. But perhaps many of our human desires will seem foolish to anyone who becomes posthuman. Maybe the scientific value of ancestor simulations to a posthuman civilization is negligible (which is not too implausible given its immense intellectual superiority), and maybe posthumans regard recreational activities as a very inefficient way of obtaining pleasure, which can be had much more cheaply by directly stimulating the brain's reward centers. One conclusion that follows from (2) is that posthuman societies will be very different from human societies: they will not contain relatively wealthy independent agents who have the full range of human-like desires and are free to act on them.

The possibility expressed by alternative (3) is the most intriguing conceptually. If we live in a simulation, then the cosmos we observe is only a tiny piece of the totality of physical existence. The physics of the universe in which the computer is located may or may not resemble the physics of the world we observe. While the world we observe is in some sense "real", it is not located at the fundamental level of reality. It may be possible for simulated civilizations to become posthuman. They could then in turn run ancestor simulations on powerful computers that they have built in their simulated universe. Such computers would be "virtual machines", a very familiar concept in computer science. (Web applets written in Java, for instance, run on a virtual machine, a simulated computer, inside your laptop.)

Virtual machines can be nested: it is possible to simulate a machine that simulates another machine, and so on, for arbitrarily many levels. If we do go on to create our own ancestor simulations, this would be strong evidence against (1) and (2), and we would therefore have to conclude that we are living in a simulation. Moreover, we would have to suspect that the posthumans running our simulation are themselves simulated beings, and that their creators, in turn, may also be simulated.

Reality may therefore contain many levels. Even if the hierarchy must end at some level (the metaphysical status of this claim is quite unclear), there may be room for a large number of levels of reality, and that number could increase over time. (One consideration that counts against such a multi-level hypothesis is that the computational cost for the basement-level simulators would be very great. Simulating even a single posthuman civilization might be prohibitively expensive. If so, we should expect our simulation to be switched off when we approach the posthuman level.)
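The cost concern in the parenthetical can be illustrated with a toy model in which every layer of simulation multiplies the work that the basement level must ultimately pay for. The constant per-layer `overhead` factor is an assumption made here purely for illustration; the text says nothing about its size, and real costs need not compound this simply.

```python
def basement_cost(ops: float, layers: int, overhead: float) -> float:
    """Basement-level operations needed to perform `ops` operations inside
    `layers` nested simulated universes (0 = directly on basement hardware).

    Toy assumption: each layer of virtualization multiplies cost by `overhead`.
    """
    if layers < 0 or overhead < 1:
        raise ValueError("need layers >= 0 and overhead >= 1")
    return ops * overhead ** layers


# Hypothetical: a 1e36-operation ancestor simulation with a tenfold overhead per layer.
for layers in range(4):
    print(f"{layers} layers: {basement_cost(1e36, layers, overhead=10):.0e} basement ops")
```

Under this assumption the basement pays exponentially more for each level of nesting, which is why deep hierarchies might be cut short.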

Although all the elements of this picture are naturalistic, even physical, it is possible to draw some loose analogies with religious conceptions of the world. In some ways, the posthumans running a simulation are like gods in relation to the people inhabiting it: the posthumans created the world we see; they are of superior intelligence; they are "omnipotent" in the sense that they can interfere in our world even in ways that violate its physical laws; and they are "omniscient" in the sense that they can monitor everything that happens. However, all the demigods except those at the fundamental level of reality are subject to sanctions by the more powerful gods living at deeper levels of reality.

Further reflection on these themes could culminate in a naturalistic theogony that would study the structure of this hierarchy and the constraints imposed on its inhabitants by the possibility that their actions at their own level may affect how the inhabitants of deeper levels treat them. For example, if nobody can be sure that they are at the basement level, then everybody must consider the possibility that their actions will be rewarded or punished by the simulators, perhaps on the basis of moral criteria. An afterlife would be a real possibility. Because of this fundamental uncertainty, even a civilization at the basement level would have a reason to behave ethically. The fact that it has such a reason would of course give everyone else a further reason to behave morally, and so on, in a truly virtuous circle. One might thus get something like a universal ethical imperative, which it would be in everybody's self-interest to obey, arising as if from nowhere.

In addition to ancestor simulations, one can imagine more selective simulations that include only a small group of people or a single individual. The rest of humanity would then be "zombies" or "shadow people": people simulated only at a level sufficient for the fully simulated people not to notice anything suspicious.

It is not clear how much cheaper shadow people would be to simulate than real people. It is not even obvious that an entity could behave indistinguishably from a real person and yet lack conscious experience. Even if such selective simulations exist, you should not think that you are in one unless you think they are much more numerous than complete simulations. There would have to be roughly 100 billion times as many self-simulations (simulations of the life of only one mind) as there are complete ancestor simulations for most simulated persons to be in self-simulations.
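The 100-billion figure is a break-even ratio that follows from simple counting, if each complete ancestor simulation is assumed to contain on the order of 100 billion fully simulated people while each self-simulation contains exactly one. That per-simulation headcount is an assumption read into the text here, not something it states.

```python
PEOPLE_PER_ANCESTOR_SIM = 1e11  # assumption: ~100 billion fully simulated people
PEOPLE_PER_SELF_SIM = 1         # a self-simulation fully simulates one person


def share_in_self_sims(n_self_sims: float, n_ancestor_sims: float) -> float:
    """Fraction of fully simulated people who live in self-simulations."""
    in_self = n_self_sims * PEOPLE_PER_SELF_SIM
    in_full = n_ancestor_sims * PEOPLE_PER_ANCESTOR_SIM
    return in_self / (in_self + in_full)


for ratio in (1e9, 1e11, 1e13):
    print(f"{ratio:.0e} self-sims per ancestor sim: {share_in_self_sims(ratio, 1):.3f}")
```

At exactly $10^{11}$ self-simulations per ancestor simulation the two kinds hold equal numbers of fully simulated people; only beyond that ratio are most simulated persons in self-simulations.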

There is also the possibility that simulators skip over certain parts of the mental lives of simulated beings and give them false memories of the sort of experiences they would have had during the skipped periods. If so, one can imagine the following (far-fetched) solution to the problem of evil: that there is in reality no suffering in the world and that all memories of suffering are illusions. Of course, this hypothesis can be seriously entertained only at those moments when you are not yourself suffering.

Supposing we live in a simulation, what are the implications for us humans? The foregoing remarks notwithstanding, the implications are not particularly drastic. Our best guide to how our posthuman creators have chosen to arrange our world is the standard empirical study of the universe we see. Revisions to most parts of our belief system are likely to be small and subtle, in proportion to our lack of confidence in our ability to understand the ways of posthumans.

Properly understood, therefore, the truth of (3) gives us no cause to go "crazy", to abandon our affairs, or to stop making plans and predictions for tomorrow. The main empirical importance of (3) at present seems to lie in its role in the three-way conclusion stated above.

In some respects we should hope that (3) is true, since that would reduce the probability of (1); however, if computational constraints make it likely that simulators would switch off a simulation before it reaches a posthuman level, then our best hope is that (2) is true.

If we learn more about posthuman motivations and resource constraints, perhaps as a result of our own development towards posthumanity, then the hypothesis that we are simulated will acquire a much richer set of empirical implications.

7. Conclusion

A technologically mature posthuman civilization would have enormous computing power. On this basis, the simulation argument shows that at least one of the following propositions is true:

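- (1) the fraction of human-level civilizations that reach a posthuman stage is very close to zero;

- (2) the fraction of posthuman civilizations that are interested in running ancestor simulations is very close to zero;

- (3) the fraction of all people with our kind of experiences who are living in a simulation is very close to one.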

If (1) is true, then we will almost certainly die before we reach the posthuman level.

If (2) is true, then there must be a strong convergence among the developmental paths of advanced civilizations, such that virtually none of them contains relatively wealthy individuals who want to run ancestor simulations and are free to do so.

If (3) is true, then we are almost certainly living in a simulation. In the dark forest of our current ignorance, it seems sensible to divide our credence roughly evenly among (1), (2), and (3).

Unless we already live in a simulation, our descendants will almost certainly never run ancestor simulations.

Acknowledgments

I am grateful to many people for their comments, especially to Amara Angelica, Robert Bradbury, Milan Cirkovic, Robin Hanson, Hal Finney, Robert A. Freitas Jr., John Leslie, Mitch Porter, Keith DeRose, Mike Treder, Mark Walker, Eliezer Yudkowsky, and anonymous referees.

Translation: Alexey Turchin



More works by Alexey Turchin:

- A message to an unfriendly artificial intelligence

- Glitch in the Matrix: Urban Legend or Evidence of the Simulation?

- Simulation Typology and Termination Risks

About Ontol

Ontol is a map that allows you to choose the most effective route for the formation of your worldview.


Ontol is built on a superposition of subjective assessments: readers' reflections on the texts they have read (ideally, those of millions or billions of people). Each participant decides for themselves which 10 or 100 of the things they have read or watched over the past 10 years, or over their whole life, matter most for significant aspects of life (thinking, health, family, money, trust, etc.)... Only what can be shared in one click is included (texts and videos, not books, conversations, or events).

The ideal end result of Ontol is access to meaningful texts and videos that will change the reader's life ("Oh, how I wish I had read this text earlier! My life would most likely have gone differently"), 10x-100x faster than existing alternatives such as Wikipedia, Quora, chats, channels, LJ, and search engines. Free for everyone on the planet, in one click.

- Telegram channel: t.me/ontol

- Web service prototype: beta.ontol.org

- On Habr:

- DeepMind Ontol: The World's Most Helpful AI Resources

- Ontol (= the most useful) about remote work [selection of 100+ articles]

- Ontol: a selection of articles on "burnout" [100+]

- Ontol on startups from Y Combinator: www.ycombinator.com/library