Today, motion capture (mocap) technology is most often used in films and video games. But it has other uses too: motion capture helps craftsmen compete for the title of best in their trade, makes it possible to diagnose gait problems and to preserve the dances of small ethnic groups for future generations, and in the future it may allow wars to be waged from a cozy bunker.

Horses, Model Proletarians and Nuclear Explosions: What were the motion capture creators trying to photograph?

In 1878, Leland Stanford, former governor of California, future founder of Stanford University and a keen horseman, made a bet with his friends. He claimed that at a certain moment a galloping horse lifts all four legs off the ground. His friends disagreed, and there was no way to prove or refute the claim by eye. Stanford then brought in the renowned photographer and student of animal locomotion Eadweard Muybridge to settle the dispute. On Stanford's farm in Palo Alto, a special "photodrome" was built: a track with a white wall along one side and cameras aimed at it from the opposite side. Tripwires were stretched across the track and tied to the camera shutters. When the horse broke into a gallop, the rider steered it into the photodrome; its legs touched the wires, the shutters fired one after another, and a series of pictures was produced.

This is how chronophotography was born: the first motion capture technology, which helped settle a $25,000 bet.

Stanford was proven right: at a gallop, a horse really does lift all four legs off the ground, but at that moment they are tucked under its body rather than stretched forward and back, as artists of different eras had often depicted them. This small discovery caused a furor among art critics and artists at the turn of the 19th and 20th centuries. Incidentally, only black horses were used for the shoot, because their movements stood out more clearly against the white background of the photodrome. Source: Eadweard Muybridge / Wikimedia Commons

But motion capture was interesting for more than winning bets, and many more devices based on chronophotography followed. For example, in 1891 the photographer Albert Londe invented a 12-lens chronophotographic camera to capture the facial expressions of the neurologist Jean-Martin Charcot's patients. From then on, the main and most difficult task was to reproduce the captured movements.

The first to take an interest in this were animators. The first decade of the 20th century saw the start of the cartoon era, with both puppet and hand-drawn films, and artists strove to make their characters move as believably as possible. The American inventor Max Fleischer achieved this: together with his brother Dave, he invented rotoscoping in 1914. First, a real actor was filmed. The footage was then enlarged and projected frame by frame onto a glass panel, and the artist traced each frame onto tracing paper. The result was a new film with a realistically moving character.

Video about rotoscoping, including the Fleischer cartoon The Tantalizing Fly. Source: Mohamed El Amine CHRAIBI / YouTube

In those years, realistic reproduction of movement through filming also interested scientists in Soviet Russia who were keen on the scientific organization of labor. The logic of the Soviet leadership and scientists was simple: study the movements of the best workers thoroughly, and you can teach them to everyone else. This work was carried out at the Central Institute of Labor (TsIT) in Moscow, where the research was led by Nikolai Bernstein, a founder of biomechanics, the science of human movement. In his laboratory, Bernstein conducted cyclogrammetric studies: the subject wore a special suit fitted with dozens of small lamps that served as markers. High-speed filming (100-200 frames per second) then produced a cyclogram. The error in measuring the positions of a walking or running person was only 0.5 mm.
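Because a cyclogram records where each lamp was in every frame, a marker's speed follows directly from the frame rate. The sketch below assumes 2D marker coordinates in millimetres have already been read off the film; `marker_speeds` is a hypothetical helper for illustration, not a reconstruction of Bernstein's actual procedure.

```python
import math

def marker_speeds(positions_mm, fps):
    """Speed of one marker (mm/s) between consecutive frames.

    positions_mm: (x, y) coordinates of a lamp in successive frames, in mm
    (assumed 2D, as if measured frame by frame from a single camera's film).
    fps: frame rate of the high-speed recording, e.g. 100-200.
    """
    speeds = []
    for (x0, y0), (x1, y1) in zip(positions_mm, positions_mm[1:]):
        # Distance travelled during one frame times frames per second = mm/s.
        speeds.append(math.hypot(x1 - x0, y1 - y0) * fps)
    return speeds

# A lamp moving 5 mm per frame, filmed at 100 frames per second.
print(marker_speeds([(0, 0), (3, 4), (6, 8)], fps=100))  # [500.0, 500.0]
```

Multiplying by `fps` rather than dividing by a rounded frame interval keeps the arithmetic exact for whole-number inputs.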

Over the years, Bernstein studied the movements of athletes, workers and musicians, which helped improve competitive performance and develop teaching methods in many fields. Although he fell into disfavor during the campaign against "rootless cosmopolitans", Bernstein still managed to give recommendations on how cosmonauts could adapt to weightlessness. Source: Thomas Oger / YouTube

Notably, Bernstein anticipated the advent of optical and acoustic motion capture, which still relies on sensors attached to the human body today.

This idea was developed by the American Lee Harrison III, who experimented with analog circuits and cathode-ray tubes. In 1959, he designed a suit with built-in potentiometers (variable resistors) and was able to record an actor's movements and animate them in real time on a cathode-ray tube. The setup was primitive (on screen, the animated actor looked like a column of light), but it was the first real-time motion capture.
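The principle of a potentiometer suit is easy to sketch: each joint's potentiometer gives a voltage that tracks the joint angle, and the angles determine where the limb segments are. The example below assumes a linear potentiometer whose 0-5 V output spans 270 degrees and a planar two-segment arm; the voltage range, segment lengths and function names are hypothetical, not details of Harrison's actual setup.

```python
import math

# Hypothetical calibration: a linear potentiometer whose 0-5 V output
# spans 270 degrees of joint rotation (illustrative values only).
def voltage_to_angle(v, v_min=0.0, v_max=5.0, angle_range_deg=270.0):
    """Map a potentiometer voltage linearly onto a joint angle in degrees."""
    return (v - v_min) / (v_max - v_min) * angle_range_deg

def arm_pose(shoulder_deg, elbow_deg, upper_m=0.30, fore_m=0.25):
    """Planar forward kinematics for a two-segment arm.

    The shoulder sits at the origin; the elbow angle is measured relative to
    the upper arm. Returns the (x, y) positions of the elbow and wrist, which
    is all a simple display needs to draw the limb as two lines.
    """
    a1 = math.radians(shoulder_deg)
    a2 = a1 + math.radians(elbow_deg)
    elbow = (upper_m * math.cos(a1), upper_m * math.sin(a1))
    wrist = (elbow[0] + fore_m * math.cos(a2), elbow[1] + fore_m * math.sin(a2))
    return elbow, wrist

# One "frame": read two potentiometers, convert to angles, compute the pose.
shoulder = voltage_to_angle(1.0)   # ~54 degrees
elbow = voltage_to_angle(0.5)      # ~27 degrees
print(arm_pose(shoulder, elbow))
```

Repeating this read-convert-draw cycle continuously is what makes such a setup "real time": no film has to be developed or traced.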

In parallel, the photo-optical approach developed, in which motion is captured by photographing an object at high speed or from several angles. Harold Edgerton contributed greatly to it: in the 1940s he created the Rapatronic camera, which can record a still image with an exposure time of just 10 nanoseconds. High-speed photography allowed Edgerton to capture extremely fast events, from the splash of a falling drop of water to a nuclear explosion.

The moment of the explosion of a nuclear charge a fraction of a second after detonation, captured by a rapatronic camera. Source: Federal Government of the United States / Wikimedia Commons

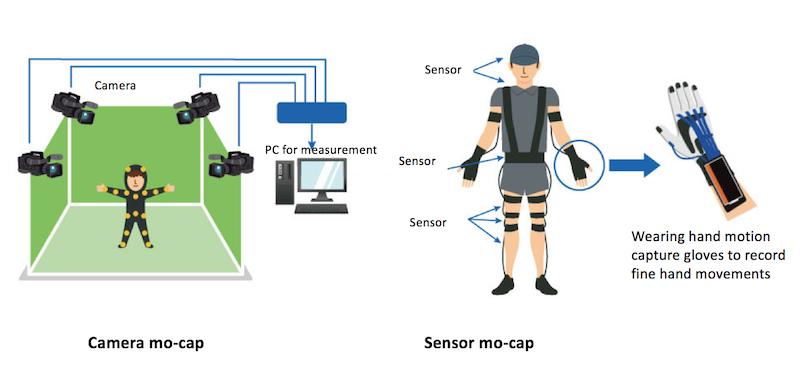

By the end of the 20th century, two main motion capture technologies had taken shape, conventionally called marker-based and markerless. In the first, motion is captured using markers or sensors placed on the body. These can be infrared, magnetic or gyroscopic. Infrared markers either reflect light (passive) or emit it (active). Magnetic sensors disturb a magnetic field, and a receiver uses this to determine their position in space. Gyroscopic sensors report changes in the orientation of body parts.
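Of the sensor types listed, the gyroscopic one is the simplest to illustrate: a gyroscope reports angular velocity, and integrating it over time gives the change in orientation. This is a minimal single-axis sketch, assuming samples in degrees per second at a fixed interval; real suits work in 3D, fuse several sensors and correct for drift, none of which is shown here.

```python
def integrate_gyro(rates_dps, dt_s):
    """Accumulate single-axis gyroscope readings into an orientation angle.

    rates_dps: angular velocity samples in degrees per second.
    dt_s: time between samples in seconds (assumed constant).
    Returns the angle in degrees after each sample, starting from 0,
    using the trapezoidal rule between neighbouring samples.
    """
    angles = [0.0]
    for prev, cur in zip(rates_dps, rates_dps[1:]):
        angles.append(angles[-1] + 0.5 * (prev + cur) * dt_s)
    return angles

# A forearm rotating at a steady 90 deg/s, sampled at 100 Hz for 1 second.
angles = integrate_gyro([90.0] * 101, 0.01)
print(round(angles[-1], 6))
```

Because every step adds a little measurement error, the integrated angle slowly drifts, which is why gyroscopes are usually combined with other sensors.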

Markerless systems rely on optical capture: many cameras are mounted around the room and shoot from different angles, and the resulting images are combined into a 3D model. Another type is the exoskeleton, which is strapped to the body and captures movements to build an animation model.
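Combining views from several cameras into 3D rests on triangulation: once two calibrated cameras see the same point, each gives a ray toward it, and the point lies where the rays (nearly) meet. The sketch below uses the simple midpoint method for two rays and assumes camera centres and ray directions are already known from calibration; production systems typically solve a least-squares problem over many cameras instead.

```python
def triangulate_midpoint(c1, d1, c2, d2):
    """Estimate the 3D point seen along two camera rays (midpoint method).

    c1, c2: camera centres (x, y, z); d1, d2: ray directions from each camera
    toward the observed point, assumed known from camera calibration.
    Because of noise the rays rarely intersect exactly, so we return the
    midpoint of the shortest segment joining them.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    w0 = tuple(p - q for p, q in zip(c1, c2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    s = (b * e - c * d) / denom   # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom   # parameter of the closest point on ray 2
    p1 = tuple(p + s * v for p, v in zip(c1, d1))
    p2 = tuple(p + t * v for p, v in zip(c2, d2))
    return tuple((x + y) / 2 for x, y in zip(p1, p2))

# Two cameras 2 m apart whose rays toward the point pass 1 m apart in height.
print(triangulate_midpoint((0, 0, 0), (1, 1, 0), (2, 0, 1), (-1, 1, 0)))  # (1.0, 1.0, 0.5)
```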

If the captured character later needs to be placed in a virtual environment, the actor is filmed in a green room (chroma key). Green contrasts strongly with human skin tones, so the computer can reliably "cut out" green pixels from each frame. Source: Toshiba
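The "cutting out" step can be sketched as a per-pixel test: a pixel counts as backdrop when its green channel clearly dominates, and such pixels are replaced by the virtual scene. The thresholds and function names below are illustrative assumptions, not the settings of any particular keying tool.

```python
def chroma_key_mask(pixels, min_green=100, margin=40):
    """Flag green-screen pixels in a frame.

    pixels: list of (r, g, b) tuples, channels 0-255.
    A pixel is treated as backdrop when green is bright (>= min_green) and
    beats both red and blue by at least `margin`. The thresholds are
    illustrative and would be tuned to the lighting of a real shoot.
    """
    return [g >= min_green and g - r >= margin and g - b >= margin
            for r, g, b in pixels]

def composite(frame, virtual_scene, mask):
    """Swap keyed pixels for the virtual scene; keep the actor's pixels."""
    return [bg if keyed else fg
            for fg, bg, keyed in zip(frame, virtual_scene, mask)]

frame = [(30, 200, 40), (220, 170, 150), (40, 30, 25)]   # green, skin, dark hair
scene = [(0, 0, 255)] * 3                                # a plain blue "virtual set"
mask = chroma_key_mask(frame)
print(mask)                          # [True, False, False]
print(composite(frame, scene, mask))
```

Skin tones have more red than green, so they fail the dominance test and survive the cut.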

Motion capture is still mainly used in films and video games. However, every year it is increasingly used outside the entertainment industry: in manufacturing, medicine, cultural heritage preservation, sports and the military.

Repeat after me: how does Toshiba use motion capture to teach filing?

They say you can watch fire, water and other people working forever. And sometimes watching people work is actually useful. We at Toshiba realized this long ago, which is why our Corporate Manufacturing Engineering Center employs a motion capture specialist, Hiroaki Nakamura. Recently he has been keenly following the participants of the National Championship of Professionals, a special competition for craftsmen in fields ranging from baking to welding.

In 2018, Haruki Okabe, a young worker at one of Toshiba's plants, decided to enter this competition in the small-device assembly category. During assembly, contestants use a good old file to finish some of the parts. First-class technicians are believed to achieve a machining accuracy of 0.001 mm or better; otherwise the device will not work. This was exactly where our contestant fell short, and it cost him the win.

Toshiba assigned Tatsuo Matsui, a 66-year-old instructor who had worked with a file at the company's plants for more than 50 years, to mentor the young specialist. However, not every bearer of unique skills is a born teacher able to pass them on. Matsui could tell that the problem lay in how the contestant stood at the workbench, but things went no further than that. So we dressed both of them in motion capture suits. These are "sewn" on the marker principle, that is, sensors on the suit record the movements. In this case the sensors are accelerometers, much like those in smartphones, which is much cheaper than a multi-camera setup.

When we compared their data, we noticed one significant difference in how they balance on their feet.

The vertical axis is the ground reaction force [N], and the horizontal axis is time [s]. Because the young worker's center of gravity rests more on his front leg than the experienced worker's does, there is a large gap between his blue and green curves. (Okabe, the young worker, is left-handed, and Matsui, the teacher, is right-handed, so the lines are swapped in the diagrams.) Source: Toshiba
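Given two force curves like those in the figure (one per leg, sampled at the same instants), the difference in balance can be put into numbers. This is a minimal sketch with made-up samples, not Toshiba's data or analysis method: it computes the average share of total force carried by the front leg and the average gap between the curves.

```python
def front_leg_share(front_n, rear_n):
    """Average fraction of the total ground reaction force on the front leg.

    front_n, rear_n: force samples in newtons taken at the same instants for
    the front and rear leg. 0.5 means the weight is split evenly.
    """
    shares = [f / (f + r) for f, r in zip(front_n, rear_n) if f + r > 0]
    return sum(shares) / len(shares)

def mean_gap(front_n, rear_n):
    """Average absolute difference between the two force curves, in newtons."""
    return sum(abs(f - r) for f, r in zip(front_n, rear_n)) / len(front_n)

# Made-up samples for illustration only, not Toshiba's measurements.
novice_front, novice_rear = [500, 520, 510], [200, 180, 190]
expert_front, expert_rear = [360, 350, 355], [340, 350, 345]
print(mean_gap(novice_front, novice_rear), mean_gap(expert_front, expert_rear))
print(round(front_leg_share(novice_front, novice_rear), 2),
      round(front_leg_share(expert_front, expert_rear), 2))
```

A large gap and a front-leg share well above 0.5 correspond to the "center of gravity shifted forward" pattern described in the text.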

It turned out that the young worker's center of gravity was shifted far forward, unlike the experienced specialist's. Because of this, the contestant tired quickly when filing, and that led to defects. Once he understood his mistake, Okabe was able to improve his filing, and he took bronze at the National Championship of Professionals. Perhaps our descendants will also learn from the recordings of Matsui's work. And another disappearing art can be preserved for them in the same way: dance.

Save the dance: how does motion capture preserve cultural heritage?

Everyone has probably heard of the UNESCO World Heritage List, which includes more than 1,000 natural and man-made sites that humanity seeks to preserve and pass on to future generations. In the early 2000s, many began to think about how to preserve what was neither built by human hands nor created by nature: songs, ceremonies, theatrical performances, crafts. This gave rise to the concept of intangible cultural heritage (ICH), which has been collected and safeguarded since 2003. Motion capture helps preserve one of the main forms of ICH: dance.

Several projects are digitizing the dances of peoples around the world (WhoLoDancE, i-Treasures, AniAge and others). Dance recordings most often use active or passive markers placed on the dancer's body.

Motion capture systems with active markers use LEDs that emit their own light. For example, the PhaseSpace Impulse X2 system consists of eight cameras that track movement using modulated LEDs. The dancer puts on a suit with 38 active LED markers and starts dancing.
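One reason to modulate the LEDs is that each marker can then identify itself: if every LED blinks its own on/off pattern across camera frames, the system can tell which body part a bright dot belongs to. The codes, marker names and matching rule below are invented for illustration and are not PhaseSpace's actual encoding; the sketch also assumes the observed pattern is already aligned with the start of the code.

```python
# Hypothetical blink codes: each active LED repeats its own on/off pattern
# over six camera frames, so the system can tell markers apart.
CODEBOOK = {
    "left_wrist":  (1, 0, 1, 1, 0, 0),
    "right_wrist": (1, 1, 0, 0, 1, 0),
    "head":        (0, 1, 1, 0, 1, 1),
}

def identify_marker(observed, codebook=CODEBOOK):
    """Return the marker whose blink code is closest to the observed pattern.

    observed: tuple of 0/1 values (LED seen off/on in consecutive frames).
    Closeness is the Hamming distance (number of differing frames), so a
    single misread frame still maps to the right marker with these codes.
    """
    def hamming(a, b):
        return sum(x != y for x, y in zip(a, b))
    return min(codebook, key=lambda name: hamming(codebook[name], observed))

print(identify_marker((1, 0, 1, 1, 0, 1)))  # one frame misread
```

Any two codes here differ in at least three frames, which is what allows one misread frame to be tolerated.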

The WhoLoDancE project experimented with using Microsoft HoloLens as a visualization tool. Streaming data wirelessly from Autodesk MotionBuilder to the headset lets dancers and choreographers see holograms in real time. Source: Jasper Brekelmans / YouTube

In passive capture systems, the markers emit no signal; they only reflect it, thanks to special materials from which the suit is made. This gives the dancer more freedom: sharp movements and acrobatic elements are possible without any loss of tracking accuracy or speed. Researchers now hope to combine marker-based motion capture with 3D capture, since the dancer's facial expressions and costume are also part of the dance.

Similar motion capture technologies are used for a much simpler form of physical activity than dance - for gait analysis.

Learn from gait: how does gait study help doctors?

Gait can tell a lot about a person's health. Timothy Niiler of Pennsylvania State University knows this well: using motion capture, he is assembling the world's largest collection of human gaits. He invites people aged 18 to 65 to his laboratory to walk in front of 12 high-speed cameras, after attaching about 40 reflective markers to each participant's body. The result is a gait database that doctors then use. Above all, knowing what normal gait looks like provides a baseline for identifying almost any orthopedic problem in adults. For example, to measure the effectiveness of a hip or knee replacement, doctors "record" the patient's gait and compare it with the average gait parameters from the database.
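"Comparing with the average" is often expressed as a z-score: how many standard deviations a patient's value lies from the reference mean. The sketch below is one common way to do this, not necessarily how Niiler's database is used; the parameter names, numbers and the 2-standard-deviation threshold are hypothetical.

```python
import statistics

def gait_z_scores(patient, reference):
    """Express each of a patient's gait parameters as a z-score.

    patient: parameter name -> measured value.
    reference: parameter name -> values measured in healthy volunteers.
    A z-score says how many standard deviations the patient lies from the
    reference mean; the sign shows the direction of the deviation.
    """
    return {
        name: (value - statistics.mean(reference[name])) / statistics.stdev(reference[name])
        for name, value in patient.items()
    }

def flag_unusual(z_scores, threshold=2.0):
    """Names of parameters deviating from the norm by more than `threshold` SDs."""
    return sorted(name for name, z in z_scores.items() if abs(z) > threshold)

# Hypothetical parameter names and numbers, for illustration only.
reference = {"cadence_steps_per_min": [100, 110, 120],
             "step_length_m": [0.60, 0.70, 0.80]}
patient = {"cadence_steps_per_min": 80, "step_length_m": 0.65}
z = gait_z_scores(patient, reference)
print(z, flag_unusual(z))
```

A real reference database would hold far more than three people per parameter, and would usually be stratified by age, sex and walking speed.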

Figure: gait analysis. Source: Motion Analysis

Motion analysis systems such as GaitTrack are already used directly in medical centers. With them, doctors calculate and summarize the basic biomechanical parameters of walking or running, diagnose joint diseases at an early stage and assess the risk of injury. Coaches and athletes use motion capture in a similar way.

Faster, Higher, Better: How did motion capture help basketball players?

Motion capture is widely used in sports: analyzing players' actions helps identify their mistakes or test different equipment. Keith Pamment is head coach of a wheelchair basketball team. He is also an engineer and has long wondered how motion capture could improve a team's performance. In particular, the Perception Neuron motion capture system helped find the most effective wheelchairs for players with disabilities. Wearing mocap suits, the athletes sprinted, maneuvered, dribbled and played one-on-one, switching between six different types of chair, while the coach studied their animated movements in real time using 16 cameras.

Animated athletes' avatars were integrated with 3D models of wheelchairs. Source: Rockets Science CIC / YouTube

After a single day of studying the players in training, Keith drew up technical criteria for his team's sports chairs based on each athlete's skill, strengths, weaknesses, movement and playing technique. The coach is now preparing a full course for colleagues who work with similar athletes.

For now, then, motion capture is used only in "niche" areas of human activity, because the technology is relatively young and therefore still technically limited. But as robotics, machine learning and augmented and virtual reality develop, its range of applications will expand.

What's next: robotic avatars, teleportation and virtual reality

Robots are becoming ever more agile, fast and clever, which means people will increasingly send them where they themselves do not want to go. However, remote-control interfaces for such machines are still mostly joysticks or exoskeletons. These are not always effective: their main drawback is that they hinder or complicate the movements of the operator who controls the robot. Optical motion capture can solve this problem.

In 2018, a group of scientists from the UK and Italy used motion capture to make a robot called Centauro follow the movements of a slightly built young woman. For this they used the inexpensive ASUS Xtion PRO motion sensor, which combines infrared sensors with an RGB color camera; it handled the motion capture. The data were processed by OpenPose, a machine-learning-based algorithm that detects human body movements in real time (8-10 Hz) from 2D images from an RGB camera. The three-dimensional coordinates of the person's joints and limbs obtained from the system were filtered and then used to control the Centauro robot.
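The step from 2D keypoints to 3D joint coordinates can be sketched with a depth camera: if the depth image is aligned with the RGB image in which OpenPose found a joint, the pinhole camera model turns the pixel plus its depth into a 3D point. The text does not say which filter was used, so the sketch substitutes a simple exponential moving average; the intrinsics, function and class names are hypothetical, and this is not the Centauro team's actual pipeline.

```python
def deproject(u, v, depth_m, fx, fy, cx, cy):
    """Convert a 2D keypoint (pixel u, v) plus its depth into camera-frame 3D.

    Uses the pinhole camera model; fx, fy are focal lengths in pixels and
    cx, cy the principal point. Assumes the depth image is aligned with the
    RGB image the keypoints were detected in.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)

class JointSmoother:
    """Exponential moving average per joint to damp frame-to-frame jitter."""

    def __init__(self, alpha=0.5):
        self.alpha = alpha   # weight of the newest measurement, in (0, 1]
        self.state = {}      # joint name -> last smoothed (x, y, z)

    def update(self, joint, point):
        prev = self.state.get(joint)
        if prev is None:
            smoothed = point
        else:
            smoothed = tuple(self.alpha * p + (1 - self.alpha) * q
                             for p, q in zip(point, prev))
        self.state[joint] = smoothed
        return smoothed

# Hypothetical intrinsics for a 640x480 depth camera.
FX = FY = 525.0
CX, CY = 320.0, 240.0
smoother = JointSmoother(alpha=0.5)
for u, v, z in [(320, 240, 2.0), (845, 240, 2.0)]:   # a wrist seen in two frames
    print(smoother.update("right_wrist", deproject(u, v, z, FX, FY, CX, CY)))
```

A smaller `alpha` gives smoother but laggier robot motion, a trade-off that matters at the 8-10 Hz rates mentioned above.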

Video: the Centauro motion capture experiment. Source: Dimitrios Kanoulas / YouTube

Of course, Centauro cannot yet be armed with an assault rifle and handed over to an experienced marine, but such systems may be built in the future. Back in 2012, the Defense Advanced Research Projects Agency (DARPA) announced a project with the unexpected name Avatar, which aimed to "develop interfaces and algorithms to enable a soldier to effectively partner with a semi-autonomous bi-pedal machine and allow it to act as the soldier's surrogate." Such a system could, in effect, give a robot the skills of an experienced, well-trained soldier while keeping the soldier alive in the most dangerous operational environments through remote control. No further progress on the project was reported.

In 2018, the XPRIZE Foundation launched the ANA Avatar XPRIZE, a competition to create robotic avatars, and 77 teams were later selected to present their robotic avatars at the end of 2020. Under the competition rules, developers must combine motion capture, sensory (haptic) technologies, AR and VR. The ultimate goal, with a $5 million grand prize at stake, is a system through which an operator can see, hear, move and perform various tasks in a remote environment.

The avatar should have three modes of operation:

- fully controlled: the robot executes only human commands;

- advanced: the robot solves some tasks on its own, such as analyzing or mapping its environment;

- semi-autonomous: the robot makes decisions independently.

In effect, this is the "teleportation" of a person: the operator feels present wherever the robot is. In the future, such technologies will allow anthropomorphic robots to be controlled remotely in environments hostile to humans: in a volcano crater, in outer space, in disaster zones or in war. In 2020 we will see the first developments, and in 2021 we will find out who is the best and will receive $5 million for further research.