This article is about a visual search engine built on active semantic neural networks, the project that received the first Western venture capital investment in Russian IT. Below, we describe its basic principles of operation and its architecture.

Origins

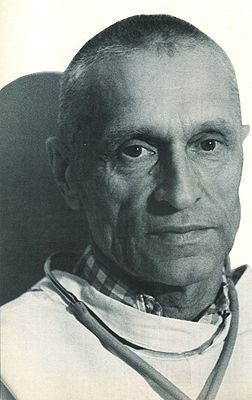

I was very lucky in life: I studied under Nikolai Mikhailovich Amosov, an outstanding person, cardiac surgeon and cyberneticist. I studied remotely, since the collapse of the USSR gave me no opportunity to meet him in person.

A lot can be said about Nikolai Mikhailovich. He was born into a peasant family in a village near Cherepovets, and yet he took second place in the "Great Ukrainians" project, yielding first place only to Yaroslav the Wise. He independently developed the first artificial heart valve in the USSR. Streets, a medical school, a college and a boat cruising the Ivankovskoye reservoir are named after him.

Much has been written about this on Wikipedia and on other sites.

I want to touch on a less widely known side of Amosov. A broad outlook and two educations (medical and engineering) allowed him to develop a theory of active semantic neural networks (M-networks), within which, more than 50 years ago, things were implemented that are still striking in their uniqueness.

And if sufficient computing power had been available at that time, perhaps strong AI would already exist today.

In his works, Amosov managed to maintain a balance between neurophysiology and mathematics, studying and describing the informational processes of intelligence. The results are presented in several publications; the final one was the monograph "Algorithms of the Mind", published in 1979.

Here is a short quote from it about one of the models:

“… (We) conducted a study, the purpose of which was to study the possibilities of M-networks in the field of neurophysiology and neuropsychology, as well as to assess the practical and cognitive importance of such models. An M-automaton was developed and studied, which simulates speech mechanisms. The model presents such aspects of oral speech as perception, comprehension, verbal expression.

The model is designed to reproduce relatively simple speech functions (!!!) - answers to questions of a limited type, repetition, naming. It contains the following blocks: auditory perception, sensory speech, proprioceptive speech, conceptual, emotion, motivational, motor speech, articulatory and SUT block. The blocks of the model are correlated with certain brain formations ...

... The input of the model was the letters of the Russian alphabet combined into words and phrases, as well as special objects corresponding to the images of objects. At the output of the model, depending on the mode of its operation, sequences of letters of the Russian alphabet were observed, which were either answers to input questions, or a repetition of input words, or the names of objects.

The fact that neurophysiological data were widely used in the creation of the model made it possible in experiments to simulate a number of brain lesions of an organic and functional nature, leading to impaired speech functions.”

And this is just one of the works.

Another is the control of a mobile robot. “… The robot control system assumes the implementation of purposeful movement with ensuring its own safety (avoiding obstacles, avoiding dangerous places, maintaining internal parameters within the specified limits) and minimizing time and energy costs”.

Also, the monograph describes the results of modeling the free behavior of “a certain subject in an environment that contained useful and dangerous objects for him. The subject's motives for behavior were determined by feelings of fatigue, hunger, and a desire for self-preservation. The subject studied the environment, chose the goal of the movement, built a plan to achieve this goal and then implemented it by performing actions-steps, comparing the results obtained during the movement with the planned ones, supplementing and adjusting the plan depending on the emerging situations.”

Features of the theory

In his works, Amosov attempted to create an informational / algorithmic model of what we would now call "strong intelligence", and, in my opinion, his theory comes closest to describing what really happens in the mammalian brain.

The main features of M-networks, which radically distinguish them from other neural network paradigms, are the strict semantic meaning attached to each neuron and the presence of a system by which the network internally assesses its own state. There are receptor neurons, object neurons, feeling neurons and action neurons.

Learning follows a modified Hebb rule that takes the network's internal state into account. Accordingly, a decision is made on the basis of an interpretable distribution of activity across semantically labeled neurons.

The network learns "on the fly", without the data being presented repeatedly. The same mechanisms work with different types of information, be it speech, perception of visual data or motor activity. This paradigm simulates the work of both consciousness and the subconscious.

Those interested can find Amosov's books with a more detailed description of the theory and practical implementations of various aspects of intelligence. Here I will describe our experience in building the visual search engine Quintura Search.

Quintura

The Quintura company was founded in 2005. A prototype of a desktop application demonstrating our approach was implemented with our own money. With angel money from Ratmir Timashev and Andrey Baronov (later the ABRT fund), the prototype was refined, negotiations were held, and investment was received from the Luxembourg fund Mangrove Capital Partners. This was the first Western investment in Russia in the IT field. All three partners of the fund came to Sergiev Posad to look us in the eye and make a decision on investing.

With the funds received, over six years, we developed the full functionality of a web search engine: collecting information, indexing, processing queries and returning results. The core was an M-network, or rather a set of M-networks (one conceptual network plus a network for each document). The network was trained in one pass over the document. Within a couple of recalculation cycles, keywords were selected, documents matching the query in meaning were found, and their annotations were built. The network understood the context of the query; more precisely, it allowed the user to clarify it by adding the meanings needed for the search and by removing documents with irrelevant contexts from the search results.

Basic principles and approaches

As noted above, each neuron in the network has its own semantic meaning. To illustrate the patents, we came up with a visual picture (I apologize for the quality: the original pictures have not been preserved, so here and below we use pictures from scans of our patents and from articles about us on various sites):

Put simply, a conceptual network is a set of concept neurons linked to each other by connections whose strength is proportional to how often the concepts occur together. When the user enters a query word, we "pull out" the neuron(s) for this word, and it, in turn, pulls up those associated with it. The stronger the connection, the closer the other neurons will be to the query neuron.

If there are several words in the query, then all neurons corresponding to the query words are "pulled" up.

If we decide to delete an irrelevant word, we effectively hang a weight on it that pulls down both the deleted concept and those associated with it.

As a result, we get a tag cloud in which the query words are at the top (in the largest font) and related concepts are placed next to them: the stronger the connection, the larger the font.
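The "pull" and "weight" metaphor above can be sketched as a simple spreading of activation. This is only an illustration under stated assumptions, not the Quintura implementation: the function name `spread`, the connection weights, and the choice of +1.0 / -1.0 as the levels for query and removed words are all invented for the example.

```python
from collections import defaultdict

def spread(weights, query, removed=(), steps=1):
    """Spread activation from query words (+1.0) and removed words (-1.0).

    `weights` maps a concept to {neighbour: connection strength}.
    All names and numeric levels here are illustrative assumptions.
    """
    activation = defaultdict(float)
    for word in query:
        activation[word] = 1.0       # "pulled up" by the user
    for word in removed:
        activation[word] = -1.0      # a "weight" hung on an irrelevant word
    pinned = set(query) | set(removed)

    for _ in range(steps):
        incoming = defaultdict(float)
        for source, level in activation.items():
            for target, weight in weights.get(source, {}).items():
                incoming[target] += level * weight
        for target, delta in incoming.items():
            if target not in pinned:  # user-set neurons keep their level
                activation[target] += delta
    return dict(activation)

# Hypothetical connection strengths for the query "beauty".
weights = {
    "beauty": {"fashion": 0.8, "travel": 0.3, "surgery": 0.5},
    "surgery": {"clinic": 0.9},
}

cloud = spread(weights, ["beauty"])
after_removal = spread(weights, ["beauty"], removed=["surgery"])
```

In `cloud`, "fashion" ends up closer to the query than "surgery" or "travel". In `after_removal`, "surgery" is pinned at a negative level and drags "clinic" below zero, so both would drop out of the displayed map.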

Below is a semantic map of documents found by the query "beauty".

When you hover the mouse over the word "fashion", the map is rebuilt:

If you move the mouse pointer over the word "travel", we get another map:

Thus, we can refine the query by pointing in the direction we need and so form the context we need.

Simultaneously with the rebuilding of the map, we get the documents that are most relevant to the given context, and we exclude unnecessary branches by deleting irrelevant words.

We build the document index according to the following principle:

There are 4 layers of neurons: a layer of words, a layer of concepts (these two can be combined into one layer), a layer of sentences, and a layer of documents.

Neurons can be connected to each other by two types of connections: amplifying and inhibitory. Accordingly, each neuron can be in one of three states: active, neutral or suppressed. Amplifying connections transmit activity through the network, while inhibitory ones act as "anchors". The concept layer is useful for working with synonyms, for raising "strong" or significant concepts above others, and for defining document categories.

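For example, the word Apple in the word layer would be associated with the concepts of a fruit, an anatomical term, and a company. Oil would be associated with both edible oil and petroleum (two different words in Russian). The Russian word for "braid" would be associated with a hairstyle, a tool, a weapon, and a landform. And so on.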

We establish bi-directional connections, both direct and reverse. Learning (changing the connections) occurs in one pass through the document based on the Hebb rule: we strengthen the connections between words that are close to each other, and we additionally link words that share a sentence, paragraph or document with weaker connections. The more often words are found side by side, both within one document and across a group of documents, the stronger the connection between them becomes.
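A minimal sketch of such one-pass, Hebb-style learning is shown below. It is an assumption-laden illustration rather than the original code: the increments `NEAR` and `SAME_SENTENCE`, the `window` size and the tokenization are invented, and the paragraph- and document-level links mentioned above are omitted for brevity.

```python
import re
from collections import defaultdict
from itertools import combinations

NEAR = 1.0            # assumed increment for words close to each other
SAME_SENTENCE = 0.25  # assumed smaller increment for sharing a sentence

def train(weights, text, window=1):
    """Update symmetric word-to-word connections in one pass over `text`."""
    for sentence in re.split(r"[.!?]+", text.lower()):
        words = re.findall(r"\w+", sentence)
        for i, j in combinations(range(len(words)), 2):
            a, b = words[i], words[j]
            if a == b:
                continue
            step = NEAR if j - i <= window else SAME_SENTENCE
            weights[a][b] += step  # direct connection
            weights[b][a] += step  # reverse connection
    return weights

weights = defaultdict(lambda: defaultdict(float))
train(weights, "Red apple pie. Red apple.")
```

After this single pass, "red" and "apple" (adjacent twice) are linked with strength 2.0, while "red" and "pie" (same sentence, not adjacent) get only 0.25. New text can be fed in at any time without revisiting earlier documents.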

This architecture allows you to effectively solve the following tasks:

- setting and managing the search context;

- finding documents that match the search context (not only those containing the query words);

- displaying the semantic contexts of a set of documents (what are the associations of the search engine with the words of the query);

- highlighting the keywords of the document / documents;

- annotating a document;

- finding documents similar in meaning.

Let's consider the implementation of each task.

Setting and managing the search context

This case was illustrated earlier. The user enters the query words, and these words excite all the words associated with them. We can then either select the nearest concepts (those with maximum excitation) or transmit excitation further through the network from all neurons excited at the previous step, and so on; in the latter case, associations come into play. A number of the most excited words / concepts are displayed on the map (you can also choose what to show: the layer of words, the layer of concepts, or both). If necessary, irrelevant words are removed (which also removes the associated concepts from the map), thereby providing an accurate description of the required search context.

Finding documents matching the search context

The first two layers of the network, in the state reached at this step, transmit their excitation to the next layers, whose neurons are ranked by excitation. We get a list of documents ranked by relevance not just to the words of the query, but to the generated context.

Thus, the search results will include not only documents containing the words of the query, but also those related to them in meaning. The resulting documents can be used to change the search context either by strengthening the concepts contained in the documents "necessary" to us, or by lowering the weight of concepts associated with irrelevant documents. This way, you can easily and intuitively refine your query and manage search results.
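As a rough sketch of this step, the excitation of the word / concept layer can be passed on to document neurons and the documents sorted by the result. The function name, the sample weights, and the shortcut of skipping the sentence layer are assumptions made for the example.

```python
def rank_documents(context, doc_words):
    """Rank documents by the excitation they receive from the context.

    `context` maps word/concept -> excitation (negative means suppressed).
    `doc_words` maps document -> {word: connection strength}.
    The sentence layer is skipped here to keep the sketch short.
    """
    scores = {
        doc: sum(context.get(word, 0.0) * weight
                 for word, weight in words.items())
        for doc, words in doc_words.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical context: query "beauty", related "fashion", removed "surgery".
context = {"beauty": 1.0, "fashion": 0.8, "surgery": -1.0}
doc_words = {
    "doc_fashion": {"fashion": 1.0, "style": 0.5},
    "doc_clinic": {"beauty": 0.5, "surgery": 1.0},
    "doc_beauty": {"beauty": 1.0},
}

ranking = rank_documents(context, doc_words)
```

Here `doc_fashion` ranks second even though it does not contain the query word "beauty", while `doc_clinic` contains it but is pushed to the bottom by the suppressed concept.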

Displaying semantic contexts of a collection

Transferring excitation in the opposite direction, from document neurons to concept / word neurons, gives a visual map of the semantic contexts present in a set of documents. This is an alternative way to get a meaningful tag map and to navigate the space of meanings. The process can be performed iteratively by changing the direction of excitation: either obtaining documents for search contexts, or changing the search context for the active documents.

A typical usage scenario is as follows: the initial map is formed from the latest news, popular articles, queries, or a combination of these. The user can then either start forming a query by clicking on words from the map or create a new query by typing the words of interest into the input field.

In our children's service Quintura Kids, even children who could not write (or who had difficulty with it) could work and search for the necessary information.

This is how the initial map looked.

And the user saw this after clicking on the word "Space".

The children's service was built on manually selected and approved sites, so a child could not come across any adult content. This explains the small number of documents found. The English version contained many times more documents, thanks to the Yahoo children's directory.

Highlighting the keywords of the document / documents

The keywords are simply those words and concepts that become active when excitation is transmitted from the document layer or the sentence layer. They are ranked by activity, and then the top portion is cut off and displayed.

Here we applied another solution. We treat the total excitation of all words or concepts as 100%. Then we can easily control how many words are considered keywords by setting a percentage of activity as the threshold.

For example, suppose there is a document dealing with a Boeing headliner contract. When excitation was transmitted from this document's neuron to the word / concept layer, the words presented in this figure were excited. The total excitation, normalized to 100%, gave us an individual weight for each word. If we want to get 75% of the “meaning”, we will see the keywords “BOEING”, “787” and “DREAMLINER”; if we want 85% of the “meaning”, “SALES” is added to these words.

This method allows you to automatically limit the number of displayed keywords and sentences / documents.
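The percentage cut-off can be sketched as follows. The excitation values are invented so that the result mirrors the Boeing example above; the real figures are not given in the article, and the function name `top_by_share` is hypothetical.

```python
def top_by_share(excitation, percent):
    """Return the most excited items that together cover `percent` of the total.

    Integer arithmetic is used so the threshold comparison is exact.
    """
    total = sum(excitation.values())
    ranked = sorted(excitation.items(), key=lambda item: item[1], reverse=True)
    picked, covered = [], 0
    for name, value in ranked:
        if covered * 100 >= percent * total:
            break
        picked.append(name)
        covered += value
    return picked

# Made-up excitation levels (they sum to 100 for readability).
excitation = {
    "BOEING": 40, "787": 20, "DREAMLINER": 15,
    "SALES": 10, "CONTRACT": 8, "AIRLINE": 7,
}

keywords_75 = top_by_share(excitation, 75)
keywords_85 = top_by_share(excitation, 85)
```

With these numbers, `keywords_75` contains "BOEING", "787" and "DREAMLINER", and `keywords_85` adds "SALES". The number of keywords adapts to how concentrated the excitation is instead of being fixed in advance.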

Annotating a document

Snippets were produced in the same way. First, we get the keywords of the document. Then, by spreading excitation forward from the keywords, we obtain a list of sentences, normalize the weights of these sentences to 100% and cut off the required amount of "meaning". Finally, we display these sentences in the order in which they occurred in the text of the document ...
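A minimal sketch of this annotation step, assuming keyword weights are already known: each sentence is scored by the keywords it contains, the strongest sentences covering the requested share are kept, and they are emitted in document order. The sentence splitting, the sample text and the weights are illustrative assumptions.

```python
import re

def annotate(text, keywords, percent):
    """Pick sentences covering `percent` of keyword excitation, in text order."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    scores = [
        sum(keywords.get(word, 0) for word in re.findall(r"\w+", s.lower()))
        for s in sentences
    ]
    total = sum(scores)
    by_score = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    chosen, covered = set(), 0
    for i in by_score:
        if total == 0 or covered * 100 >= percent * total:
            break
        chosen.add(i)
        covered += scores[i]
    # Restore the original sentence order for readability.
    return [sentences[i] for i in sorted(chosen)]

text = ("Boeing announced new sales. The weather was fine. "
        "The 787 Dreamliner order is the largest for Boeing.")
keywords = {"boeing": 4, "787": 2, "dreamliner": 2, "sales": 1}  # assumed weights

short_snippet = annotate(text, keywords, 60)
long_snippet = annotate(text, keywords, 90)
```

At 60% only the Dreamliner sentence is kept; at 90% the first sentence is added and still appears before it, because the output follows the order of the text rather than the order of the scores.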

Finding documents similar in meaning

Transferring excitation from the neuron of the source document (the one for which we want to find matches) to the word / concept layer, and then in the opposite direction back to the document layer, allows us to find documents that are similar to the original in context or meaning. If we run several cycles of excitation transfer within the word / concept layer, we can expand the original context. In this case, we can find documents that do not match word for word but coincide in topic.

In fact, this mechanism can be used to determine the similarity of anything. For example, by adding a layer to the network whose neurons correspond to user IDs, we can find people with similar interests. By replacing the layers of sentences and documents with indices of music or video works, we get a music / video search.
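The document-to-words-to-documents round trip can be sketched like this. The names, the sample weights and the `expand_steps` parameter (standing in for the extra cycles within the word / concept layer) are assumptions made for the illustration.

```python
def similar_documents(source, doc_words, word_links, expand_steps=0):
    """Find documents similar to `source` via the word/concept layer."""
    # Step 1: the source document excites its own words.
    context = dict(doc_words[source])
    # Step 2 (optional): let excitation spread between words to widen the context.
    for _ in range(expand_steps):
        spread = {}
        for word, level in context.items():
            for other, weight in word_links.get(word, {}).items():
                spread[other] = spread.get(other, 0.0) + level * weight
        for word, delta in spread.items():
            context[word] = context.get(word, 0.0) + delta
    # Step 3: the excited words excite the other documents.
    scores = {
        doc: sum(context.get(word, 0.0) * weight for word, weight in words.items())
        for doc, words in doc_words.items()
        if doc != source
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

doc_words = {
    "doc_a": {"boeing": 1.0, "dreamliner": 1.0},
    "doc_b": {"airbus": 1.0, "airliner": 1.0},
    "doc_c": {"recipe": 1.0},
}
word_links = {"dreamliner": {"airliner": 0.5}}  # hypothetical learned connection

exact = similar_documents("doc_a", doc_words, word_links)
expanded = similar_documents("doc_a", doc_words, word_links, expand_steps=1)
```

Without expansion, no other document shares a word with `doc_a`, so every score is zero. After one expansion cycle, `doc_b` is found through the "dreamliner" to "airliner" association even though the two documents have no words in common.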

To be continued

This article covers only the basic principles of the Quintura visual search engine. Over the company's six years, many complex problems were solved, both in the architecture of active semantic neural networks and in building a fault-tolerant, expandable cluster. The search index consisted of many neural networks working in parallel; their results were combined, and an individual map of the user's interests was built.

Within the project, we implemented a site search service, a children's search engine and a text analysis tool; began work on an information ontology of the world and on a neural network morphology; and obtained 9 US patents.

However, we were never able to monetize our system. At that time there was no neural network boom yet, and we did not manage to reach an agreement with the large search engines (as the architect of one of them told us - “we have too different paradigms and we do not understand how to combine them”).

The project had to be closed.

Personally, I am left with a sense of unfinished business: I have a great desire to give this project a second life. Knowing that the former head of advertising at Google is building an ad-free search engine where users can “break out of the bubble of content personalization filters and tracking tools” only strengthens my confidence in the relevance of our developments.

I will once again list the features of active neural networks built on the basis of Nikolai Amosov's theory:

- learning on the fly;

- adding new entities without the need to retrain the entire network;

- clear semantics - it is clear why the network made this or that decision;

- uniform network architecture for different applications;

- simple architecture, as a result - high performance.

I hope this article will be the first step in opening the implemented technologies to the wider developer community and will mark the beginning of an open-source project for Amosov's active semantic neural networks.