The information used to prepare this material is taken from the Kubernetes enhancements tracking table, CHANGELOG-1.19, the Sysdig overview, as well as related issues, pull requests, and Kubernetes Enhancement Proposals (KEPs).

Let's start with a few major changes of a fairly general nature.

With the release of Kubernetes 1.19, the "support window" for Kubernetes versions has been extended from 9 months (i.e. the last 3 releases) to 1 year (i.e. 4 releases). Why?

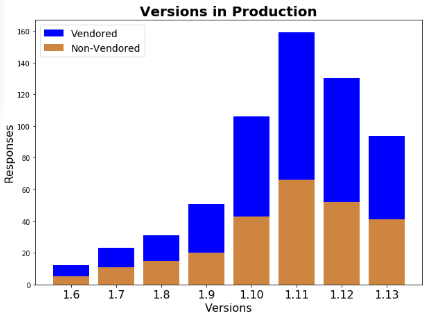

It turned out that, because the project moves so fast (releases are frequent), Kubernetes cluster administrators do not have time to update their installations. The authors of the corresponding KEP refer to a survey conducted by the working group early last year, which showed that about a third of Kubernetes users run obsolete K8s releases in production.

(At the time of the survey, the current Kubernetes version was 1.13, i.e. all users of K8s 1.9 and 1.10 were running releases that were no longer supported.)

Thus, extending the support period for Kubernetes releases by 3 months (that is, continuing to ship patches that fix problems found in the code) is expected to ensure that more than 80% of users run supported versions of K8s, instead of the estimated 50-60% at the moment.

Another big change: a standard for structured logs has been developed. The current logging system in the control plane does not guarantee a uniform structure for messages and object references in Kubernetes, which complicates the processing of such logs. To solve this problem, a new structure for log messages is being introduced. For this, the klog library has been extended with new methods that provide a structured interface for generating logs, as well as helper methods for identifying K8s objects in the logs.

Simultaneously with the migration to klog v2, a new JSON output format for logs is being introduced, which will simplify querying and processing the logs. For this, a --logging-format flag has been added; by default it keeps the old text format.

Since the Kubernetes repository is huge, the authors of the Structured Logging KEP are realistic: they will focus their efforts on bringing the new approach to the most common messages.

An illustration of logging using the new methods in klog:

    klog.InfoS("Pod status updated", "pod", "kubedns", "status", "ready")
    I1025 00:15:15.525108 1 controller_utils.go:116] "Pod status updated" pod="kubedns" status="ready"

    klog.InfoS("Pod status updated", "pod", klog.KRef("kube-system", "kubedns"), "status", "ready")
    I1025 00:15:15.525108 1 controller_utils.go:116] "Pod status updated" pod="kube-system/kubedns" status="ready"

    klog.ErrorS(err, "Failed to update pod status")
    E1025 00:15:15.525108 1 controller_utils.go:114] "Failed to update pod status" err="timeout"

Using JSON format:

    pod := corev1.Pod{Name: "kubedns", Namespace: "kube-system", ...}
    klog.InfoS("Pod status updated", "pod", klog.KObj(pod), "status", "ready")

    {
      "ts": 1580306777.04728,
      "v": 4,
      "msg": "Pod status updated",
      "pod": {
        "name": "kubedns",
        "namespace": "kube-system"
      },
      "status": "ready"
    }

Another important (and very relevant) innovation is a mechanism for warning about deprecated APIs, implemented right away as a beta version (i.e., active in installations by default). As the authors explain, Kubernetes always has many deprecated features in different states and with different timelines. It is almost impossible to keep track of them by carefully reading all the release notes and manually cleaning up configs and settings.

To solve this problem, a Warning header is now added to API responses whenever a deprecated API is used. The header is recognized on the client side (client-go), which can react in different ways: ignore, deduplicate, or log. The kubectl utility has been taught to print these messages to stderr and highlight them in color in the console; a flag with the self-explanatory name --warnings-as-errors has also been added.

In addition, special metrics and audit annotations have been added to report the use of deprecated APIs.

Finally, the developers addressed the promotion of Kubernetes features out of beta. As the project's experience has shown, some new features and API changes got "stuck" in beta status because they were already enabled by default and required no further action from users.

To prevent this from happening, it is proposed to automatically deprecate features that have been in beta for 9 months (three releases) and meet neither of these conditions:

- they meet the GA criteria and are promoted to stable status;

- they have a new beta version that supersedes the previous one.

Now for the other changes in Kubernetes 1.19, categorized by their respective SIGs.

Storage

The new CSIStorageCapacity objects are intended to improve the scheduling of pods that use CSI volumes: such pods will not be placed on nodes that have run out of storage space. To do this, information about the available disk space will be stored in the API server and made available to CSI drivers and the scheduler. The current implementation status is alpha; see the KEP for details.
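The idea of capacity-aware placement can be sketched in a few lines of Go. This is an illustration only, not the scheduler's code: segmentCapacity is a hypothetical, simplified stand-in for the data a CSIStorageCapacity object carries, and segmentsWithRoom is a made-up helper that keeps only the topology segments where the requested volume would still fit.

```go
package main

import "fmt"

// segmentCapacity is a hypothetical, simplified record of how much space
// a storage class has left in one topology segment (a zone, a node, ...).
type segmentCapacity struct {
	StorageClass   string
	Segment        string
	AvailableBytes int64
}

// segmentsWithRoom returns the segments in which a volume of requestBytes
// from the given storage class could still be provisioned.
func segmentsWithRoom(caps []segmentCapacity, class string, requestBytes int64) []string {
	var fit []string
	for _, c := range caps {
		if c.StorageClass == class && c.AvailableBytes >= requestBytes {
			fit = append(fit, c.Segment)
		}
	}
	return fit
}

func main() {
	const gib = int64(1) << 30
	caps := []segmentCapacity{
		{StorageClass: "fast", Segment: "zone-a", AvailableBytes: 0},
		{StorageClass: "fast", Segment: "zone-b", AvailableBytes: 50 * gib},
	}
	// Only segments with enough free space remain candidates for the pod.
	fmt.Println(segmentsWithRoom(caps, "fast", 10*gib))
}
```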

Another alpha innovation is the ability to define ephemeral volumes directly in pod specifications: generic ephemeral inline volumes (KEP). Ephemeral volumes are created for specific pods when those pods start and are deleted when they terminate. It was already possible to define them (including directly in the specification, i.e. inline), but the existing approach, while proving the viability of the feature itself, did not cover all of its use cases.

The new mechanism offers a simple API for defining ephemeral volumes for any driver that supports dynamic provisioning (previously, this would have required modifying the driver). It works with any ephemeral volumes (both CSI and in-tree, such as EmptyDir) and also supports another new feature described just above: tracking available storage space.

An example of a high-level Kubernetes object using a new ephemeral volume (generic inline):

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: fluentd-elasticsearch
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          name: fluentd-elasticsearch
      template:
        metadata:
          labels:
            name: fluentd-elasticsearch
        spec:
          containers:
          - name: fluentd-elasticsearch
            image: quay.io/fluentd_elasticsearch/fluentd:v2.5.2
            volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: scratch
              mountPath: /scratch
          volumes:
          - name: varlog
            hostPath:
              path: /var/log
          - name: scratch
            ephemeral:
              volumeClaimTemplate:
                metadata:
                  labels:
                    type: fluentd-elasticsearch-volume
                spec:
                  accessModes: [ "ReadWriteOnce" ]
                  storageClassName: "scratch-storage-class"
                  resources:
                    requests:
                      storage: 1Gi

Here the DaemonSet controller creates pods with names like fluentd-elasticsearch-b96sd, after which a PersistentVolumeClaim named fluentd-elasticsearch-b96sd-scratch is created for each such pod.

The last completely new storage feature, also introduced as alpha, is a new field for CSI drivers, csidriver.spec.SupportsFSGroup, which indicates support for FSGroup-based permissions (KEP). Motivation: the ownership of a CSI volume is changed before the volume is mounted into a container, but not all storage types support this operation (NFS, for example), which is why it can currently lead to errors.

The following have been promoted to beta (i.e. enabled by default):

- CSI drivers for Azure and vSphere (replacing the in-tree plug-ins built into Kubernetes);

- immutable Secrets and ConfigMaps.

Nodes / Kubelet

Seccomp has been declared stable (GA). In particular, this work led to the switch from annotations, now declared deprecated, to dedicated seccomp fields in the API (new Kubelets ignore the annotations) and also affected PodSecurityPolicy.

A new field, fqdnInHostname (named setHostnameAsFQDN in the released API), has been added to the PodSpec; it makes it possible to use the FQDN (Fully Qualified Domain Name) as the hostname of a pod. The goal is to improve support for legacy applications in Kubernetes. Here's how the authors explain their intentions:

"Unix and some Linux distributions, such as Red Hat and CentOS, [use the] FQDN as the hostname. [...] Kubernetes [...]."

The default will be false to keep the old (for Kubernetes) behavior. The feature is in alpha and is planned to be declared stable in the next release (1.20).

It was decided to deprecate the accelerator metrics collected by Kubelet. It is proposed that external monitoring agents collect such metrics through the PodResources API. This API was created precisely to move all device-specific metrics out of the main Kubernetes repository, allowing vendors to implement them without changing the Kubernetes core. The PodResources API is in beta (controlled by the KubeletPodResources feature gate) and will become stable soon. In the current release, the deprecation process is in alpha status; details are in the KEP.

From now on, Kubelet can be built without Docker: by "without" the authors mean the absence of any Docker-specific code and of the dependency on the docker/docker Golang package. The ultimate goal of this initiative is a completely "dockerless" Kubelet (i.e. one with no Docker dependency). You can read more about the motivation, as always, in the KEP. This feature received GA status right away.

The Node Topology Manager, which reached beta in the previous K8s release, can now align resources at the pod level.

Scheduler

Back in the Kubernetes 1.18 overview, we wrote about a global configuration for even pod distribution (Even Pod Spreading), but at the time it was decided to postpone the feature based on the results of performance testing. It has now made it into Kubernetes (in alpha status).

The essence of the change is the addition of global constraints (DefaultConstraints) that make it possible to set pod distribution rules at a higher level, for the whole cluster, and not only in the PodSpec (through topologySpreadConstraints) as before. The default configuration will be similar to the current DefaultPodTopologySpread plugin:

    defaultConstraints:
    - maxSkew: 3
      topologyKey: "kubernetes.io/hostname"
      whenUnsatisfiable: ScheduleAnyway
    - maxSkew: 5
      topologyKey: "topology.kubernetes.io/zone"
      whenUnsatisfiable: ScheduleAnyway

Details are in the KEP.
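To show what maxSkew measures, here is a minimal Go sketch. It assumes the usual definition of skew for a topology domain: the number of matching pods in that domain after the placement, minus the smallest number of matching pods in any domain. The function skewAfterPlacement and the pod counts are invented for the example; this is not the scheduler plugin's code.

```go
package main

import "fmt"

// skewAfterPlacement returns the skew a domain would have if one more
// matching pod were placed in it: (pods in domain + 1) minus the current
// minimum across all domains.
func skewAfterPlacement(podsPerDomain map[string]int, domain string) int {
	lowest, first := 0, true
	for _, n := range podsPerDomain {
		if first || n < lowest {
			lowest, first = n, false
		}
	}
	return podsPerDomain[domain] + 1 - lowest
}

func main() {
	// Hypothetical counts of matching pods per node (topologyKey: hostname).
	pods := map[string]int{"node-a": 4, "node-b": 1, "node-c": 2}
	const maxSkew = 3

	for _, d := range []string{"node-a", "node-b", "node-c"} {
		s := skewAfterPlacement(pods, d)
		fmt.Printf("%s: skew %d, within maxSkew: %t\n", d, s, s <= maxSkew)
	}
}
```

With whenUnsatisfiable: ScheduleAnyway, exceeding maxSkew does not make a node ineligible; it only makes that node a less preferred choice.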

Another feature related to Even Pod Spreading, the distribution of a group of pods across failure domains (regions, zones, nodes, etc.), has moved from alpha to beta (enabled by default).

Three other scheduler features have received a similar promotion:

- scheduling profiles, introduced in 1.18;

- the option to enable or disable pod preemption in PriorityClasses;

- the kube-scheduler ComponentConfig configuration file.

Networks

The Ingress resource has finally been declared stable and has version v1 in the API. In this regard, quite a few updates have been made to the relevant documentation. Pay particular attention to the user-visible changes from this PR: for example, there are renames such as spec.backend → spec.defaultBackend, serviceName → service.name, servicePort → service.port.number...

The AppProtocol field for Services and Endpoints has reached beta, as have the EndpointSlice API (kube-proxy on Linux now uses EndpointSlices by default, while on Windows this remains in alpha) and SCTP support.

kubeadm

Two new features (in alpha) have been introduced for the kubeadm utility.

The first is the use of patches to modify the manifests generated by kubeadm. It was already possible to modify them with Kustomize (in alpha), but the kubeadm developers decided that regular patches are the preferred way (because Kustomize becomes an extra dependency, which is undesirable).

Now raw patches can be applied (via the --experimental-patches flag) for the kubeadm commands init, join, and upgrade, as well as their phases. The Kustomize-based implementation (the --experimental-kustomize flag) will be deprecated and removed.

The second feature is a new approach to handling the component configs that kubeadm works with. The utility generates, validates, sets default values for, and stores configs (as ConfigMaps) for Kubernetes cluster components such as Kubelet and kube-proxy. Over time, it became clear that this causes a number of difficulties: how to tell configs generated by kubeadm from those supplied by the user (and if this is impossible, what to do about config migration)? Which fields with default values were generated automatically, and which were set intentionally?

To solve these problems, a large set of changes is proposed, including: no longer setting default values (the components themselves must do this), delegating config validation to the components themselves, signing each generated ConfigMap, etc.

Another, less significant kubeadm feature is a feature gate called PublicKeysECDSA, which enables creating a cluster (via kubeadm init) with ECDSA certificates. Renewing existing certificates (via kubeadm alpha certs renew) is also supported, but there is no mechanism for easily switching between RSA and ECDSA.

Other changes

- Three authentication features have received GA status: the CertificateSigningRequest API, restricting node access to certain APIs (via the NodeRestriction admission plugin), and bootstrap and automatic renewal of the Kubelet client certificate.

- The new Event API was also declared stable, with a changed approach to deduplication (to avoid overloading the cluster with events).

- The base images of the core components (kube-apiserver, kube-scheduler, etcd, and others) have been switched from debian to distroless (details are in the KEP).

- Kubelet's Docker runtime now supports specifying a target container via the TargetContainerName field of EphemeralContainer.

- A "standard structure" has been defined for .status.conditions in API objects.

- kube-proxy now supports IPv6DualStack on Windows (behind a feature gate).

- The feature gate with the self-explanatory name CSIMigrationvSphere (migration from the built-in, in-tree plug-in for vSphere to the CSI driver) has moved to beta.

- A --privileged flag has been added to kubectl run.

- A new extension point, PostFilter, has been added to the scheduler; it runs after the Filter phase.

- Cri-containerd support on Windows has reached beta.
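The place of the PostFilter extension point in a scheduling cycle can be sketched as follows. This is a simplified illustration under the assumption that PostFilter plugins are called only when no node passes the Filter phase (preemption being a typical use); the types node, filterFunc, and postFilterFunc and the function schedule are hypothetical and do not reproduce the scheduler framework's interfaces.

```go
package main

import "fmt"

// node is a hypothetical, minimal description of a cluster node.
type node struct {
	Name     string
	FreeCPUs int
}

// filterFunc decides whether a pod needing cpus fits on a node.
type filterFunc func(n node, cpus int) bool

// postFilterFunc is invoked when no node passed the Filter phase.
type postFilterFunc func(nodes []node, cpus int) string

// schedule runs Filter over all nodes and falls back to PostFilter
// only if the list of feasible nodes is empty.
func schedule(nodes []node, cpus int, filter filterFunc, postFilter postFilterFunc) string {
	var feasible []node
	for _, n := range nodes {
		if filter(n, cpus) {
			feasible = append(feasible, n)
		}
	}
	if len(feasible) > 0 {
		return "bind to " + feasible[0].Name
	}
	return postFilter(nodes, cpus)
}

func main() {
	nodes := []node{{Name: "node-a", FreeCPUs: 1}, {Name: "node-b", FreeCPUs: 2}}
	fits := func(n node, cpus int) bool { return n.FreeCPUs >= cpus }
	preempt := func(nodes []node, cpus int) string {
		return fmt.Sprintf("no node fits %d CPUs, try preemption", cpus)
	}

	fmt.Println(schedule(nodes, 2, fits, preempt))
	fmt.Println(schedule(nodes, 4, fits, preempt))
}
```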

Dependency changes:

- version of CoreDNS included in kubeadm - 1.7.0;

- cri-tools 1.18.0;

- CNI (Container Networking Interface) 0.8.6;

- etcd 3.4.9;

- the version of Go used is 1.15.0-rc.1.
