In this article we use bash, ssh, docker and nginx to set up seamless deployment of a web application. Blue-green deployment is a technique that lets you update an application instantly without rejecting a single request. It is one of the zero-downtime deployment strategies and is best suited to applications that run as a single instance but can have a second, ready-to-run instance started alongside.

Suppose you have a web application that many clients are actively working with, and it absolutely cannot go down, even for a couple of seconds. And you really need to roll out a library update, a bug fix, or a cool new feature. Normally you would have to stop the application, replace it, and start it again. With docker you can replace first and restart afterwards, but there will still be a period during which requests are not processed, because an application usually takes some time to boot. And what if it starts but turns out to be broken? That is the problem; let's solve it with minimal means and as elegantly as possible.

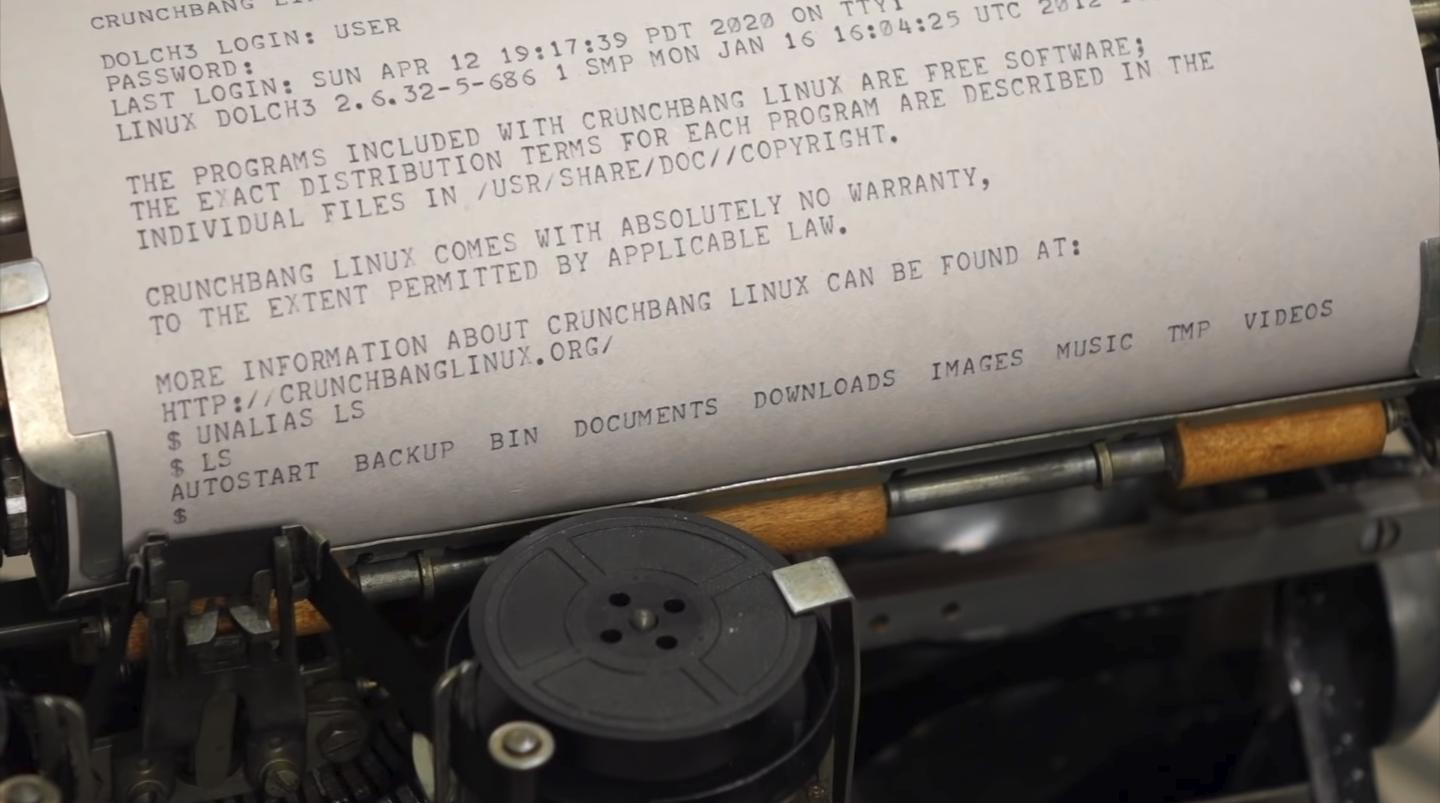

Disclaimer: Most of the article is presented in an experimental format, as a recording of a console session. Hopefully it will not be too hard to follow, and the code documents itself well enough. For atmosphere, imagine that these are not just code snippets but paper from a "hardware" teletype.

Interesting techniques that are hard to google just from reading the code are described at the beginning of each section. If something else is unclear, google it and check it in explainshell (fortunately it works again now that Telegram has been unblocked). If something cannot be googled, ask in the comments. I will gladly add it to the relevant "Interesting techniques" section.

Let's get started.

$ mkdir blue-green-deployment && cd $_

Service

Let's create an experimental service and place it in a container.

Interesting techniques

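- cat << EOF > file-name (Here Document + I/O Redirection): a way to create a multi-line file with a single command. Everything bash reads from /dev/stdin after this line and before the EOF line is written to file-name.
- wget -qO- URL (explainshell): prints the document received over HTTP to /dev/stdout (an analogue of curl URL).

The service is written in Python. It simulates a slow start: until its loading time has passed it responds with 503, and after that it reports how long it has been running.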

$ cat << EOF > uptimer.py
from http.server import BaseHTTPRequestHandler, HTTPServer
from time import monotonic

app_version = 1
app_name = f'Uptimer v{app_version}.0'
loading_seconds = 15 - app_version * 5

class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == '/':
            try:
                t = monotonic() - server_start
                if t < loading_seconds:
                    self.send_error(503)
                else:
                    self.send_response(200)
                    self.send_header('Content-Type', 'text/html')
                    self.end_headers()
                    response = f'<h2>{app_name} is running for {t:3.1f} seconds.</h2>\n'
                    self.wfile.write(response.encode('utf-8'))
            except Exception:
                self.send_error(500)
        else:
            self.send_error(404)

httpd = HTTPServer(('', 8080), Handler)
server_start = monotonic()
print(f'{app_name} (loads in {loading_seconds} sec.) started.')
httpd.serve_forever()
EOF

$ cat << EOF > Dockerfile

FROM python:alpine

EXPOSE 8080

COPY uptimer.py app.py

CMD [ "python", "-u", "./app.py" ]

EOF

$ docker build --tag uptimer .

Sending build context to Docker daemon 39.42kB

Step 1/4 : FROM python:alpine

---> 8ecf5a48c789

Step 2/4 : EXPOSE 8080

---> Using cache

---> cf92d174c9d3

Step 3/4 : COPY uptimer.py app.py

---> a7fbb33d6b7e

Step 4/4 : CMD [ "python", "-u", "./app.py" ]

---> Running in 1906b4bd9fdf

Removing intermediate container 1906b4bd9fdf

---> c1655b996fe8

Successfully built c1655b996fe8

Successfully tagged uptimer:latest

$ docker run --rm --detach --name uptimer --publish 8080:8080 uptimer

8f88c944b8bf78974a5727070a94c76aa0b9bb2b3ecf6324b784e782614b2fbf

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8f88c944b8bf uptimer "python -u ./app.py" 3 seconds ago Up 5 seconds 0.0.0.0:8080->8080/tcp uptimer

$ docker logs uptimer

Uptimer v1.0 (loads in 10 sec.) started.

$ wget -qSO- http://localhost:8080

HTTP/1.0 503 Service Unavailable

Server: BaseHTTP/0.6 Python/3.8.3

Date: Sat, 22 Aug 2020 19:52:40 GMT

Connection: close

Content-Type: text/html;charset=utf-8

Content-Length: 484

$ wget -qSO- http://localhost:8080

HTTP/1.0 200 OK

Server: BaseHTTP/0.6 Python/3.8.3

Date: Sat, 22 Aug 2020 19:52:45 GMT

Content-Type: text/html

<h2>Uptimer v1.0 is running for 15.4 seconds.</h2>

$ docker rm --force uptimer

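uptimer

Reverse proxy

- docker network. A user-defined network connects the containers so that they can talk to each other.
- Because the application and the reverse proxy communicate inside a docker network, the application no longer needs --publish.
- The reverse proxy takes port 80 on the host. If port 80 is already in use, replace --publish 80:80 with --publish ANY_FREE_PORT:80.
- On a user-defined docker network, containers can reach each other by container name as well as by IP address (see the Docker documentation: "Networking with standalone containers", "Use user-defined bridge networks", step 5).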

$ docker network create web-gateway

5dba128fb3b255b02ac012ded1906b7b4970b728fb7db3dbbeccc9a77a5dd7bd

$ docker run --detach --rm --name uptimer --network web-gateway uptimer

a1105f1b583dead9415e99864718cc807cc1db1c763870f40ea38bc026e2d67f

$ docker run --rm --network web-gateway alpine wget -qO- http://uptimer:8080

<h2>Uptimer v1.0 is running for 11.5 seconds.</h2>

$ docker run --detach --publish 80:80 --network web-gateway --name reverse-proxy nginx:alpine

80695a822c19051260c66bf60605dcb4ea66802c754037704968bc42527bf120

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

80695a822c19 nginx:alpine "/docker-entrypoint.…" 27 seconds ago Up 25 seconds 0.0.0.0:80->80/tcp reverse-proxy

a1105f1b583d uptimer "python -u ./app.py" About a minute ago Up About a minute 8080/tcp uptimer

$ cat << EOF > uptimer.conf

server {

listen 80;

location / {

proxy_pass http://uptimer:8080;

}

}

EOF

$ docker cp ./uptimer.conf reverse-proxy:/etc/nginx/conf.d/default.conf

$ docker exec reverse-proxy nginx -s reload

2020/06/23 20:51:03 [notice] 31#31: signal process started

$ wget -qSO- http://localhost

HTTP/1.1 200 OK

Server: nginx/1.19.0

Date: Sat, 22 Aug 2020 19:56:24 GMT

Content-Type: text/html

Transfer-Encoding: chunked

Connection: keep-alive

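<h2>Uptimer v1.0 is running for 104.1 seconds.</h2>

Let's roll out a new version of the application (with a twofold boost in startup performance) and try to deploy it seamlessly.

Interesting techniques

- echo 'my text' | docker exec -i my-container sh -c 'cat > /my-file.txt': writes the text my text to the file /my-file.txt inside the container my-container.
- cat > /my-file.txt: writes the contents of /dev/stdin to a file.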

$ sed -i "s/app_version = 1/app_version = 2/" uptimer.py

$ docker build --tag uptimer .

Sending build context to Docker daemon 39.94kB

Step 1/4 : FROM python:alpine

---> 8ecf5a48c789

Step 2/4 : EXPOSE 8080

---> Using cache

---> cf92d174c9d3

Step 3/4 : COPY uptimer.py app.py

---> 3eca6a51cb2d

Step 4/4 : CMD [ "python", "-u", "./app.py" ]

---> Running in 8f13c6d3d9e7

Removing intermediate container 8f13c6d3d9e7

---> 1d56897841ec

Successfully built 1d56897841ec

Successfully tagged uptimer:latest

$ docker run --detach --rm --name uptimer_BLUE --network web-gateway uptimer

96932d4ca97a25b1b42d1b5f0ede993b43f95fac3c064262c5c527e16c119e02

$ docker logs uptimer_BLUE

Uptimer v2.0 (loads in 5 sec.) started.

$ docker run --rm --network web-gateway alpine wget -qO- http://uptimer_BLUE:8080

<h2>Uptimer v2.0 is running for 23.9 seconds.</h2>

$ sed s/uptimer/uptimer_BLUE/ uptimer.conf | docker exec --interactive reverse-proxy sh -c 'cat > /etc/nginx/conf.d/default.conf'

$ docker exec reverse-proxy cat /etc/nginx/conf.d/default.conf

server {

listen 80;

location / {

proxy_pass http://uptimer_BLUE:8080;

}

}

$ docker exec reverse-proxy nginx -s reload

2020/06/25 21:22:23 [notice] 68#68: signal process started

$ wget -qO- http://localhost

<h2>Uptimer v2.0 is running for 63.4 seconds.</h2>

$ docker rm -f uptimer

uptimer

$ wget -qO- http://localhost

<h2>Uptimer v2.0 is running for 84.8 seconds.</h2>

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

96932d4ca97a uptimer "python -u ./app.py" About a minute ago Up About a minute 8080/tcp uptimer_BLUE

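80695a822c19 nginx:alpine "/docker-entrypoint.…" 8 minutes ago Up 8 minutes 0.0.0.0:80->80/tcp reverse-proxy

At this stage the image is built directly on the server, which requires the application sources to be present there and loads the server with extra work. The next step is to move the image build to a separate machine (for example, a CI system) and then transfer the image to the server.

Unfortunately, transferring an image from localhost to localhost makes no sense, so this part can only be tried with two Docker hosts at hand. In its minimal form it looks like this: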

$ ssh production-server docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

$ docker image save uptimer | ssh production-server 'docker image load'

Loaded image: uptimer:latest

$ ssh production-server docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

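uptimer latest 1d56897841ec 5 minutes ago 78.9MB

The docker save command stores the image data as a .tar archive, which means it weighs about 1.5 times more than it would compressed. So let's compress it to save time and traffic: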

$ docker image save uptimer | gzip | ssh production-server 'zcat | docker image load'

Loaded image: uptimer:latest

You can also watch the transfer progress (this needs a third-party utility, pv):

$ docker image save uptimer | gzip | pv | ssh production-server 'zcat | docker image load'

25,7MiB 0:01:01 [ 425KiB/s] [ <=> ]

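Loaded image: uptimer:latest

Tip: if you need a pile of parameters to connect to the server over SSH, you may not be using the ~/.ssh/config file.

Transferring an image via docker image save/load is the most minimalistic method, but not the only one. There are others:

- Container Registry (the industry standard).
- Connecting to the server's docker daemon from another host:
  - the DOCKER_HOST environment variable;
  - the -H or --host command-line option of docker-compose;
  - docker context.

The second method (in all its variants) is well described in the article How to deploy on remote Docker hosts with docker-compose.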

deploy.sh

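Now let's gather everything we did by hand into one script. We will start with the top-level function and then look at the others it uses.

Interesting techniques

- ${parameter?err_msg}: one of the bash magic spells (aka parameter substitution). If parameter is not set, it prints err_msg and exits with code 1.
- docker --log-driver journald: by default the docker logging driver is a text file with no rotation at all. With that approach the logs quickly fill the whole disk, so in a production environment the driver should be changed to a smarter one.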
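To get a feel for ${parameter?err_msg} in isolation, here is a minimal sketch. The greet function is hypothetical and exists only for this illustration. The failing call is wrapped in a subshell because a failed expansion terminates a non-interactive shell, and we want the surrounding script to survive.

```shell
# Hypothetical helper (not part of deploy.sh): it requires one argument.
greet() {
    local name=${1?"Usage: greet name"}
    echo "Hello, $name!"
}

# Normal call: the parameter is set, so the expansion just yields its value.
greet world

# Call without arguments: the expansion fails, the usage message goes to
# stderr and the (sub)shell exits with a non-zero status.
if ! ( greet ) 2> /dev/null; then
    echo "greet without arguments failed, as intended"
fi
```

Note that the whole shell exits, not just the function, which is why such guards suit scripts better than interactive sessions.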

deploy() {
    local image_name=${1?"Usage: ${FUNCNAME[0]} image_name"}

    ensure-reverse-proxy || return 2

    if get-active-slot "$image_name"
    then
        local OLD=${image_name}_BLUE
        local new_slot=GREEN
    else
        local OLD=${image_name}_GREEN
        local new_slot=BLUE
    fi
    local NEW=${image_name}_${new_slot}

    echo "Deploying '$NEW' in place of '$OLD'..."
    docker run \
        --detach \
        --restart always \
        --log-driver journald \
        --name "$NEW" \
        --network web-gateway \
        "$image_name" || return 3

    echo "Container started. Checking health..."
    for i in {1..20}
    do
        sleep 1
        if get-service-status "$image_name" "$new_slot"
        then
            echo "New '$NEW' service seems OK. Switching heads..."
            sleep 2  # Ensure service is ready
            set-active-slot "$image_name" "$new_slot" || return 4
            echo "The '$NEW' service is live!"
            sleep 2  # Ensure all requests were processed
            echo "Killing '$OLD'..."
            docker rm -f "$OLD"
            docker image prune -f
            echo "Deployment successful!"
            return 0
        fi
        echo "New '$NEW' service is not ready yet. Waiting ($i)..."
    done

    echo "New '$NEW' service did not raise, killing it. Failed to deploy T_T"
    docker rm -f "$NEW"
    return 5

}

Functions used:

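- ensure-reverse-proxy: makes sure the reverse proxy is running (useful for the first deployment)
- get-active-slot service_name: determines which slot is currently active for the given service (BLUE or GREEN)
- get-service-status service_name deployment_slot: determines whether the service is ready to handle incoming requests
- set-active-slot service_name deployment_slot: changes the nginx config in the reverse-proxy container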
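The "start, poll, then switch or roll back" logic of deploy can be tried without docker. The sketch below is an illustration under assumptions: wait_until_ready and fake_check are hypothetical names that do not appear in deploy.sh, and the fake health check simply succeeds on its third call.

```shell
# Hypothetical helper: run a check command up to MAX_ATTEMPTS times,
# pausing DELAY seconds after each failure.
# Usage: wait_until_ready MAX_ATTEMPTS DELAY COMMAND [ARGS...]
wait_until_ready() {
    local max_attempts=$1 delay=$2
    shift 2
    local attempt=1
    while [ "$attempt" -le "$max_attempts" ]; do
        if "$@"; then
            return 0    # the service answered; the caller may switch traffic
        fi
        echo "Not ready yet. Waiting ($attempt)..."
        sleep "$delay"
        attempt=$((attempt + 1))
    done
    return 1            # the caller should remove the new container
}

# Stand-in for a real health check: fails twice, then succeeds.
calls=0
fake_check() {
    calls=$((calls + 1))
    [ "$calls" -ge 3 ]
}

if wait_until_ready 5 0 fake_check; then
    echo "Ready after $calls checks"
else
    echo "Gave up after $calls checks"
fi
```

Keeping the check as an ordinary command that reports through its exit code is what lets the same loop wrap any health check.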

In order:

ensure-reverse-proxy() {
    is-container-up reverse-proxy && return 0

    echo "Deploying reverse-proxy..."
    docker network create web-gateway
    docker run \
        --detach \
        --restart always \
        --log-driver journald \
        --name reverse-proxy \
        --network web-gateway \
        --publish 80:80 \
        nginx:alpine || return 1

    # Empty the default config so that only per-service configs are served
    docker exec --interactive reverse-proxy sh -c "> /etc/nginx/conf.d/default.conf"
    docker exec reverse-proxy nginx -s reload
}

is-container-up() {
    local container=${1?"Usage: ${FUNCNAME[0]} container_name"}

    [ -n "$(docker ps -f name=${container} -q)" ]
    return $?
}

get-active-slot() {
    local image=${1?"Usage: ${FUNCNAME[0]} image_name"}

    if is-container-up ${image}_BLUE && is-container-up ${image}_GREEN; then
        echo "Collision detected! Stopping ${image}_GREEN..."
        docker rm -f ${image}_GREEN
        return 0  # BLUE
    fi
    if is-container-up ${image}_BLUE && ! is-container-up ${image}_GREEN; then
        return 0  # BLUE
    fi
    if ! is-container-up ${image}_BLUE; then
        return 1  # GREEN
    fi
}

get-service-status() {
    local usage_msg="Usage: ${FUNCNAME[0]} image_name deployment_slot"
    local image=${1?$usage_msg}
    local slot=${2?$usage_msg}

    case $image in
        # Add specific healthcheck paths for your services here
        *) local health_check_port_path=":8080/" ;;
    esac
    local health_check_address="http://${image}_${slot}${health_check_port_path}"

    echo "Requesting '$health_check_address' within the 'web-gateway' docker network:"
    docker run --rm --network web-gateway alpine \
        wget --timeout=1 --quiet --server-response $health_check_address
    return $?
}

set-active-slot() {
    local usage_msg="Usage: ${FUNCNAME[0]} service_name deployment_slot"
    local service=${1?$usage_msg}
    local slot=${2?$usage_msg}
    [ "$slot" == BLUE ] || [ "$slot" == GREEN ] || return 1

    get-nginx-config $service $slot | docker exec --interactive reverse-proxy sh -c "cat > /etc/nginx/conf.d/$service.conf"
    docker exec reverse-proxy nginx -t || return 2
    docker exec reverse-proxy nginx -s reload

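}

The get-active-slot function needs a little explanation:

Why does it return a number instead of printing a string?

The calling function checks the result of its work anyway, and checking an exit code in bash is much easier than checking a string. Besides, getting a string out of it is very simple:

get-active-slot service && echo BLUE || echo GREEN

Are three conditions really enough to distinguish all the states?

Even two would be enough; the last one is there only for completeness, so as not to write else.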
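The exit-code convention is easy to check against all four container states. The following is only a sketch: is_container_up is a hypothetical stub that reads a list of names from the running variable instead of calling docker, and blue_is_active reproduces just the decision of get-active-slot (without removing the colliding GREEN container).

```shell
# Hypothetical stub: "running" holds space-separated names of live containers.
running=""
is_container_up() {
    case " $running " in
        *" $1 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Exit code 0 means BLUE is active, 1 means GREEN. All three branches of
# get-active-slot depend only on whether BLUE is up, so one test covers them.
blue_is_active() {
    is_container_up "${1}_BLUE"
}

# Turning the exit code into a string, as described in the text.
slot_name() {
    blue_is_active "$1" && echo BLUE || echo GREEN
}

for running in "" "uptimer_BLUE" "uptimer_GREEN" "uptimer_BLUE uptimer_GREEN"; do
    printf 'running: [%s] -> %s\n' "$running" "$(slot_name uptimer)"
done
```

The loop prints the slot chosen for each state, and confirms that GREEN is reported only when BLUE is not running.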

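Now, about generating nginx configs: get-nginx-config service_name deployment_slot. By analogy with the health check, you can set any config for any service here. The only interesting bit is cat <<- EOF, which strips all leading tabs. The price of tidy formatting, though, is mixed tabs and spaces, which is considered very bad form these days. But bash insists on tabs, and it would be nice to keep proper formatting in the nginx config too. In short, mixing tabs with spaces here really does look like the best of the bad options. However, you will not see it in the snippet below: tabs get replaced with 4 spaces there, which would make the EOF terminator invalid.

While we are at it, a word about cat << 'EOF', which will come up later. If you write just cat << EOF, the shell interpolates the heredoc body (variables ($foo) are expanded, command calls ($(bar)) are executed, and so on), whereas if you put the end-of-document marker in single quotes, interpolation is disabled and the $ character is output as is. That is exactly what is needed to embed one script inside another.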
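The difference between the two heredoc forms can be seen side by side. This is a small sketch: the upstream variable and the proxy_set_header line are assumptions added for illustration and are not part of the article's nginx config.

```shell
upstream=uptimer_BLUE:8080

# Unquoted delimiter: the shell expands $upstream, so an nginx variable
# such as $host has to be escaped as \$host to survive.
expanded=$(cat << EOF
proxy_pass http://$upstream;
proxy_set_header Host \$host;
EOF
)

# Quoted delimiter: the body is taken literally, nothing is expanded.
literal=$(cat << 'EOF'
proxy_pass http://$upstream;
EOF
)

echo "$expanded"
echo "$literal"
```

The first form suits config templates that need shell values substituted; the second suits embedding a script that must reach its destination untouched.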

get-nginx-config() {
    local usage_msg="Usage: ${FUNCNAME[0]} image_name deployment_slot"
    local image=${1?$usage_msg}
    local slot=${2?$usage_msg}
    [ "$slot" == BLUE ] || [ "$slot" == GREEN ] || return 1

    local container_name=${image}_${slot}
    case $image in
        # Add specific nginx configs for your services here
        *) nginx-config-simple-service $container_name:8080 ;;
    esac
}

nginx-config-simple-service() {
    local usage_msg="Usage: ${FUNCNAME[0]} proxy_pass"
    local proxy_pass=${1?$usage_msg}

cat << EOF
server {
    listen 80;
    location / {
        proxy_pass http://$proxy_pass;
    }
}
EOF

}

That is the whole script. Now it is time to reach the target server; this time localhost will do just fine:

$ ssh-copy-id localhost

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

himura@localhost's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'localhost'"

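and check to make sure that only the key(s) you wanted were added.

We have written a deploy function that takes an image already uploaded to the target server and seamlessly replaces the service container, but how do we run it on a remote machine? Obviously the command must take arguments, since the deploy function is universal and can deploy several services behind one reverse proxy (nginx configs decide which url goes to which service). The script cannot be stored on the server, because then we could not update it automatically (for bug fixes and for adding new services), and in general, state = evil.

Solution 1: still keep the script on the server, but copy it there every time via scp. Then connect over ssh and run the script with the required arguments.

Cons:

- There may be no place to copy it to, or no access to that place, or the script may be running at the moment it is replaced.
- It is advisable to clean up afterwards (delete the script).
- That is already three actions.

Solution 2:

- Keep only function definitions in the script and run nothing in it.
- Use sed to append a function call to the end.
- Send all of it straight to ssh through a pipe (|).

Pros:

- Truly stateless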

- No boilerplate entities

- Feeling cool
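The mechanics of solution 2 can be tried without any remote host. In the sketch below the script is piped into a local sh instead of ssh, which is an assumption made purely so that the example runs anywhere; run_locally is a hypothetical name, and the one-line form of the sed a command relies on GNU sed.

```shell
# Hypothetical wrapper: feeds function definitions to sed, which appends
# a call to deploy as the last line, and pipes the result into a shell.
run_locally() {
    ( sed "\$a deploy $1" | sh ) << 'END_OF_SCRIPT'
deploy() {
    echo "The deploy function received '$1'"
}
END_OF_SCRIPT
}

run_locally magic-porridge-pot
```

The quoted END_OF_SCRIPT marker keeps $1 inside the heredoc from being expanded by the sending shell, so it is resolved only by the shell that finally executes the script.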

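Let's just do it without Ansible. Yes, everything has already been invented. Yes, it is reinventing the wheel. But look how simple, elegant and minimalistic the wheel is: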

$ cat << 'EOF' > deploy.sh
#!/bin/bash

usage_msg="Usage: $0 ssh_address local_image_tag"
ssh_address=${1?$usage_msg}
image_name=${2?$usage_msg}

echo "Connecting to '$ssh_address' via ssh to seamlessly deploy '$image_name'..."

( sed "\$a deploy $image_name" | ssh -T "$ssh_address" ) << 'END_OF_SCRIPT'
deploy() {
    echo "Yay! The '${FUNCNAME[0]}' function is executing on '$(hostname)' with argument '$1'"
}
END_OF_SCRIPT
EOF

$ chmod +x deploy.sh

$ ./deploy.sh localhost magic-porridge-pot

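Connecting to 'localhost' via ssh to seamlessly deploy 'magic-porridge-pot'...

Yay! The 'deploy' function is executing on 'hut' with argument 'magic-porridge-pot'

However, we cannot be sure that the remote host has a proper bash, so let's add a small check at the beginning (this replaces the shebang):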

if [ "$SHELL" != "/bin/bash" ]

then

echo "The '$SHELL' shell is not supported by 'deploy.sh'. Set a '/bin/bash' shell for '$USER@$HOSTNAME'."

exit 1

fi

And now for real:

$ docker exec reverse-proxy rm /etc/nginx/conf.d/default.conf

$ wget -qO deploy.sh https://git.io/JUc2s

$ chmod +x deploy.sh

$ ./deploy.sh localhost uptimer

Sending gzipped image 'uptimer' to 'localhost' via ssh...

Loaded image: uptimer:latest

Connecting to 'localhost' via ssh to seamlessly deploy 'uptimer'...

Deploying 'uptimer_GREEN' in place of 'uptimer_BLUE'...

06f5bc70e9c4f930e7b1f826ae2ca2f536023cc01e82c2b97b2c84d68048b18a

Container started. Checking health...

Requesting 'http://uptimer_GREEN:8080/' within the 'web-gateway' docker network:

HTTP/1.0 503 Service Unavailable

wget: server returned error: HTTP/1.0 503 Service Unavailable

New 'uptimer_GREEN' service is not ready yet. Waiting (1)...

Requesting 'http://uptimer_GREEN:8080/' within the 'web-gateway' docker network:

HTTP/1.0 503 Service Unavailable

wget: server returned error: HTTP/1.0 503 Service Unavailable

New 'uptimer_GREEN' service is not ready yet. Waiting (2)...

Requesting 'http://uptimer_GREEN:8080/' within the 'web-gateway' docker network:

HTTP/1.0 200 OK

Server: BaseHTTP/0.6 Python/3.8.3

Date: Sat, 22 Aug 2020 20:15:50 GMT

Content-Type: text/html

New 'uptimer_GREEN' service seems OK. Switching heads...

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

2020/08/22 20:15:54 [notice] 97#97: signal process started

The 'uptimer_GREEN' service is live!

Killing 'uptimer_BLUE'...

uptimer_BLUE

Total reclaimed space: 0B

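Deployment successful!

Now you can open http://localhost/ in a browser, run the deployment again, and check that it goes seamlessly by refreshing the page during the rollout.

Don't forget to clean up after work :3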

$ docker rm -f uptimer_GREEN reverse-proxy

uptimer_GREEN

reverse-proxy

$ docker network rm web-gateway

web-gateway

$ cd ..

$ rm -r blue-green-deployment

Disclaimer: There are, of course, Docker Swarm Mode and many powerful orchestrators with tons of ready-made seamless deployment strategies. But ready-made tools are never a panacea. This script was born not only out of love for bash scripting, but also because a long time ago, in a galaxy far, far away, it turned out to be easier to write than to adopt an orchestrator. Plus, it can easily be modified to suit the specific needs of specific applications.