A new chapter has been written in the story of machines defeating humans: an AI has once again beaten a human pilot in virtual aerial combat. The AlphaDogfight competition was the final test of neural network algorithms developed for the US military, and the best demonstration of the capabilities of intelligent autonomous agents that can defeat enemy aircraft in aerial combat. Cloud4Y has the details.

This isn't the first time an AI has defeated a human pilot. Tests in 2016 showed that an artificial intelligence system can beat an experienced combat flight instructor. But Thursday's DARPA simulation was arguably more meaningful, as it pitted many algorithms against each other and then against humans in challenging environments. In addition to integrating AI into combat vehicles to enhance their combat capability, simulations like these can also help train human pilots.

Start

Last August, the Defense Advanced Research Projects Agency (DARPA) selected eight teams to participate in a series of tests: Aurora Flight Sciences, EpiSys Science, Georgia Tech Research Institute, Heron Systems, Lockheed Martin, Perspecta Labs, PhysicsAI and SoarTech. As you can see, the list includes small companies such as Heron Systems alongside large defense contractors such as Lockheed Martin.

The goal of the program was to create AI systems for combat drones and for unmanned wingmen that cover manned fighters. Scientists and the military expect that AI will be able to conduct aerial combat faster and more efficiently than humans, reducing the burden on the pilot and freeing up time for important tactical decisions within a larger combat mission.

The first phase of the AlphaDogfight Trials was held in November 2019 at the Applied Physics Laboratory of Johns Hopkins University. In it, neural network algorithms created by the different teams fought air battles against the Red artificial intelligence system, created by DARPA specialists. These battles were fought one-on-one at a low difficulty level. The second phase took place in January 2020 and differed from the first in its increased complexity. The final phase, held on August 20, 2020, could be watched live on the DARPA YouTube channel.

The tests were carried out in the FlightGear aircraft simulator using the JSBSim flight dynamics software model. In the first two stages, neural network algorithms controlled the F-15C Eagle heavy fighters, and in the third, the medium F-16 Fighting Falcon.

How a machine defeated a man

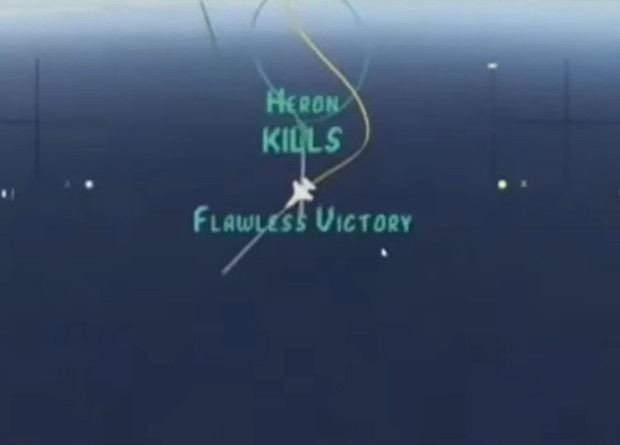

In the third phase of testing, the neural network algorithms first fought air battles against each other. Air battles were fought at close range using only cannon armament. The overall winner was the system created by Heron Systems.

Heron Systems' algorithm then fought a dogfight against an experienced US Air Force fighter pilot and instructor, callsign Banger. Five battles were fought in total, and the AI won all of them. "The standard air combat techniques that fighter pilots learn did not work," the pilot admitted after losing to the machine. But in the last rounds, the man was able to hold out longer.

The reason is that the AIs could not learn from their own experience during the trials themselves. By the fifth and final round, the human pilot had significantly changed his tactics, which allowed him to hold out much longer. However, even an experienced pilot could not adapt quickly enough, and that led to his defeat.

Another winner of the trials is deep reinforcement learning, in which algorithms attempt a problem in a virtual environment over and over, sometimes very quickly, until they develop something like understanding. Heron Systems used reinforcement learning to train its neural network, although the developers have not disclosed what type of network they used. During training, the network ran four billion simulations.
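To make the trial-and-error idea concrete, here is a minimal sketch of reinforcement learning on a toy problem. It is not Heron Systems' method: it uses tabular Q-learning instead of a neural network, and the "environment" is a hypothetical one-dimensional chase in which an agent has to close the distance to a target at the far end of a line. All names and parameters (`train_q_table`, `alpha`, `gamma`, `epsilon`) are assumptions made for illustration.

```python
import random


def train_q_table(n_states=6, episodes=500, alpha=0.5, gamma=0.9,
                  epsilon=0.1, seed=0):
    """Learn action values for a toy 1-D chase by repeated simulation.

    States are positions 0..n_states-1; the target sits at the last one.
    Action 0 moves away from the target, action 1 moves toward it.
    """
    rng = random.Random(seed)
    moves = (-1, +1)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]

    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Explore occasionally, otherwise act greedily (ties go to action 1).
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1

            next_state = min(max(state + moves[action], 0), goal)
            reward = 1.0 if next_state == goal else 0.0

            # Q-learning update: nudge the estimate toward
            # reward + discounted value of the best next action.
            future = 0.0 if next_state == goal else gamma * max(q[next_state])
            q[state][action] += alpha * (reward + future - q[state][action])

            state = next_state
            if state == goal:
                break
    return q


def greedy_policy(q):
    """Pick the higher-valued action in every state (ties go to action 1)."""
    return [0 if away > toward else 1 for away, toward in q]


if __name__ == "__main__":
    table = train_q_table()
    print(greedy_policy(table))
```

The agent starts out knowing nothing and only receives a reward when it reaches the target. After enough repetitions, the value of "move toward the target" in each position settles near `gamma` raised to the number of steps still remaining after that move, and the greedy policy heads straight for the target. The systems in the trials faced a vastly larger state space, which is why they relied on neural networks and billions of simulations instead of a small table.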

Second place in the virtual aerial combat went to an algorithm developed by Lockheed Martin. It too was prepared by training a neural network with reinforcement learning.

Few details

Lee Ritholtz, director and chief architect of artificial intelligence at Lockheed Martin, told reporters after the trials that getting an algorithm to perform well in aerial combat is very different from teaching software simply to "fly", that is, to maintain a given heading, altitude and speed.

There is no doubt that AI can learn, and very quickly. Using local or cloud resources to simulate air battles, it can repeat the lesson over and over on multiple machines. Lockheed, like several other teams, had a fighter pilot on its team. It could also run training on 25 DGX-1 servers at the same time, yet the model it ended up producing could run on a single GPU. For comparison, Ben Bell, senior machine learning engineer at Heron Systems, said after the victory that their algorithm went through at least 4 billion simulations and gained about 12 years of experience.
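The two Heron Systems figures can be set against each other with simple arithmetic. The sketch below assumes that "12 years of experience" means simulated flight time and that a year has 365 days; neither assumption comes from the source, and the function name is hypothetical.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # assumes a 365-day year


def seconds_per_simulation(years_of_experience, simulations):
    """Average simulated time covered by one simulation, in seconds."""
    return years_of_experience * SECONDS_PER_YEAR / simulations


if __name__ == "__main__":
    # Figures quoted in the article: about 12 years, at least 4 billion simulations.
    print(seconds_per_simulation(12, 4_000_000_000))
```

Under these assumptions, each "simulation" covers slightly less than a tenth of a second of flight. If the assumptions hold, this suggests the 4 billion figure counts individual simulation steps, not complete dogfights, but the source does not say so.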

In the end, DARPA congratulated the startup Heron Systems on its victory. Its algorithm managed to outperform those developed by larger companies such as Lockheed Martin.
