When starting out with Kubernetes, it is common to forget about configuring container resources. At this point, you just need to make sure that the Docker image is working and can be deployed to your Kubernetes cluster.

Later, though, the application has to be deployed to a production cluster alongside other applications. For that you need to allocate resources for the container, making sure there are enough for the application to start and run without causing problems for the other running applications.

The Kubernetes aaS team from Mail.ru has translated an article on container resources (CPU & MEM), requests and resource limits. You will learn what these settings are good for and what happens if you do not set them.

Computing resources

We have two types of resources with the following units:

- Central processing unit (CPU) - cores;

- Memory (MEM) - bytes.

Resources are specified for each container. The Pod-level values are sums over its containers:

- Requested Pod resources = the sum of the requested resources of all containers;

- Pod resource limits = the sum of the resource limits of all containers.

In the following Pod YAML file, each container has a resources section that contains its requests and limits:

    apiVersion: v1
    kind: Pod
    metadata:
      name: backend-pod-name
      labels:
        application: backend
    spec:
      containers:
        - name: main-container
          image: my-backend:v1
          ports:
            - containerPort: 8080
          resources:
            requests:
              cpu: 0.2          # REQUESTED CPU: 200m cores
              memory: "1Gi"     # REQUESTED MEM: 1Gi
            limits:
              cpu: 1            # MAX CPU USAGE: 1 core
              memory: "1Gi"     # MAX MEM USAGE: 1Gi
        - name: other-container
          image: other-app:v1
          ports:
            - containerPort: 8000
          resources:
            requests:
              cpu: "200m"       # REQUESTED CPU: 200m cores
              memory: "0.5Gi"   # REQUESTED MEM: 0.5Gi
            limits:
              cpu: 1            # MAX CPU USAGE: 1 core
              memory: "1Gi"     # MAX MEM USAGE: 1Gi

The `resources.requests` field from the Pod specification is one of the inputs used to find a suitable node, onto which the Pod can then be scheduled. How is a suitable node found?

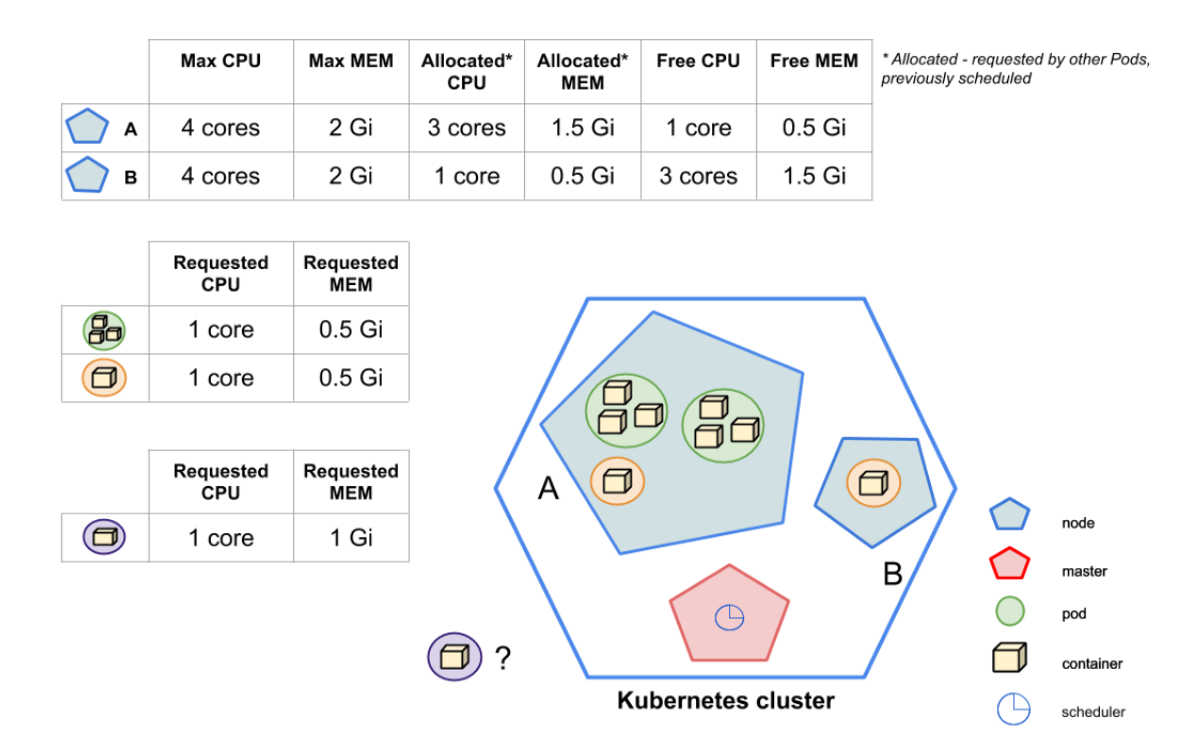

Kubernetes consists of several components, including the master node (the Kubernetes Control Plane). The master node runs several processes: kube-apiserver, kube-controller-manager, and kube-scheduler.

The kube-scheduler process watches for newly created Pods and looks for worker nodes that satisfy all of a Pod's requirements, including the amount of requested resources. The list of nodes found by kube-scheduler is ranked, and the Pod is scheduled onto the node with the highest score.

The picture shows that kube-scheduler has to schedule a new purple Pod. The Kubernetes cluster contains two nodes: A and B. As you can see, kube-scheduler cannot schedule the Pod onto node A, because the available (unrequested) resources there do not cover the purple Pod's requests. For example, the 1 GB of memory requested by the purple Pod will not fit on node A, since only 0.5 GB is available. Node B, however, has enough resources. As a result, kube-scheduler decides that the purple Pod goes to node B.
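The filtering step described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the real kube-scheduler applies many more filters and then scores the remaining nodes. The `Resources` class, both function names and all the numbers are hypothetical and merely mimic the picture.

```python
from dataclasses import dataclass


@dataclass
class Resources:
    cpu_millicores: int
    memory_mib: int


def pod_requests(containers):
    """Sum the per-container requests into the Pod-level request."""
    return Resources(
        cpu_millicores=sum(c.cpu_millicores for c in containers),
        memory_mib=sum(c.memory_mib for c in containers),
    )


def feasible_nodes(free_by_node, requested):
    """Return the nodes whose unrequested resources cover the Pod's requests."""
    return [
        name
        for name, free in free_by_node.items()
        if free.cpu_millicores >= requested.cpu_millicores
        and free.memory_mib >= requested.memory_mib
    ]


# Hypothetical purple Pod: two containers requesting 1 GiB of memory in total.
purple_pod = pod_requests([Resources(500, 512), Resources(500, 512)])

# Hypothetical unrequested resources on each node.
free = {"A": Resources(1000, 512), "B": Resources(2000, 2048)}

print(feasible_nodes(free, purple_pod))  # ['B']
```

Node A is rejected on memory alone, even though it has enough CPU: every requested resource has to fit.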

Now we know how requested resources affect the choice of node for a Pod. But what effect do limits have?

A resource limit is a boundary that CPU or memory usage cannot cross. CPU is a compressible resource, however, so a container that hits its CPU limit does not cause the Pod to shut down; CPU throttling starts instead. If the memory limit is reached, the container is stopped by the OOM killer and restarted, if the RestartPolicy setting allows it.

Requested and limit resources in detail

The best way to explain how requests and limits work is to show the relationship between Kubernetes and Docker. The picture shows how Kubernetes fields and Docker launch flags are related.

Memory: Request and Limit

    containers:
      ...
        resources:
          requests:
            memory: "0.5Gi"
          limits:
            memory: "1Gi"

As mentioned above, memory is measured in bytes. According to the Kubernetes documentation, memory can be specified as a plain number. Usually it is an integer, for example 2678, meaning 2678 bytes. You can also use the suffixes `G` and `Gi`; just remember that they are not equivalent: the first is decimal and the second is binary. As an example from the Kubernetes documentation, `128974848`, `129e6`, `129M` and `123Mi` are practically equivalent.
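To see why those four values are nearly the same, and why `G` and `Gi` are not, here is a minimal Python sketch that converts a memory quantity to bytes. The function `memory_bytes` is a hypothetical helper written for this article, not part of any Kubernetes client, and it handles only a subset of the suffixes Kubernetes accepts.

```python
# Decimal (power-of-ten) and binary (power-of-two) suffixes; subset only.
DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def memory_bytes(quantity: str) -> int:
    """Convert a quantity such as '129M' or '123Mi' to a number of bytes."""
    # Binary suffixes are two characters long, so check them first.
    for suffix, factor in BINARY.items():
        if quantity.endswith(suffix):
            return int(float(quantity[: -len(suffix)]) * factor)
    for suffix, factor in DECIMAL.items():
        if quantity.endswith(suffix):
            return int(float(quantity[: -len(suffix)]) * factor)
    # No suffix: a plain number of bytes, possibly in exponent form.
    return int(float(quantity))


for q in ["128974848", "129e6", "129M", "123Mi", "1G", "1Gi"]:
    print(q, "=", memory_bytes(q), "bytes")
```

`123Mi` comes out at exactly 128974848 bytes, while `129M` and `129e6` are both 129000000 bytes. The gap between `1G` and `1Gi` is larger: about 74 million bytes.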

The Kubernetes parameter `limits.memory` corresponds to Docker's `--memory` flag. For `requests.memory` there is no arrow to Docker, because Docker does not use this field. You may ask whether it is needed at all. Yes, it is. As mentioned, the field matters to Kubernetes: based on it, kube-scheduler decides which node to schedule the Pod on.

What happens if the memory request is set too low?

If the container uses more memory than it requested, the Pod joins the group of Pods that are stopped first when the node runs out of memory.

What happens if you set the memory limit too low?

If the container exceeds its memory limit, it is terminated with the status OOMKilled. It is then restarted if the RestartPolicy allows, and the default policy is `Always`.

What happens if you do not specify the requested memory?

Kubernetes will take the limit and use it as the request value.

What can happen if you don't specify the memory limit?

The container has no limit and can use as much memory as it wants. If it starts using all the available memory of the node, the OOM killer will terminate it. The container will then be restarted if the RestartPolicy allows.

What happens if you specify neither the memory request nor the memory limit?

This is the worst-case scenario: the scheduler does not know how many resources the container needs, and this can cause serious problems on the node. In this case it helps to have default constraints on the namespace (set by a LimitRange). Without them there are no limits by default: the Pod can use as much memory as it wants.

If the requested memory is more than the node can offer, the Pod will not be scheduled. It is important to remember that `requests.memory` is not a minimum value. It describes the amount of memory that is sufficient for the container to run continuously.

It is usually recommended to set the same value for `requests.memory` and `limits.memory`. This prevents Kubernetes from scheduling a Pod on a node that has enough memory to start the Pod but not enough to keep it running. Keep in mind: when scheduling a Pod, Kubernetes only takes `requests.memory` into account; `limits.memory` is not considered.

CPU: Request and Limit

    containers:
      ...
        resources:
          requests:
            cpu: 1
          limits:
            cpu: "1200m"

With the CPU, everything is a little more complicated. Going back to the picture of the relationship between Kubernetes and Docker, you can see that `requests.cpu` corresponds to `--cpu-shares`, while `limits.cpu` corresponds to Docker's `--cpus` flag.

The CPU value requested in Kubernetes is multiplied by 1024 to obtain the share (proportion) of CPU cycles. To request one full core, set `cpu: 1` as shown above.

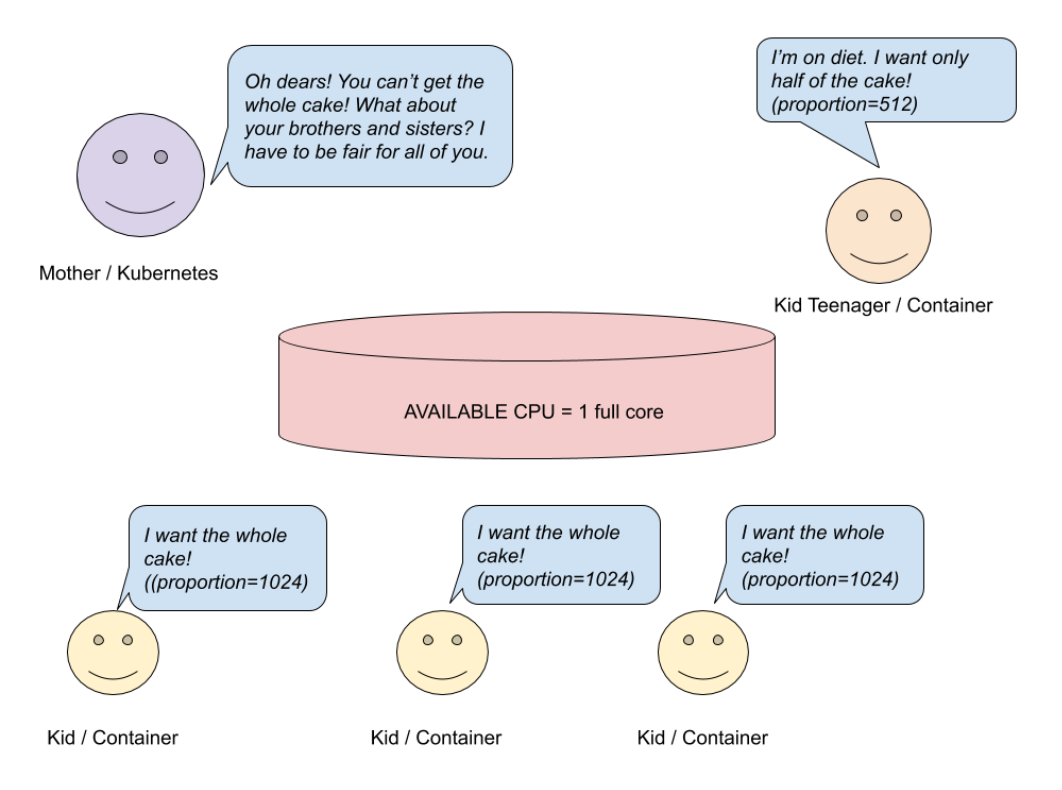

Requesting a full core (proportion = 1024) does not mean your container will receive it. If your host machine has only one core and you are running more than one container, then all containers must share the available CPU among themselves. How does this happen? Let's take a look at the picture.

CPU Request - Single Core System

Let's say you have a single core host system running containers. Mom (Kubernetes) has baked a cake (CPU) and wants to share it among children (containers). Three children want a whole cake (proportion = 1024), another child wants half of the cake (512). Mom wants to be fair and does a simple calculation.

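    # How many cakes do the children want?
    # 3 children want a whole cake each and one wants half a cake
    cakesNumberKidsWant = (3 * 1) + (1 * 0.5) = 3.5

    # The expression breaks down as:
    # 3 (children/containers) * 1 (whole cake/full core) + 1 (child/container) * 0.5 (half a cake/half a core)

    # How many cakes have been baked?
    availableCakesNumber = 1

    # How much cake (at most) can a child who asked for a whole one really get?
    newMaxRequest = 1 / 3.5 =~ 28%

Based on this calculation, three children will each receive 28% of the core rather than the whole core. The fourth child will get 14% of the core, not half. But things are different on a multi-core system.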
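The same single-core calculation can be run as Python. This is a simplified sketch that assumes every container is busy at the same time and that the CPU is divided strictly in proportion to the shares. The function names are hypothetical, and the 1024-per-core conversion follows the description above.

```python
def cpu_shares(request_millicores: int) -> int:
    """Convert a CPU request in millicores to shares (1 core = 1024 shares)."""
    return max(2, request_millicores * 1024 // 1000)


def contended_fractions(requests_millicores):
    """Fraction of the available CPU each container gets when all are busy."""
    shares = [cpu_shares(m) for m in requests_millicores]
    total = sum(shares)
    return [s / total for s in shares]


# Three children want a whole cake (1000m), one wants half (500m).
kids = [1000, 1000, 1000, 500]
print([cpu_shares(m) for m in kids])                            # [1024, 1024, 1024, 512]
print([round(f * 100, 1) for f in contended_fractions(kids)])   # [28.6, 28.6, 28.6, 14.3]
```

The percentages match the cake arithmetic: about 28% for each full request and about 14% for the half request. When some containers are idle, the busy ones can use more than these fractions.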

CPU request - multi-core (4) system

In the image above, you can see that three kids want a whole pie and one wants half. Since Mom has baked four cakes, each of her children will get as much as they want. In a multi-core system, processor resources are spread across all available processor cores. If a container is limited to less than one full CPU core, it can still use it 100%.

The above calculations have been simplified to understand how the CPU is allocated between containers. Of course, besides the containers themselves, there are other processes that also use CPU resources. When processes in one container are idle, others can use its resource.

`cpu: "200m"` is equivalent to `cpu: 0.2`, which means approximately 20% of one core.

Now let's talk about `limits.cpu`. The CPU limit set in Kubernetes is multiplied by 100. The result is the amount of time, in milliseconds, that the container can use in every 100 ms (`cpu-period`).

`limits.cpu` corresponds to Docker's `--cpus` flag, a newer shorthand that combines the older `--cpu-period` and `--cpu-quota` flags. By setting it, we specify how much of the available CPU the container can use at most before throttling starts:

- cpus is a combination of `cpu-period` and `cpu-quota`. `cpus = 1.5` is equivalent to setting `cpu-period = 100000` and `cpu-quota = 150000`;

- cpu-period is the period of the CPU CFS scheduler, 100 milliseconds (100000 microseconds) by default;

- cpu-quota is the number of microseconds within each `cpu-period` that the container is limited to.
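The relationship between a CPU limit and the quota can be checked with a short Python sketch. The function name `cfs_quota_us` is hypothetical; the only assumption taken from the text is the default period of 100000 microseconds.

```python
DEFAULT_PERIOD_US = 100_000  # default CFS period: 100 ms


def cfs_quota_us(limit_millicores: int, period_us: int = DEFAULT_PERIOD_US) -> int:
    """Microseconds of CPU time a container may use within each period."""
    return limit_millicores * period_us // 1000


print(cfs_quota_us(1500))  # 150000, the same as cpus = 1.5
print(cfs_quota_us(1200))  # 120000, the limit "1200m" from the example above
print(cfs_quota_us(200))   # 20000, i.e. 20 ms of CPU time per 100 ms
```

A quota larger than the period, as with 1.5 CPUs, is only meaningful on a multi-core machine, where the container's threads can run on several cores within the same period.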

What happens if the CPU request is set too low?

If the container needs more CPU than it requested, it will take CPU time away from other processes.

What happens if you set the CPU limit too low?

Since the CPU is a compressible resource, throttling kicks in.

What happens if you don't specify a CPU request?

As with memory, the request value is equal to the limit.

What happens if you do not specify the CPU limit?

The container will use as much CPU as it needs. If a default CPU policy (LimitRange) is defined in the namespace, then this limit is used for the container as well.

What happens if you don't specify either the request or the CPU limit?

As with memory, this is the worst case scenario. The scheduler does not know how much resources your container needs, and this can cause serious problems on the node. To avoid this, you need to set the default limits for namespaces (LimitRange).

Remember, if you request more CPU than the nodes can supply, then the Pod will not be scheduled.

`requests.cpu` is not a minimum value, but a value sufficient for the Pod to start and run without failures. If your application does not perform complex calculations, the best option is to set `requests.cpu <= 1` and run as many replicas as needed.

Ideal amount of requested resources or resource limit

We have learned how computing resources are limited. Now it's time to answer the question: “How many resources does my Pod need to run the application without problems? What is the ideal amount?”

Unfortunately, there are no definite answers to these questions. If you don't know how your application is performing, how much CPU or memory it needs, the best option is to give the application a lot of memory and CPU and then run benchmarks.

In addition to performance tests, monitor the application's behavior for a week. If the graphs show that your application is consuming fewer resources than you requested, you can reduce the amount of CPU or memory requested.

See this Grafana dashboard as an example. It displays the difference between the requested resources or resource limits and the current resource usage.

Conclusion

Requesting and limiting resources helps keep your Kubernetes cluster healthy. Correctly configured limits minimize costs and keep applications running at all times.

In short, there are a few things to keep in mind:

- Requested resources are the configuration that is taken into account at startup (when Kubernetes decides where to place the application). Resource limits, in contrast, matter at runtime, when the application is already running on the node.

- Unlike memory, the CPU is a compressible resource. If there is not enough CPU, your Pod will not shut down; it will be throttled.

- Requested resources and resource limit are not minimum and maximum values! By identifying the resources requested, you ensure that your application will run smoothly.

- It is good practice to set the memory request equal to the memory limit.

- It is good practice to set the CPU request to `<= 1` if the application does not perform complex calculations.

- If you request more resources than the node has, then the Pod will never be scheduled onto that node.

- Use load testing and monitoring to determine the correct amount of requested resources / resource limits.

Hopefully this article helps you understand the basic concept of resource limiting. And you will be able to apply this knowledge in your work.

Good luck!
