Task: back up a site hosted on a VDS running CentOS 7 with 1C-Bitrix: Web Environment installed, and additionally back up the OS settings.

Requirements:

- Frequency: twice a day;

- Keep copies for the last 90 days;

- The ability to retrieve individual files as of a specific date when needed;

- The backup must be stored in a different data center from the VDS;

- The ability to access the backup from anywhere (another server, local computer, etc.).

An important consideration was creating backups quickly, with minimal extra disk space and system resources.

This is not about a snapshot for quickly restoring the whole system, but about the files, the database, and the history of their changes.

Initial data:

- VDS on Xen virtualization;

- CentOS 7 OS;

- 1C-Bitrix: Web environment;

- Site based on "1C-Bitrix: Site Management", Standard version;

- The site files take up 50 GB, and this will grow;

- The database is 3 GB and will grow.

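The standard backup built into 1C-Bitrix was ruled out immediately. It is only suitable for small sites, because:

- it creates a full archive on every run, which is impractical with 50 GB of files;

- it runs through PHP, which is poorly suited to archiving tens of gigabytes;

- keeping 90 days of twice-daily full copies would mean storing about 180 archives of 50 GB or more each.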

The hoster offers its own solution: a backup disk located in the same data center as the VDS, but on a different server. You can work with the disk over FTP using your own scripts or, if ISPManager is installed on the VDS, through its backup module. This option does not suit me because the disk is in the same data center.

Given all of the above, the best choice for me is an incremental backup, run by my own script, to Yandex.Cloud (Object Storage) or Amazon S3 (Amazon Simple Storage Service).

This requires:

- root access to the VDS;

- the duplicity utility installed;

- a Yandex.Cloud account.

Incremental backup is a method in which only data changed since the last backup are archived.
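To make the idea concrete, here is a minimal bash sketch of a naive, timestamp-based incremental selection: it lists the files under a directory that were modified after a marker file touched at the end of the previous run. The function name and the marker file are hypothetical, and this is only an illustration of the concept; duplicity itself keeps signature files from earlier backups and uploads rsync-style deltas against them.

```bash
#!/usr/bin/env bash
# Naive, timestamp-based incremental selection (illustration only).
# list_changed_since STAMP DIR prints the regular files under DIR whose
# modification time is newer than that of STAMP, a hypothetical marker file
# touched at the end of the previous run. STAMP should live outside DIR.
list_changed_since() {
  local stamp="$1" dir="$2"
  find "$dir" -type f -newer "$stamp" -print | sort
}
```

For example, `list_changed_since /var/lib/backup.stamp /home/bitrix/www` (hypothetical marker path) prints the candidates for the next incremental copy; after a successful run, `touch /var/lib/backup.stamp` moves the marker forward.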

duplicity is a backup utility that uses the rsync algorithm (through the librsync library) and can work with Amazon S3.
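The key trick of the rsync algorithm is a weak checksum that can be "rolled" one byte along a file in constant time, so blocks that are already stored can be found at any offset. Below is a minimal bash sketch of that weak checksum (the a/b sums modulo 2^16 described for rsync) and its rolling update. It only illustrates the idea; it is not duplicity's or librsync's code, and librsync uses its own checksum variants.

```bash
#!/usr/bin/env bash
# Weak rolling checksum in the style of the rsync algorithm.
# For a window of n bytes x_0..x_{n-1}:
#   a = sum(x_i)           mod 2^16
#   b = sum((n - i) * x_i) mod 2^16
RSUM_MOD=65536

# Print the byte values of a string, e.g. "abc" -> 97 98 99
bytes_of() {
  printf '%s' "$1" | od -An -tu1
}

# weak_checksum BYTE... -> prints "a b" for the window given as byte values
weak_checksum() {
  local -i n=$# i=0 a=0 b=0 x
  for x in "$@"; do
    a=$(( (a + x) % RSUM_MOD ))
    b=$(( (b + (n - i) * x) % RSUM_MOD ))
    i=$(( i + 1 ))
  done
  echo "$a $b"
}

# roll_checksum A B N OUT IN -> checksum of the window shifted by one byte:
# byte OUT leaves on the left, byte IN enters on the right, in O(1).
roll_checksum() {
  local -i a=$1 b=$2 n=$3 x_out=$4 x_in=$5
  a=$(( (a - x_out + x_in) % RSUM_MOD ))
  if (( a < 0 )); then a=$(( a + RSUM_MOD )); fi
  b=$(( (b - n * x_out + a) % RSUM_MOD ))
  if (( b < 0 )); then b=$(( b + RSUM_MOD )); fi
  echo "$a $b"
}
```

Rolling the window from "abcd" to "bcde" with `roll_checksum` gives the same "a b" pair as recomputing `weak_checksum $(bytes_of bcde)` from scratch, which is what lets the algorithm scan a file byte by byte cheaply.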

Yandex.Cloud vs Amazon S3

For this task, there is no difference between Yandex.Cloud and Amazon S3. Yandex Object Storage supports the core of the Amazon S3 API, so you can use existing S3 tools with it; in my case, the duplicity utility.

The main advantage of Yandex may be payment in rubles: with a lot of data, the cost is not tied to the exchange rate. In terms of speed, Amazon's European data centers (Frankfurt, for example) are comparable to Yandex's Russian ones. I previously used Amazon S3 for similar tasks; this time I decided to try Yandex.
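Because the API is S3-compatible, usually only the endpoint changes. As an illustration, assuming the AWS CLI is installed and configured with the service account keys, a tiny wrapper that points it at Yandex Object Storage could look like this. The function name `ycs3` is made up for the example; `https://storage.yandexcloud.net` is the same host that appears in the duplicity target URL in the backup script.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run the AWS CLI against Yandex Object Storage
# instead of Amazon S3 by overriding the endpoint.
# Assumes the service account's access key ID and secret key are already
# available to the AWS CLI (for example, set up with `aws configure`).
YC_S3_ENDPOINT="https://storage.yandexcloud.net"

ycs3() {
  aws --endpoint-url="$YC_S3_ENDPOINT" "$@"
}
```

For example, `ycs3 s3 ls s3://backup/` would list the contents of a bucket named `backup`.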

Configuring Yandex.Cloud

1. Create a billing account in Yandex.Cloud. To do this, log in to Yandex.Cloud with your Yandex account or create a new one.

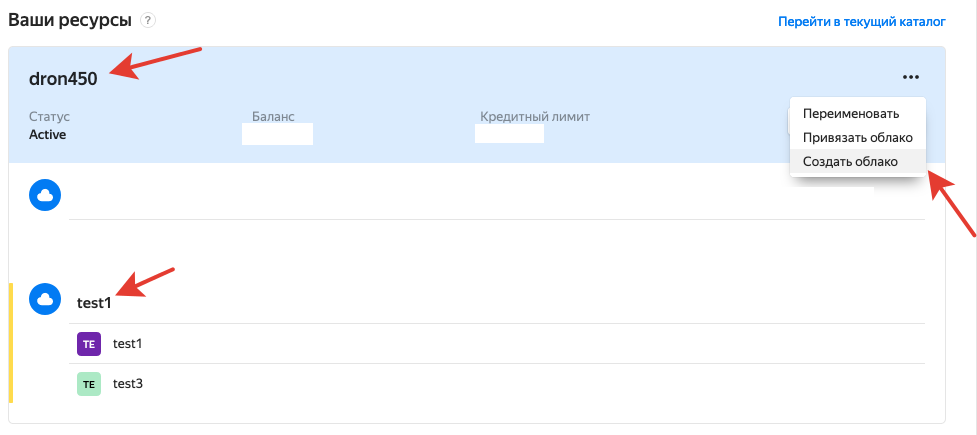

2. Create a "Cloud".

3. Create a "Catalog" in the "Cloud".

4. Create a "Service account" for the "Catalog".

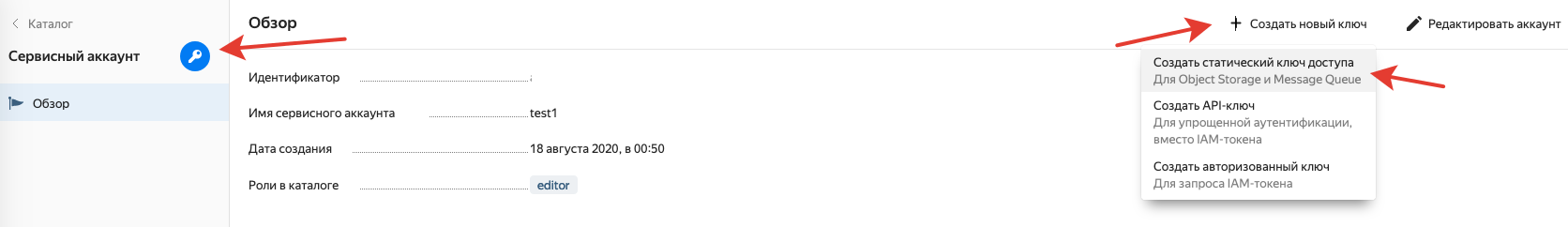

5. Create keys for the "Service account".

6. Save the keys; you will need them later.

7. Create a "Bucket" in the "Catalog"; it will hold the backup files.

8. I recommend setting a size limit and choosing the "Cold storage" class.
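The keys from step 5 end up as environment variables: duplicity reads `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` for S3 access and `PASSPHRASE` for encryption, and the backup script exports them directly. As an alternative sketch, not part of the original setup, they could live in a separate file readable only by root and be loaded by the scripts. The function name, the file layout and the permission check are illustrative assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical helper: load backup credentials from a file that only root
# can read, instead of hard-coding them in every script.
# Expected file contents (KEY=value lines):
#   AWS_ACCESS_KEY_ID=...
#   AWS_SECRET_ACCESS_KEY=...
#   PASSPHRASE=...
load_backup_env() {
  local file="$1" var
  # Refuse a missing file or one that other users can read (GNU stat syntax).
  if [ "$(stat -c %a "$file" 2>/dev/null)" != "600" ]; then
    echo "load_backup_env: $file must exist and have mode 600" >&2
    return 1
  fi
  set -a   # export every variable assigned while sourcing the file
  . "$file"
  set +a
  # Make sure all three variables duplicity needs are present.
  for var in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY PASSPHRASE; do
    if [ -z "${!var}" ]; then
      echo "load_backup_env: $var is not set in $file" >&2
      return 1
    fi
  done
}
```

With such a file at, say, /root/.backup_env (a hypothetical path), the three `export` lines in the scripts could be replaced by `load_backup_env /root/.backup_env || exit 1`.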

Configuring a scheduled backup on the server

This guide assumes basic administration skills.

1. Install the duplicity utility on the VDS (on CentOS 7 the package comes from the EPEL repository):

```bash
yum install duplicity
```

2. Create a folder for MySQL dumps; in my case it is /backup_db in the root of the VDS.

3. Create a folder for bash scripts, /backup_scripts, and in it the first script, /backup_scripts/backup.sh, which will run the backup.

Contents of the script:

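```bash
#!/bin/bash
# /backup_scripts/backup.sh

# If the mark file exists, the previous backup has not finished (it is still
# running or was interrupted): send an error email instead of a new backup
if [ -f /home/backup_check.mark ];
then
  DATE_TIME=`date +"%d.%m.%Y %T"`;
  /usr/sbin/sendmail -t <<EOF
From:backup@$HOSTNAME
To:<your EMAIL>
Subject:Error backup to YANDEX.CLOUD
Content-Type:text/plain; charset=utf-8

Error backup to YANDEX.CLOUD
$DATE_TIME
EOF
else
  # Create the mark file: the backup has started
  echo '' > /home/backup_check.mark;

  # Delete the database dump from the previous backup
  /bin/rm -f /backup_db/*

  # Dump all MySQL databases; the credentials are read from /root/.my.cnf
  DATETIME=`date +%Y-%m-%d_%H-%M-%S`;
  `which mysqldump` --quote-names --all-databases | `which gzip` > /backup_db/DB_$DATETIME.sql.gz

  # Encryption passphrase and the service account keys
  export PASSPHRASE=<encryption passphrase>
  export AWS_ACCESS_KEY_ID=<access key ID>
  export AWS_SECRET_ACCESS_KEY=<secret access key>

  # Run the backup with duplicity.
  # --exclude: paths that are not backed up (Bitrix caches, temporary files
  #   and the built-in Bitrix backups)
  # --include: paths that are backed up:
  #   - /backup_db (database dumps)
  #   - /home (site files)
  #   - /etc (OS settings)
  # s3://storage.yandexcloud.net/backup, where backup is the bucket name
  # Notes:
  # The source is "/" and the last option is --exclude='**', so only the
  # --include paths are backed up. Selection options are checked in order and
  # the first match wins, so the cache excludes must come before the includes.
  # --s3-use-ia - store volumes in the STANDARD_IA (cold storage) class
  # --full-if-older-than='1M' - make a new full backup once a month
  # --volsize='512' - volume size, in MB
  # --log-file='/var/log/duplicity.log' - log file
  `which duplicity` \
    --s3-use-ia --s3-european-buckets \
    --s3-use-new-style \
    --s3-use-multiprocessing \
    --s3-multipart-chunk-size='128' \
    --volsize='512' \
    --no-print-statistics \
    --verbosity=0 \
    --full-if-older-than='1M' \
    --log-file='/var/log/duplicity.log' \
    --exclude='**/www/bitrix/backup/**' \
    --exclude='**/www/bitrix/cache/**' \
    --exclude='**/www/bitrix/cache_image/**' \
    --exclude='**/www/bitrix/managed_cache/**' \
    --exclude='**/www/bitrix/managed_flags/**' \
    --exclude='**/www/bitrix/stack_cache/**' \
    --exclude='**/www/bitrix/html_pages/*/**' \
    --exclude='**/www/bitrix/tmp/**' \
    --exclude='**/www/upload/tmp/**' \
    --exclude='**/www/upload/resize_cache/**' \
    --include='/backup_db' \
    --include='/home' \
    --include='/etc' \
    --exclude='**' \
    / \
    s3://storage.yandexcloud.net/backup

  # Remove old backups: keep the 3 most recent full backups together with
  # their incremental backups. A full backup is made once a month, so this
  # keeps roughly the last 2 to 3 months, depending on how recent the latest
  # full backup is.
  `which duplicity` remove-all-but-n-full 3 --s3-use-ia --s3-european-buckets --s3-use-new-style --verbosity=0 --force s3://storage.yandexcloud.net/backup

  unset PASSPHRASE
  unset AWS_ACCESS_KEY_ID
  unset AWS_SECRET_ACCESS_KEY

  # Remove the mark file: the backup has finished
  /bin/rm -f /home/backup_check.mark;
fi
```

4. Run the script for the first time and check the result: files should appear in the "Bucket".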

```bash
`which bash` /backup_scripts/backup.sh
```

5. Add the script to the root user's crontab so that it runs twice a day, or as often as you need. This entry runs it every day at 04:10 and 16:10:

```
10 4,16 * * * `which bash` /backup_scripts/backup.sh
```

Data recovery from Yandex.Cloud

1. Create a folder for restored files: /backup_restore.

2. Create a bash script for restoring data: /backup_scripts/restore.sh.

Here is the most common case, restoring a single file:

```bash
#!/bin/bash
# /backup_scripts/restore.sh

export PASSPHRASE=<encryption passphrase>
export AWS_ACCESS_KEY_ID=<access key ID>
export AWS_SECRET_ACCESS_KEY=<secret access key>

# Uncomment one of the 3 commands below.

# List the backups stored in the bucket
#`which duplicity` collection-status s3://storage.yandexcloud.net/backup

# Restore the latest version of index.php
#`which duplicity` --file-to-restore='home/bitrix/www/index.php' s3://storage.yandexcloud.net/backup /backup_restore/index.php

# Restore index.php as it was 3 days ago
#`which duplicity` --time='3D' --file-to-restore='home/bitrix/www/index.php' s3://storage.yandexcloud.net/backup /backup_restore/index.php

unset PASSPHRASE
unset AWS_ACCESS_KEY_ID
unset AWS_SECRET_ACCESS_KEY
```

3. Run the script and wait for the result.

```bash
`which bash` /backup_scripts/restore.sh
```

The /backup_restore/ folder will then contain the previously backed-up index.php file.

You can adjust these settings further to suit your needs.
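The same approach works for the database. A minimal sketch, assuming the duplicity credentials are already exported as in restore.sh and MySQL reads its credentials from /root/.my.cnf as in backup.sh: fetch the backup_db folder as of a given time, pick the newest dump and feed it to mysql. The function names are hypothetical; `--time` and `--file-to-restore` are the duplicity options already used above. Because backup.sh deletes old dumps before each run, a restored folder normally holds a single dump, and since the names use the `%Y-%m-%d_%H-%M-%S` timestamp, sorting them as text also sorts them by date.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for restoring the MySQL dump made by backup.sh.
BACKUP_URL="s3://storage.yandexcloud.net/backup"

# fetch_db_dumps TIME DEST: restore the backup_db folder as of TIME
# (e.g. '3D') into DEST. DEST must not exist yet, otherwise duplicity
# refuses to overwrite it.
fetch_db_dumps() {
  duplicity --time="$1" --file-to-restore='backup_db' "$BACKUP_URL" "$2"
}

# latest_dump DIR: print the newest DB_*.sql.gz in DIR; fail if there is none.
# The timestamp in the name sorts chronologically as plain text.
latest_dump() {
  local f newest=""
  for f in "$1"/DB_*.sql.gz; do
    [ -e "$f" ] || continue
    [[ -z "$newest" || "$f" > "$newest" ]] && newest="$f"
  done
  [ -n "$newest" ] && echo "$newest"
}

# import_dump FILE: load a gzipped dump into MySQL
# (credentials are taken from /root/.my.cnf, as in backup.sh).
import_dump() {
  gunzip -c "$1" | mysql
}
```

A restore for three days ago could then be `fetch_db_dumps 3D /backup_restore/db && import_dump "$(latest_dump /backup_restore/db)"`.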

Disadvantage of duplicity

Duplicity has one drawback: it cannot limit bandwidth usage. On a regular channel this is not a problem, but on a DDoS-protected channel billed by daily traffic I would like to be able to cap it at 1-2 Mbit/s.
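To see what such a cap would mean in practice, here is a rough estimate in bash of how long an upload takes at a fixed rate. It assumes decimal gigabytes, a channel running exactly at the cap and no compression, so the numbers are only indicative; the function name is made up for the example.

```bash
#!/usr/bin/env bash
# upload_hours SIZE_GB RATE_MBIT: whole hours needed to upload SIZE_GB
# gigabytes (10^9 bytes) at RATE_MBIT megabits (10^6 bits) per second.
upload_hours() {
  local -i size_gb=$1 rate_mbit=$2
  local -i seconds=$(( size_gb * 8 * 1000 / rate_mbit ))
  echo $(( seconds / 3600 ))
}
```

With the 50 GB of site files, `upload_hours 50 2` gives 55 and `upload_hours 50 1` gives 111: at 2 Mbit/s a full backup takes more than two days, and at 1 Mbit/s more than four and a half days.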

Conclusion

Backing up to Yandex.Cloud or Amazon S3 gives you an independent copy of the site and the OS settings that can be accessed from any other server or a local computer. At the same time, this copy is visible neither in the hosting control panel nor in the Bitrix admin panel, which adds security.

In the worst case, you can build a new server and deploy the site as of any date. In practice, though, the most frequently needed feature is retrieving a single file as of a specific date.

You can use this technique with any VDS or dedicated server and with sites on any CMS, not only 1C-Bitrix. The OS can also be something other than CentOS, such as Ubuntu or Debian.