My name is Maria Snopok, and I head the automation track in the Testing Department of the Big Data Products Development and Support Department at X5 Retail Group. In this article, I will share our experience of introducing autotests and reducing the associated costs. I hope it will be useful for teams that are struggling with the transition to automated testing.

How we came to autotests

Before automation, I worked as a test manager on one of our products. Once a fairly large test model had built up there, we began distributing the pool of regression tests across the entire development team: if the team is responsible for the release, the team also tests it. This team regression solved several problems:

- developers who had previously dealt with their separate pieces of functionality were able to see the whole product;

- regression ran faster, which let us release more often;

- we no longer had to cut down the regression scope, so quality improved.

But it was expensive, because the tests were run by highly paid specialists, developers and analysts, so we began to move towards automation.

Smoke tests were the first to be automated: simple checks that pages open, load and do not crash, and that all basic functionality is available (at that stage without checking logic or values).

We then automated positive end-to-end scenarios. This step brought the greatest benefit: the tests confirmed that the product's main business processes worked, even if there were errors in negative, alternative and secondary scenarios.

And finally we got to the automation of advanced regression checks and alternative scenarios.

At each of these stages, we ran into difficulties that seriously slowed down and complicated the whole process. Which practical solutions helped us speed up and simplify the automators' work?

Four ways to reduce costs on autotests

1. Agree on the formats of test attributes up front

Developers and automated testers need to agree in advance on the rules for naming HTML elements so that the names can later be used as locators in autotests. It is desirable to have the same format for all products. We developed requirements that make it clear how to name the attributes even before a feature is handed over to development, and both developers and testers share this understanding. We agreed that each visible HTML element is assigned a custom data-qa attribute, by which the tester will search for it. The attribute is named according to the following pattern:

[screen name]_[form / table / widget name]_[element name]

Example:

data-qa = "plu-list_filter-popover_search-input"

data-qa = "common_toolbar_prev-state"

Such an attribute is easy to derive from the documentation and layout alone, and everyone knows what its value should be. When a developer picks up a task, he follows these rules and assigns data-qa attributes to all visible page elements: headers, buttons, links, selectors, table rows and columns, and so on. As a result, the tester can start writing autotests while the feature is still in development, relying only on the layout and documentation, because he already knows how the attributes will be named.

Introducing test attributes reduced the cost of developing and supporting autotests by an average of 23% compared to the previous period, because we spent less on updating tests and on locating elements for autotests.
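The naming rule can be sketched as a small helper that both sides could share. This is only an illustration: the function names are hypothetical, and the separator rules (underscore between the three levels, lowercase words joined by hyphens inside a level) are an assumption inferred from the two examples above.

```python
import re

# Assumed format of one naming level: lowercase words joined by hyphens.
_PART = re.compile(r"^[a-z0-9]+(?:-[a-z0-9]+)*$")


def data_qa(screen: str, block: str, element: str) -> str:
    """Build a data-qa value as <screen>_<block>_<element>."""
    parts = (screen, block, element)
    for part in parts:
        if not _PART.match(part):
            raise ValueError(f"invalid data-qa part: {part!r}")
    return "_".join(parts)


def css_locator(screen: str, block: str, element: str) -> str:
    """Return a CSS attribute selector that finds the element by data-qa."""
    return f'[data-qa="{data_qa(screen, block, element)}"]'


print(css_locator("plu-list", "filter-popover", "search-input"))
# [data-qa="plu-list_filter-popover_search-input"]
```

The resulting string can be passed to any UI testing tool that accepts CSS selectors, so a test written against the layout keeps working as long as the agreed name does not change.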

2. We write manual tests so that they are easy to automate

Manual testers and automation engineers live in different universes. Manual testers tend to check several different neighboring test objects in one script. Automators, on the other hand, tend to structure everything and check only objects of the same type within a single test. For this reason, manual tests are not always easy to automate. When we received manual cases for automation, we ran into a number of problems that prevented us from automating the scripts word for word, because they had been written for convenient execution by a human.

If an automator is deeply immersed in the product, he does not need "manual" cases: he can write a script for automation himself. If he comes to the product "from the outside" (in our Department, in addition to testers inside teams, testing is also offered as a dedicated service), and there is already a test model and scripts prepared by manual testers, you may be tempted to have him write autotests based on these "manual" test cases.

Do not give in to this: the most an automator can get out of manual test cases is an understanding of how the system works from the user's point of view.

Accordingly, manual tests need to be written from the start so that they can be automated, and that means dealing with several common problems.

Problem 1: oversimplified manual cases.

Solution: write the cases in detail.

Let's imagine a description of a manual case:

- open the revision version list page

- click create button

- fill the form

- click the "create" button

- make sure revision version is created

This is a bad scenario for automation, because it does not specify what exactly needs to be opened, what data to enter, what exactly we expect to see and in which fields to look for it. The instruction "make sure the version was created successfully" is not enough for automation: the machine needs specific criteria by which it can decide whether the action succeeded.
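One way to picture the difference is to write the case down as data, where every step must carry its exact input and a result that a machine can verify. The sketch below is illustrative only: the `Step` structure, the field name and all values in the detailed case are hypothetical, not taken from a real product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    action: str    # what to do, naming the exact page or element
    data: str      # exact input ("" when the step takes none)
    expected: str  # observable result that a machine can verify


def underspecified(steps):
    """Return 1-based numbers of steps with no checkable expected result."""
    return [n for n, step in enumerate(steps, start=1) if not step.expected.strip()]


# The manual case as written: no data and no verifiable outcome.
vague = [
    Step("open the revision version list page", "", ""),
    Step("click create button", "", ""),
    Step("fill the form", "", ""),
    Step('click the "create" button', "", ""),
    Step("make sure revision version is created", "", ""),
]

# The same case detailed for automation. Every value here is hypothetical.
detailed = [
    Step("open the version list page of revision 184", "", "the version table is displayed"),
    Step('click the "create" button', "", "the creation form opens"),
    Step('fill the "name" field', "autotest-version-1", 'the field shows "autotest-version-1"'),
    Step('click the "create" button in the form', "", "the form closes"),
    Step("read the first row of the version table", "", 'the "name" column equals "autotest-version-1"'),
]

print(underspecified(vague))     # [1, 2, 3, 4, 5]
print(underspecified(detailed))  # []
```

A check like `underspecified` could be run over cases before they are handed to automation, so that gaps are returned to the author instead of being guessed by the automator.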

Problem 2: case branching.

Solution: the case should only have a linear scenario.

Constructions with "or" often appear in manual cases. For example: "open revision 184 under user 1 or 2". This is unacceptable for automation: the user must be specified explicitly. The conjunction "or" should be avoided.
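A rough way to catch such branching before a case reaches an automator is a simple text check over the steps. The function below is a hypothetical sketch that assumes cases are written in English and stored as plain strings; it only flags candidates for a human to review.

```python
import re

# Matches "or" as a separate word, so "form" and "error" are not flagged.
_OR = re.compile(r"\bor\b", re.IGNORECASE)


def branching_steps(steps):
    """Return the steps that contain the conjunction "or"."""
    return [step for step in steps if _OR.search(step)]


steps = [
    "open revision 184 under user 1 or 2",
    "fill the form",
    "check the error text",
]
print(branching_steps(steps))
# ['open revision 184 under user 1 or 2']
```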

Problem 3: case irrelevance.

Solution: check cases before submitting them to automation.

This is the biggest pain for us: if the functionality changes frequently, testers do not have time to update test cases. If a manual tester comes across an outdated case, he can quickly correct the script. An automator, especially one who is not immersed in the product, cannot do this: he simply will not understand why the case does not work and will take it for a bug in the test. He will spend a lot of time on development, only to find out that the tested functionality was cut long ago and the script is outdated. Therefore, all scripts for automation should be checked for relevance.

Problem 4: not enough preconditions.

Solution: a full set of auxiliary data for executing the case.

Manual testers become so used to the product that they stop noticing details. As a result, when describing preconditions, they omit nuances that are obvious to them but not to automators who are less familiar with the product. For example, they write: "open a page with calculated content." They know that to calculate this content you need to run a calculation script, while an automator who sees the project for the first time will assume that he should take something already calculated.

Conclusion: the preconditions must contain an exhaustive list of actions to be performed before the test starts.

Problem 5: multiple checks in one scenario.

Solution: no more than three checks per scenario (with the exception of costly and difficult-to-reproduce scenarios).

Manual testers are very often tempted to economize on cases, especially heavy ones, and to test as much as possible in one scenario by cramming a dozen checks into it. This should not be allowed, because in both manual and automated testing it leads to the same problem: if the first check fails, all the rest are considered failed or, in the case of automation, do not run at all. Therefore, autotest scripts may contain from 1 to 3 checks. The exceptions are genuinely heavy scenarios that require time-consuming pre-calculations, as well as scenarios that are difficult to reproduce. Here the rule can be bent, although it is better not to.
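The limit is easy to enforce mechanically. The sketch below assumes, purely for illustration, that scenarios are available as a mapping from a scenario name to its list of checks; the names and the `exempt` set for agreed exceptions are hypothetical.

```python
MAX_CHECKS = 3  # the limit described above


def oversized(scenarios, exempt=frozenset()):
    """Return names of scenarios with more than MAX_CHECKS checks.

    Scenarios listed in `exempt` (costly or hard-to-reproduce ones)
    are allowed to exceed the limit.
    """
    return sorted(
        name
        for name, checks in scenarios.items()
        if len(checks) > MAX_CHECKS and name not in exempt
    )


scenarios = {
    "create-version": ["form opens", "version appears in table"],
    "filter-list": ["a", "b", "c", "d", "e"],
    "heavy-recalculation": ["a", "b", "c", "d"],
}
print(oversized(scenarios, exempt={"heavy-recalculation"}))
# ['filter-list']
```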

Problem 6: duplicate checks.

Solution: there is no need to automate the same functionality over and over again.

If the same functionality appears on several pages, for example a standard filter, there is no point in checking it everywhere during regression testing; checking it in one place is enough (unless, of course, it is a new feature). Manual testers write scripts for this sort of thing and test it on every page, simply because they look at each page in isolation without looking into how it works internally. Automators, however, should understand that testing the same thing repeatedly is a waste of time and resources, and it is quite easy for them to spot such situations.

Solving the problems above allowed us to reduce the cost of developing autotests by 16%.

3. Synchronize with product teams on refactoring, redesign and significant changes in functionality, so as not to write tests that end up shelved

In our Big Data department, where 13 products are developed, there are two groups of automators:

- automators directly in product teams;

- an automation stream service outside of product teams, engaged in the development of the framework and common components and working on requests from products with ready-made functional tests.

Stream automators are initially less immersed in the product, and previously they did not attend daily team meetings, because that would have taken too much of their time. Once this approach let us down: we learned by chance, from third parties, that one team was going to refactor its product (break the monolith into microservices), which meant that some of the tests we had written would go to the archive. It was very painful.

To prevent this from happening again, we decided that at least once a week an automator from the stream would attend the development team's meetings to keep abreast of what is happening with the product.

We also agreed that tests are automated only for functionality that is ready for production and does not change frequently (regression). We must be sure that it will not undergo refactoring or major rework for at least the next three months, otherwise the automators simply will not be able to keep the tests up to date.

The cost savings from these measures are more difficult to calculate. As a basis, we took the time spent developing autotests that lost their value because part of the functionality was moved to microservices as planned. Had we known about this move in advance, we would not have covered the changing functionality with autotests. The total loss (in other words, the potential savings) is 7%.

4. Upgrading a manual tester to an automation engineer

There are few test automators on the labor market, especially good ones, and we are actively looking for them. We are also actively training our existing manual testers who have the desire and a basic understanding of automation. First of all, we send such people to programming language courses, because we need full-fledged automators and full-fledged autotests; record-and-playback tools, in our opinion, have more disadvantages than advantages.

In parallel with learning a programming language, a person learns to write correct, structured scripts free of the problems from point 2, to read and analyze autotest reports, to fix minor errors in locators and simple methods, then to maintain ready-made tests, and only then to write their own. All of this happens with the support of experienced colleagues from the stream service. Later on, they can take part in improving the framework: we have our own library, scalable to all projects, and they can extend it with something of their own.

This direction of cost reduction is in its infancy, so it is too early to calculate the savings, but there is every reason to believe that training staff will significantly reduce operating costs. At the same time, it gives our manual testers an opportunity to grow.

Outcome

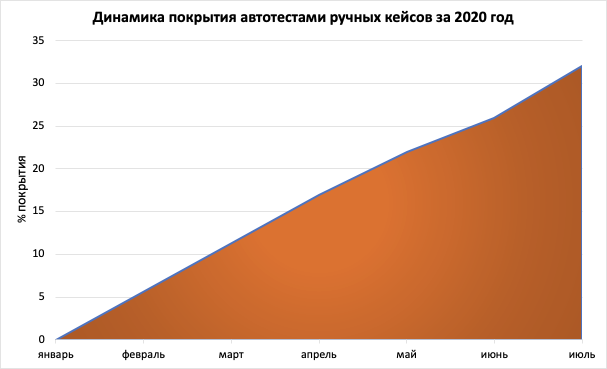

Now we have automated about 30% of tests on five products, which has led to a 2-fold reduction in regression testing time.

This is a good result, since time matters a great deal to us: we cannot test indefinitely, and we cannot ship the product without checking it. Automation allows us to run the required volume of product checks in a reasonable time. In the future, we plan to increase the share of autotests to 80-90% in order to release as often as possible, while not covering with autotests those projects where manual testing is still more cost-effective.