Our company has a more conservative approach when it comes to databases than in applications. The database is spinning not in Kubernetes, but on hardware or in a virtual machine. We have a well-established process for changing the payment processing database, which includes many automated checks, a large review and a release with the participation of the DBA. The number of checks and people involved in this case negatively affects the time-to-market. On the other hand, it is debugged and allows you to reliably make changes to production, minimizing the likelihood of breaking something. And if something breaks, then the right people are already included in the repair process. This approach makes the work of the company's main service more stable.

We start most of the new relational databases for microservices on PostgreSQL. A debugged process for Oracle, while reliable, carries with it unnecessary complexity for small databases. Nobody wants to drag difficult processes from the past into a bright future. Nobody started working on the process for a bright future in advance. As a result, we got a lack of a standard and raznozhopitsu. If you want to know what problems this led to and how we solved them, welcome to cat.

Problems We Solved

There are no uniform versioning standards

In the best case, these are DDL SQL files that are located somewhere in the db directory in the repository with the microservice. It is very bad if this is just the current state of the database, different on the test and on the production, and there are no reference scripts for the database schema.

During debugging, we shatter the test base

“I'm going to shake the test database a little now, don't be alarmed there” - and went to debug the newly written schema change code on the test database. Sometimes it takes a long time, and all this time the test circuit does not work.

At the same time, the test circuit may break in the part where other microservices interact with the microservice, whose base the developer has wrecked.

DAO methods are not covered by tests, are not validated in CI

When developing and debugging, DAO methods are invoked by pulling the outer handles a few layers above. This exposes entire scenarios of business logic instead of specific interactions between the microservice and the database.

There is no guarantee that nothing will fall apart in the future. The quality and maintainability of the microservice suffers.

Non-isomorphism of media

If the change loops are delivered differently to test and production, then you cannot be sure that it will work the same. Especially when development and debugging is actually carried out on the test.

Objects on the test can be created under the account of the developer or application. Grants are handed over at random, usually grant all privileges. Grants for the application are issued on the principle “I see an error in the log - I give a grant”. Grants are often forgotten at release. Sometimes, after the release, smok testing does not cover all new functionality and the lack of a grant does not fire immediately.

Heavy and fragile process of rolling into production

Rolling into production was done manually, but by analogy with the process for Oracle, through the approval of the DBA, release managers, and rolling forward by release engineers.

This slows down the release. And in case of problems, it increases downtime, complicating the developer's access to the database. The exec.sql and rollback.sql scripts were often not tested on the test, because there is no patchsetting standard for non-Oracle, and the test was going all the way.

Therefore, it happens that developers roll changes to non-critical services without this process at all.

How can you do to be good

Debugging on a local DB in a docker container

For some, all the technical solutions described in the article may seem obvious. But for some reason, from year to year I see people who enthusiastically step on the same rake.

You don't go to the test server via ssh to write and debug application code, do you? I find it just as absurd to develop and debug database code on a test DB Instance. There are exceptions, it happens that it is very difficult to raise the database locally. But usually, if we are talking about something lightweight and non-legacy, then it is not difficult to raise the base locally and roll all migrations on it consistently. In return, you will get a stable instance at your side, which is not bogged down by another developer, to which access will not be lost and on which you have the rights necessary for development.

Here's an example of how easy it is to bring up a local database:

Let's write a two-line Dockerfile:

FROM postgres:12.3

ADD init.sql /docker-entrypoint-initdb.d/In init.sql we make a "clean" database, which we expect to get both on the test and in production. It should contain:

- The schema owner and the schema itself.

- Application user with a grant to use the schema.

- Required EXTENSIONs

Init.sql example

create role my_awesome_service

with login password *** NOSUPERUSER inherit CREATEDB CREATEROLE NOREPLICATION;

create tablespace my_awesome_service owner my_awesome_service location '/u01/postgres/my_awesome_service_data';

create schema my_awesome_service authorization my_awesome_service;

grant all on schema my_awesome_service to my_awesome_service;

grant usage on schema my_awesome_service to my_awesome_service;

alter role my_awesome_service set search_path to my_awesome_service,pg_catalog, public;

create user my_awesome_service_app with LOGIN password *** NOSUPERUSER inherit NOREPLICATION;

grant usage on schema my_awesome_service to my_awesome_service_app;

create extension if not exists "uuid-ossp";

For convenience, you can add the db task to the Makefile, which will (re) start the container with the base and protrude the port for connection:

db:

docker container rm -f my_awesome_service_db || true

docker build -t my_awesome_service_db docker/db/.

docker run -d --name my_awesome_service_db -p 5433:5432 my_awesome_service_db

Versioning changesets with something industry standard

It also looks obvious: you need to write migrations and keep them in the version control system. But very often I see "bare" sql scripts, without any binding. And this means that there is no control over rollback and rollback, by whom, what and when was pumped. There is not even a guarantee that your SQL scripts can be executed on the test and production database, as its structure may have changed.

In general, you need control. Migration systems are just about control.

We will not go into a comparison of different database schema versioning systems. FlyWay vs Liquibase is not the topic of this article. We chose Liquibase.

We version:

- DDL-structure of database objects (create table).

- DML content of lookup tables (insert, update).

- DCL grants for UZ Applications (grant select, insert on ...).

When launching and debugging a microservice on a local database, a developer will be faced with the need to take care of grants. The only legal way for it is to add a DCL script to the changeset. This ensures that the grants will go to sale.

Sql patchset example

0_ddl.sql:

1_dcl.sql:

2_dml_refs.sql:

Fixtures. dev

3_dml_dev.sql:

rollback.sql:

create table my_awesome_service.ref_customer_type

(

customer_type_code varchar not null,

customer_type_description varchar not null,

constraint ref_customer_type_pk primary key (customer_type_code)

);

alter table my_awesome_service.ref_customer_type

add constraint customer_type_code_ck check ( (customer_type_code)::text = upper((customer_type_code)::text) );

1_dcl.sql:

grant select on all tables in schema my_awesome_service to ru_svc_qw_my_awesome_service_app;

grant insert, update on my_awesome_service.some_entity to ru_svc_qw_my_awesome_service_app;

2_dml_refs.sql:

insert into my_awesome_service.ref_customer_type (customer_type_code, customer_type_description)

values ('INDIVIDUAL', '. ');

insert into my_awesome_service.ref_customer_type (customer_type_code, customer_type_description)

values ('LEGAL_ENTITY', '. ');

insert into my_awesome_service.ref_customer_type (customer_type_code, customer_type_description)

values ('FOREIGN_AGENCY', ' . ');

Fixtures. dev

3_dml_dev.sql:

insert into my_awesome_service.some_entity_state (state_type_code, state_data, some_entity_id)

values ('BINDING_IN_PROGRESS', '{}', 1);

rollback.sql:

drop table my_awesome_service.ref_customer_type;

Changeset.yaml example

databaseChangeLog:

- changeSet:

id: 1

author: "mr.awesome"

changes:

- sqlFile:

path: db/changesets/001_init/0_ddl.sql

- sqlFile:

path: db/changesets/001_init/1_dcl.sql

- sqlFile:

path: db/changesets/001_init/2_dml_refs.sql

rollback:

sqlFile:

path: db/changesets/001_init/rollback.sql

- changeSet:

id: 2

author: "mr.awesome"

context: dev

changes:

- sqlFile:

path: db/changesets/001_init/3_dml_dev.sql

Liquibase creates a databasechangelog table on the database, where it notes the pumped up changesets.

Automatically calculates how many changesets you need to roll to the database.

There is a maven and a gradle plugin with the ability to generate a script from several changesets that needs to be rolled into the database.

Integration of the database migration system into the application launch phase

It could be any adapter of the migration control system and the framework on which your application is built. With many frameworks, it comes bundled with the ORM. For example Ruby-On-Rails, Yii2, Nest.JS.

This mechanism is needed to roll migrations when the application context starts.

For instance:

- On the test database, patchsets 001, 002, 003.

- The pogromist developed patchsets 004, 005 and did not deploy the application to the test.

- Deploy to the test. Patchsets 004, 005 are rolling out.

If they do not roll, the application does not start. Rolling update doesn't kill old pods.

Our stack is JVM + Spring and we are not using ORM. Therefore, we needed the Spring-Liquibase integration .

We have an important security requirement at our company: the user of the application should have a limited set of grants and definitely should not have schema owner level access. With Spring-Liquibase it is possible to roll migrations on behalf of the schema owner user. In this case, the connection pool of the application application level does not have access to the Liquibase DataSource. Therefore, the application will not get access from the schema owner user.

Application-testing.yaml example

spring:

liquibase:

enabled: true

database-change-log-lock-table: "databasechangeloglock"

database-change-log-table: "databasechangelog"

user: ${secret.liquibase.user:}

password: ${secret.liquibase.password:}

url: "jdbc:postgresql://my.test.db:5432/my_awesome_service?currentSchema=my_awesome_service"DAO tests in the CI stage verify

Our company has such a CI stage - verify. At this stage, changes are checked for compliance with internal quality standards. For microservices, this is usually a linter run to check the code style and for bugs, a unit test run, and an application launch with context hoisting. Now, at the verify stage, you can check the database migrations and the interaction of the application DAO layer with the database.

Raising a container with a database and rolling patchsets increases the start time of the Spring context by 1.5-10 seconds, depending on the power of the working machine and the number of patchsets.

These are not really unit tests, they are tests for integrating the DAO layer of the application with the database.

By calling a database a part of a microservice, we say that it is testing the integration of two parts of one microservice. No external dependencies. Therefore, these tests are stable and can be run during the verify phase. They fix the microservice and database contract, providing reassurance for future improvements.

It's also a handy way to debug DAOs. Instead of calling RestController, simulating user behavior in some business scenario, we immediately call the DAO with the required arguments.

DAO test example

@Test

@Transactional

@Rollback

fun `create cheque positive flow`() {

jdbcTemplate.update(

"insert into my_awesome_service.some_entity(inn, registration_source_code)" +

"values (:inn, 'QIWICOM') returning some_entity_id",

MapSqlParameterSource().addValue("inn", "526317984689")

)

val insertedCheque = chequeDao.addCheque(cheque)

val resultCheque = jdbcTemplate.queryForObject(

"select cheque_id from my_awesome_service.cheque " +

"order by cheque_id desc limit 1", MapSqlParameterSource(), Long::class.java

)

Assert.assertTrue(insertedCheque.isRight())

Assert.assertEquals(insertedCheque, Right(resultCheque))

}

There are two related tasks for running these tests in the verify pipeline:

- The build agent can potentially be busy with the standard PostgreSQL port 5432 or any static one. You never know, someone didn't put out the container with the base after the tests were completed.

- From this the second task: you need to extinguish the container after the tests are completed.

The TestContainers library solves these two tasks . It uses an existing docker image to bring up the database container in init.sql state.

Example of using TestContainers

@TestConfiguration

public class DatabaseConfiguration {

@Bean

GenericContainer postgreSQLContainer() {

GenericContainer container = new GenericContainer("my_awesome_service_db")

.withExposedPorts(5432);

container.start();

return container;

}

@Bean

@Primary

public DataSource onlineDbPoolDataSource(GenericContainer postgreSQLContainer) {

return DataSourceBuilder.create()

.driverClassName("org.postgresql.Driver")

.url("jdbc:postgresql://localhost:"

+ postgreSQLContainer.getMappedPort(5432)

+ "/postgres")

.username("my_awesome_service_app")

.password("my_awesome_service_app_pwd")

.build();

}

@Bean

@LiquibaseDataSource

public DataSource liquibaseDataSource(GenericContainer postgreSQLContainer) {

return DataSourceBuilder.create()

.driverClassName("org.postgresql.Driver")

.url("jdbc:postgresql://localhost:"

+ postgreSQLContainer.getMappedPort(5432)

+ "/postgres")

.username("my_awesome_service")

.password("my_awesome_service_app_pwd")

.build();

}

With the development and debugging figured out. Now we need to deliver the changes to the database schema to production.

Kubernetes is the answer! What was your question?

So, you need to automate some CI / CD process. We have a tried and true team city approach. It would seem, where is the reason for another article?

And there is a reason. In addition to the tried-and-true approach, there are also boring problems of a large company.

- There are not enough team city builders for everyone.

- A license costs money.

- The settings of the buildagent virtual machines are done the old fashioned way, through the repositories with configs and puppet.

- Access from builders to target networks must be sawn through the old fashioned way.

- Logins and passwords for rolling forward changes to the database are also stored the old fashioned way.

And in all this "the old fashioned way" the problem is - everyone is running to a bright future, and support from Legacy ... you know. It works and okay. Doesn't work - we'll do it later. Someday. Not today.

Let's say you already have one knee-deep leg in the bright future and you already have a Kubernetes infrastructure. There is even an opportunity to generate another microservice, which will immediately start in this infrastructure, pick up the necessary config and secrets, have the necessary access, and register with the service mesh infrastructure. And all this happiness can be obtained by an ordinary developer, without involving a person with the * OPS role. We recall that in Kubernetes there is a type of Job workload, just intended for some kind of service work. Well, we drove to make an application on Kotlin + Spring-Liquibase, trying to reuse as much as possible the infrastructure for microservices in the company on JVM in kubera.

Let's reuse the following aspects:

- Generation of the project.

- Deploy.

- Delivery of configs and secrets.

- Access.

- Logging and delivery of logs to ELK.

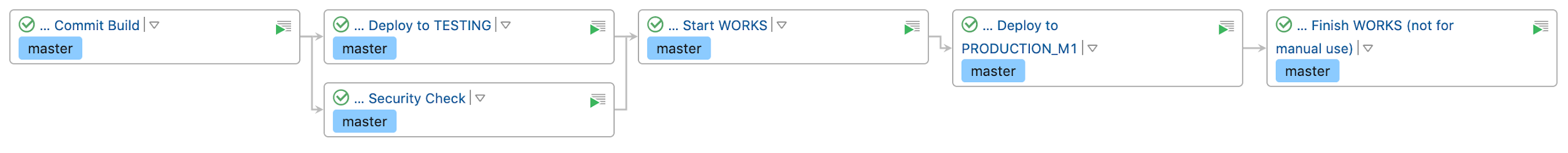

We get such a pipeline : Clickable

We now have

- Changeset versioning.

- We check them for feasibility update → rollback.

- Writing tests for DAO. Sometimes we even follow TDD: we run DAO debugging using tests. Tests are performed on a freshly raised database in TestContainers.

- Run the docker database locally on the standard port. We are debugging, looking at what is left in the database. If necessary, we can manage the local database manually.

- We roll into the test and auto-release patchsets with a standard pipeline in teamcity, by analogy with microservices. The pipeline is a child of the microservice that owns the database.

- We do not store credits from the database in team city. And we don't care about accesses from virtual builders.

I know that for many this is not a revelation. But since you've finished reading, we'll be happy to share your experience in the comments.