Introduction

There are many competitions in machine learning, as well as the platforms on which they are held, for every taste. But not so often the theme of the contest is the human language and its processing, even less often such a competition is associated with the Russian language. I recently took part in a Chinese-Russian machine translation competition on the ML Boot Camp platform from Mail.ru. Not having much experience in competitive programming, and having spent, thanks to quarantine, all the May holidays at home, I managed to take first place. I will try to talk about this, as well as about languages and the substitution of one task for another in the article.

Chapter 1. Never Speak Chinese

The authors of this competition proposed to create a general-purpose machine translation system, since translation even from large companies in a Chinese-Russian pair lags far behind more popular pairs. But since the validation took place on news and fiction, it became clear that it was necessary to learn from news corpus and books. The metric for evaluating transfers was the standard BLEU . This metric compares human translation with machine translation and, roughly speaking, estimates the similarity of texts on a 100-point scale based on the number of matches found. The Russian language is rich in its morphology, therefore this metric is always noticeably lower when translated into it than into languages with fewer ways of word formation (for example, Romance languages - French, Italian, etc.).

Anyone who deals with machine learning knows that it is primarily about data and its cleaning. Let's start looking for corpuses and at the same time we will understand the wilds of machine translation. So, in a white cloak ...

Chapter 2. Pon Tiy Pi Lat

In a white cloak with a bloody lining, a shuffling cavalry gait, we climb into a search engine behind a parallel Russian-Chinese corps. As we will understand later, what we have found is not enough, but for now let's take a look at our first finds (I collected the datasets I found and cleaned up and put them in the public domain [1] ):

- OpenSubtitles corpora (4M + lines)

- WikiMatrix

- TED transcriptions corpora (540K lines)

OPUS is quite large and linguistically diverse corpus, let's look at examples from it:

"The fact that we had experienced, even stranger than what you have experienced ...»

我与她的比你的经历经历离奇多了

"I'll tell you about it.»

我给你讲讲这段经历...

" The small town where I was born ... ”

我 出生 那座 小镇 ...

As the name suggests, these are mostly subtitles for movies and TV series. TED subtitles belong to the same type , which, after parsing and cleaning, also turn into a completely parallel corpus:

This is how our historical experiment in punishment turned out:WikiMatrix is LASER aligned texts from Internet pages (the so-called common crawling ) in various languages, but for our task they are few, and they look strange:

这 就是 关于 我们 印象 中 的 惩戒 措施 的 不为人知 的 一面

young people are afraid that at any moment they can be stopped, searched, detained.

年轻人 总是 担心 随时 会 被 截停、 搜身 和 逮捕

And not only on the street, but also in their own homes,

无论 是 在 街上 还是 在家

Zbranki (UkrainianAfter the first stage of data retrieval, a question arises with our model. What are the tools and how to approach the task at all?

但 被 其 否认。

But you'd better fast if you only knew!

斋戒 对于 你们 更好 , 如果 你们 知道。

He rejected this statement

.. 这个 推论 被 否认。

There is an NLP course I liked very much from MIPT on Stepic [2] , which is especially useful when going online, where machine translation systems are also understood at seminars, and you write them yourself. I remember the delight that the network, written from scratch, after training at Colab, produced an adequate Russian translation in response to the German text. We built our models on the architecture of transformers with an attention mechanism, which at one time became a breakthrough idea [3] .

Naturally, the first thought was “just give the model different input data” and win already. But, as any Chinese schoolchild knows, there are no spaces in the Chinese script, and our model accepts sets of tokens as input, which are words in it. Libraries like jieba can break Chinese text into words with some precision. Embedding word tokenization into the model and running it on the found corpuses, I got a BLEU of about 0.5 (and the scale is 100 points).

Chapter 3. Machine translation and exposing it

An official baseline (simple but working example solution) was proposed for the competition, which was based on OpenMNT . It is an open source translation learning tool with many hyperparameters for twisting. In this step, let's train and inference the model through it. We will train on the kaggle platform, since it gives 40 hours of GPU training for free [4] .

It should be noted that by this time there were so few participants in the competition that, having entered it, one could immediately get into the top five, and there were reasons for that. The format of the solution was a docker container, to which folders were mounted during the inference process, and the model had to read from one, and put the answer in another. Since the official baseline did not start (I personally did not assemble it right away) and was without weights, I decided to collect my own and put it in the public domain [5]. After that, participants began to apply with a request to correctly assemble the solution and generally help with the docker. Moral, containers are the standard in today's development, use them, orchestrate and simplify your life (not everyone agrees with the last statement).

Let's now add a couple more to the bodies found in the previous step:

- United Nations Parallel Corpus (3M + rows)

- UM-Corpus: A Large English-Chinese Parallel Corpus (News subcorpora) (450K lines)

The first is a huge corpus of legal documents from UN meetings. It is available, by the way, in all official languages of this organization and is aligned according to the proposals. The second one is even more interesting, since it is directly a news corpus with one peculiarity - it is Chinese-English. This fact does not bother us, because modern machine translation from English into Russian is very high quality, and Amazon Translate, Google Translate, Bing and Yandex are used. For completeness, we will show examples from what happened.

UN documents

.

它是一个低成本平台运转寿命较长且能在今后进一步发展。

.

报告特别详细描述了由参加者自己拟订的若干与该地区有关并涉及整个地区的项目计划。

UM-Corpus

Facebook closed the deal to buy Little Eye Labs in early January.

1 月初 脸 书 完成 了 对 Little Eye Labs 的 收购 ,

Four engineers in Bangalore launched Little Eye Labs about a year and a half ago

一年 半 以前 四位 工程师 在 班加罗尔 创办 了

Company builds software tools for mobile apps, deal will cost between $ 10 and $ 15 million.

该 公司 开发 移动 应用 软件 工具 , 这次 交易 价值 1000 到 1500 万 美元 ,

So, our new ingredients: OpenNMT + high-quality enclosures + BPE (you can read about BPE tokenization here ). We train, assemble into a container, and after debugging / cleaning and standard tricks, we get BLEU 6.0 (the scale is still 100 points).

Chapter 4. Parallel manuscripts do not burn

Up to this point, we have been improving our model step by step, and the biggest gain has come from the use of the news corpus, one of the validation domains. Besides news, it would be nice to have a body of literature. Having spent a fair amount of time it became clear that the machine translations of Chinese books with no popular system can not provide - Nastasia becomes something like Nostosi Filipauny and Rogozhin - Rogo Wren . The names of the characters usually make up a fairly large percentage of the entire work and often these names are rare, therefore, if the model has never seen them, then most likely it will not be able to translate them correctly. We must learn from books.

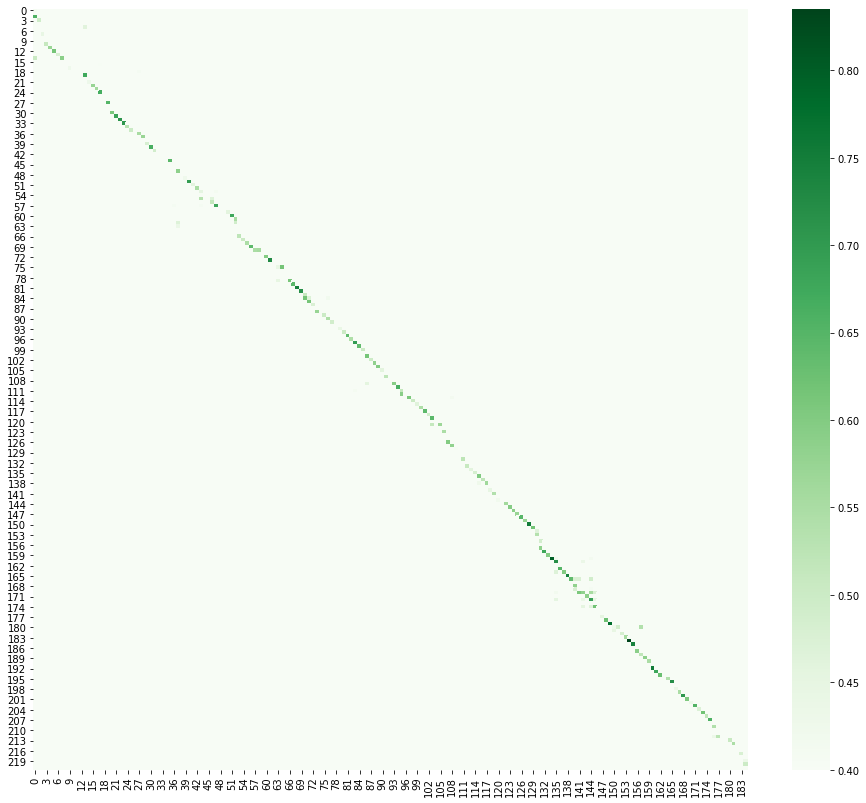

Here we replace the task of translation with the task of text alignment. I must say right away that I liked this part the most, because I myself am fond of studying languages and parallel texts of books and stories, in my opinion, this is one of the most productive ways of learning. There were several ideas for alignment, the most productive turned out to be to translate sentences into vector space and calculate the cosine distance between candidates for a match. Translating something into vectors is called embedding, in this case it's a sentence embedding . There are several good libraries for this purpose [6] . When visualizing the result, it can be seen that the Chinese text slips a little due to the fact that complex sentences in Russian are often translated as two or three in Chinese.

Having found everything that is possible on the Internet, and leveling the books ourselves, we add them to our corpus.

He was in an expensive gray suit, foreign, in the color of his suit, shoes.

他 穿 一身 昂贵 的 灰色 西装 , 脚上 的 外国 皮鞋 也 与 西装 颜色 十分 协调。

He famously wrung a gray beret in his ear, carried a cane with a black with a poodle-

shaped knob .

She looks more than forty years old.

看 模样 年纪 在 四十 开外。

After training on the new building, BLEU grew to 20 on a public dataset and to 19.7 on a private one. It also played a role in the fact that works from validation obviously got into the training. In reality, this should never be done, it is called a leak, and the metric ceases to be indicative.

Conclusion

Machine translation has come a long way from heuristics and statistical methods to neural networks and transformers. I'm glad I was able to find the time to become familiar with this topic, it definitely deserves close attention from the community. I would like to thank the authors of the competition and other participants for the interesting communication and new ideas!

[1] Parallel Russian-Chinese corpora

[2] Course on Natural Language Processing from MIPT

[3] Breakthrough article Attention is all you need

[4] Laptop with an example of learning on kaggle

[5] Public docker baseline

[6] Library for multilingual sentence embeddings