Each such event brings a surge of interest from Internet users. People want not only to read about what happened but also to see pictures. They search for images and expect to find fresh, relevant pictures that may not have existed a few hours earlier. The interest arises unexpectedly and drops to almost zero within a few days.

The catch is that conventional search engines are not designed for this scenario. Moreover, freshness as a criterion conflicts with other important properties of good search: relevance, authority, and so on. Special technologies are needed not only to find new content but also to keep the results balanced.

My name is Denis Sakhnov, and today I will talk about a new approach to delivering fresh content to Yandex.Kartinki (Yandex Images). My colleague Dmitry Krivokon (krivokon) will share details about the metrics and the ranking of fresh images. You will learn about our old and new approaches to quality assessment. We will also briefly revisit YT, Logbroker and RTMR.

For image search to work well on the queries whose answers should contain fresh content, we need to solve the following tasks:

- Learn to quickly find and download fresh pictures.

- Learn to process them quickly.

- Learn to quickly assemble search documents from pictures (this point will become clearer as the story progresses).

- Define quality criteria for fresh-content search.

- Learn to rank and mix content in the SERP based on quality requirements.

Let's start with the first point.

1. Getting pictures

There are many sites on the Internet, and many of them regularly publish something, including pictures. For people to see all of this in image search, our robot must reach each site and download the content. This is how search usually works: we crawl the sites we know fairly quickly and pick up new pictures. But this model fails for content that suddenly becomes relevant right now. The Internet is huge: it is impossible to download the HTML documents of every site in the world "right now" and quickly digest them all. At least, nobody in the world has solved that problem yet.

One might imagine solving the problem like this: track spikes in queries and process first only the sources that somehow match those queries. But that only sounds good on paper. First, to check whether something matches a query, you already need to have the content on hand. Second, if we start acting after the peak of queries, we are already late. As crazy as it sounds, you need to find fresh content before anyone needs it. But how do you predict the unexpected?

The honest answer is that you can't. We know nothing about when volcanoes will erupt. But we do know which sites usually publish fresh and useful content, so that is where we started. We began using a machine-learned formula that prioritizes our crawler's visits based on the quality and relevance of the content. SEO specialists, forgive us: we will not go into details here. The robot's job is to deliver HTML documents to us as quickly as possible. Only then can we examine their contents and find new texts, links to pictures, and so on.
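The article deliberately does not disclose the crawl-prioritization formula. The minimal Python sketch below only illustrates the idea of spending a limited crawl budget on the most promising pages first. The feature names (`quality`, `relevance`, `update_rate`) and the fixed weights are hypothetical stand-ins for the learned formula.

```python
import heapq
from dataclasses import dataclass


@dataclass
class PageCandidate:
    url: str
    quality: float      # hypothetical: predicted quality of the site's content, 0..1
    relevance: float    # hypothetical: predicted relevance/usefulness, 0..1
    update_rate: float  # hypothetical: how often the site publishes new content, 0..1


# Hypothetical fixed weights standing in for a learned ranking formula.
WEIGHTS = {"quality": 0.4, "relevance": 0.3, "update_rate": 0.3}


def crawl_priority(page: PageCandidate) -> float:
    """Score a page; higher means it should be fetched sooner."""
    return (WEIGHTS["quality"] * page.quality
            + WEIGHTS["relevance"] * page.relevance
            + WEIGHTS["update_rate"] * page.update_rate)


def crawl_order(pages: list[PageCandidate], budget: int) -> list[str]:
    """Return up to `budget` URLs to fetch first, highest priority first."""
    best = heapq.nlargest(budget, pages, key=crawl_priority)
    return [p.url for p in best]
```

For example, with a budget of two fetches, a frequently updated news page with high predicted quality is fetched before a rarely updated static page.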

Image links are nice, but on their own they are not yet useful for search. First, we have to download the images. But again, there are too many new image links to download them all instantly. And the problem is not only our own resources: site owners would not want Yandex to overload their servers either. So we use machine learning to prioritize image downloads. The factors are many and varied, and we will not list them all, but, for example, how often a picture appears on different sites also affects its priority.
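A similar idea applies to image downloads, with one extra constraint: no single site should be overloaded. The sketch below is an illustration built on assumptions, not Yandex's scheduler. `download_priority` is a hypothetical blend of a model score and the number of sites an image was seen on, which is the one factor the text names. `per_host_limit` is a hypothetical politeness cap.

```python
import heapq
from collections import Counter
from urllib.parse import urlsplit


def download_priority(model_score: float, seen_on_sites: int) -> float:
    """Hypothetical blend: model score plus a capped bonus for images seen on many sites."""
    return model_score + 0.1 * min(seen_on_sites, 10)


def schedule_downloads(candidates, per_host_limit, total_budget):
    """candidates: iterable of (image_url, model_score, seen_on_sites).

    Picks images in priority order while capping how many requests go to any
    one host in this batch, so a single site is not overloaded.
    """
    heap = [(-download_priority(score, seen), url) for url, score, seen in candidates]
    heapq.heapify(heap)  # min-heap on negated priority = highest priority first
    per_host = Counter()
    batch = []
    while heap and len(batch) < total_budget:
        _, url = heapq.heappop(heap)
        host = urlsplit(url).hostname
        if per_host[host] >= per_host_limit:
            continue  # this host's quota for the batch is used up
        per_host[host] += 1
        batch.append(url)
    return batch
```

Skipped images are not lost in a real system; they would simply wait for a later batch. The sketch returns only the current batch.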

Now we have a list of image links, and we download the images. For this we use our own Logbroker service. It acts as a transport bus and reliably handles huge volumes of traffic. A few years ago, our colleague Alexey Ozeritsky already described this technology on Habr.

This logically completes the first stage. We have identified the sources and successfully extracted some pictures. Only a little is left: learning how to work with them.

2. Processing pictures

The pictures themselves are, of course, useful, but they still need to be prepared. It works like this:

1. In RTHub, our stateless computing service, versions of different sizes are prepared. Search needs them: it is convenient to show thumbnails in the results and serve the original content from the source site on click.

2. Neural network features are computed. Offline (that is, in advance rather than at ranking time), neural networks run on GPU machines and produce image feature vectors. We also compute the values of useful classifiers: beauty, aesthetics, inappropriate content, and many others. We will need all of this later.

3. Then, using the information computed from each picture, duplicates are merged. This matters: users are unlikely to be happy with results dominated by the same image. At the same time, copies may differ slightly: one has a cropped edge, another has an added watermark, and so on. We merge duplicates in two stages. First, similar pictures are roughly clustered using neural network vectors. Pictures within a cluster may not even match in meaning, but clustering lets us parallelize further work on them. Then, inside each cluster, we merge duplicates by matching keypoints between pictures. Note that neural networks are great at finding similar images, but less "fashionable" tools are more effective at finding exact duplicates: neural networks can be too clever and see "the same thing" in different pictures.
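The two-stage duplicate merging can be sketched as follows, under some stated assumptions. Random-hyperplane hashing of the embeddings stands in for the coarse neural-network clustering. The "keypoints" are toy 2-D descriptors compared by Euclidean distance, whereas a real system would match local image features. All function names and thresholds are hypothetical.

```python
import math
import random
from collections import defaultdict


def lsh_key(vec, planes):
    # Coarse stage: one bit per random hyperplane (which side the vector lies on).
    return tuple(int(sum(v * p for v, p in zip(vec, plane)) >= 0) for plane in planes)


def match_fraction(desc_a, desc_b, max_dist=0.1):
    # Fine stage: share of A's local descriptors that have a close counterpart in B.
    if not desc_a or not desc_b:
        return 0.0
    hits = sum(1 for a in desc_a if min(math.dist(a, b) for b in desc_b) <= max_dist)
    return hits / len(desc_a)


def merge_duplicates(images, n_planes=4, threshold=0.8, seed=0):
    """images: id -> (embedding, local_descriptors). Returns id -> duplicate-group id."""
    if not images:
        return {}
    rng = random.Random(seed)
    dim = len(next(iter(images.values()))[0])
    planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_planes)]

    clusters = defaultdict(list)
    for img_id, (embedding, _) in images.items():
        clusters[lsh_key(embedding, planes)].append(img_id)

    parent = {img_id: img_id for img_id in images}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    # Clusters are independent of each other, so this loop could run in parallel.
    for members in clusters.values():
        for i, a in enumerate(members):
            for b in members[i + 1:]:
                da, db = images[a][1], images[b][1]
                if min(match_fraction(da, db), match_fraction(db, da)) >= threshold:
                    parent[find(a)] = find(b)
    return {img_id: find(img_id) for img_id in images}
```

This design has one consequence. Near-duplicates whose embeddings hash to different clusters are never compared. The coarse stage therefore trades a little recall for the ability to process clusters independently.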

So by the end of this stage, we have pictures ready in several sizes, with duplicates merged and neural network and other features precomputed. Time to send them to ranking? No, it's too early.

3. Assembling pictures into documents

A document is our name for the entity that takes part in ranking. For the user, it may look like a link to a page (web search), a picture (image search), a video (video search), a coffee maker (product search), or something else. But behind each item in the results there is a lot of heterogeneous information. In our case, that includes not only the picture itself and its neural network and other features, but also information about the pages where it appears, the texts that describe it on those pages, and user behavior statistics (for example, clicks on the picture). All of this together is the document. Before it can be used in search, the document has to be assembled, and the mechanism we use to build the regular image search index does not work here.

The main challenge is that different components of a document are produced at different times and in different places. Logbroker, for instance, can deliver information about pages and texts, but not at the same time as the pictures. Real-time user behavior data arrives through the RTMR log processing system. And all of this is stored separately from the pictures. To assemble a document, you have to go through the different data sources one by one.

We use MapReduce to build the main image search index. It is an efficient, reliable way to process huge amounts of data. But it does not suit the freshness task: we need to fetch from storage, very quickly, all the data required to build each document, and MapReduce is not designed for that. So in the freshness loop we use a different method: heterogeneous information flows into RTRobot, a streaming data processing system that uses a KV store to synchronize the different processing flows and to provide fault tolerance.

In the freshness loop, the KV store is built on dynamic tables in our YT system. In effect, it is a repository of all the content we might need, with very fast access. From there we promptly request everything that may be useful for image search and assemble documents. Via Logbroker, we then send them to the search servers, where the prepared data is added to the search index.
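The toy Python model below makes the idea of assembling documents through a KV store concrete. A plain `dict` stands in for the YT dynamic tables, and a list stands in for the Logbroker stream to the search servers. Several details are hypothetical: the class name, the event kinds (`image`, `pages`, `clicks`), and the rule that a document needs an image plus page data.

```python
from dataclasses import dataclass, field


@dataclass
class FreshDocumentAssembler:
    """Toy stand-in for the freshness loop (hypothetical, not RTRobot's API)."""
    kv: dict = field(default_factory=dict)         # stands in for the KV store
    published: list = field(default_factory=list)  # stands in for the stream to search
    required: tuple = ("image", "pages")           # hypothetical minimum for a document

    def on_event(self, image_id: str, kind: str, payload) -> None:
        # Each source (image pipeline, page texts, real-time clicks) writes its own
        # component under the same key, whenever that component happens to arrive.
        record = self.kv.setdefault(image_id, {})
        if kind == "clicks":
            record["clicks"] = record.get("clicks", 0) + payload
        else:
            record[kind] = payload
        self._try_publish(image_id)

    def _try_publish(self, image_id: str) -> None:
        record = self.kv[image_id]
        if all(part in record for part in self.required):
            # Read everything known about the image and emit a (re)assembled document.
            self.published.append({"id": image_id, **record})
```

Components may arrive in any order: clicks recorded before the picture is processed are kept in the store and included once the document becomes complete. Each later update re-emits the document.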

Thanks to a separate freshness loop that covers every stage (from finding pictures on the web to preparing documents), we can process hundreds of new pictures per second. On average, they reach search within a few minutes of their appearance.

But getting pictures into search is not enough. We need to be able to show them in the results when they are useful. This brings us to the next step: determining usefulness. I'll hand over to Dmitry (krivokon).

4. Measuring quality

A general approach to optimizing search quality starts with choosing a metric. In Yandex image search, the metric has roughly the following form:

$$
\sum_{i=1}^{n} p_i \cdot f(r_i, w_i, \ldots, m_i)
$$

where

$n$ is the number of top images (documents) in the results that we evaluate;

$p_i$ is the weight of position $i$ in the results (the higher the position, the greater the weight);

$r_i$ is relevance (how closely the picture matches the query);

$w_i, \ldots, m_i$ are other components of response quality (freshness, beauty, size, ...);

$f(\ldots)$ is a model that aggregates these components.

Simply put, the more useful pictures there are in the results, and the higher they rank, the larger this sum.
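Here is a small worked example of the metric. It assumes position weights $p_i = 1/i$ and a linear $f$ with hand-picked weights, both hypothetical. The text only says that $p_i$ decreases with position and that $f$ is a learned model.

```python
# Hypothetical component weights; in the real system f(...) is a learned model.
COMPONENT_WEIGHTS = {"relevance": 0.5, "freshness": 0.3, "beauty": 0.2}


def f_linear(components):
    """Aggregate one result's quality components into a single score."""
    return sum(COMPONENT_WEIGHTS[name] * value for name, value in components.items())


def serp_metric(results, f=f_linear):
    """sum_{i=1}^{n} p_i * f(components_i) with hypothetical weights p_i = 1 / i."""
    return sum((1.0 / i) * f(c) for i, c in enumerate(results, start=1))


good = {"relevance": 1.0, "freshness": 1.0, "beauty": 0.5}  # f = 0.9
weak = {"relevance": 0.4, "freshness": 0.0, "beauty": 0.5}  # f = 0.3

good_first = serp_metric([good, weak, weak])  # 0.9 + 0.3/2 + 0.3/3 = 1.15
good_last = serp_metric([weak, weak, good])   # 0.3 + 0.3/2 + 0.9/3 = 0.75
```

Moving the good image from third place to first raises the sum from 0.75 to 1.15. This is exactly the behavior the metric is meant to reward.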

A few words about the model $f(\ldots)$. It is trained on pairwise comparisons of pictures by tolokers (Yandex.Toloka crowd workers). A person sees a query and two pictures and picks the better one. Repeated many, many times, this lets the model learn which quality component matters most for a particular query.

For example, for a query about fresh photos of a black hole, the freshness component gets the highest weight. For a query about a tropical island, it is beauty: few people look for amateur photos of ugly islands, and they usually want attractive pictures. The better the results look in such cases, the more likely the person is to keep using the service. But let's not get sidetracked.
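The sketch below shows how a model can learn component weights from side-by-side judgments. It assumes a linear $f$ and a logistic pairwise loss, a common RankNet-style choice; the article does not say which model or loss is actually used. In the toy data, the preferred picture is always the fresher one, as might happen for a query like the black hole example. The freshness weight therefore ends up the largest.

```python
import math


def train_pairwise(pairs, n_features, lr=0.5, epochs=200):
    """Fit linear weights w so that w . winner > w . loser for judged pairs.

    pairs: list of (winner_components, loser_components) from side-by-side judgments.
    Minimizes the logistic pairwise loss -log(sigmoid(w . (winner - loser))).
    """
    w = [0.0] * n_features
    for _ in range(epochs):
        for winner, loser in pairs:
            diff = [a - b for a, b in zip(winner, loser)]
            margin = sum(wi * d for wi, d in zip(w, diff))
            # The negative gradient of the loss w.r.t. w is (1 - sigmoid(margin)) * diff.
            step = 1.0 - 1.0 / (1.0 + math.exp(-margin))
            w = [wi + lr * step * d for wi, d in zip(w, diff)]
    return w


# Components: (relevance, freshness, beauty). The fresher picture always wins.
pairs = [
    ((0.8, 0.9, 0.3), (0.8, 0.1, 0.7)),
    ((0.7, 0.8, 0.5), (0.9, 0.2, 0.5)),
    ((0.6, 0.7, 0.2), (0.6, 0.3, 0.9)),
]
weights = train_pairwise(pairs, n_features=3)
```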

So the task of the ranking algorithms is to optimize this metric. But we cannot evaluate all of the millions of daily queries: that would be a huge load, above all on the tolokers. So for quality control we take a random sample of queries (a basket) over a fixed period of time.
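The article does not describe how the random basket is drawn. Reservoir sampling (Algorithm R) is one standard way to take a uniform, fixed-size sample from a query stream of unknown length. It is sketched here as an assumption, not as the method Yandex uses:

```python
import random


def sample_basket(query_stream, k, seed=None):
    """Reservoir sampling (Algorithm R): a uniform random sample of k queries
    from a stream whose length is not known in advance."""
    rng = random.Random(seed)
    basket = []
    for i, query in enumerate(query_stream):
        if i < k:
            basket.append(query)  # fill the reservoir first
        else:
            j = rng.randint(0, i)  # inclusive bounds: j is uniform over 0..i
            if j < k:
                basket[j] = query  # keep the new query with probability k / (i + 1)
    return basket
```

The sample is built in a single pass with memory proportional to `k`, which suits a continuous stream of search queries.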

It would seem we have a metric that already accounts for freshness, plus a sample of queries for quality control. We could close the topic and move on to ranking. But no.

With fresh pictures, a problem arises. When evaluating algorithms, we must be able to tell whether we answer a user's query well at the very moment it is entered. A query that was fresh last year may not be fresh now, and a different answer would be good today. So a fixed basket of queries (for example, one collected over a year) does not work.

Our first attempt at solving this problem was to do without a basket altogether. Following certain rules, we began mixing fresh images into the results and then studied user behavior. If it changed for the better (for example, people clicked on the results more actively), the mixing was useful. But this approach has a flaw: the quality assessment depended directly on the quality of our own algorithms. For example, if our algorithm fails on some query and mixes in no fresh content, there is nothing to compare. We then cannot tell whether fresh content was needed there at all. We concluded that we needed an independent evaluation system that shows the current quality of our algorithms without depending on them.

Our second approach was as follows. True, we cannot use a fixed basket because fresh queries keep changing. But we can keep, as a base, the part of the basket that has no freshness requirements and add a fresh part to it every day. To do this, we built an algorithm that picks out, from the stream of user queries, those most likely to need an answer with fresh pictures. Such queries usually involve unexpected details. Of course, we also validate them manually to filter out noise and junk and to handle special cases. For example, a query may be relevant only in a specific country. Here we rely not on tolokers but on assessors, since this work requires special experience and knowledge.

Query: [black hole photo]
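The detection algorithm itself is not described. As a purely hypothetical illustration, the simple burst detector below flags queries whose frequency today is far above their recent daily average. `min_count` and `ratio` are made-up thresholds. The real system uses its own model, followed by manual validation.

```python
from statistics import mean


def fresh_query_candidates(today_counts, history, min_count=50, ratio=5.0):
    """Flag queries whose frequency today jumps well above their recent baseline.

    today_counts: query -> number of searches today
    history: query -> list of daily counts over previous days (may be missing)
    Returns candidates sorted from most to least bursty.
    """
    scored = []
    for query, count in today_counts.items():
        past = history.get(query) or []
        baseline = mean(past) if past else 0.0
        burst = count / (baseline + 1.0)  # +1 avoids division by zero for new queries
        if count >= min_count and burst >= ratio:
            scored.append((burst, query))
    scored.sort(reverse=True)
    # These candidates would then go to manual validation.
    return [query for _, query in scored]
```

Steadily popular queries such as [cat] have a high count but no burst, so they are not flagged. Rare queries are dropped by `min_count` even if their relative jump is large.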

We not only add such fresh queries to the basket for quality assessment but also save our search results at the moment the queries are detected. This lets us assess not only the initial quality of the response but also how quickly our search reacted to the event.
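Saving results from the moment a query is detected makes it possible to measure how quickly search reacts. The minimal sketch below assumes that the saved snapshots are scored with the quality metric. It also assumes that "reacted" means first reaching a hypothetical quality `threshold`:

```python
from datetime import datetime, timedelta


def reaction_time(detected_at, snapshots, threshold):
    """snapshots: list of (timestamp, metric_value) for one fresh query.

    Returns how long after detection the metric first reached `threshold`,
    or None if it never did.
    """
    for ts, value in sorted(snapshots):
        if ts >= detected_at and value >= threshold:
            return ts - detected_at
    return None


t0 = datetime(2024, 1, 1, 12, 0)
snaps = [
    (t0, 0.2),
    (t0 + timedelta(minutes=15), 0.5),
    (t0 + timedelta(minutes=40), 0.8),
]
```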

Let's sum up the interim results. To answer fresh queries well, we not only ensured fast image processing and delivery to search but also reinvented the way we measure quality. It remains to look at what exactly we are measuring the quality of.

5. Ranking

As a reminder, above we described the transition from the first approach to assessing image search quality to the second: from mixing in results to replenishing the acceptance basket with fresh queries every day. Since the paradigm changed, the algorithms themselves needed to change too. This is not easy to explain to outside readers, but I will try. If you have any questions, feel free to ask them in the comments.

Previously, our methods were modeled on the solution described by our colleague Alexey Shagraev. There is a main source of documents (the main search index). There is also an additional source of fresh documents, for which the speed of getting into search is critical. Documents from the two sources could not be ranked by a single logic, so we used a rather non-trivial scheme to mix documents from the fresh source into the main results. We then compared the metrics of the main results with and without the additional documents.

Now the situation is different. The sources are still physically separate, but for the metrics it does not matter where a fresh picture came from. It can also arrive through the main source if the regular robot managed to reach it. In that case the metrics are exactly the same as when the same picture reaches the results through the separate source. The new approach evaluates the freshness of the query and the result themselves, so the source architecture no longer matters much. As a result, both main and fresh documents are ranked by the same model. This lets us mix fresh images into the results with much simpler logic than before: by simply sorting on the output of a single model. Naturally, this also affected quality.
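The simplification can be sketched in a few lines. Documents from both sources are pooled, a picture that arrived through both is kept once, and a single scoring function orders everything. The dict-based documents and the toy `score` are hypothetical; only the overall shape (one model, a plain sort) comes from the text.

```python
def rank_merged(main_docs, fresh_docs, score):
    """Merge documents from both sources and rank them with one model.

    main_docs / fresh_docs: lists of dicts with at least an "id" key.
    score: a single model applied to every document regardless of its source.
    A picture that came through both sources is kept once (first copy wins).
    """
    merged = {}
    for doc in main_docs + fresh_docs:
        merged.setdefault(doc["id"], doc)
    return sorted(merged.values(), key=score, reverse=True)


def toy_score(doc):
    # Hypothetical stand-in for the learned ranking model.
    return doc["relevance"] + doc["freshness"]
```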

Moving on. To rank anything, you need a dataset to train the model on; for fresh images, a dataset with examples of fresh content. We already had a basic dataset, and we needed to learn how to add freshness examples to it. This is where the acceptance basket, which we already use for quality control, comes in again. Its fresh queries change every day, so the very next day we can take yesterday's fresh queries and add them to the training dataset. We do not risk overfitting to the evaluation data, since the same data is never used for training and control at the same time.
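The day-shifted use of the acceptance basket can be expressed as a simple temporal split. In this hypothetical sketch, fresh baskets are keyed by date. Only baskets from days before the evaluation day are added to the training data:

```python
from datetime import date


def build_training_set(base_examples, fresh_baskets, eval_day):
    """base_examples: examples from the regular dataset.
    fresh_baskets: date -> labelled examples from that day's fresh queries.

    Only baskets from days strictly before eval_day go into training, so the
    queries used for quality control on eval_day are never trained on.
    """
    train = list(base_examples)
    for day, examples in sorted(fresh_baskets.items()):
        if day < eval_day:
            train.extend(examples)
    return train
```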

Thanks to the switch to the new scheme, the quality of results for fresh images improved significantly. Previously, training relied mainly on user statistics for fresh queries, which created a feedback loop with the current ranking algorithm. Now training is based on objectively collected query baskets that depend only on the stream of user queries. This let us learn to show fresh results even where there were none before. In addition, the ranking pipelines of the main and fresh loops were merged. As a result, the fresh loop began to evolve noticeably faster: every improvement in one source now automatically reaches the other.

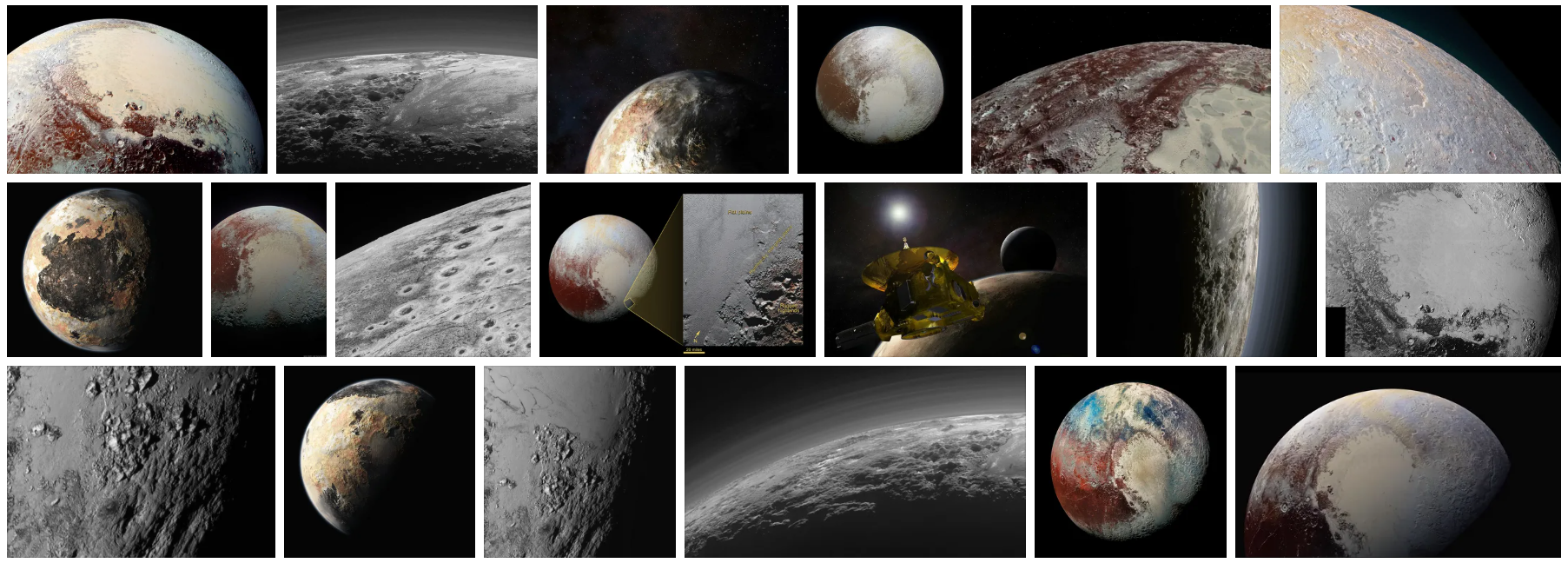

A single post cannot describe in detail all the work the Yandex image search team has done. We hope we have managed to explain what makes finding fresh pictures special. We also hope it is clear why every stage of search needed changes, so that users can quickly find fresh photos of Pluto or anything else relevant.