The tech world has a new source of hype: GPT-3.

Huge language models like GPT-3 keep surprising us with their capabilities. Although businesses do not yet trust them enough to put them in front of customers, these models show sparks of intelligence that will accelerate automation and expand what smart computing systems can do. Let's strip away the aura of mystery around GPT-3 and look at how it is trained and how it works.

A trained language model generates text. We can optionally feed it some text as input, which influences its output. That output is generated from what the model "learned" during training, when it processed vast amounts of text.

Training is the process of exposing the model to large amounts of text. For GPT-3, that process is already complete; every experiment you see runs on the same trained model. Training was estimated to take 355 GPU-years (355 years on a single graphics card) and cost $4.6 million.
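To put those two estimates in perspective, here is a small back-of-the-envelope calculation. It only rearranges the figures quoted above; the implied hourly rate is derived arithmetic, not a number taken from the original estimate.

```python
GPU_YEARS = 355              # estimate quoted in the text
TOTAL_COST_USD = 4_600_000   # estimate quoted in the text
HOURS_PER_YEAR = 365 * 24    # ignoring leap years

# Convert GPU-years to GPU-hours, then divide the cost by the hours.
gpu_hours = GPU_YEARS * HOURS_PER_YEAR
implied_rate = TOTAL_COST_USD / gpu_hours

print(f"{gpu_hours:,} GPU-hours, about ${implied_rate:.2f} per GPU-hour")
```

In other words, the two estimates together imply a price of roughly a dollar and a half per GPU-hour.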

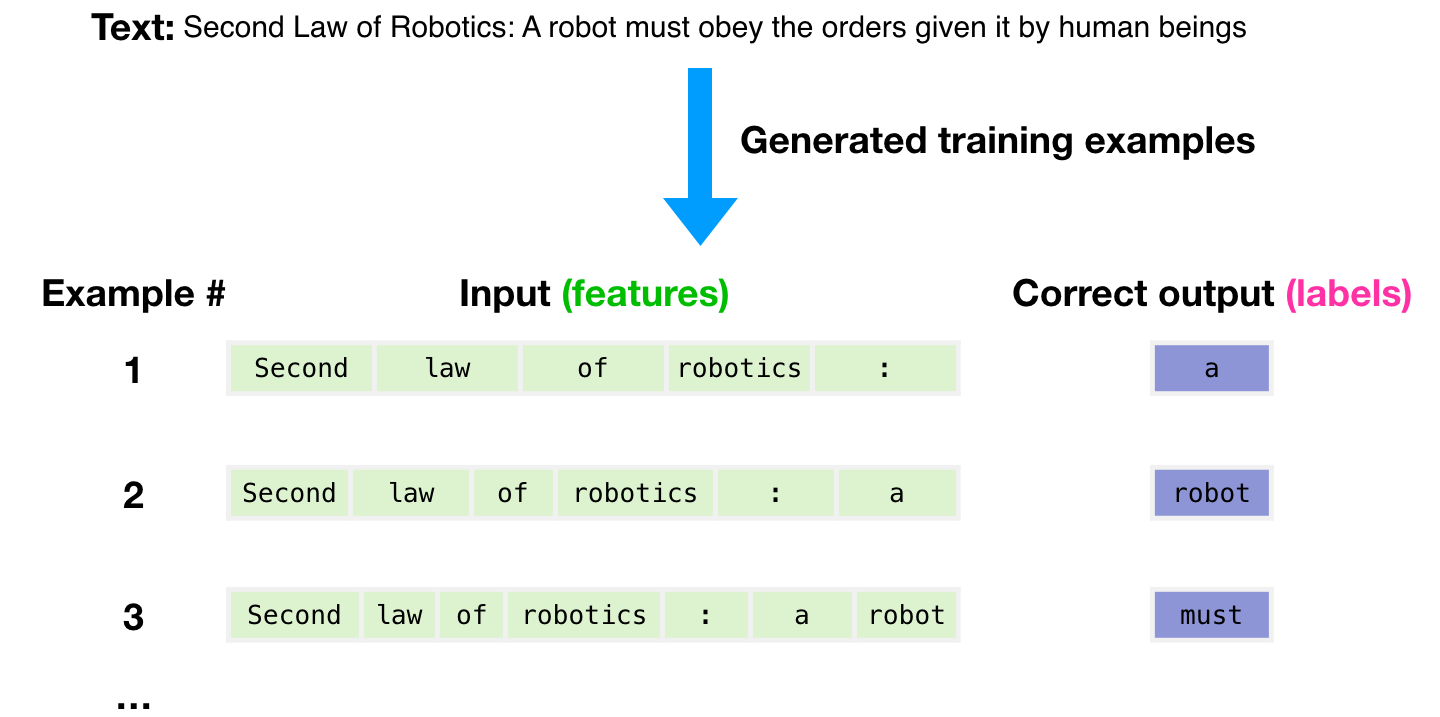

A dataset of 300 billion tokens of text was used to generate training examples for the model. For example, here is what three training examples derived from a single sentence look like.
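One way such examples could be produced is by sliding a window over the text: each example pairs a stretch of context with the token that follows it. The sketch below is only an illustration. It assumes whitespace-separated words stand in for real tokens, and the function name and sample sentence are made up for the example.

```python
def make_training_examples(text, context_size=4):
    """Turn one sentence into (context, next_token) training pairs."""
    # Assumption: splitting on whitespace stands in for a real tokenizer.
    tokens = text.split()
    examples = []
    for i in range(1, len(tokens)):
        # The context is up to `context_size` tokens before position i.
        context = tokens[max(0, i - context_size):i]
        examples.append((context, tokens[i]))
    return examples


examples = make_training_examples("a robot must obey the orders given it")

# Show the first three examples derived from the sentence.
for context, target in examples[:3]:
    print(context, "->", target)
```

Sliding the window across all of the text yields an enormous number of such pairs from the same dataset.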

GPT-3 generates its output one token at a time (for now, assume that a token is a word).

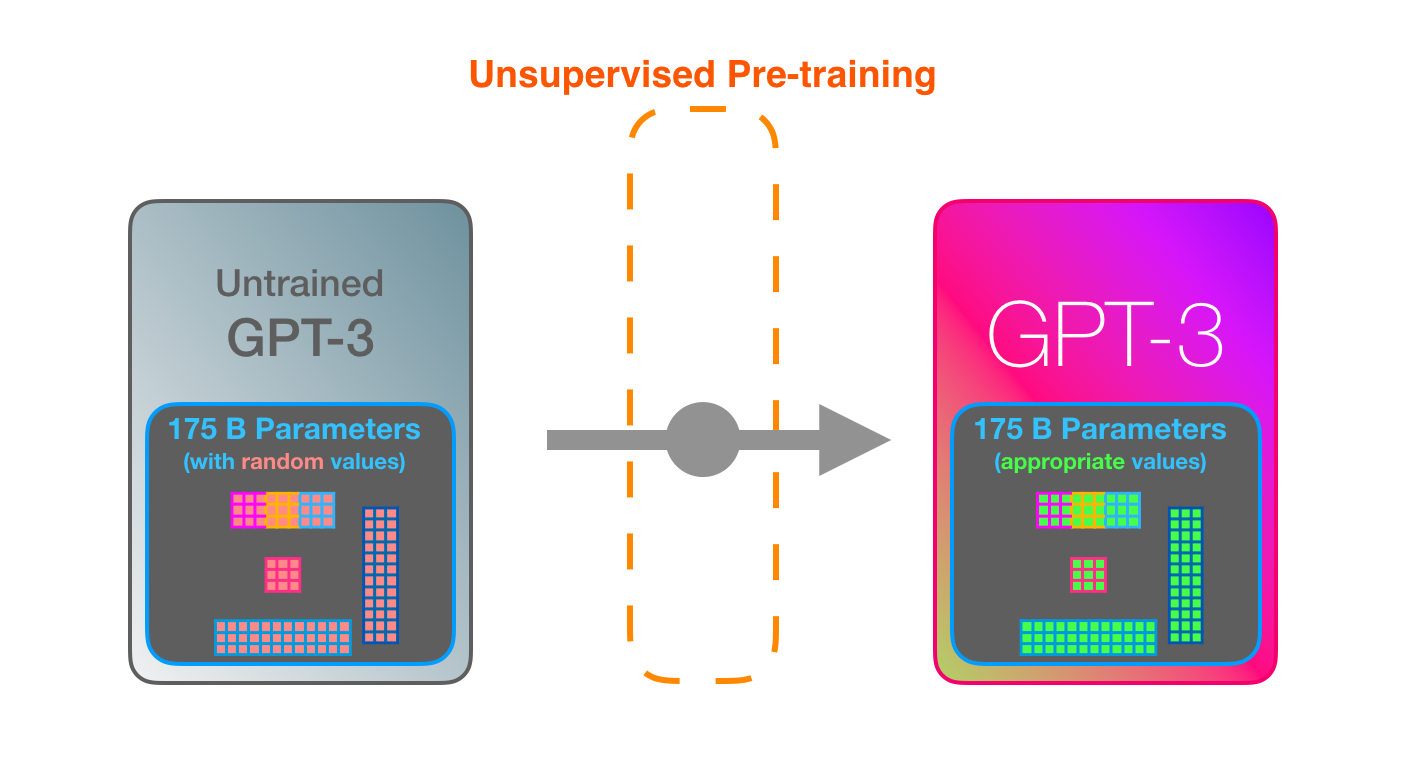

GPT-3 is huge. It encodes what it learns during training in 175 billion numbers (called parameters).

GPT-3 is 2048 tokens wide. That is its "context window": it has 2048 tracks along which tokens are processed.

How does the system process the word "robotics" and produce "A"?

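The high-level steps:

- Convert the word to a vector (a list of numbers) that represents it.

- Compute the prediction.

- Convert the resulting vector to a word.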
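Going from a word such as "robotics" to a predicted next word can be sketched in three stages: look up the word's vector, compute a prediction from it, and map the result back to a word. The toy below is not GPT-3. The vocabulary, the vector width, the random numbers and the single matrix standing in for the whole layer stack are all assumptions chosen for illustration.

```python
import math
import random

random.seed(0)
vocab = ["a", "robot", "must", "obey", "robotics"]
D_MODEL = 4  # toy vector width, tiny compared with a real model

# One random vector per word; a trained model would have learned these.
embedding = {w: [random.gauss(0, 1) for _ in range(D_MODEL)] for w in vocab}
# Stand-in for the layer stack: a single random matrix.
weights = [[random.gauss(0, 1) for _ in range(D_MODEL)] for _ in range(D_MODEL)]


def predict_next(word):
    # 1. Convert the word to a vector.
    vector = embedding[word]
    # 2. Compute the prediction (here: one matrix multiply and a tanh).
    hidden = [math.tanh(sum(v * w for v, w in zip(vector, column)))
              for column in zip(*weights)]
    # 3. Convert the resulting vector to a word by scoring it against
    #    every word vector and picking the best match.
    scores = {w: sum(h * e for h, e in zip(hidden, embedding[w])) for w in vocab}
    return max(scores, key=scores.get)


print(predict_next("robotics"))
```

With random numbers the predicted word is meaningless; training is what makes the second stage produce useful vectors.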

The important calculations in GPT-3 happen inside its stack of 96 transformer decoder layers.

See all those layers? That is the "depth" in "deep learning".

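Each of these layers has about 1.8 billion parameters of its own for its calculations. This is where the "magic" happens.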
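The 1.8 billion figure can be sanity-checked with a common rule of thumb for transformer layers. The sketch assumes the hidden width and vocabulary size published for the largest GPT-3 model, and it ignores biases, layer norms and position embeddings, so treat the result as an approximation rather than an exact count.

```python
N_LAYERS = 96
D_MODEL = 12288   # hidden width published for the largest GPT-3 model
VOCAB = 50257     # byte-pair vocabulary size shared with GPT-2

# Rule of thumb: about 4*d^2 weights for attention (query, key, value and
# output projections) plus about 8*d^2 for the feed-forward block
# (d -> 4d -> d).
params_per_layer = 12 * D_MODEL ** 2
embedding_params = VOCAB * D_MODEL
total = N_LAYERS * params_per_layer + embedding_params

print(f"{params_per_layer / 1e9:.2f}B per layer")
print(f"{total / 1e9:.1f}B total")
```

This comes to roughly 1.81 billion parameters per layer and about 174.6 billion in total, close to the 175 billion usually quoted.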

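For a detailed explanation of everything that happens inside the decoder, see the post "The Illustrated GPT-2".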

Compared with GPT-2, GPT-3 alternates dense and sparse self-attention layers.

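Consider an input and its response ("Okay human") inside GPT-3. Every token flows through the entire layer stack. The outputs for the input words are ignored: only once the input has been processed does the output start to matter. Each generated word is then fed back into the model.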
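The feedback loop, in which each generated token is appended to the input before the next one is produced, can be sketched as follows. The `toy_model` function and its lookup table are hypothetical stand-ins for GPT-3; only the loop structure is the point.

```python
# Hypothetical stand-in for the model: maps the last token to the next one.
NEXT_TOKEN = {"instructions": "Okay", "Okay": "human", "human": "<end>"}


def toy_model(tokens):
    """Return the next token for a sequence (looks only at the last token)."""
    return NEXT_TOKEN.get(tokens[-1], "<end>")


def generate(prompt_tokens, max_new_tokens=10, context_window=2048):
    tokens = list(prompt_tokens)
    generated = []
    for _ in range(max_new_tokens):
        # The model only sees what fits in its context window.
        next_token = toy_model(tokens[-context_window:])
        if next_token == "<end>":
            break
        generated.append(next_token)
        tokens.append(next_token)  # feed the output back in as input
    return generated


print(" ".join(generate(["robot", "must", "follow", "instructions"])))
# prints "Okay human"
```

Nothing is collected while the prompt itself is being consumed; output only accumulates once generation starts.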

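In the React code generation example, the description would be the input prompt, probably together with a couple of description => code examples. The React code would then be generated token after token.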

It can be assumed that the initial examples and descriptions were added to the input of the model along with special tokens separating the examples from the result.
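Under that assumption, building such an input might look like the sketch below. The separator string, the `description:` and `code:` labels and the function name are all hypothetical; the actual special tokens are not known from this article.

```python
# Hypothetical separator between examples; the real tokens are unknown.
EXAMPLE_SEP = "\n###\n"


def build_prompt(examples, description):
    """Join description => code examples, then add the new description."""
    parts = [f"description: {d}\ncode: {c}" for d, c in examples]
    # The final entry is left open so the model continues with the code.
    parts.append(f"description: {description}\ncode:")
    return EXAMPLE_SEP.join(parts)


examples = [
    ("a button that says hello", "<button>hello</button>"),
    ("a red heading", '<h1 style={{color: "red"}}>Title</h1>'),
]
prompt = build_prompt(examples, "a link to the home page")
print(prompt)
```

The model never sees a special "task" flag; the pattern in the prompt is the only hint about what should come next.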

It is impressive that this works at all. Once fine-tuning becomes available for GPT-3, the possibilities will be even more amazing.

Fine-tuning updates the model's weights to improve its performance on a specific task.
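As a rough illustration of what updating the weights for a specific task means, here is a one-parameter model that starts from an already trained value and continues gradient descent on a small task-specific dataset. The model, data and learning rate are invented for the example and have nothing to do with how GPT-3 itself is tuned.

```python
def fine_tune(weight, task_data, learning_rate=0.01, epochs=200):
    """Continue gradient descent on a one-parameter model y = weight * x."""
    for _ in range(epochs):
        for x, y in task_data:
            error = weight * x - y
            # Gradient of the squared error with respect to the weight.
            weight -= learning_rate * 2 * error * x
    return weight


pretrained_weight = 1.0                             # value left by earlier training
task_data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]    # the new task: y = 3x
tuned_weight = fine_tune(pretrained_weight, task_data)

print(round(tuned_weight, 3))
```

The weight starts where earlier training left it and moves toward the value that fits the new task, which is the same idea applied to billions of parameters.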

Authors

- Original author - Jay Alammar

- Translation - Ekaterina Smirnova

- Editing and layout - Sergey Shkarin