The 360 performance review method is also used at X5 Retail Group. Today we are going to tell you about the best practices of the BigData X5 team in in-depth HR analytics.

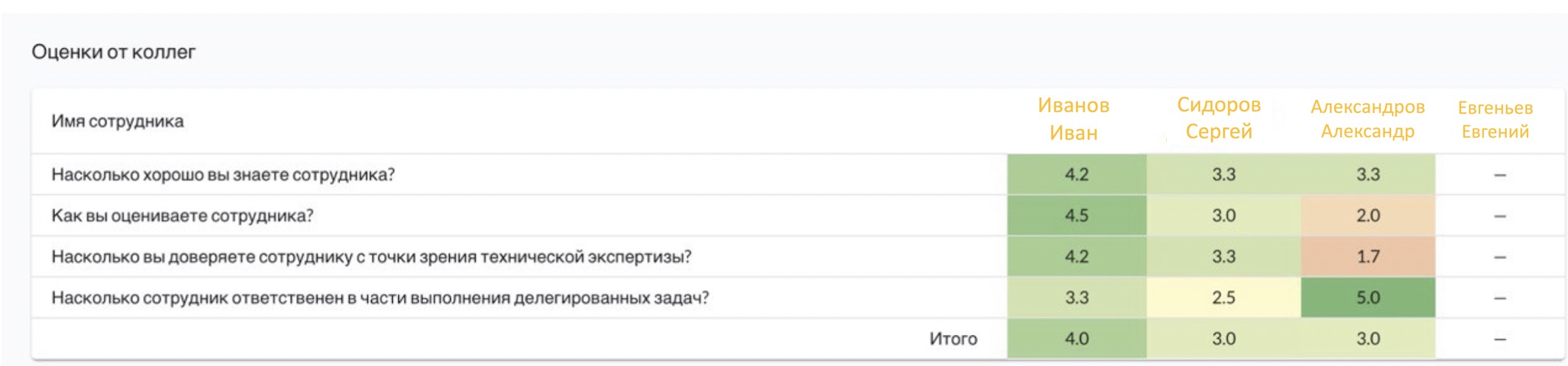

Obviously, although averaging many different opinions improves the accuracy of this method, it still depends on how openly and conscientiously people fill out the questionnaires, how well they understand the scale, how strong the team is, the atmosphere in the team, and much more.

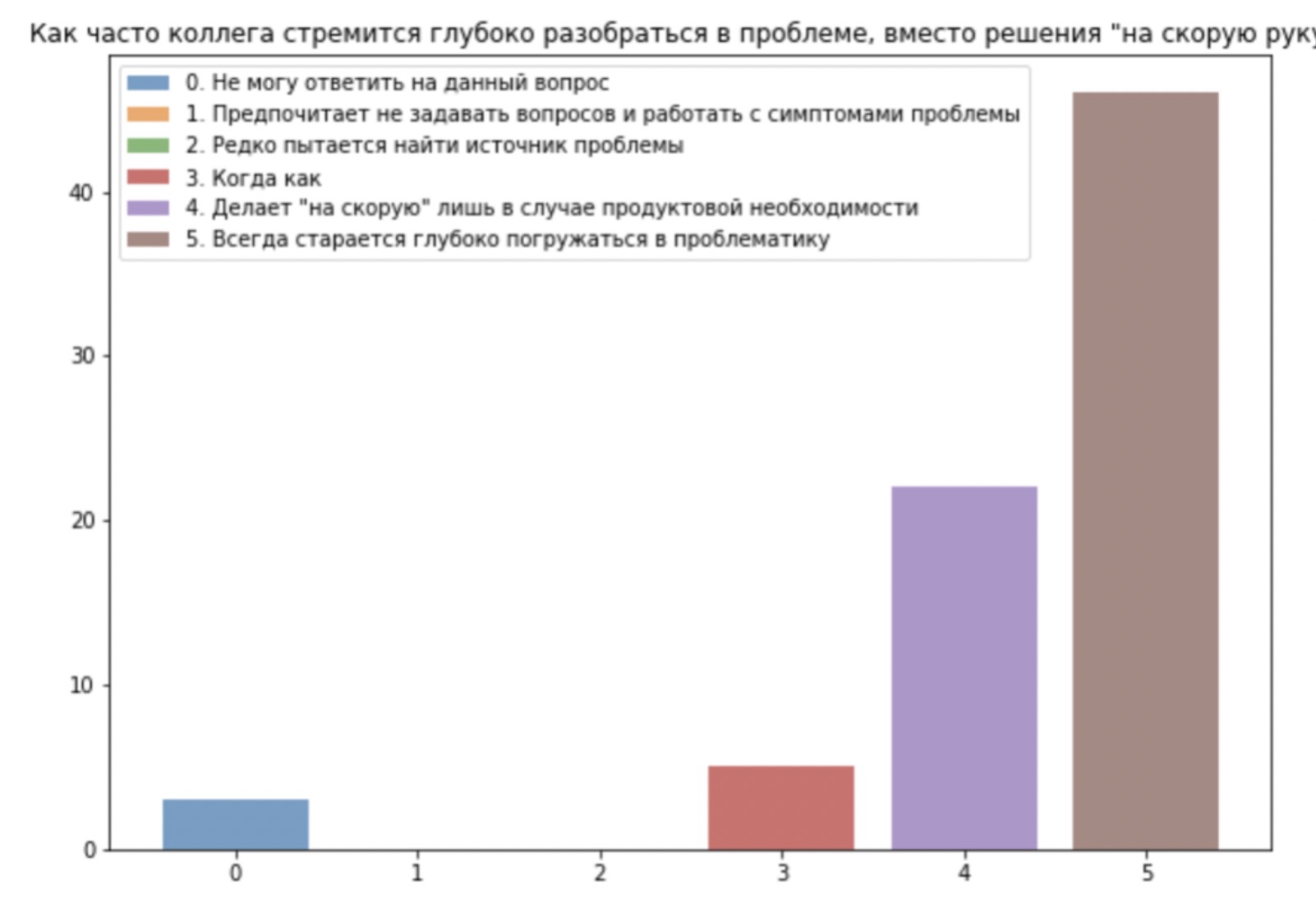

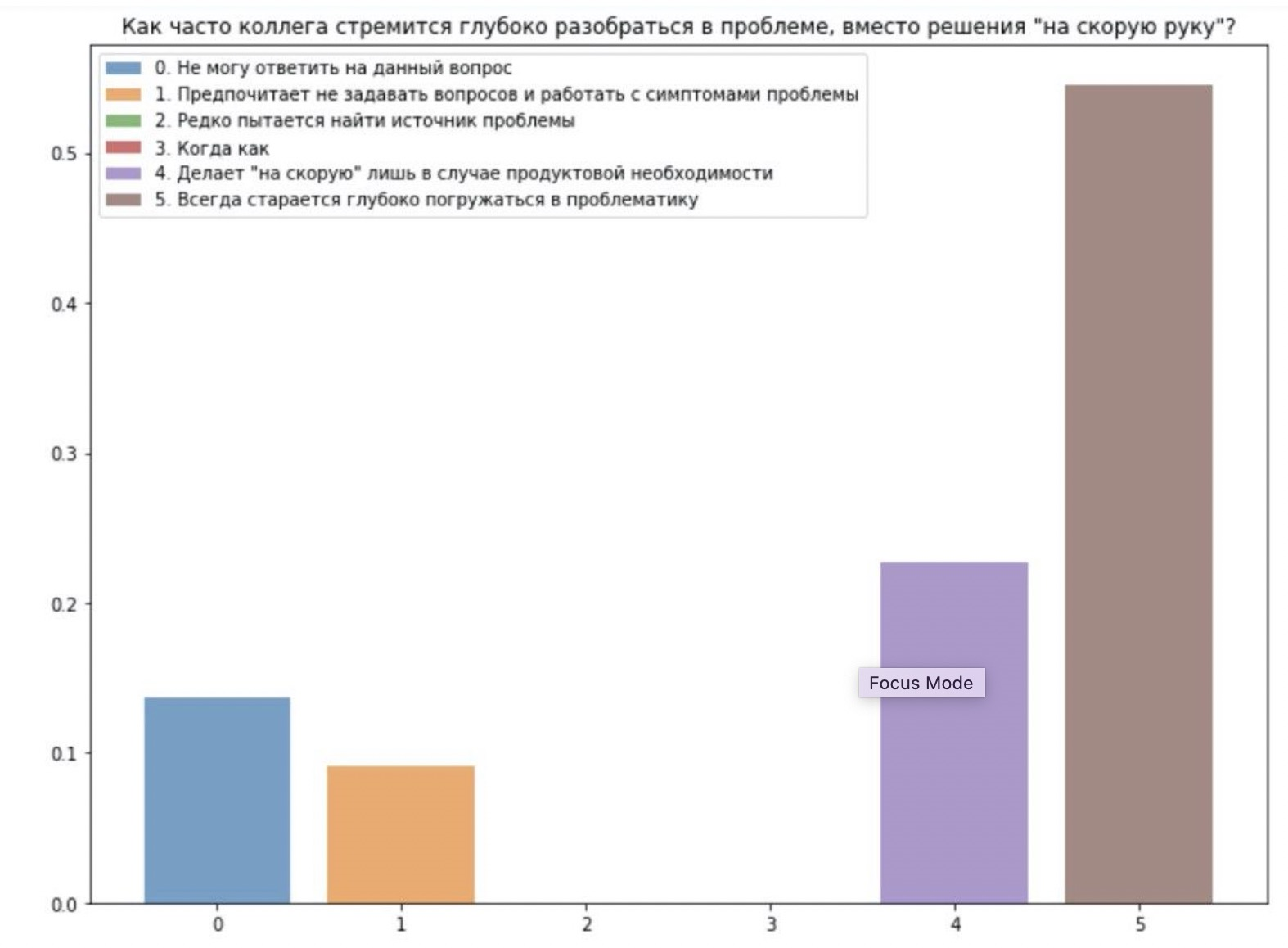

An important aspect of operating such a system is working with the people who fill out the questionnaires. If a person thoughtlessly gives everyone fives, someone needs to work with them and explain why the process matters. In Russia, the school five-point scale comes with a particular attitude toward grades: a C student (a "three") is a so-so character, a B student (a "four") is normal, and an A student (a "five") is someone who works well; that's praise. Failing students (a "two") repeat the year, and, indeed, "there are not many of them, and I have never seen them in our company", as managers usually say about their teams. "Somewhere," but not here. So if you think an employee is good, you give them a four, because a three... well, a three is a three; and if you are friends, you can give a five without hesitation. This leads to skewed ratings and a high percentage of fives in the survey, which degenerates into an almost two-point scale of fours and fives.
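The skew described above can be spotted directly in the raw answers. The sketch below is a minimal illustration, not the authors' tooling: it assumes ratings arrive as a flat list of integers on a 1-5 scale, and the function names and sample data are hypothetical. It reports the share of fours and fives and the "effective number of grades" (the exponent of the Shannon entropy of the grade distribution), which drops toward 2 when the scale collapses into fours and fives.

```python
import math
from collections import Counter

SCALE = range(1, 6)  # grades 1..5, as on the school scale


def rating_shares(ratings, scale=SCALE):
    """Fraction of ratings that fall on each grade (off-scale values are ignored)."""
    counts = Counter(r for r in ratings if r in scale)
    total = sum(counts.values())
    return {grade: (counts[grade] / total if total else 0.0) for grade in scale}


def top_two_share(ratings, scale=SCALE):
    """Share of ratings in the two highest grades (fours and fives on a 1-5 scale)."""
    shares = rating_shares(ratings, scale)
    return sum(shares[grade] for grade in sorted(scale)[-2:])


def effective_scale_size(ratings, scale=SCALE):
    """exp(Shannon entropy) of the grade distribution: roughly how many grades are in use.
    A scale that has collapsed into 'fours and fives' gives a value close to 2."""
    shares = rating_shares(ratings, scale)
    entropy = -sum(p * math.log(p) for p in shares.values() if p > 0)
    return math.exp(entropy)


ratings = [5, 4, 5, 5, 4, 4, 5, 3, 5, 4]  # hypothetical survey answers
print(f"share of fours and fives: {top_two_share(ratings):.2f}")
print(f"effective number of grades: {effective_scale_size(ratings):.2f}")
```

Tracking these two numbers before and after evaluator training is one possible way to see whether the explanations actually widen the range of grades people use.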

Training evaluators is a slow and sad (well, not always sad) process. It includes explaining how the instrument works; how to assess a person correctly, without falling into either admiration after a single interaction or negativity after one rude email; how the grading scale differs from the one used in school; the typical mistakes reviewers make; and so on. It is very important to put people at ease, to move them away from perceiving the process as yet another boring tool, and to dispel the fear that the assessment will affect a colleague's pay. It is fundamentally important not to make hasty personnel decisions and not to reshuffle the team while the results are still fresh.

The analytical process from the HR perspective is well described in an article from Avito, which I highly recommend reading. The Avito team observed a strong bias toward positive ratings: the number of fours ("above expectations") was similar to the number of threes ("meets expectations"). We also ran into debates about "good and evil", even though we used a scale of our own design.

Then opinions diverged. Either it is a strong, friendly team, or one of two things. So we quickly launched a second review with a different team and confirmed that, without additional work on clarifying the scale and calibrating the ratings, you can sometimes get data with high variance. In other words, you need to work with people and take into account their natural propensity for "objective" assessments. Or perhaps this reflects disagreement within the team, which, generally speaking, is also useful to know.

360 reviews are generally used for two purposes: employee development and performance evaluation. It is important to understand that the results may differ depending on how prepared and open the people giving feedback are. When we build a tool to support employee development, it is important to provide anonymous feedback from various sources to help the employee understand their strengths and weaknesses, build skills, and develop missing qualities. The survey focuses on competencies or behaviors closely related to job responsibilities and on the organization's values. When we launch such a tool, we must make it clear to participants that we will not use the results for personnel decisions. Our story is about using the 360 review method for employee development.

Employee development data is needed to assess strengths and areas for development, not to make bonus / talent decisions. It is also important for a company to understand how a person's values correlate with those of the company. 360 results are always shared with the employee and their manager.

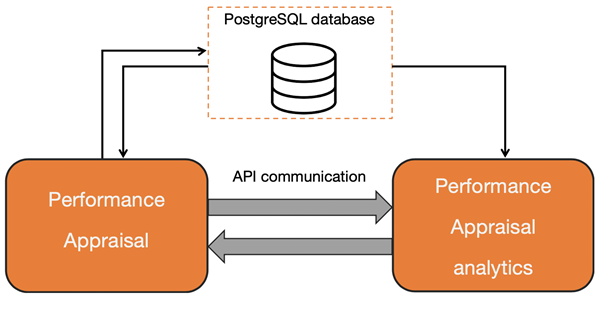

360 survey scores and results are a treasure trove of data for insights and analytics. We use this data to calculate "correction" factors that help obtain more reliable results, to cluster employees by competencies and skills, to compile "profiles" of individual teams, and much more. All these calculations require additional computing power and frameworks, so we moved them into a separate microservice. This logically separates the part that users (from the HR department) see from the "analytical" part, where all additional analytical calculations are performed. This approach lets the two services be developed independently and keeps the computations isolated. The analytical service has no database of its own: all calculations use the data in the main service's database, and the services interact via a REST API.
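A minimal sketch of this split, under stated assumptions: the endpoint path `/api/surveys/<id>/answers`, the base URL, and the answer fields `team` and `score` are hypothetical, not X5's actual API. In the article the analytical side is a Flask app; to stay dependency-free, the sketch shows only the stateless "pull from the main service, compute, return" part using the standard library. The HTTP call is injected as a parameter so the computation can be tested without a network.

```python
import json
import urllib.request
from collections import defaultdict
from statistics import mean
from typing import Callable

# Hypothetical address of the main service (NodeJS + PostgreSQL).
MAIN_SERVICE_URL = "http://main-service.local"


def fetch_json(url: str):
    """GET `url` from the main service and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


def team_averages(survey_id: int, fetch: Callable[[str], list] = fetch_json) -> dict:
    """Compute per-team average scores for one survey.

    The analytical service keeps no database: every call re-reads the raw answers
    from the main service's REST API. The endpoint path and the answer fields
    ("team", "score") are assumptions for illustration.
    """
    answers = fetch(f"{MAIN_SERVICE_URL}/api/surveys/{survey_id}/answers")
    scores_by_team = defaultdict(list)
    for answer in answers:
        scores_by_team[answer["team"]].append(answer["score"])
    return {team: mean(scores) for team, scores in scores_by_team.items()}
```

In a real deployment a function like this would sit behind a Flask route; keeping the transport separate from the arithmetic is what allows the two services to evolve independently.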

The analytical service is a separate server written in Flask, and the main service is implemented in NodeJS with a PostgreSQL database. This, undoubtedly, very complex interaction scheme is shown below:

Consider an example of surveys in two different teams; let's call them team A and team B. Imagine that in team A the employees are friendly and treat each other well, so the average score can be quite high. In contrast, suppose team B is made up of more critical people who honestly give high marks only to those employees who actually perform well.

How do we compare an employee from team A with an employee from team B? We use a special "team" calibration that expresses an employee's score relative to the average score in their team. We can't do without a formula here.

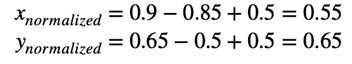

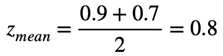

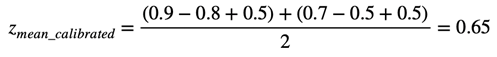

Suppose employee x from team A has a score of 0.9 and team A's average score is 0.85, while employee y from team B has a score of 0.65 and team B's average score is 0.5. After subtracting the team averages, we get the "calibrated" scores: 0.9 - 0.85 = 0.05 for employee x and 0.65 - 0.5 = 0.15 for employee y.

Thus, employee y has a higher calibrated score than employee x, even though x's raw score is higher.
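The team calibration boils down to subtracting the team mean from each score. Below is a minimal sketch, assuming each employee has one aggregated score and the team average is the plain mean of its members' scores. The extra team members `a1` and `b1` are hypothetical, added only so that the team averages come out at 0.85 and 0.5 as in the example.

```python
from collections import defaultdict
from statistics import mean


def team_calibrated(scores, team_of):
    """Return each employee's score minus the average score of their team.

    scores:  {employee: aggregated score}
    team_of: {employee: team name}
    """
    members = defaultdict(list)
    for employee, score in scores.items():
        members[team_of[employee]].append(score)
    team_mean = {team: mean(values) for team, values in members.items()}
    return {employee: score - team_mean[team_of[employee]] for employee, score in scores.items()}


# Hypothetical teams chosen so that the averages match the example:
# team A = {x, a1} -> 0.85, team B = {y, b1} -> 0.5
scores = {"x": 0.9, "a1": 0.8, "y": 0.65, "b1": 0.35}
team_of = {"x": "A", "a1": "A", "y": "B", "b1": "B"}
calibrated = team_calibrated(scores, team_of)
print({employee: round(value, 2) for employee, value in calibrated.items()})
```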

The same idea applies to intra-team normalization. All employees are different and tend to rate their colleagues differently. Say employee x treats all colleagues very well and gives everyone an average score of 0.8, while employee y looks at others more critically and rates them at 0.5 on average. When x and y rate employee z, they may consider z equally good (or equally bad), but each in their own value system. So when averaging scores within the team, we subtract each reviewer's own average rating, calculated from historical data. Suppose x rated z at 0.9 and y rated z at 0.7; the plain average score is then (0.9 + 0.7) / 2 = 0.8.

However, if we subtract the reviewers' historical average ratings, we get ((0.9 - 0.8) + (0.7 - 0.5)) / 2 = 0.15.

After this calibration, we get a metric that takes into account the “value system” of each employee and, therefore, is more “honest”.
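The author calibration can be sketched the same way. Assumptions: every rating is a `(reviewer, ratee, score)` triple, and a reviewer's historical mean is simply the average of all scores they gave in past surveys. The history below is hypothetical, chosen so that x averages 0.8 and y averages 0.5, matching the example.

```python
from collections import defaultdict
from statistics import mean


def reviewer_means(history):
    """Historical average score given by each reviewer.
    history: iterable of (reviewer, ratee, score) triples from past surveys."""
    given = defaultdict(list)
    for reviewer, _ratee, score in history:
        given[reviewer].append(score)
    return {reviewer: mean(scores) for reviewer, scores in given.items()}


def author_calibrated_score(ratee, ratings, means):
    """Average over all ratings of `ratee` of (score - that reviewer's historical mean)."""
    deltas = [score - means[reviewer] for reviewer, target, score in ratings if target == ratee]
    if not deltas:
        raise ValueError(f"no ratings for {ratee!r}")
    return mean(deltas)


# Hypothetical history in which x averages 0.8 and y averages 0.5.
history = [("x", "a", 0.7), ("x", "b", 0.9), ("y", "a", 0.4), ("y", "b", 0.6)]
current = [("x", "z", 0.9), ("y", "z", 0.7)]

raw = mean(score for _, target, score in current if target == "z")
calibrated = author_calibrated_score("z", current, reviewer_means(history))
print(f"raw average: {raw:.2f}, author-calibrated: {calibrated:.2f}")
```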

Importantly, when building a person's profile, we can weight the ratings from different categories of reviewers with different coefficients. There is a lot of evidence that managers tend to assess people more accurately and impartially (this is, in fact, part of the reason they became managers), most likely thanks to greater experience.

By default, every weight is 0.25; that is, the current version gives no preference to any category of respondents, but, as the old joke goes, "the tool is there".
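A minimal sketch of the category weighting, assuming the four categories from the article (colleagues, managers, subordinates, self) and already calibrated per-category scores. How missing categories are handled is not described in the article; skipping them and renormalizing the remaining weights is an assumption of this sketch, as are the manager-heavy weights in the usage example.

```python
# Default weights: no preference for any category of respondents (0.25 each).
DEFAULT_WEIGHTS = {"colleagues": 0.25, "managers": 0.25, "subordinates": 0.25, "self": 0.25}


def weighted_score(category_scores, weights=DEFAULT_WEIGHTS):
    """Weighted average of per-category (already calibrated) scores.

    Categories with no ratings (value None) are skipped and the remaining weights
    are renormalized; this renormalization is an assumption of the sketch.
    """
    present = {cat: score for cat, score in category_scores.items() if score is not None}
    total_weight = sum(weights[cat] for cat in present)
    if total_weight == 0:
        raise ValueError("no weighted categories with ratings")
    return sum(weights[cat] * score for cat, score in present.items()) / total_weight


scores = {"colleagues": 0.1, "managers": 0.3, "subordinates": 0.2, "self": 0.0}
print(weighted_score(scores))  # equal weights
manager_heavy = {"colleagues": 0.2, "managers": 0.5, "subordinates": 0.2, "self": 0.1}
print(weighted_score(scores, manager_heavy))  # "the tool is there"
```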

In other words, having collected ratings calibrated by author, we try to map them into a global "coordinate system" so that we can extract correct insights from the data. Otherwise, biased assessments could lead us to discover some amazing pattern that does not actually exist and, heaven forbid, to start developing an employee in the direction opposite to their profile.

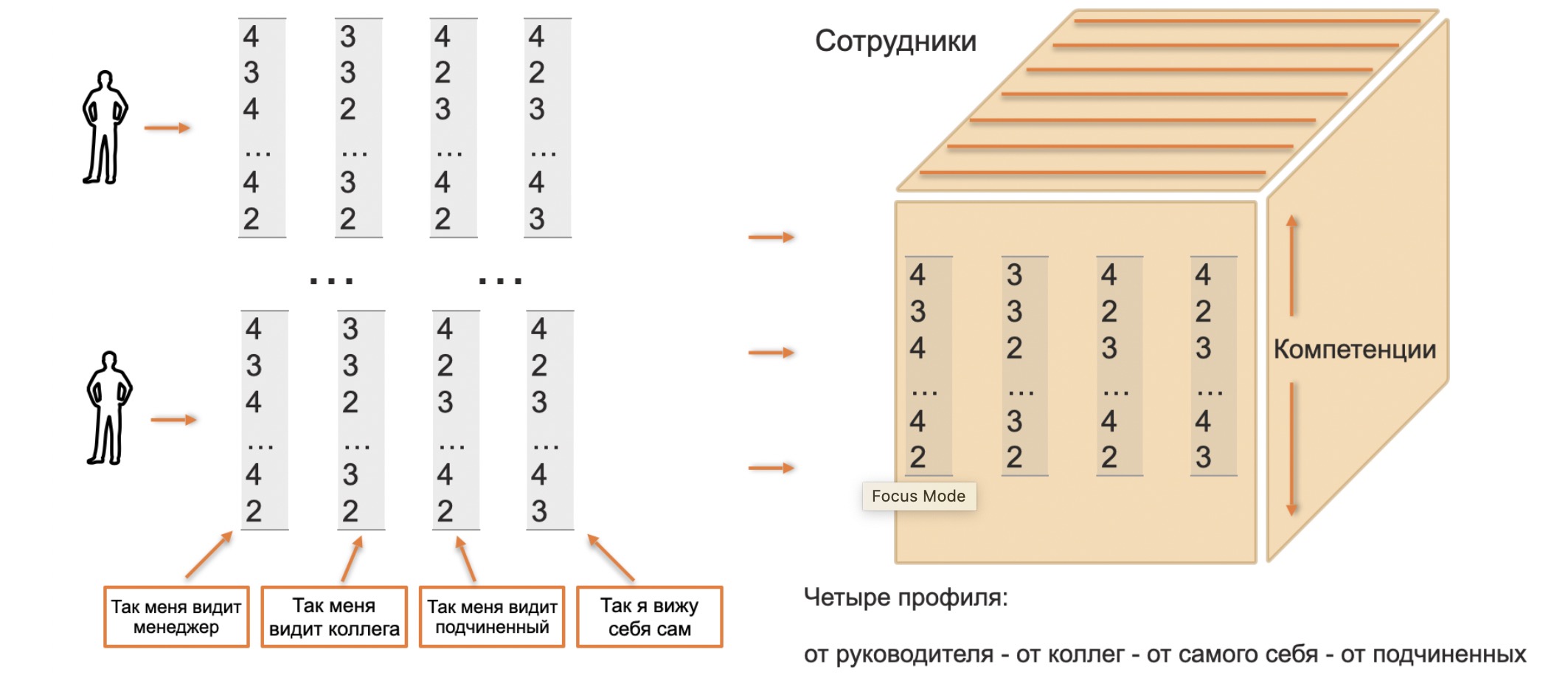

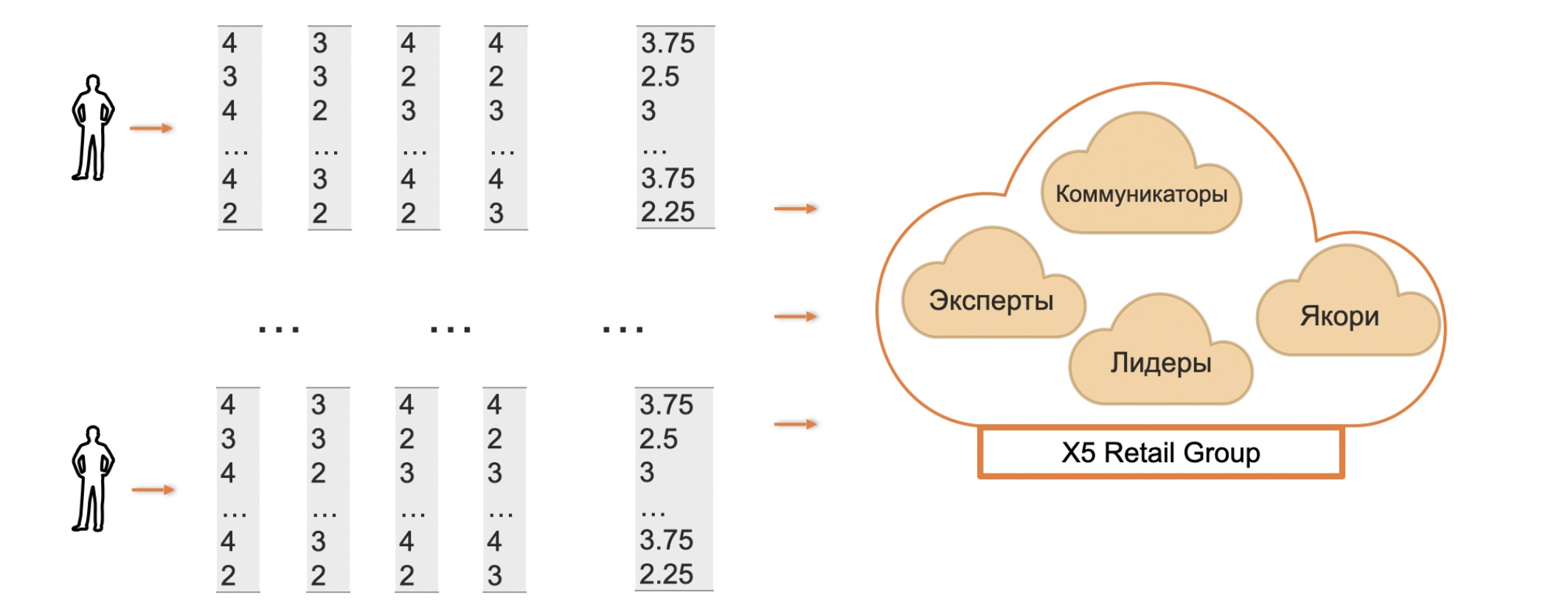

Suppose we have succeeded and compiled vectors that represent each employee's competency profile. Moreover, there are separate vectors from colleagues, managers, subordinates, and the self-assessment. We collect all of this into a cube (strictly speaking, a rectangular parallelepiped, but below I will use the term cube by analogy with OLAP cubes).

Now, by slicing the cube along different axes, we can obtain various analytical relationships. For example, we can fix a competency and look at its distribution across the organization as a whole or across teams. Or we can take the far-right column of manager ratings and look at the variance of ratings within it to see whether there are any surprising findings.
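A minimal sketch of the cube and its slices, assuming each cell is keyed by `(employee, competency, source)` and holds a calibrated score. A plain dictionary stands in for the OLAP-style cube here; the data and the helper `slice_cube` are hypothetical. The two slices mirror the examples in the text: one competency across the organization, and the spread of the managers' "column".

```python
from collections import defaultdict
from statistics import mean, pvariance

# Each cell: (employee, competency, source) -> calibrated score.
# Sources follow the article: colleagues, managers, subordinates, self.
records = [  # hypothetical data
    ("anna", "communication", "colleagues", 0.2),
    ("anna", "communication", "managers", 0.1),
    ("anna", "expertise", "colleagues", -0.1),
    ("anna", "expertise", "managers", 0.0),
    ("boris", "communication", "colleagues", -0.3),
    ("boris", "communication", "managers", 0.3),
    ("boris", "expertise", "colleagues", 0.4),
    ("boris", "expertise", "managers", 0.2),
]
cube = {(employee, competency, source): score for employee, competency, source, score in records}


def slice_cube(cube, employee=None, competency=None, source=None):
    """Fix any subset of the three axes; None leaves an axis free."""
    wanted = (employee, competency, source)
    return {key: value for key, value in cube.items()
            if all(w is None or w == k for w, k in zip(wanted, key))}


# Fix one competency and look at its distribution across the organization.
communication = slice_cube(cube, competency="communication")
print("communication scores:", sorted(communication.values()))

# Take the managers' "column" and look at the spread of their ratings.
managers = slice_cube(cube, source="managers")
print("variance of manager ratings:", round(pvariance(list(managers.values())), 4))

# Average of each competency over the whole organization.
by_competency = defaultdict(list)
for (_, competency, _), score in cube.items():
    by_competency[competency].append(score)
print({c: round(mean(v), 3) for c, v in by_competency.items()})
```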

Extending this logic, we can build charts comparing employees, both within a team and across departments: the so-called cobweb (radar) charts. On the same chart, we can also plot the team's average competency values and see, for a specific person, where they stand out from the team and in which direction. We can also take a team other than the employee's own and compare its average competencies with the person's. And if you get ambitious, you can compare one team with another; what a fun game that can turn into.
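The numbers behind such a cobweb chart are per-competency differences from a reference profile. A minimal sketch, assuming profiles are dictionaries of calibrated competency scores and a group's reference is the plain mean of its members; the teams, names, and helpers are hypothetical, and the plotting itself is left out.

```python
from statistics import mean


def average_profile(profiles):
    """Average competency vector of a group: {employee: {competency: score}} -> {competency: mean}."""
    competencies = sorted({c for profile in profiles.values() for c in profile})
    return {c: mean(p[c] for p in profiles.values() if c in p) for c in competencies}


def deviation(profile, reference):
    """Per-competency difference from a reference profile (positive = above the reference).
    These are the values one would plot on a cobweb (radar) chart."""
    return {c: profile[c] - reference[c] for c in reference if c in profile}


team_a = {  # hypothetical calibrated profiles
    "anna": {"communication": 0.2, "expertise": -0.1},
    "boris": {"communication": -0.2, "expertise": 0.3},
}
team_b = {
    "carl": {"communication": 0.4, "expertise": 0.0},
    "dina": {"communication": 0.2, "expertise": 0.2},
}

print(deviation(team_a["anna"], average_profile(team_a)))           # person vs own team
print(deviation(team_a["anna"], average_profile(team_b)))           # person vs another team
print(deviation(average_profile(team_a), average_profile(team_b)))  # team vs team
```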

We can also analyze clusters of certain types within the organization, for example to find people who may be effective communicators, or experts known for their in-depth approach to problem solving.
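The article does not say which clustering method is used. As one possible illustration, here is plain k-means (Lloyd's algorithm) on competency vectors, written with the standard library only. It uses a deterministic farthest-point initialization instead of random seeding so results are reproducible; the two-dimensional profiles (communication, expertise) are hypothetical.

```python
import math


def farthest_point_init(points, k):
    """Deterministic start: the first point, then repeatedly the point farthest from the chosen ones."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(farthest))
    return centroids


def kmeans(points, k, max_iter=100):
    """Lloyd's k-means on competency vectors; returns (centroids, labels)."""
    centroids = farthest_point_init(points, k)
    labels = None
    for _ in range(max_iter):
        # Assign every employee to the nearest centroid.
        new_labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
        # Move each centroid to the mean of its members (keep it if the cluster is empty).
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:
                centroids[j] = [sum(coord) / len(members) for coord in zip(*members)]
    return centroids, labels


# Hypothetical profiles: (communication, expertise)
profiles = [[0.8, 0.1], [0.7, 0.0], [0.9, 0.2], [0.1, 0.8], [0.0, 0.9], [0.2, 0.7]]
centroids, labels = kmeans(profiles, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]: "communicators" vs "experts"
```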

Analytically simpler findings are also possible, and they are no less interesting. In particular, high variance in the ratings an employee receives from colleagues may indicate that colleagues perceive them in polarized ways.
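Flagging such cases takes only a few lines. A minimal sketch, assuming each employee's colleague ratings are available as a list of calibrated scores; the threshold of 0.1 and the data are hypothetical and would need tuning on real surveys.

```python
from statistics import pvariance


def polarized(colleague_ratings, threshold):
    """Employees whose colleague ratings vary more than `threshold` (population variance).
    colleague_ratings: {employee: [scores received from colleagues]}."""
    result = {}
    for employee, scores in colleague_ratings.items():
        if len(scores) < 2:
            continue  # variance is meaningless for a single rating
        variance = pvariance(scores)
        if variance > threshold:
            result[employee] = variance
    return result


ratings = {  # hypothetical calibrated ratings
    "anna": [0.1, 0.0, 0.2, 0.1],
    "boris": [0.9, -0.8, 0.7, -0.6],
}
print(polarized(ratings, threshold=0.1))  # the threshold is an arbitrary illustration
```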

What if the variance is high between the ratings from colleagues and those from the manager, that is, colleagues and the manager assess an employee very differently? Then it may be worth asking what kind of leader the manager is and whether they are too strict with their team members (or, conversely, too uncritical). Or, if a similar pattern repeats in other teams, one might draw a conclusion about the fundamental superobjectivity of managers in the organization.
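A simple proxy for this comparison is the gap between the manager's score and the mean colleague score, aggregated by team to check whether the pattern repeats. The sketch below assumes one manager score per employee and unique employee names; the rows are hypothetical, and the same-sign test is just one crude way to express "the pattern repeats for other teams".

```python
from collections import defaultdict
from statistics import mean

# Hypothetical rows: (team, employee, scores from colleagues, score from the manager)
rows = [
    ("A", "anna", [0.3, 0.2, 0.4], -0.2),
    ("A", "boris", [0.1, 0.0, 0.2], 0.0),
    ("B", "carl", [0.2, 0.2], 0.1),
]


def manager_gap(colleague_scores, manager_score):
    """Negative: the manager is stricter than colleagues; positive: more lenient."""
    return manager_score - mean(colleague_scores)


gaps = {employee: manager_gap(colleagues, manager) for _, employee, colleagues, manager in rows}

# Aggregate by team: if the gap has the same sign in every team, the pattern is
# about managers in general rather than about one particular leader.
by_team = defaultdict(list)
for team, employee, _, _ in rows:
    by_team[team].append(gaps[employee])
team_gaps = {team: mean(values) for team, values in by_team.items()}
same_sign_everywhere = (all(g < 0 for g in team_gaps.values())
                        or all(g > 0 for g in team_gaps.values()))
print(gaps, team_gaps, same_sign_everywhere)
```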

A large number of missing evaluations for an employee likely indicates that the person interacts little with colleagues. For some teams at X5 this is simply how they operate, and there is nothing surprising about it, but for other teams it will clearly signal that the work process needs to change.
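Measuring this is straightforward if the survey records who was asked to evaluate whom. A minimal sketch, assuming the assignments are a mapping from employee to the set of assigned reviewers and the submitted evaluations are `(reviewer, employee)` pairs; all names are hypothetical.

```python
def missing_share(assigned, received):
    """Fraction of assigned evaluations that were never submitted, per employee.

    assigned: {employee: set of reviewers asked to evaluate them}
    received: set of (reviewer, employee) pairs that were actually submitted
    """
    shares = {}
    for employee, reviewers in assigned.items():
        if not reviewers:
            continue
        missing = sum(1 for reviewer in reviewers if (reviewer, employee) not in received)
        shares[employee] = missing / len(reviewers)
    return shares


assigned = {"anna": {"boris", "carl", "dina", "egor"}, "boris": {"anna", "carl"}}
received = {("boris", "anna"), ("anna", "boris"), ("carl", "boris")}
print(missing_share(assigned, received))  # {'anna': 0.75, 'boris': 0.0}
```

Whether a high share is a warning sign depends on the team, as noted above, so a per-team baseline is probably more useful than a single global threshold.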

In the future, we want to design more subtle questions for the survey form to eliminate biases in ratings at the collection stage, avoiding manual work with service users and endless explanations of how to choose the right ratings and what they mean. We have several ideas that are currently being tested, and we will definitely share the results with you. We also want to apply more sophisticated techniques to the data cube beyond axis slices and clustering. Here we are trying various autoencoders, linear and non-linear, looking for cross-links between views along different axes. In general, there is a lot of work ahead, the data is unruly, and tuning the system is not easy :)

Authors:

Evgeny Makarov

Valery Babushkin

Svyatoslav Oreshin

Daniil Pavlyuchenko

Evgeny Molodkin