All implementation in one header .h file: [fast_mem_pool.h]

Chips, why this allocator is better than hundreds of similar ones - under the cut.

This is how my bike works.

1) In the Release build, there are no mutexes and no waiting cycles for atomic - but the allocator is cyclic, and continuously regenerates resources as they are released by threads. How he does it?

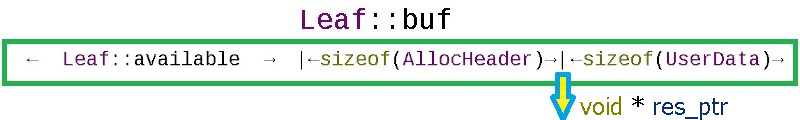

Each allocation of RAM that FastMemPool gives through fmalloc is actually more for a header:

struct AllocHeader {

// : tag_this = this + leaf_id

uint64_t tag_this { 2020071700 };

// :

int size;

// :

int leaf_id { -2020071708 };

};

This header can always be obtained from the pointer owned by the user by rewinding down from the pointer (res_ptr) sizeof (AllocHeader):

By the contents of the AllocHeader header, the ffree (void * ptr) method recognizes its allocations and finds out in which of the sheets of the circular buffer memory is returned :

void ffree(void *ptr)

{

char *to_free = static_cast<char *>(ptr)

- sizeof(AllocHeader);

AllocHeader *head = reinterpret_cast<AllocHeader *>(to_free);

When the allocator is asked to allocate memory, it looks at the current sheet of the array of sheets to see if it can cut off the required size + size of the header sizeof (AllocHeader).

In the allocator, Leaf_Cnt memory sheets are reserved in advance, each sheet of the size Leaf_Size_Bytes (everything is traditional here). In search of an allocation opportunity, the fmalloc (std :: size_t allocation_size) method will circle through the leaves of the leaf_array array, and if everything is busy everywhere, then, provided that the Do_OS_malloc flag is enabled, it will take memory from the operating system larger than the required size by sizeof (AllocHeader) - outside memory is taken from the internal circular buffer or from the OS, the allocator always creates a header with service information. If the allocator runs out of memory and the Do_OS_malloc == false flag, then fmalloc will return nullptr - this behavior is useful to control the load (for example, skip frames from a video camera when the frame processing modules do not keep up with the camera's FPS).

How cycling is implemented

Cyclic allocators are designed for cyclical tasks - tasks should not last forever. For example, it can be:

- allocations for user sessions

- processing video stream frames for video analytics

- the life of combat units in the game

Since there can be any number of memory sheets in the leaf_array array, in the limit it is possible to make a page for the theoretically possible number of combat units in the game, so that with the condition of units dropping out, we are guaranteed to get a free memory sheet. In practice, for video analytics, 16 large sheets are usually enough for me, of which the first few sheets are donated for long-term non-cyclic allocations when the detector is initialized.

How thread safety is implemented

An array of allocation sheets works without mutexes ... Protection against errors of the "data race" type is done as follows:

char *buf;

// available == offset

std::atomic<int> available { Leaf_Size_Bytes };

// allocated ==

std::atomic<int> deallocated { 0 };

Each memory sheet has 2 counters:

- available initialized by the size of the Leaf_Size_Bytes. With each allocation, this counter decreases, and the same counter serves as an offset relative to the beginning of the memory sheet == memory is allocated from the end of the buffer:

result_ptr = leaf_array[leaf_id].buf + available_after;

- deallocated is initialized {0} to zero, and with each deallocation on this sheet (I learn from AllocHeader on which sheet or OS the deal is being dealt with) the counter is increased by the released volume:

const int deallocated = leaf_array[head->leaf_id].deallocated.fetch_add(real_size, std::memory_order_acq_rel) + real_size;

As soon as the counters like this (deallocated == (Leaf_Size_Bytes - available)) match, this means that everything that has been allocated is now released and you can reset the leaf to its original state, but here is a subtle point: what will happen if after making a decision to reset the leaf back to the original, someone allocates another small piece of memory from the sheet ... To exclude this, use the compare_exchange_strong check:

if (deallocated == (Leaf_Size_Bytes - available))

{ // ,

// , , Leaf

if (leaf_array[head->leaf_id].available

.compare_exchange_strong(available, Leaf_Size_Bytes))

{

leaf_array[head->leaf_id].deallocated -= deallocated;

}

}

The memory sheet is reset to its initial state only if at the moment of reset the same state of the available counter remains, which was at the time of the decision. Ta-daa !!!

A nice bonus is that you can catch the following bugs using the AllocHeader header of each allocation:

- redeallocation

- deallocation of someone else's memory

- buffer overflow

- access to someone else's memory area

The second feature is implemented on these opportunities.

2) The Debug compilation provides the exact information where the previous deallocation was during the redeallocation: file name, code line number, method name. This is implemented in the form of decorators around the base methods (fmallocd, ffreed, check_accessd - the debug version of the methods has a d at the end):

/**

* @brief FFREE - free

* @param iFastMemPool - FastMemPool

* @param ptr - fmaloc

*/

#if defined(Debug)

#define FFREE(iFastMemPool, ptr) \

(iFastMemPool)->ffreed (__FILE__, __LINE__, __FUNCTION__, ptr)

#else

#define FFREE(iFastMemPool, ptr) \

(iFastMemPool)->ffree (ptr)

#endif

The preprocessor macros are used:

- __FILE__ - c ++ source file

- __LINE__ - line number in the c ++ source file

- __FUNCTION__ - function name where this happened

This information is stored as a correspondence between the allocation pointer and allocation information in the mediator:

struct AllocInfo {

// : , , :

std::string who;

// true - , false - :

bool allocated { false };

};

std::map<void *, AllocInfo> map_alloc_info;

std::mutex mut_map_alloc_info;

Since speed is not so important for debugging, a mutex was used to protect the standard std :: map. The template parameter (bool Raise_Exeptions = DEF_Raise_Exeptions) affects whether to throw an Exception on error.

For those who want maximum comfort in the Release build, you can set the DEF_Auto_deallocate flag, then all OS malloc allocations will be written (already under the mutex in std :: set <>) and released in the FastMemPool destructor (used as an allocation tracker).

3)To avoid errors like "buffer overflow", I recommend using the FastMemPool :: check_access check before starting to work with allocated memory. While the operating system only complains when you get into someone else's RAM, the check_access function (or the FCHECK_ACCESS macro) calculates by the AllocHeader header whether there will be an overrun of the given allocation:

/**

* @brief check_access -

* @param base_alloc_ptr - FastMemPool

* @param target_ptr -

* @param target_size - ,

* @return - true FastMemPool

*/

bool check_access(void *base_alloc_ptr, void *target_ptr, std::size_t target_size)

// :

if (FCHECK_ACCESS(fastMemPool, elem.array,

&elem.array[elem.array_size - 1], sizeof (int)))

{

elem.array[elem.array_size - 1] = rand();

}

Knowing the pointer of the initial allocation, you can always get the header, from the header we find out the size of the allocation, and then we calculate whether the target element will be within the initial allocation. It is enough to check once before starting the processing cycle at the maximum theoretically possible access. It may very well be that the limiting values will break through the allocation boundaries (for example, in the calculations it is assumed that some variable can only walk in a certain range due to the physics of the process, and therefore you do not make checks for breaking the allocation border).

Better to check once than to kill a week later looking for someone who occasionally writes random data to your structures ...

4) Setting the Default template code at compile time via CMake.

CmakeLists.txt contains configurable parameters, for example:

set(DEF_Leaf_Size_Bytes "65536" CACHE PATH "Size of each memory pool leaf")

message("DEF_Leaf_Size_Bytes: ${DEF_Leaf_Size_Bytes}")

set(DEF_Leaf_Cnt "16" CACHE PATH "Memory pool leaf count")

message("DEF_Leaf_Cnt: ${DEF_Leaf_Cnt}")

This makes it very convenient to edit the parameters in QtCreator:

or CMake GUI:

Further, the parameters are passed to the code during compilation as follows:

set(SPEC_DEFINITIONS

${CMAKE_SYSTEM_NAME}

${CMAKE_BUILD_TYPE}

${SPEC_BUILD}

SPEC_VERSION="${Proj_VERSION}"

DEF_Leaf_Size_Bytes=${DEF_Leaf_Size_Bytes}

DEF_Leaf_Cnt=${DEF_Leaf_Cnt}

DEF_Average_Allocation=${DEF_Average_Allocation}

DEF_Need_Registry=${DEF_Need_Registry}

)

#

target_compile_definitions(${TARGET} PUBLIC ${TARGET_DEFINITIONS})

and in the code override the template values in Default:

#ifndef DEF_Leaf_Size_Bytes

#define DEF_Leaf_Size_Bytes 65535

#endif

template<int Leaf_Size_Bytes = DEF_Leaf_Size_Bytes,

int Leaf_Cnt = DEF_Leaf_Cnt,

int Average_Allocation = DEF_Average_Allocation,

bool Do_OS_malloc = DEF_Do_OS_malloc,

bool Need_Registry = DEF_Need_Registry,

bool Raise_Exeptions = DEF_Raise_Exeptions>

class FastMemPool

{

// ..

};

So the allocator template can be comfortably adjusted with the mouse by turning on / off the checkboxes of the CMake parameters.

5) In order to be able to use the allocator in STL containers in the same .h file, the capabilities of std :: allocator are partially implemented in the FastMemPoolAllocator template:

// compile time :

std::unordered_map<int, int, std::hash<int>,

std::equal_to<int>,

FastMemPoolAllocator<std::pair<const int, int>> > umap1;

// runtime :

std::unordered_map<int, int> umap2(

1024, std::hash<int>(),

std::equal_to<int>(),

FastMemPoolAllocator<std::pair<const int, int>>());

Examples of usage can be found here: test_allocator1.cpp and test_stl_allocator2.cpp .

For example, the work of constructors and destructors on allocations:

bool test_Strategy()

{

/*

* Runtime

* ( )

*/

using MyAllocatorType = FastMemPool<333, 33>;

// instance of:

MyAllocatorType fastMemPool;

// inject instance:

FastMemPoolAllocator<std::string,

MyAllocatorType > myAllocator(&fastMemPool);

// 3 :

std::string* str = myAllocator.allocate(3);

// :

myAllocator.construct(str, "Mother ");

myAllocator.construct(str + 1, " washed ");

myAllocator.construct(str + 2, "the frame");

//-

std::cout << str[0] << str[1] << str[2];

// :

myAllocator.destroy(str);

myAllocator.destroy(str + 1);

myAllocator.destroy(str + 2);

// :

myAllocator.deallocate(str, 3);

return true;

}

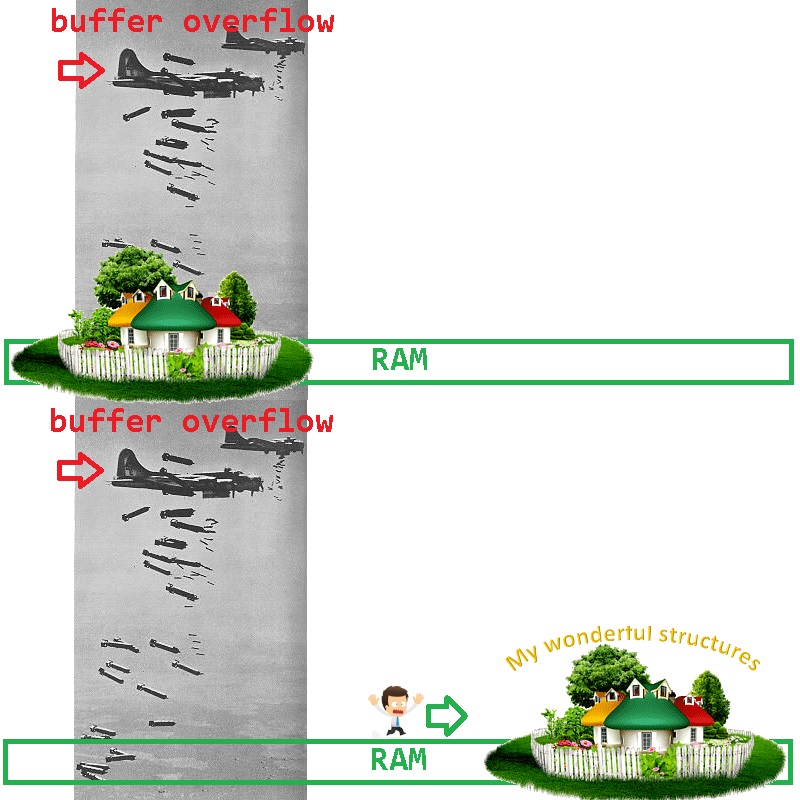

6) Sometimes in a large project you make some kind of module, thoroughly tested everything - it works like a Swiss watch. Your module is included in the Detector, put on a battle - and there sometimes, once a day, the library starts to fall into a dump. Running a dump on the debugger, you find out that in one of the loop traversal of pointers, instead of nullptr, someone wrote the number 8 into your pointer - by going to this pointer, you naturally angered the operating system.

How can we narrow down the range of possible culprits? It is very simple to exclude your structures from the suspects - they need to be moved to RAM to another place (where the saboteur does not bomb):

How can this be done easily with FastMemPool? It's very simple: in FastMemPool, allocation occurs by biting off from the end of the memory page - by requesting a page of memory more than you need for work, you guarantee that the beginning of the memory page remains a testing ground for buggy bombing. For instance:

FastMemPool<100000000, 1, 1024, true, true> bulletproof_mempool;

void *ptr = bulletproof_mempool.fmalloc(1234567);

// ..

// - c ptr

// ..

bulletproof_mempool.ffree(ptr);

If in a new place someone is bombing your structures, then most likely it is you yourself ...

Otherwise, if the library stabilizes, the team will receive several gifts at once:

- your algorithm works like a Swiss watch again

- a buggy coder can now safely bomb an empty memory area (while everyone is looking for it), the library is stable.

- the bombing range can be monitored for changing memory - to set traps on a buggy encoder.

In total , what are the advantages of this particular bike:

- ( / )

- , Debug ,

- , /

- , ( nullptr), — , ( FPS , FastMemPool -).

In our company, the installation of the analysis of 3D geometry of metal sheets required multi-threaded processing of video frames (50FPS). The sheets pass under the camera and on the reflection of the laser I build a 3D map of the sheet. FastMemPool was used to ensure maximum speed of working with memory and safety. If the streams cannot cope with the incoming frames, then saving frames for future processing in the usual way leads to an uncontrolled consumption of RAM. With FastMemPool, in case of overflow, nullptr will simply be returned during allocation and the frame will be skipped - in the final 3D image, such a defect in the form of a step jump indicates that it is necessary to add CPU threads to processing.

Mutex-free operation of threads with a circular memory allocator and a task stack made it possible to process incoming frames very quickly, without frame loss and without overflowing RAM. Now this code runs in 16 threads on an AMD Ryzen 9 3950X CPU, no failures in the FastMemPool class have been identified.

A simplified example-diagram of the video analytics process with RAM overflow control can be seen in the test_memcontrol1.cpp source code .

And for dessert: the same example-scheme uses a non-mutex stack:

using TWorkStack = SpecSafeStack<VideoFrame>;

//..

// Video frames exchanger:

TWorkStack work_stack;

//..

work_staff->work_stack.push(frame);

//..

VideoFrame * frame = work_staff->work_stack.pop();

A working demo stand with all sources is here on gihub .