Using Habitica (an open-source app for building habits and achieving goals, written in Kotlin) as an example, Vitalya Gorbachev, a solution architect at Just AI, shows how to quickly and seamlessly integrate a voice interface into the functionality of any application.

But first, why is voice control of a mobile application convenient at all? Let's start with the obvious.

- We often need to use an app when our hands are busy: cooking, driving, carrying suitcases, doing mechanical work, and so on.

- Voice is an essential tool for people with visual impairments.

These cases are obvious, but in reality it is even simpler: sometimes voice input is just faster! Imagine ordering a plane ticket with a single phrase, "Buy me a ticket for tomorrow for two to Samara", instead of filling out a long form. The assistant can also ask clarifying questions: in the evening or in the afternoon? With or without luggage?

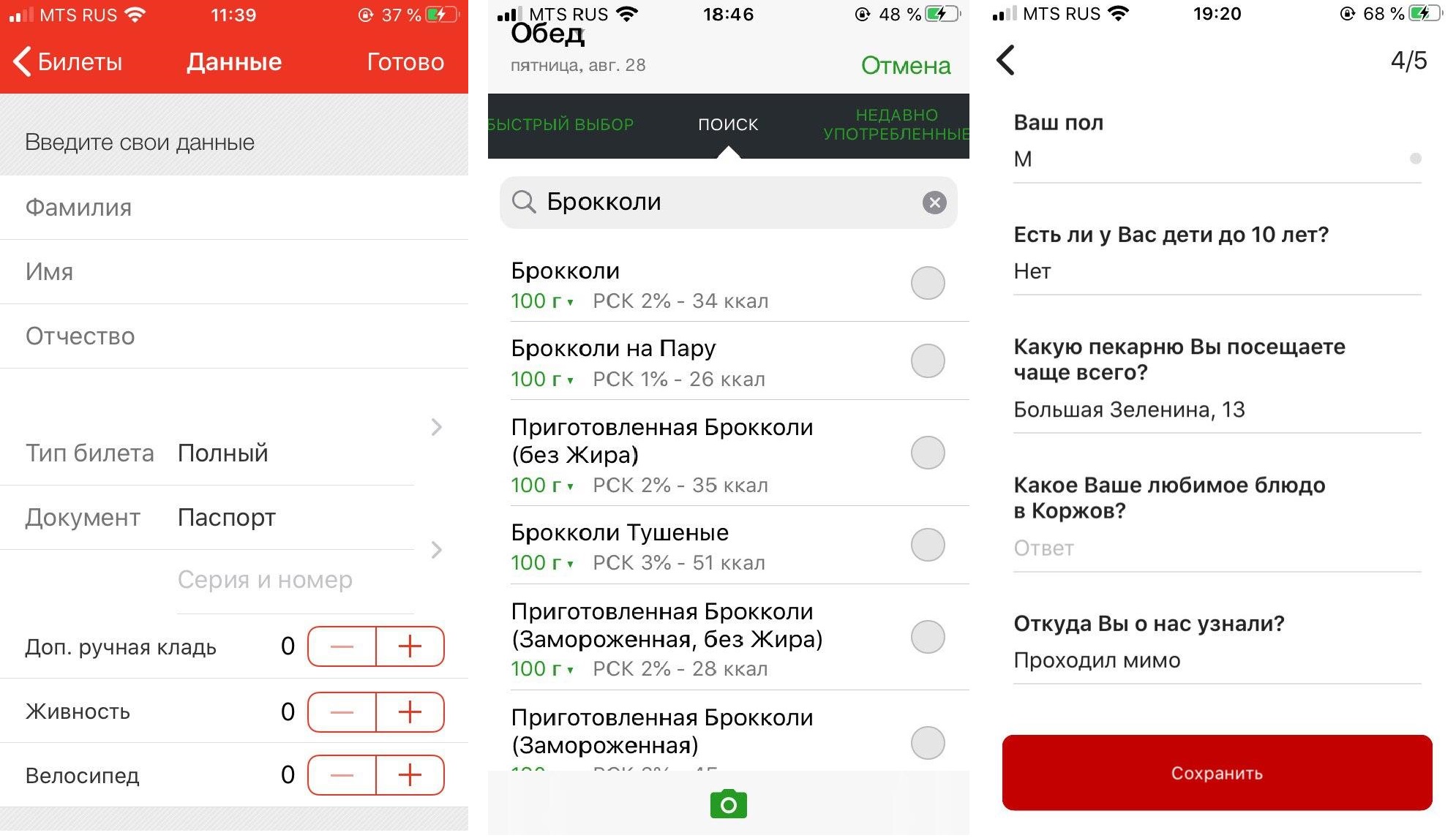

Voice is especially useful in "form-filling" scenarios. It is convenient for almost any long form that requires a certain amount of information from the user, and such forms are present in most mobile applications.

From left to right: Prigorod RZD app, FatSecret food diary (users have to fill out a form several times a day, choosing from hundreds of products), Korzhov bakery app.
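The "one phrase plus clarifying questions" idea from the ticket example is commonly called slot filling. The phrase fills some fields of the form, and the assistant asks only about the empty ones. Below is a minimal sketch, assuming a hypothetical TicketForm with five slots. Turning the spoken phrase into slot values is out of scope here.

```kotlin
// Hypothetical model of the ticket form from the example above.
// Slots already filled by voice are non-null; empty slots are asked for.
data class TicketForm(
    val date: String? = null,
    val passengers: Int? = null,
    val destination: String? = null,
    val timeOfDay: String? = null,
    val withLuggage: Boolean? = null
)

// Returns the clarifying question for the first empty slot,
// or null when the form is complete and can be shown for confirmation.
fun nextQuestion(form: TicketForm): String? = when {
    form.date == null -> "For which date?"
    form.passengers == null -> "How many passengers?"
    form.destination == null -> "Where to?"
    form.timeOfDay == null -> "In the evening or in the afternoon?"
    form.withLuggage == null -> "With or without luggage?"
    else -> null
}
```

"Buy me a ticket for tomorrow for two to Samara" fills date, passengers and destination. The assistant therefore asks only about the time of day and the luggage, then shows the filled-in form for confirmation.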

Because voice assistants are often introduced through a support chat and evolve from there, most companies try to push the app's functionality into the chat: top up your balance, find out something about a product or service... This is not always convenient. With voice input it is outright counterproductive, if only because speech recognition is far from perfect.

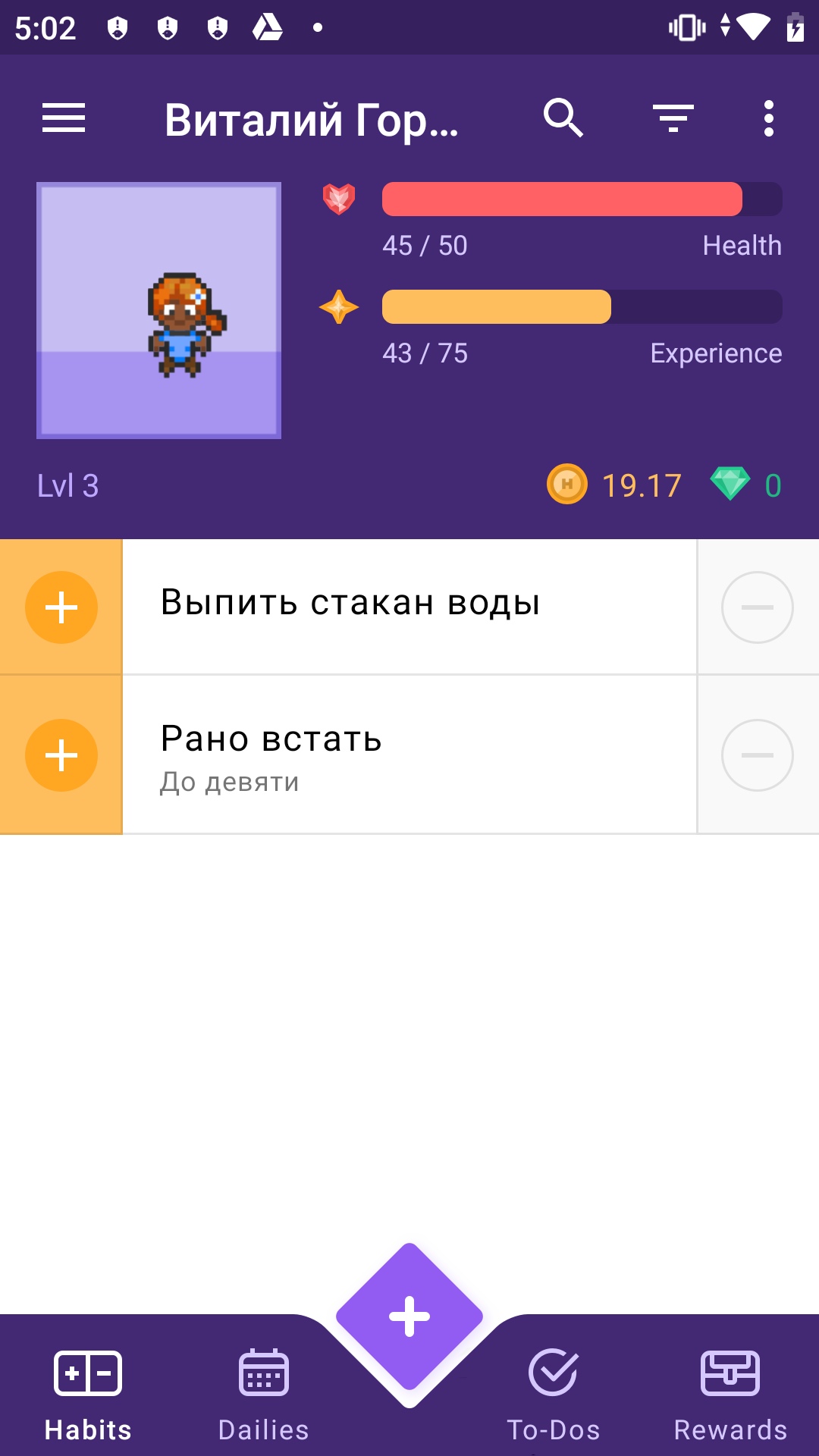

The right approach is to integrate the assistant seamlessly into the app's existing functionality. The form is filled in within the app's own interface, so the user can simply check that everything was understood correctly and tap OK. We decided to show how this can be done using Habitica, an open-source application written in almost pure Kotlin. Habitica is a perfect fit for a voice assistant: here, too, you have to fill out a fairly long form to create a new task. Let's try to replace this dreary process with a single phrase and a few leading questions.

I've split the tutorial into two parts. In this article, we will figure out how to add a voice assistant to a mobile application and implement a basic scenario. In our case, this is a ready-made scenario for asking about the weather and the time, one of the most popular requests to voice assistants worldwide. In the second article, coming soon, we will learn how to open specific screens by voice and implement complex queries inside the application.

What you will need

SDK. We chose Aimybox as the SDK for building the dialog interface. Out of the box, Aimybox provides an assistant SDK and a minimal, customizable UI. For speech recognition, speech synthesis and NLP, you can either pick one of the existing modules or write your own.

Basically, Aimybox implements the voice assistant architecture: it standardizes the interfaces of all these modules and organizes the interaction between them. By using it, you can significantly reduce the time it takes to develop a voice interface for your application. You can read more about Aimybox here or here.
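The sketch below makes the idea of standardized, swappable modules concrete in plain Kotlin. The Recognizer, Synthesizer and DialogEngine interfaces are hypothetical and are not Aimybox's actual classes. Audio is modeled as a String so the sketch runs on a plain JVM.

```kotlin
// Hypothetical module interfaces (not the real Aimybox API).
interface Recognizer { fun recognize(audio: String): String }   // speech-to-text
interface Synthesizer { fun speak(text: String) }                // text-to-speech
interface DialogEngine { fun reply(query: String): String }     // NLP / dialog

// The assistant depends only on the interfaces, so each engine can be
// swapped without touching the pipeline itself.
class VoiceAssistant(
    private val recognizer: Recognizer,
    private val synthesizer: Synthesizer,
    private val dialog: DialogEngine
) {
    // One dialog turn: recognize the phrase, get a reply, speak it.
    fun handle(audio: String): String {
        val query = recognizer.recognize(audio)
        val answer = dialog.reply(query)
        synthesizer.speak(answer)
        return answer
    }
}
```

VoiceAssistant depends only on the three interfaces. Replacing one recognizer with another therefore means passing a different implementation, with no change to the dialog loop. This is the kind of decoupling Aimybox provides for its real modules.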

Scenario creation tool. We will write the scenario in JAICF, an open-source and completely free framework from Just AI for developing voice applications. We will recognize intents with Caila, the NLU service in JAICP (Just AI Conversational Platform). I'll tell you more about them in the next part of the tutorial, when we get to using them.

Smartphone. For testing, we need an Android smartphone on which to run Habitica.

Procedure

First, we fork Habitica (the Release branch) and locate the files that matter most to us. I used Android Studio:

- MainActivity.kt: we will embed the logic there.

- HabiticaBaseApplication.kt: we will initialize Aimybox there.

- activity_main.xml: we will embed the interface element there.

- AndroidManifest.xml: it describes the structure of the application and its permissions.

Following the instructions in the Habitica repository, rename habitica.properties.example and habitica.resources.example by removing the .example suffix. Then create a Firebase project for the app and copy its google-services.json file to the project root.

We run the app to check that the build works. Voilà!

Now let's add the Aimybox dependencies. Add

implementation 'com.justai.aimybox:core:0.11.0'
implementation("com.justai.aimybox:components:0.1.8")

to the dependencies block, and

maven { url 'https://dl.bintray.com/aimybox/aimybox-android-sdk/' }
maven { url "https://dl.bintray.com/aimybox/aimybox-android-assistant/" }

to the repositories block.
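If your module uses the Kotlin DSL (build.gradle.kts) rather than Groovy, the same repositories and dependencies would look roughly like the fragment below. Keep in mind that JFrog shut down Bintray in 2021, so the dl.bintray.com URLs most likely no longer resolve. Check the Aimybox documentation for where the artifacts are published today and substitute that repository.

```kotlin
// build.gradle.kts: Kotlin DSL version of the Groovy lines above.
// The Bintray URLs are kept as in the original setup and may no longer work.
repositories {
    maven { url = uri("https://dl.bintray.com/aimybox/aimybox-android-sdk/") }
    maven { url = uri("https://dl.bintray.com/aimybox/aimybox-android-assistant/") }
}

dependencies {
    implementation("com.justai.aimybox:core:0.11.0")
    implementation("com.justai.aimybox:components:0.1.8")
}
```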

Also add the following block right after compileOptions, so that the Kotlin code targets JVM 1.8 and the project builds correctly:

kotlinOptions {
    jvmTarget = JavaVersion.VERSION_1_8.toString()
}

Now the permissions.

In AndroidManifest.xml, remove the extra flags from the RECORD_AUDIO and MODIFY_AUDIO_SETTINGS permission entries, so that the permission list looks like this:

<uses-permission android:name="android.permission.READ_PHONE_STATE" />

<uses-permission android:name="android.permission.ACCESS_NETWORK_STATE" />

<uses-permission android:name="android.permission.INTERNET" />

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />

<uses-permission android:name="com.android.vending.BILLING" />

<uses-permission android:name="android.permission.RECEIVE_BOOT_COMPLETED"/>

<uses-permission android:name="android.permission.RECORD_AUDIO"/>

<uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS"/>

Now let's initialize Aimybox in HabiticaBaseApplication.kt.

Make the application class implement the AimyboxProvider interface.
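AimyboxProvider is how the rest of the app presumably gets hold of the assistant: the application class exposes the Aimybox instance as a property. In the Aimybox assistant sample, that property is declared roughly as override val aimybox by lazy { createAimybox(this) }. The sketch below reproduces only this lazy-provider pattern, with hypothetical stand-in types (Assistant, AssistantProvider, App), so it runs without the SDK.

```kotlin
// Hypothetical stand-ins for the SDK types.
class Assistant(val apiKey: String)

interface AssistantProvider {
    val assistant: Assistant
}

// Stand-in for HabiticaBaseApplication: the assistant is created on first
// access, and the same instance is returned afterwards.
class App : AssistantProvider {
    var creations = 0
        private set

    override val assistant: Assistant by lazy { createAssistant() }

    private fun createAssistant(): Assistant {
        creations++
        return Assistant(apiKey = "YOUR KEY")
    }
}
```

by lazy is synchronized by default, so the assistant is created exactly once, on first access, even if several threads read the property. App startup does not pay for the assistant until it is actually needed.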

Then do the actual initialization:

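// Requires imports of android.content.Context, java.util.Locale, java.util.UUID and the Aimybox classes
private fun createAimybox(context: Context): Aimybox {
    // Device identifier for the dialog API (a new one is generated on every launch)
    val unitId = UUID.randomUUID().toString()
    // Android's built-in speech synthesis and recognition, set to Russian
    val textToSpeech = GooglePlatformTextToSpeech(context, Locale("ru"))
    val speechToText = GooglePlatformSpeechToText(context, Locale("ru"))
    // Aimybox dialog engine: the first argument is the project's API key
    val dialogApi = AimyboxDialogApi("YOUR KEY", unitId)
    return Aimybox(Config.create(speechToText, textToSpeech, dialogApi))
}

Later, replace YOUR KEY with the API key from the Aimybox Console.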
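Two details of createAimybox() are worth a second look. First, UUID.randomUUID() produces a new unitId on every launch, so the Aimybox backend presumably sees each launch as a new device. Second, the API key is hard-coded, which is risky if the code ends up in a public repository. Below is a minimal sketch of an alternative, assuming hypothetical property names aimybox.apiKey and aimybox.unitId. java.util.Properties stands in for whatever storage you use on the device (SharedPreferences, for example), so the sketch runs on a plain JVM.

```kotlin
import java.util.Properties
import java.util.UUID

// Hypothetical property names; Habitica's own config files do not define them.
val API_KEY_PROPERTY = "aimybox.apiKey"
val UNIT_ID_PROPERTY = "aimybox.unitId"

// Reads the API key from configuration instead of hard-coding it.
fun readApiKey(props: Properties): String =
    props.getProperty(API_KEY_PROPERTY) ?: error("Missing $API_KEY_PROPERTY")

// Returns the stored unit ID, generating and storing one on first use,
// so the same device keeps the same ID across launches.
fun getOrCreateUnitId(store: Properties): String =
    store.getProperty(UNIT_ID_PROPERTY)
        ?: UUID.randomUUID().toString().also { store.setProperty(UNIT_ID_PROPERTY, it) }
```

In createAimybox() you would then pass readApiKey(...) and getOrCreateUnitId(...) instead of the literal key and the fresh UUID. The store still has to be written to persistent storage, since a Properties object lives only in memory.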

Now let's embed the assistant in MainActivity.kt. First, add a FrameLayout to activity_main.xml, right below the FrameLayout with the id bottom_navigation:

<FrameLayout
    android:id="@+id/assistant_container"
    android:layout_width="match_parent"
    android:layout_height="match_parent"/>

In MainActivity itself, first add an explicit permission request to onCreate:

ActivityCompat.requestPermissions(this, arrayOf(android.Manifest.permission.RECORD_AUDIO), 1)
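The user's answer arrives in onRequestPermissionsResult as two parallel arrays. It may well be a refusal, or empty arrays if the dialog was interrupted, so it is worth checking the result before adding the assistant. Below is a minimal JVM-only sketch of that check. RECORD_AUDIO_REQUEST is a hypothetical name for the literal 1 used above, and PERMISSION_GRANTED mirrors the value (0) of Android's PackageManager.PERMISSION_GRANTED.

```kotlin
// Hypothetical constants mirroring the request code above and Android's values.
val RECORD_AUDIO_REQUEST = 1
val PERMISSION_GRANTED = 0
val RECORD_AUDIO = "android.permission.RECORD_AUDIO"

// grantResults[i] is the outcome for permissions[i]; both arrays are empty
// if the request was interrupted, which is treated as "not granted".
fun isRecordAudioGranted(
    requestCode: Int,
    permissions: Array<out String>,
    grantResults: IntArray
): Boolean {
    if (requestCode != RECORD_AUDIO_REQUEST) return false
    val index = permissions.indexOf(RECORD_AUDIO)
    return index >= 0 && index < grantResults.size && grantResults[index] == PERMISSION_GRANTED
}
```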

When the permission is granted, add the assistant fragment to the assistant_container FrameLayout:

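@SuppressLint("MissingPermission")
override fun onRequestPermissionsResult(
    requestCode: Int,
    permissions: Array<out String>,
    grantResults: IntArray
) {
    super.onRequestPermissionsResult(requestCode, permissions, grantResults)
    // Add the assistant only if the RECORD_AUDIO request (code 1) was granted
    // (needs import android.content.pm.PackageManager)
    if (requestCode == 1 && grantResults.firstOrNull() == PackageManager.PERMISSION_GRANTED) {
        supportFragmentManager.beginTransaction()
            .add(R.id.assistant_container, AimyboxAssistantFragment())
            .commit()
    }
}

Also, don't forget to handle onBackPressed: add the following at its start, so that the user can close the assistant after opening it.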

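val assistantFragment = (supportFragmentManager.findFragmentById(R.id.assistant_container)
    as? AimyboxAssistantFragment)
// If the assistant handled Back (closed itself), stop here;
// otherwise fall through to the normal back behavior
if (assistantFragment?.onBackPressed() == true) {
    return
}

In addition, add the following to AppTheme in styles.xml: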

<item name="aimybox_assistantButtonTheme">@style/CustomAssistantButtonTheme</item>
<item name="aimybox_recognitionTheme">@style/CustomRecognitionWidgetTheme</item>
<item name="aimybox_responseTheme">@style/CustomResponseWidgetTheme</item>
<item name="aimybox_imageReplyTheme">@style/CustomImageReplyWidgetTheme</item>
<item name="aimybox_buttonReplyTheme">@style/CustomButtonReplyWidgetTheme</item>

Then define the individual styles just below:

<style name="CustomAssistantButtonTheme" parent="DefaultAssistantTheme.AssistantButton">

</style>

<style name="CustomRecognitionWidgetTheme" parent="DefaultAssistantTheme.Widget.Recognition">

</style>

<style name="CustomResponseWidgetTheme" parent="DefaultAssistantTheme.Widget.Response">

</style>

<style name="CustomButtonReplyWidgetTheme" parent="DefaultAssistantTheme.Widget.ButtonReply">

</style>

<style name="CustomImageReplyWidgetTheme" parent="DefaultAssistantTheme.Widget.ImageReply">

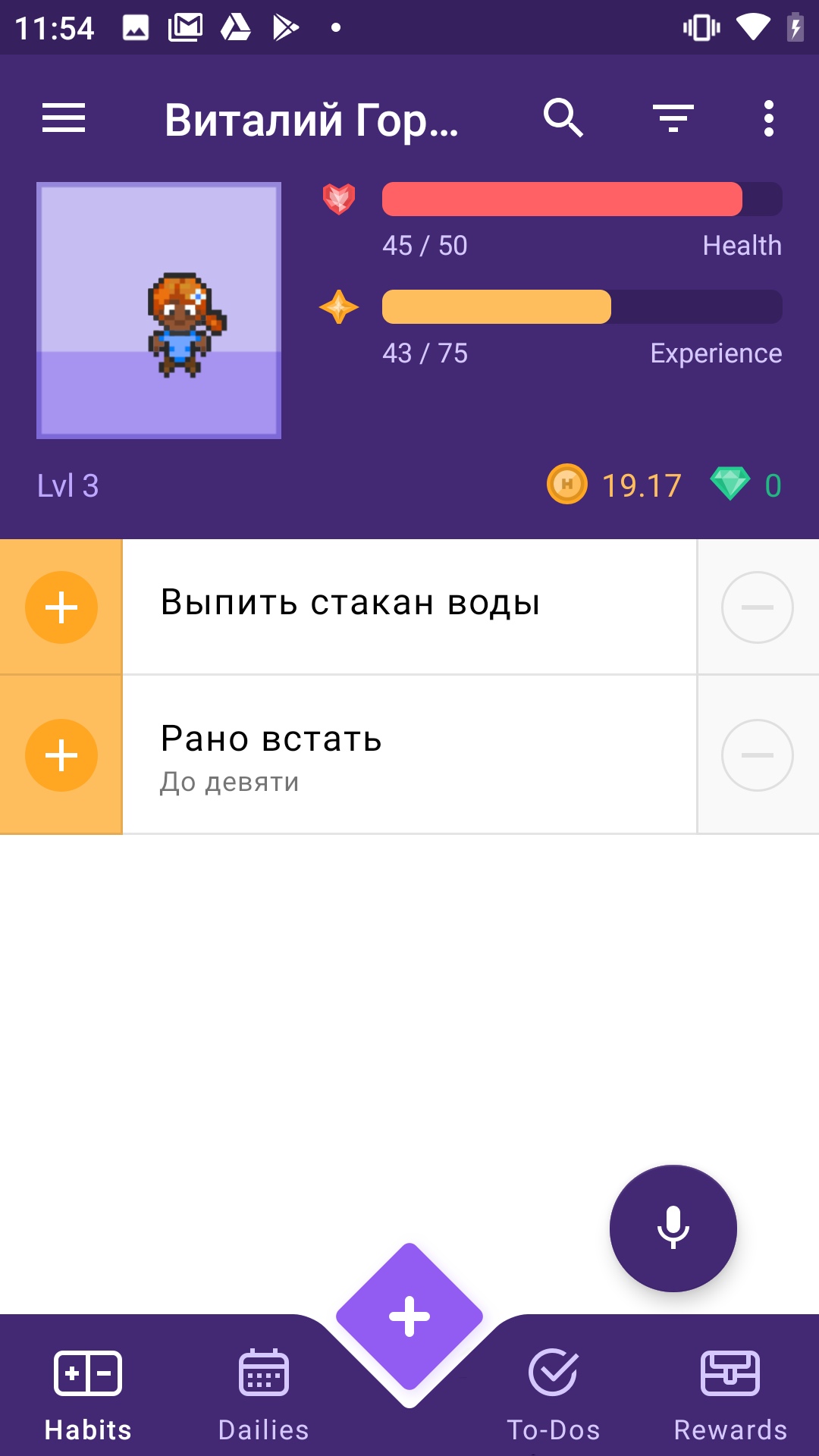

</style>Let's check if a microphone has been added. We launch the application.

The build fails with a bunch of syntax errors. We fix them as the IDE suggests.

Working!

But the microphone button overlaps the bottom navigation bar. Let's raise it a little by adding the following to CustomAssistantButtonTheme in the styles above:

<item name="aimybox_buttonMarginBottom">72dp</item>

Better!

Now let's connect an actual assistant and check that it answers properly. For this we need the Aimybox Console.

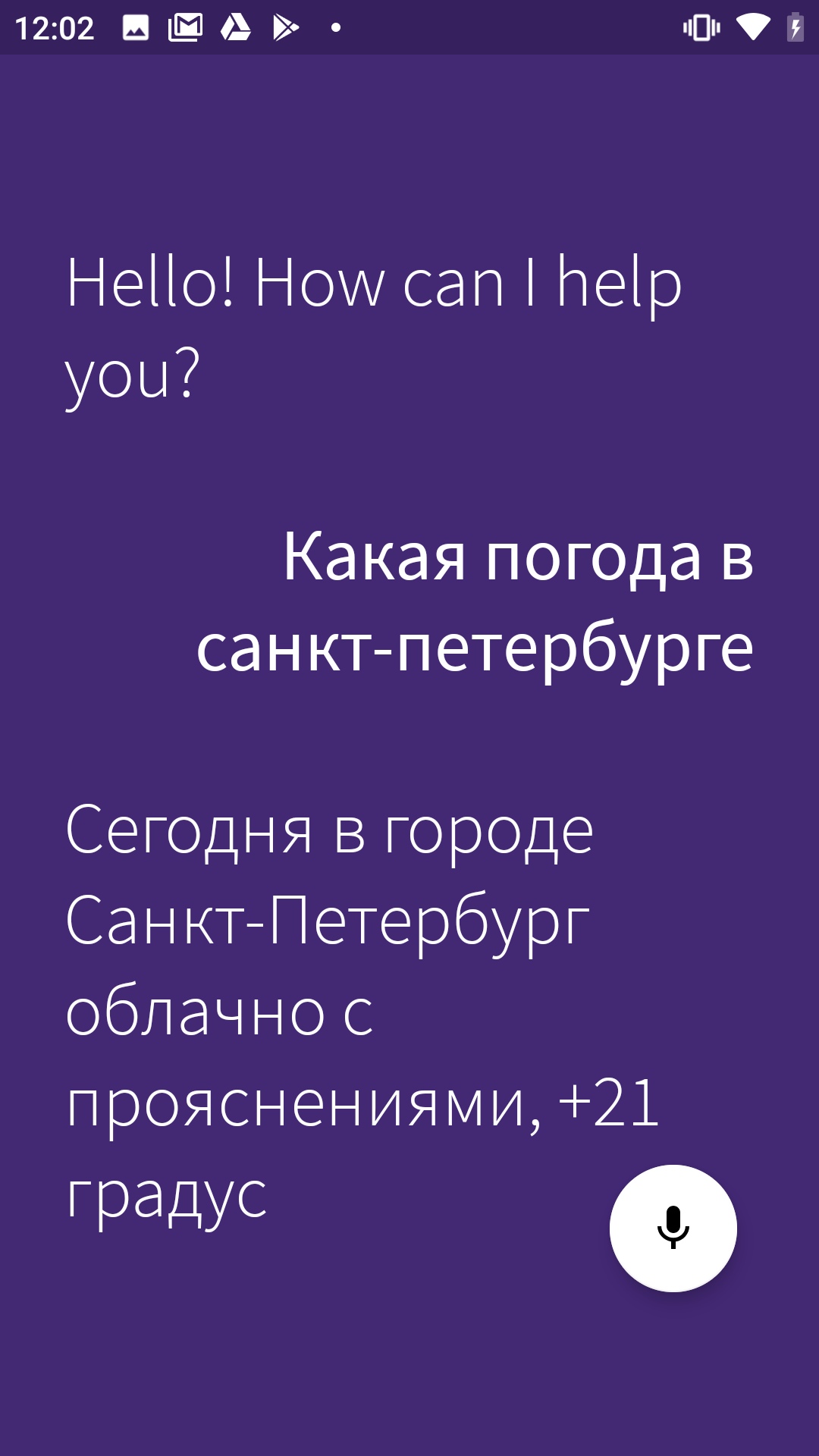

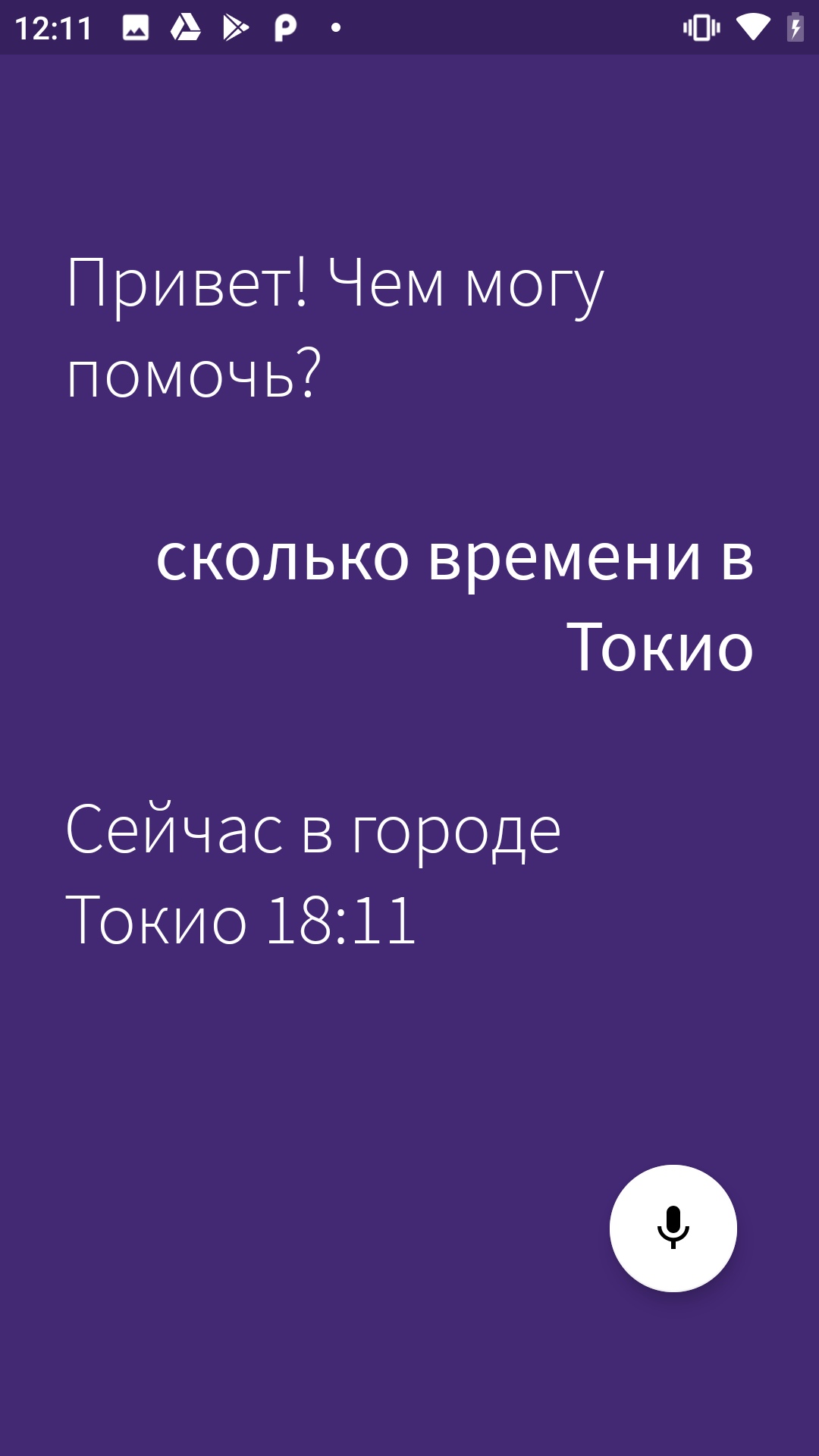

Let's go to app.aimybox.com and sign in with a GitHub account. Then create a new project, connect a couple of skills (I connected DateTime for the test) and try asking the assistant the corresponding questions. The apiKey is in the project settings, in the upper right corner. We insert it into createAimybox instead of YOUR KEY.

private fun createAimybox(context: Context): Aimybox {
    val unitId = UUID.randomUUID().toString()
    // This time without an explicit Locale
    val textToSpeech = GooglePlatformTextToSpeech(context)
    val speechToText = GooglePlatformSpeechToText(context)
    // Paste the apiKey from the Aimybox Console in place of YOUR KEY
    val dialogApi = AimyboxDialogApi("YOUR KEY", unitId)
    return Aimybox(Config.create(speechToText, textToSpeech, dialogApi))
}

Working!

The assistant's texts are in English only, so let's change the welcome message in strings.constants.xml:

<?xml version="1.0" encoding="utf-8"?>

<resources>

<!-- Prefs -->

<string name="SP_userID" translatable="false">UserID</string>

<string name="SP_APIToken" translatable="false">APIToken</string>

<string name="base_url" translatable="false">https://habitica.com</string>

<string name="initial_phrase">Hi! How can I help you?</string>

Hooray!

Here is a link to the code repository.

In the next article about an assistant for Habitica, I will show you how to use your voice not only to check the weather but also to control the app directly: navigate between pages and add habits and tasks.