And this, of course, is a win in a class of its own! But we also had the interesting experience of debugging a robot 2000 km away from us, in a boat garage on the Norwegian coast. Below the cut is the story of how, during quarantine, we remotely gave robots vision and wired them up to “cloud brains”:

In the spring, we built a prototype of the whole system for remote control, 3D streaming and robot training on a two-armed YuMi, and met Aivero, a Norwegian company whose solution for broadcasting the 3D stream from Realsense cameras is very useful to us. So after a hard stretch of work the plans looked cloudless: fly to Italy with my family for a winter month, travel from there to robotics exhibitions around Europe, and finish with a couple of weeks in Stavanger, a city surrounded by beautiful fjords, to discuss integrating the 3D codecs into our system and to try to convince Aivero to build a couple of robots together.

What could possibly go wrong with such a wonderful plan...

Sitting out two weeks of quarantine after returning (not without incident) from the Italian lockdown, I had to dust off my spoken and written English and carry out the second part of the plan over Zoom rather than among the fjords.

Then again, it depends on how you look at it. Quarantine pushed many companies to start working seriously on automating the jobs where a person is not hard to replace. This matters all the more in Western countries, where the minimum wage is above 1500 euros and robotizing simple manual labor makes sense even without the current epidemiological situation.

We connect different robots

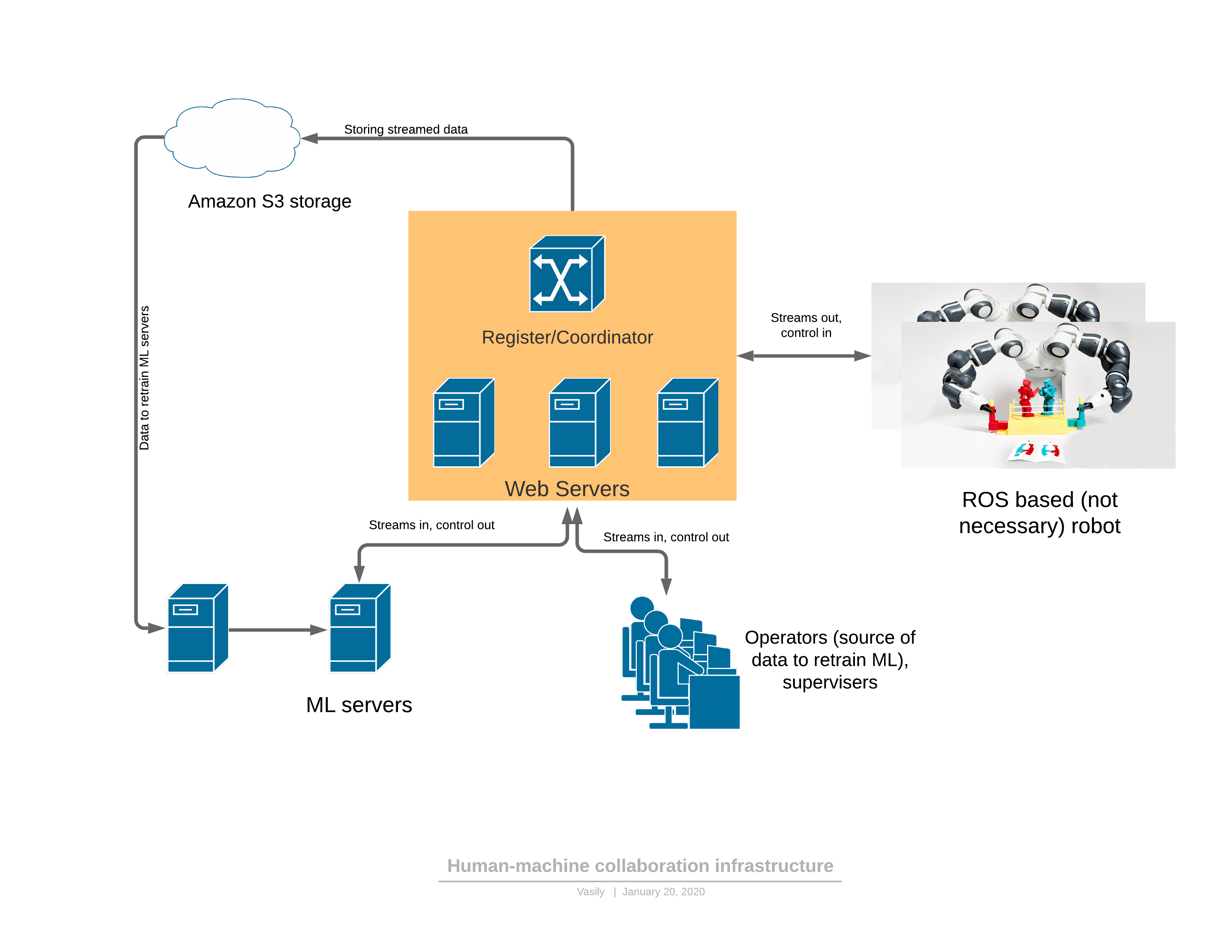

Let me remind you that we train robots on recordings of remote control sessions. That is, the robot connects to the Internet and to our cloud, and starts sending 3D images and sensor readings. It receives commands back and executes them. Within this logic, our task is to teach the ML processor to behave like the operator. 3D is needed to render the scene in virtual reality for the operator. That is convenient, and ML also grasps objects much more accurately when it has a depth map.
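The loop described above can be sketched roughly like this. It is only a minimal illustration under stated assumptions, not the actual system: `FakeRobot`, `Observation`, `run_session`, the `move_joints` operation and the message layout are all hypothetical stand-ins, and a plain function call replaces the network round trip to the cloud.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Observation:
    """One upstream message: a depth map plus sensor readings (hypothetical layout)."""
    depth_mm: List[List[int]]   # tiny stand-in for a real depth image
    joint_angles: List[float]   # example sensor reading


@dataclass
class FakeRobot:
    """Stand-in for a real arm so the loop can run without hardware."""
    joint_angles: List[float] = field(default_factory=lambda: [0.0] * 6)
    executed: List[dict] = field(default_factory=list)

    def observe(self) -> Observation:
        return Observation(depth_mm=[[500, 510], [505, 498]],
                           joint_angles=list(self.joint_angles))

    def execute(self, command: dict) -> None:
        self.executed.append(command)
        if command["op"] == "move_joints":   # hypothetical operation name
            self.joint_angles = list(command["target"])


def run_session(robot: FakeRobot,
                decide: Callable[[dict], dict],
                steps: int) -> List[Tuple[str, dict]]:
    """Send an observation, receive a command, execute it; keep the pairs as training data."""
    log = []
    for _ in range(steps):
        obs = robot.observe()
        # Serialize the observation as it might travel to the cloud
        payload = json.dumps({"depth_mm": obs.depth_mm, "joints": obs.joint_angles})
        # The decision comes from an operator in VR or, after training, from the ML processor
        command = decide(json.loads(payload))
        robot.execute(command)
        log.append((payload, command))
    return log


def operator_policy(observation: dict) -> dict:
    """Toy 'operator': nudge the first joint by 0.1 rad on every step."""
    target = list(observation["joints"])
    target[0] += 0.1
    return {"op": "move_joints", "target": target, "source": "operator"}


robot = FakeRobot()
training_log = run_session(robot, operator_policy, steps=3)
print(len(training_log), "recorded (observation, command) pairs")
```

The point of the structure is that `decide` is pluggable: the same loop works whether the commands come from a person or from a model trained on the recorded pairs.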

By design, we can connect a wide variety of robots to our cloud, but building all of them ourselves would be a very thorny path. We focus on their brains, on learning.

As a result, we agreed that Aivero would build a universal one-armed robot with 3D eyes (let's call it the "Unit"), while we take care of all the cloud robotics.

The priorities were simplicity and low cost for the end customer and, of course, versatility. We want to lower the entry threshold for automating simple manual labor. Ideally, even a small business owner with no special skills should be able to buy or rent our “Unit”, put it at the workplace and start it up.

We thought for a couple of weeks, tested hypotheses for a couple of months, and this is what came out (the version with a Jetson AGX in the base and a different overview camera from the one in the title photo):

And a closer look at the spotlight:

What it consists of:

- Jetson NX

- two Realsense 3D cameras (one for the overview, the other for the work area)

- spotlight

- vacuum pump if needed

- robotic arm (Eva / UR / ABB YuMi) with a vacuum or mechanical gripper

- Internet over WiFi or cable

This telescopic stand, with the compute unit and vacuum pump in its base, is placed next to the robot's work area, connects to the Internet (for example, to WiFi via a QR code), and immediately starts on the task with little or no configuration.

Here you can estimate the cost right away. The most affordable robotic arm, Eva, costs 8000 euros (it is not supplied in Russia), while the UR10 costs almost 50,000 euros. It should be noted, though, that UR claims much greater reliability, so it may not turn out much more expensive in the long run. And they have been getting cheaper lately. The rest of the kit costs about 2000 euros.
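Putting the quoted numbers together gives a rough feel for the total. The sketch below only adds up the prices mentioned in the text and, as a deliberately naive yardstick, divides by the 1500 euro minimum wage mentioned earlier. It ignores integration, maintenance, operators and everything else, so treat the result as an order of magnitude, not a business case.

```python
# Prices quoted in the text, in euros
ARM_PRICE_EUR = {"Eva": 8_000, "UR10": 50_000}   # the UR10 is "almost" 50,000, so this is an upper bound
REST_OF_KIT_EUR = 2_000                           # "about 2000": everything except the arm
MIN_WAGE_EUR_PER_MONTH = 1_500                    # "above 1500", so this is a lower bound


def unit_cost(arm: str) -> int:
    """Rough hardware cost of one Unit with the given arm."""
    return ARM_PRICE_EUR[arm] + REST_OF_KIT_EUR


def months_of_minimum_wage(arm: str) -> float:
    """Naive comparison: how many monthly minimum wages the hardware costs."""
    return unit_cost(arm) / MIN_WAGE_EUR_PER_MONTH


for arm in ARM_PRICE_EUR:
    print(f"{arm}: ~{unit_cost(arm)} EUR, "
          f"about {months_of_minimum_wage(arm):.1f} monthly minimum wages")
```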

ABB YuMi IRB 14050

We had already worked with the two-armed YuMi, but here we tried the newer IRB 14050, which is essentially just one arm cut off from it.

Briefly, what we liked:

- precision and mechanical workmanship

- high sensitivity to collisions and dampers on the joints

And what we didn't:

- collisions and emergency situations are difficult to resolve remotely

- the limited angular range of some joints makes trajectories complicated even for seemingly simple movements that other 6-axis arms handle easily

- low payload compared with its peers

- additionally requires uploading (and sometimes debugging) a program written in ABB's own programming language, which handles TCP commands from the computer

And now in more detail.

This is where we spent the most time. The recipe for getting it running is far from simple:

- Take a Windows machine, because otherwise you won't be able to install ABB's RobotStudio.

- https://github.com/BerkeleyAutomation/yumipy: RAPID (ABB's language) and a Python API for YuMi, IRB 14050 / IRB 14000.

- An IRB14000 URDF for ROS MoveIt (IRB14000, IRB14050).

- ROS MoveIt and the Python API.

- FlexPendant for OmniCore.

Of course, this is only one possible route to making YuMi obey, and it leaves out all the little things you can trip over along the way.

Eva

Briefly, what we liked:

- the price, of course

- a simple and concise API

And the cons:

- no collision detection (announced for the fall release)

- positioning accuracy: the manufacturer still has work to do here, but it is good enough for us

The ease of control really is captivating:

Run pip install evasdk, and then:

    import evasdk

    # host_ip and token are the arm's address and its API token
    eva = evasdk.Eva(host_ip, token)

    with eva.lock():
        eva.control_wait_for_ready()
        eva.control_go_to([0, 0, 0, 0, 0, 0])

And the robotic arm comes to life and does as it is told!

Of course, later on we did manage to overflow the logs in the arm's controller, after which it stopped responding. But credit where it is due: opening an issue in the manufacturer's git repository was enough for them to get to the bottom of it (and led to a couple of calls with a whole panel of their people about our problems).

And in general, Automata (the maker of Eva) is great! I hope they keep growing in the robotics market and make robots much more affordable and simpler than they are now.

UR

What we liked:

- excellent mechanics and high precision

- large ranges of joint angles, which makes trajectory planning much easier

- collisions can be resolved in VNC Viewer by connecting to the robot's computer

- well supported and debugged in the ROS ecosystem

Cons:

- outdated OS on the UR controller, with no security updates for about a year and a half

- the communication protocol is still not the most modern, although the available open-source libraries cover it well

From Python, the arm can be driven in two main ways:

- Install https://github.com/SintefManufacturing/python-urx and enjoy. The listing is slightly longer than with evasdk, so I will not give it here. Judging by the issue tracker, there are also known compatibility issues with the newer arms. We also had to patch a few things for ourselves, since the library does not implement all motion modes, but those are details.

- The “ROS way” (https://github.com/ros-industrial/universal_robot): UR with MoveIt under ROS.

We try to avoid ROS, because part of what it does (the message broker) is already handled by RabbitMQ in our system, and ROS seriously complicates the technology stack. So for the cases where obstacles have to be avoided, we wrap ROS in a microservice on the server side.

And now for the trick!

Just so you understand what a UR is like:

That is, every little hiccup has to be cleared on the robot's touch panel. And so as not to torment our colleague from Aivero by sending him to the boat garage five times a day, we needed some way to get in there remotely.

It turned out that the UR controller runs Linux (on a fairly capable x86 processor, by the way).

We type ssh IP ... user: root, password: easybot.

And you're on Debian Wheezy.

So we install a VNC server and find ourselves in full command of the robot! (One caveat: Wheezy has not been updated for 2 years, so you cannot simply install a VNC server, because its package repositories are obsolete. But there is a link to a “magic file” that makes it possible.)

By the way, when we showed Universal Robots our demo, they said that this kind of remote control requires a new safety certification procedure. Fair enough. I am very curious how Smart Robotics handles this in general. I cannot imagine that targets computed by computer vision could be 100% safe for the people nearby.

It's time to teach the robot to grab the boxes

Let me remind you that we show the robot what to do in VR:

That is, for each movement we record what the scene looked like and which command was given, for example:

    {"op": "pickup_putdown_box",
     "pos1": [441.1884, -112.833069, 151.29303],
     "pos2": [388.1267, 91.0179138, 114.847595],
     "rot1": [[0.9954941, 0.06585537, -0.06822499], [0.0917332, -0.851038456, 0.517028868], [-0.0240128487, -0.52095747, -0.85324496]],
     "rot2": [[0.992139041, 0.102700718, -0.07150351], [0.100485876, -0.99436, -0.0339238755], [-0.0745842, 0.026472155, -0.996863365]],
     "calibration": [[-0.01462146, 0.9814359, -0.191232175, 551.115051], [0.9987302, 0.0051134224, -0.0501191653, -6.613386], [-0.0482108966, -0.191722155, -0.9802644, 771.933167]],
     "box": [[474.331482, -180.079529, 114.765076], [471.436157, -48.88102, 188.729553], [411.868164, -180.27713, 112.670532], [476.105164, -148.54512, 58.89856]],
     "source": "operator"}

In general, this is enough for us to train the networks to determine an object's bounding box in space and where to grab it.
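Before records like this go into training, it is worth checking that they are well formed. The sketch below is one possible sanity check, not part of the actual pipeline: it assumes that `pos1`/`rot1` and `pos2`/`rot2` are the pick-up and put-down poses (the record itself does not say so), and the helper names `is_orthonormal`, `validate` and `travel` are made up for the example. The record is a trimmed copy of the one above.

```python
import json
import math

# Trimmed copy of the example record (only the fields checked here)
RECORD = json.loads("""
{"op": "pickup_putdown_box",
 "pos1": [441.1884, -112.833069, 151.29303],
 "pos2": [388.1267, 91.0179138, 114.847595],
 "rot1": [[0.9954941, 0.06585537, -0.06822499],
          [0.0917332, -0.851038456, 0.517028868],
          [-0.0240128487, -0.52095747, -0.85324496]],
 "rot2": [[0.992139041, 0.102700718, -0.07150351],
          [0.100485876, -0.99436, -0.0339238755],
          [-0.0745842, 0.026472155, -0.996863365]],
 "source": "operator"}
""")


def is_orthonormal(rows, tol=1e-3):
    """True if the three rows are unit length and mutually perpendicular."""
    for i in range(3):
        for j in range(3):
            dot = sum(a * b for a, b in zip(rows[i], rows[j]))
            expected = 1.0 if i == j else 0.0
            if abs(dot - expected) > tol:
                return False
    return True


def validate(record):
    """Return a list of problems found in one command record (empty if none)."""
    problems = []
    for key in ("pos1", "pos2"):
        if len(record[key]) != 3:
            problems.append(f"{key} is not a 3D point")
    for key in ("rot1", "rot2"):
        if not is_orthonormal(record[key]):
            problems.append(f"{key} is not orthonormal")
    return problems


def travel(record):
    """Straight-line distance between the two positions, in the record's own units."""
    return math.dist(record["pos1"], record["pos2"])


print(validate(RECORD), round(travel(RECORD), 1))
```

A check like this catches a corrupted or half-written record before it silently degrades the training set.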

So we sit for half an hour and show the robot how to juggle 4 types of boxes, which gives us about 100 examples. We press the magic button... well, more precisely, we run sudo docker run -e INPUT_S3_FOLDER=... -e OUTPUT_S3_FOLDER=... rembrain/train_all_stages:dev. And go to sleep. In the morning, the container sends a message to the ML processor to update the weights, and with bated breath (although the manufacturers lent us the robots for testing free of charge, they cost serious money), we launch and...

OOPS...

I must say that not a single robot was harmed during debugging. I think solely due to incredible luck.

One day my 2-year-old son came up and decided to play with the VR tracker. He climbed onto a chair, took it from the windowsill... and sent the UR10 on an unimaginable journey, pushing the camera bar aside and putting the robotic arm into a rather tricky position. So I had to add some safeguards to the controls, plus a second overview camera, because otherwise you sometimes simply cannot see where the arm has gone and whether it is safe to move it.
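A safeguard of the kind mentioned above can be as simple as refusing to pass wild targets straight to the arm. The sketch below shows one possible approach, not the one actually used: clamp every requested position into an allowed box, then limit how far the arm may move in a single command. The workspace bounds, the step limit and the names `Workspace` and `guard` are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class Workspace:
    """Axis-aligned box the tool is allowed to stay in (hypothetical limits)."""
    lo: Tuple[float, float, float]
    hi: Tuple[float, float, float]


# Hypothetical values, in the same units as the command positions
WORKSPACE = Workspace(lo=(200.0, -300.0, 50.0), hi=(600.0, 300.0, 400.0))
MAX_STEP = 50.0


def guard(current: Sequence[float],
          target: Sequence[float],
          workspace: Workspace = WORKSPACE,
          max_step: float = MAX_STEP) -> list:
    """Return a safe target: clamped into the workspace, at most max_step away.

    Assumes the current position is already inside the workspace, so the
    shortened move stays inside it too.
    """
    clamped = [min(max(t, lo), hi)
               for t, lo, hi in zip(target, workspace.lo, workspace.hi)]
    step = math.dist(current, clamped)
    if step <= max_step:
        return clamped
    # Too far for one command: move max_step along the same direction
    scale = max_step / step
    return [c + (t - c) * scale for c, t in zip(current, clamped)]


here = [400.0, 0.0, 200.0]
print(guard(here, [410.0, 0.0, 200.0]))        # a small move passes through
print(guard(here, [5000.0, -9000.0, 1000.0]))  # a toddler-grade move gets tamed
```

With a guard like this in the control path, a tracker waved around by a child produces a series of short, bounded moves instead of one long excursion.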

Joking aside, the detection accuracy for such simple boxes exceeded 99.5% in our tests, even with a small training sample of several hundred examples. The main source of problems here is no longer computer vision but the surrounding complications: for example, anomalies in the initial arrangement of objects, or unexpected interference in the frame. But that is exactly why we are building a learning system with operators in the loop: to be ready for anything and to solve problems without involving people on site.

One more algorithm, about my being in the backend and a mistake in the UI frontend

bin-picking «bin-stuffing», .. . — . , .

Backend

, websocket-, :

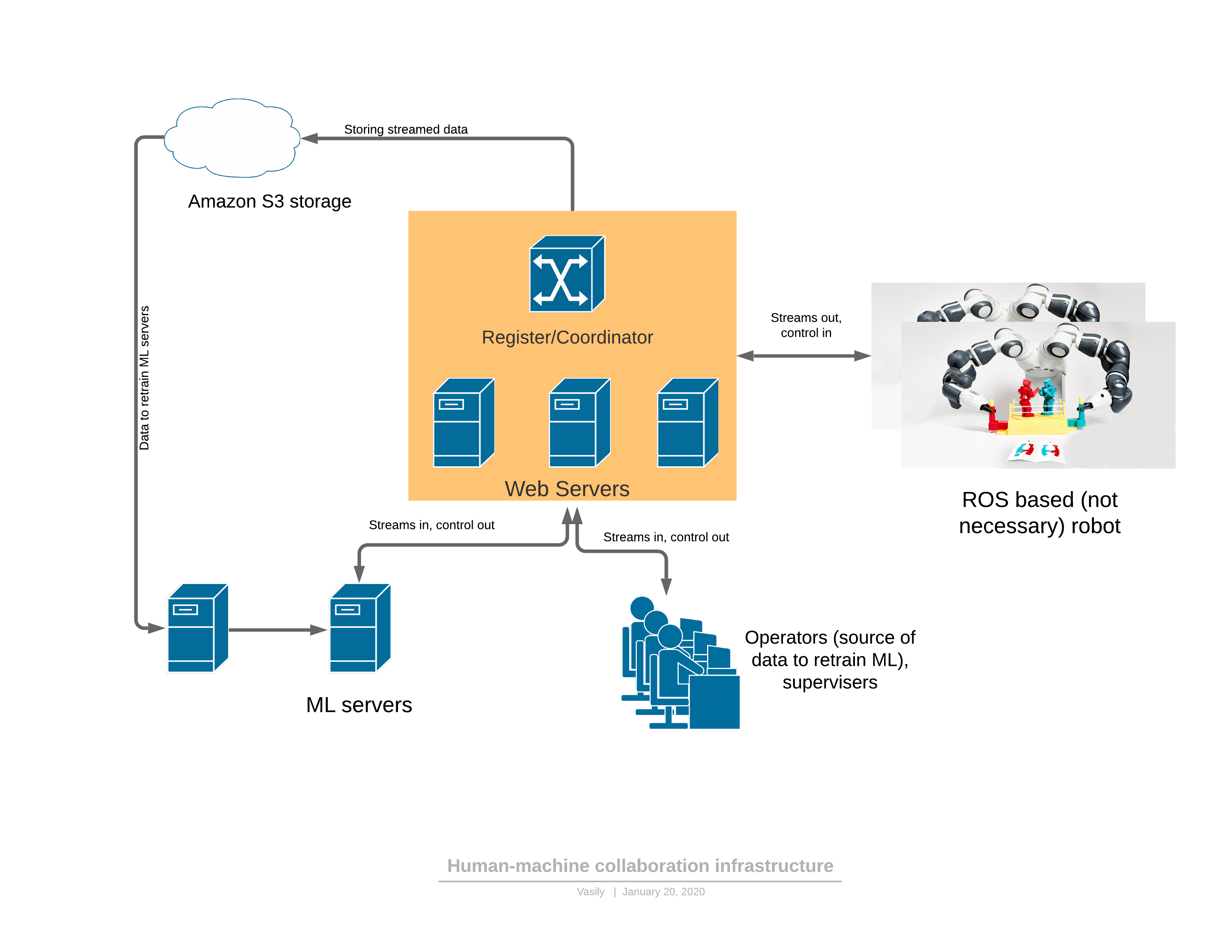

, Coordinator . , Rabbit mongoDB, , ( ). , .

, , backend- , ML .

UI

. , UI , . .

AWS console, Yandex console, , , , . .

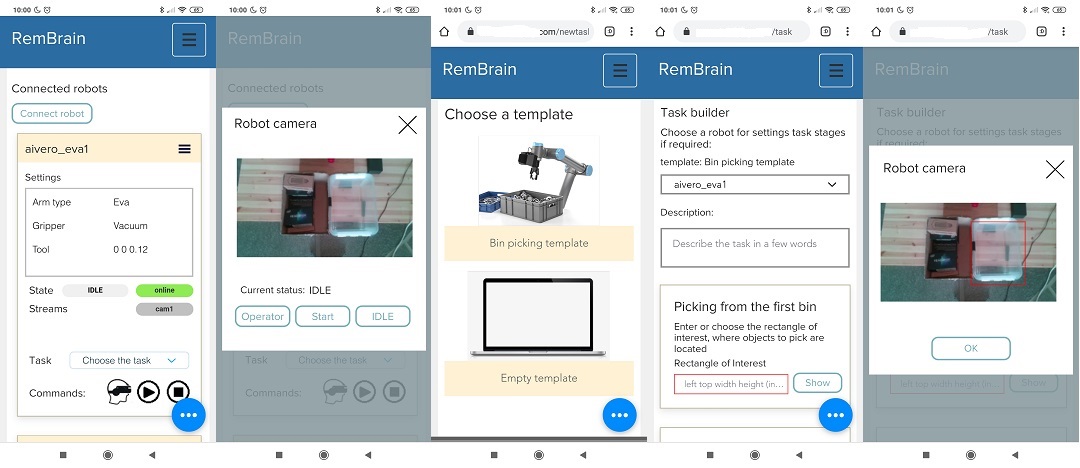

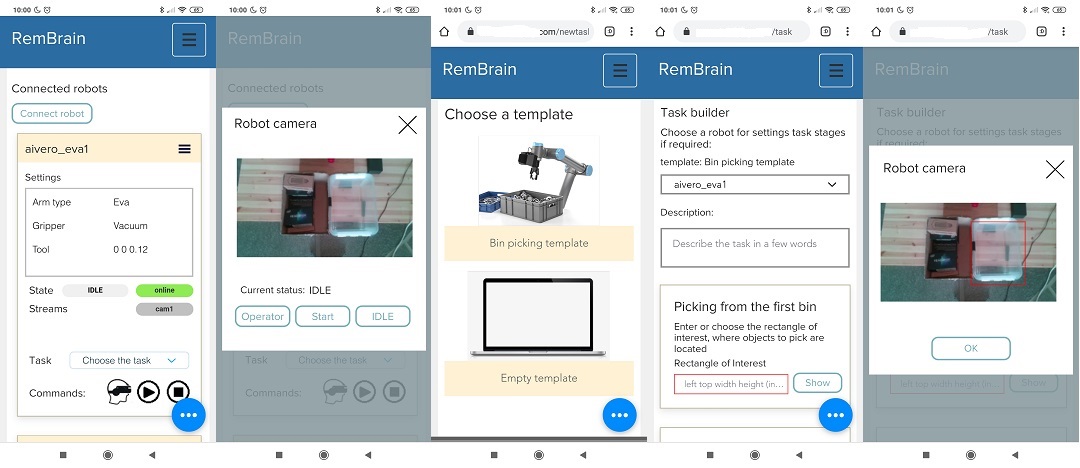

, “”. , UX . — . UI robot Console .

What's next

We are shooting a video of installing and setting up the robot in 2 minutes, and preparing promotional materials for several types of tasks.

At the same time, we are looking for new practical applications beyond the well-understood and popular bin-picking (personally, I dream of using robots on a construction site).

I think in a few months we

So the quarantine was good!