Life goes on. And we continue to introduce you to the most interesting news from PostgreSQL.

This time, we decided to slightly change the Postgresso format : now there is no information equality. We will talk about some releases and articles in more detail, others - in a couple of lines. The choice is subjective, constructive criticism is encouraged.

Releases

PostgreSQL 13 beta 3

There are changes in the 3rd beta compared to the 2nd beta, see the release page .

Along with Beta 3, updates were released: 12.4, 11.9, 10.14, 9.6.19 and 9.5.23. They closed two discovered security holes related to the search path (

search_path) for elements (tables, functions, operators, and so on) when creating extensions and during logical replication. More than two years ago, the CVE-2018-1058 vulnerability was found, which allows using the features of working with a variablesearch_path(it determines the order of searching in schemas when referring to database objects) to run malicious code. If this variable is used carelessly, the enemy can take control of the execution of queries and then run arbitrary SQL code with the rights of the attacked user. You can read about this, for example, here . These hazards have been explained, precautions have been listed. Now it turns out that measures are not enough for logical replication and when creating extensions.

Now, during logical replication, the send and receive processes will be executed with an empty string

search_path. When creating extensions, an attacker, if he had the right to create new objects in the extension, could run dangerous code under the superuser. Now extensions have been tweaked and created instructions for those who are going to develop new extensions.

For beta3 not follow beta4 : a release group, that is Jonathan Katz (Johnathan S. Katz), Alvaro Herrera (Alvaro Herrera) and Peter Geigy (Peter Peter Geoghegan) written on 2 September that, after analyzing unsecured items, you can immediately prepare release candidate 1(RC1) on September 17th. And, if no critical problems are found, on September 24, the main version of PostgreSQL 13 is already. Well , we have already written about how many new things are in the Devil's Dozen .

New Postgres Pro Enterprise / Standard releases Postgres Pro Enterprise

versions 12.4.1 , 11.9.1 , 10.14.1 , 9.6.19.1 , Standard 12.4.1 , 11.9.1 , 10.14.1 , 9.6.19.1 have been released .

Changes in Enterprise 12.4.1, for example:

- fixed a bug in the optimization of the scheduler, which led to an incorrect estimation of the number of rows when the parameter was enabled

enable_compound_index_stats; - pgpro_scheduler, ,

schedule.stop(); - rum: , ;

- BRIN;

- , ;

- multimaster , Postgres Pro Enterprise 11.8.1 ( Postgres Pro Enterprise);

- pg_probackup 2.4.2.

Last but not least (and for many, the most important):

12.4.1 and 11.9.1 are now able - thanks to the experience of Anton Doroshkevich (InfoSoft) and the efforts of Andrey Bille (Postgres Professional) - to configure the instance to work with 1C (

pg-setup inidb --tune=1C) during installation .

mamonsu 2.5.1

mamonsu is a monitoring agent for collecting operating system and Postgres metrics developed by Postgres Professional. The main thing in the new version is the final transition to python 3 due to the fact that support for python 2 is ending in 2020. There are also other updates. For example, two new plugins. The first plugin (it is named exactly the same: pg_probackup) allows you to monitor the size of the backup directories that store WAL and backup files created by the utilitypg_probackup .

The second plugin is called prepared_transaction and allows you to keep track of the age of the oldest prepared transaction in seconds and the number of all prepared transactions for a two-phase commit. This is important because in real life, a “hanging” prepared transaction often prevents a vacuum from working.

Also, the new plugins include triggers: to track the error status during backup and the age of the oldest prepared transaction, respectively.

Developers are asked to leave feedback, questions and suggestions for functionality, new metrics and parameters on the mamonsu github . The documentation can be viewed here .

Mamonsu has plugins that appear during installation (in the directory

mamonsu/plugins). They are all listed in the sample config file . During installation, this file is automatically substituted as the default configuration file. The metrics in a standard installation are listed in the middle of the README.rst file . But the user also has the opportunity to write the plugin himself. The structure of each python file that collects metrics consists of a specific set of functions.

The Zabbix blog contains an article transcribing the report of the developer Daria Vilkova (Postgres Professional) for Zabbix Meetup Online .

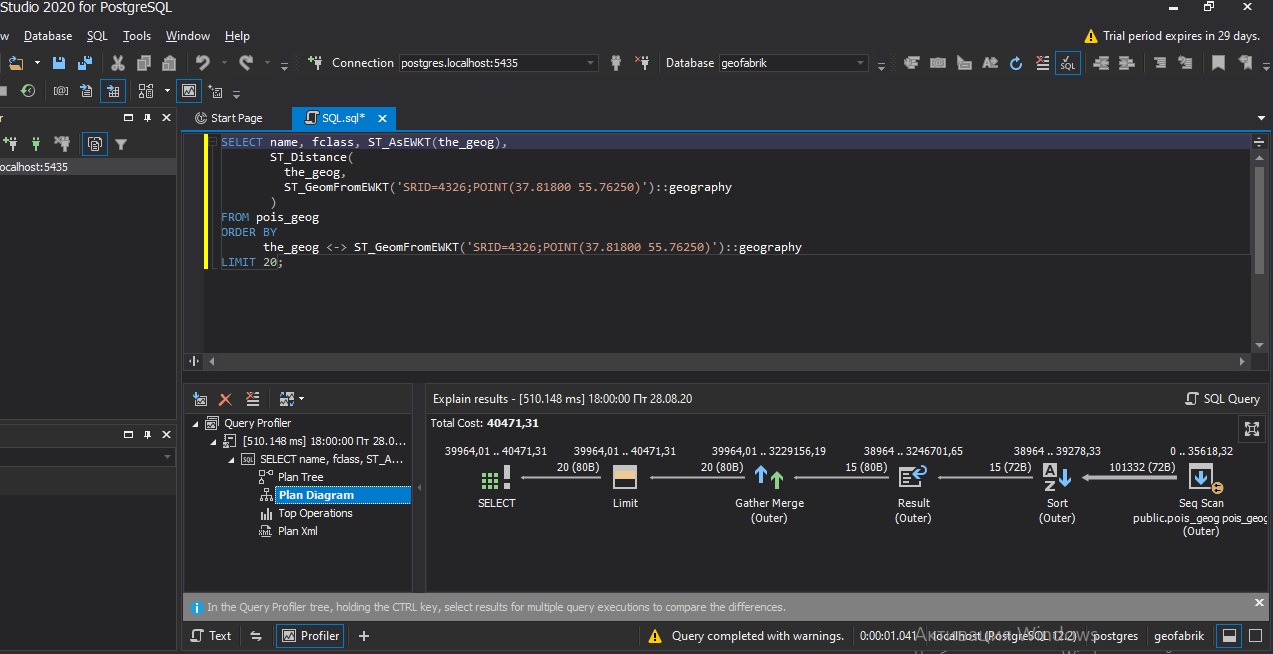

dbForge Studio for PostgreSQL v2.3

This is a graphical toolkit for working with SQL in Postgres under Windows: with a code editor, multi-colored formatting, a script generator and a profiler (you can see the profiler windows in the screenshots). Paid (trail - 30 days). The manufacturer of this studio, as well as the rather popular utility dbForge Data Compare for PostgreSQL, is Devart (head office in Prague, developers in Kharkov).

There are no revolutionary changes in the new version : for example, support for PostgreSQL 12 script generation appeared in 2.2, but a lot of amenities have been added.

The script generator, which creates the corresponding script in response to mouse actions, learned how to create them for: DROP / CREATE TABLE, DROP / CREATE SEQUENCE, DROP / CREATE, CREATE INDEX. Improved SQL formatting, now statements with CREATE TRIGGER, CREATE INDEX, CREATE SEQUENCE, CREATE TABLE, CREATE VIEW, CREATE MATERIALIZED VIEW, PROCEDURE \ FUNCTION look nice. The profiler learned to show the plan without executing the request.

pgagroal 0.8.1

In this version of the puller, the work with the Prometheus monitoring system has been improved : now pgagroal indicates the servers that have failed and shows errors on them. Pgagroal is released frequently . In the previous version - in version 0.8.0 - the puller learned to reconnect (failover) and support systemd. B 0.7.0- at the end of May - remote control appeared.

pg_dumpbinary 2.2 The

new version of Gilles Darold's program, which saves a dump in a binary format and restores the database with a command

pg_restorebinary, approaches the behavior of pg_dump itself: now tables and extension sequences registered by the function are dumped pg_extension_config_dump. A detailed release changer is here . You can download it from here .

PostGIS 3.0.2

Along with this version 2.5.5, 2.4.9 of the corresponding stable branches were released. Works with PostgreSQL 13 beta2 and below up to PostgreSQL 9.5 and GEOS later than 3.6. Correction of errors, fundamental changes are not visible.

pg_probackup 2.4.2

In this version of the utilitySUSE packages appeared. By the way, the Articles section has an outline for a whole series of articles about pg_probackup.

Foreign Data Wrapper for SQLite 1.2.1

Toshiba Software Engineering & Technology Center announces a new version. It works with PostgreSQL 9.6, 10, 11 and 12. Improved:

- added Limit / Sort pushdown to SQLite;

- SQLite type datetime numeric is converted to PostgreSQL TimeStamp

FDW supports:

SELECT, INSERT, UPDATE and DELETE on external tables; transactions.

Pushdowns:

- WHERE clauses;

- aggregation;

- ORDER BY;

- LIMIT and OFFSET (* in the case when all the tables being accessed are external);

Details are in the source repository .

Articles

PostgreSQL 14: Part 1 or "July Warm Up" (Commitfest 2020-07)

A thorough article, it reflects all the new commits, and many of them are illustrated with examples. And some of them belong to version 13. Now there have appeared and will appear articles devoted to individual or several innovations in PostgreSQL 14, but it is unlikely that there will be text concerning so many commits.

On the same topic, little by little:

by Hubert Lubashevsky (aka depesz) in the Waiting for PostgreSQL 14 series:

- Rename wal_keep_segments to wal_keep_size .

- pg_stat_statements: track number of rows processed by some utility commands .

- Improvements for handling large number of connections (a series of snapshot optimization patches) ..

So far there are 3 topics, but the continuation will undoubtedly follow.

How Lingualeo moved to PostgreSQL with 23 million users The

full title starts with "Quarantine load increased 5 times, but we were ready . " An article by the main backend development of Lingualeo, Oleg Pravdin, aroused the interest of layers wider than PostgreSQL sysadmins. At the time of Postgresso 24, there are already 772 comments with 44K views. Maybe because the article contains a lot about administrative transformations, search for performers, maybe due to the fact that during the transition to a new system, a certain amount (about 1%) of user data was deliberately banged. Well, holivars on the topics of NoSQL vs classics and logic within the base vs logic in applications started.

Lingualeo is a service with 23 million users in Russia, Turkey, Spain and Latin America who learn English with it, 100,000 concurrent users at its peak. Users have their own dictionaries (they were partially affected), Jungle , courses. It all worked in PHP / MySQL. Now all the logic has been transferred to the PL / pgSQL database.

The article does not contain a lot of technical details (they promise a whole series of articles in the near future), but they can be found in the comments under the article. For example, a new solution is described like this:

- the front tugs at the "shopping list" handle;

- a proxy service [in Go, balances requests to the Master and Slaves, plus provides interaction with external services] receives a request and pulls the corresponding storage in the database (at this point, flexible routing to the slaves is possible, for example);

- The storage generates a response in the form of json. The response contains an attribute with an instruction for the proxy service: "call the sms_sending microservice, here is a json for it with parameters";

- the proxy service executes the instruction;

- the proxy service sends a ready response to the front (items 4 and 5 can be executed in parallel, if independent).

Need to:

- develop storage (PL / pgSQL) for 50-100 lines;

- development and debugging time: 1 - 2 hours;

- response speed: 1 - 2 ms (if the data structure is correct);

The proxy service sends ready-made JSON to the front.

Colleagues suggested to me that, judging by this information, Lingualeo applied in practice an approach theoretically substantiated in articles such as Connecting Galaxies: Bridging the Gap Between Databases and Applications (co-author of the article, which, alas, is not available for free, Boris Asenovich Novikov , author of the textbook Basics of Database Technologies ) or earlier - Talking To The Database In A Semantically Rich Way... The bottom line is that a mismatch in data models between object-oriented applications and relational DBMSs can be fatal to the data. To overcome it, it is necessary to exchange not separate rows of separate tables, but composite objects (using, for example, JSON as a transport format).

In the Lingualeo article there is not a word about full-text search, there is only a task:

For the function of translating words on click to work, the text must be divided into words, expressions and phrases. Then - refer to the dictionary and display the translation in a new window over the text to the user. Backing sends ready-made JSON to the front, in which the text is already split into words and expressions.

But in a comment, the author explains:

We have simplified the entire system, excluding rabbit, kafka, elastic search, etc. FTS (full-text search) in PG allows you to find the necessary data in a database of millions of documents in 2-3 ms - more than enough for our tasks ...

... implemented in storage tokenization of texts with hieroglyphs (when there is a continuous list of hieroglyphs, no problem, and they must be correctly broken down into words and expressions, the dictionary is attached in the table). Storage with recursive CTE, 50 lines, took about an hour to develop. The processing speed is 20 times faster than a python script. And the code size is 10 times smaller.

We hope for clarification in upcoming articles.

Familiarity with pg_probackup. The first part

Alexander Nikitinfrom BARS Group wrote an article about this Postgres Professional utility. In the first part, he talks about backup. Further it will be about recovery. In general, it is planned to consider first of all 4 topics:

- creating offline backups on a separate server

- creating an archive of WAL files and creating backups in this mode

- deploying a replica from a backup and setting up creating backups from a replica

- various recovery options;

Database Backup Guide

Vladimir Komarov aka hard_sign examines backups in all major DBMSs (Oracle, DB2, MS SQL, MySQL, and in episodes MongoDB, Cassandra, Percona Server), including PostgreSQL, of course. In the chapter on incremental backups, several paragraphs are devoted to pg_probackup .

This article is part of a powerful series: A

Guide to Database Replication Criticality

Classification of Information Systems

Distributed Database Management Systems for the Enterprise .

Of the earlier ones, there is also an action-packed one: So what happened to Sberbank?

Why PostgreSQL 13 is a Lucky Release

Jonathan S. Katz (Crunchy Data) rightly believes that this release was not a decisive breakthrough in terms of increasing functionality, but that it is "a good thing for everyone" - everyone will find something for themselves, because of which is worth upgrading to PostgreSQL 13 immediately.

First of all, he thinks, is reducing the size of indexes (b-tree deduplication). On the demo, the gain is three times in volume and twice in performance. Further: the vacuum works faster due to the fact that the indexes, again, during table cleanup are processed in parallel, while the number of parallel processes is adjusted. Jonathan also notes incremental sorting as a particularly important feature. We remind you that in our articleAbout PostgreSQL 13, these points are considered in a little more detail (by the way, we also have a 3-fold gain in the volume of the index), but there are much more points there, which is an advantage and a disadvantage at the same time - depending on the reader's goal.

Avoiding the Pitfalls of BRIN Indexes in Postgres

John Porvaznik (Crunchy Data) for his examples generates a table with random data, then examines the structure of the BRIN (Block Range INdex) index using the pageinspect extension... To the question he asked himself "when should I use BRIN?", The author replies with the sentence "when the table is large insert-only in-order" and then dwells on the influence of each link of this construction. The index is gentle, delicate. An unexpected deviation in each of these links can negate all the charms of BRIN, so it is worth modeling the project well before launching it into production.

How we used Postgres extended statistics to achieve a 3000x speedup

An article on the website of Affinity, better known as a developer of design tools, talks about how their engineers solved the problem with a long response time on their website. The problem was that the optimizer radically missed the cardinality score - it waited for one record where there are thousands of them. The author - Jared Rulison - briefly and clearly explains the importance of correlations when collecting statistics, what unpleasant surprises accounting for correlations can come up with, how the optimizer can choose a completely non-optimal join type (which happened

nested loopinstead of hash join). To educate the optimizer, it is necessary to do some additional steps when collecting statistics.

Cardinality assessment is indeed one of the non-trivial tasks. In the last issueWe mentioned an article by our colleague Pavel Tolmachev from Postgres Professional Education Department: AQO - Adaptive Query Optimization in PostgreSQL . It talks about the intelligent (AI) module (extension) aqo, which in many cases helps the optimizer by successfully guessing the cardinality.

A Crash Course on PostgreSQL for R Users The

union of R and PostgreSQL is an infrequent topic. In a simple article and examples, a demo base of Flights from New York airports is used (there are> 12 million entries in flights14). Try the demobase of our airports - it is richer semantically. Joe Conway's plr extension(Joe Conway), which allows storing and executing custom R functions in the database, is not used. The author bypasses the usual connections using RPostgres. Used libraries Tidyverse, dplyr and others. There are some helpful links.

Building a recommendation engine inside Postgres with Python and Pandas

Craig Kirstiens of Crunchy Data decided to build a recommendation engine inside PostgreSQL, that is, using stored functions in

plpython3u.

He took a simple example of a Python engine with demodata, loaded the data into Postgres. Where there was a DataFrame type in Python, Craig uses Postgres arrays.

Data systems that learn to be better

Edam Conner-Simons(Adam Conner-Simons) of the Computer Science and Artificial Intelligence Laboratory (CSAIL, a lab inside MIT) writes about projects with sinister names: Tsunami and Bao (BAndit Optimizer).

Tsunami based on the theoretical paper of The pending Learned the Case for the Index Structures (link only abstract), written in 2017 by Professor MIT Tim Dye (Tim Kraska) and co-workers and colleagues from Google. The article then made a splash in the Postgres community. It said, "The idea is that the model can learn the sort order or structure of lookup keys and, based on this information, determine the optimal entry position in the index, or even need it."

"Is the Revolution Coming?" - asked Nikolay Samokhvalov... Few believed that learning indexes would actually replace B-trees, hash indexes, and Bloom filters. As a “sober view”, Oleg Bartunov , for example, cited a study where AI worked no better than spline interpolation :

The Case for B-Tree Index Structures by Thomas Neumann . None of the PostgreSQL developers, at any rate, have implemented workable learning indexes.

Paint, however, presented the Tsunami project within the walls of CSAIL. He claims that on tests it is possible to achieve an order of magnitude gain in query execution speed, and the data can be organized into sets of trained indices, reducing the total volume by two orders of magnitude (approximately as he predicted in that article).

In addition, with a different team led by Ryan Marcus, he participates in the Boa project (abstract), where, it is claimed, an optimizer fully integrated into PostgreSQL learns less than an hour from its own mistakes, and then makes a plan so that beats on performance open source and commercial DBMS.

And the goal of CSAIL is to combine these two projects into one that will work on existing cloud infrastructures such as Amazon's Redshift. Skeptics are not fooled: “Curious, I don’t know anyone who’s working on this,” writes Bruce Momjian . But Dmitry Dolgov saw reports of plans to implement Bao as an open source project, although no interaction with the Postgres community has yet been noticed.

Education

A new English version of "Baby" has been released - PostgreSQL: The First Experience

In this edition, examples on PostgreSQL 12. You can download the PDF for free from here . And the Russian version is here .

The clouds

Postgres Pro on Azure, Mail.ru and Yandex

Virtual machines with new minor versions of Postgres Pro released at the end of August have appeared in the Microsoft Azure cloud. There are Postgres Pro Enterprise and Postgres Pro Standard virtual machines of versions 9.6.19.1, 10.14.1, 11.9.1 and 12.4.1 (Postgres Pro Enterprise virtual machines of versions 10.14.1, 11.9.1 and 12.4.1 in two versions - with CentOS OS and Ubuntu OS).

In addition, Postgres Pro Standard versions 11 and 12 are offered in the Microsoft Azure cloud as Docker container images - both as part of a virtual machine and as a standalone container. Quite a few: 13 applications against a background of about 60related to Postgres. Including, for example, PostgreSQL Hosting: Fully Managed DBaaS on Azure .

Virtual machines with Postgres Pro Enterprise 11.9.1 and 12.4.1 are now available in Yandex.Cloud. They include pg_probackup , CFS , multimaster and other Enterprise features, as well as installed and configured Zabbix server and mamonsu agent . A guide to creating and using Postgres Pro Enterprise in Yandex.Cloud is here . Not long before that Postgres Pro appeared in the form of DBaaS in Mail.Ru Cloud Solutions - so far only with Postgres Pro Standard 11 .

Yandex also has its own PostgreSQL - Yandex Managed Service for PostgreSQL (clusters with versions 10, 11 and 12, as well as PostgreSQL 10 for 1C. There is a video about 1C performance in Yandex.Cloud in the Webinars section). ... There is a pretty impressive list of extensions . In general, comparing cloud offers is a useful and interesting activity, but not for this news review - there are too many options. Plus, cloud providers usually do not seek to immediately display technical information adequate for comparison.

Announcing pgBackRest for Azure: Fast, Reliable Postgres Backups

Craig Kerstiens talks about pgBackRest, which can now run in Azure clouds.

DB-Engines Ranking - Trend Popularity

This is a rating of cloud DBMSs according to a certain set of criteria . PostgreSQL sits behind Oracle, MySQL and Microsoft SQL Server. But if you look at the popularity curves , you can see that these three are standing still (and even falling a little), and our handsome man stubbornly climbs up (but MongoDB clings to his heels).

Webinars and meetups

#RuPostgre

Exactly at the beginning of the school year - September 1 - Nikolay Samokhvalov and Ilya Kosmodemyanskiy started anew season of RuPostgres-Tuesdayswith Intro . In the coming streams, the main focus will be on "development" topics: great and terrible SQL, complex queries, JSON, performance optimization, debugging, ORM, GraphQL, etc. etc. But they will try not to forget the "admin" themes. A document with information, where you can enter your wishes, is here .

1C webinar on Postgres in the

Yandex.Cloud cloud posted a video (about 30 minutes, for some reason starts at 9:35): Marat Mustafin, Head of the Development Center of Wise Advice Company - WiseAdvice (Yandex.Cloud's main 1C partner) - talks about load testing (22:00), hardware requirements, PostgreSQL settings (including disabling synchronous commit) on network and local SSDs, affecting performance, the dependence of the selected disk size on the speed, reliability and scalability of applications.

Everything was deployed and tested on a Windows cluster in the Yandex Managed Service for PostgreSQL, which includes their Odyssey puller. PostgreSQL version 10 (work is underway to upgrade to 11). Tests: 1C: ERP test center and synthetic Gilev test... Testing raises many questions. Marat says about the Gilev Test: the results are too ambiguous, and in general this is just the beginning of comprehensive testing.

Webinars 2ndQuadrant

JSON & ARRAY - Contemporary PostgreSQL Data Types

Held on September 2nd. Host - Boris ... - no, I don't know how to pronounce his surname: Boriss Mejías.

New Features in PostgreSQL 13

Coming soon on September 16th at 19:00. narrated by Peter Eisentraut

Conferences

pgDay Israel 2020 To

be held on September 10th in Tel Aviv.

Previous issues:

# 23 , # 22 , # 21 , # 20 , # 19 , # 18 , # 17 , # 16 , # 15 , # 14 , # 13 , # 12 , # 11 (special) , # 10 , # 9 , # 8 , # 7 , # 6 , # 5 , # 4 , # 3 , # 2 , # 1