Hello comrades!

Over the weekend the hackasborkaton took place: a race of self-driving model cars built on the Donkeycar kit, held with the support of X5, FLESS and the community of self-driving enthusiasts.

The task was as follows: first we had to assemble a car from parts, then train it to drive around the track. The winner was determined by the fastest 3 laps. Hitting a cone meant disqualification.

Although this is not a new task for machine learning, difficulties can lie in wait at every step: from Wi-Fi that refuses to work properly to a trained model that is unwilling to pilot the hardware along the track. And all of this on a tight schedule!

When we signed up for this competition, it was immediately clear that it would be very fun and very difficult, because we were given only 5 hours, including a lunch break, to assemble the car, record a dataset and train a model.

Donkey car

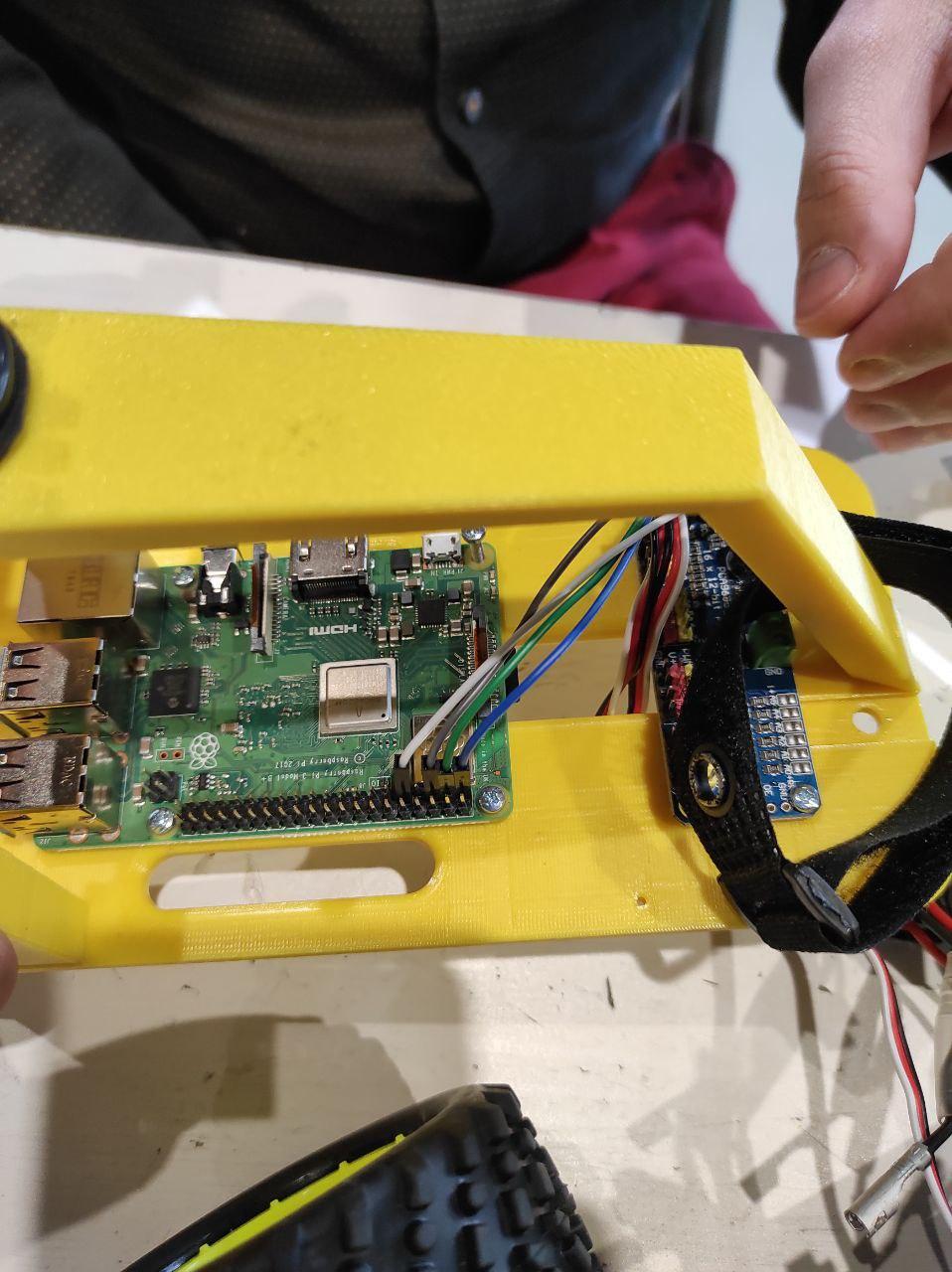

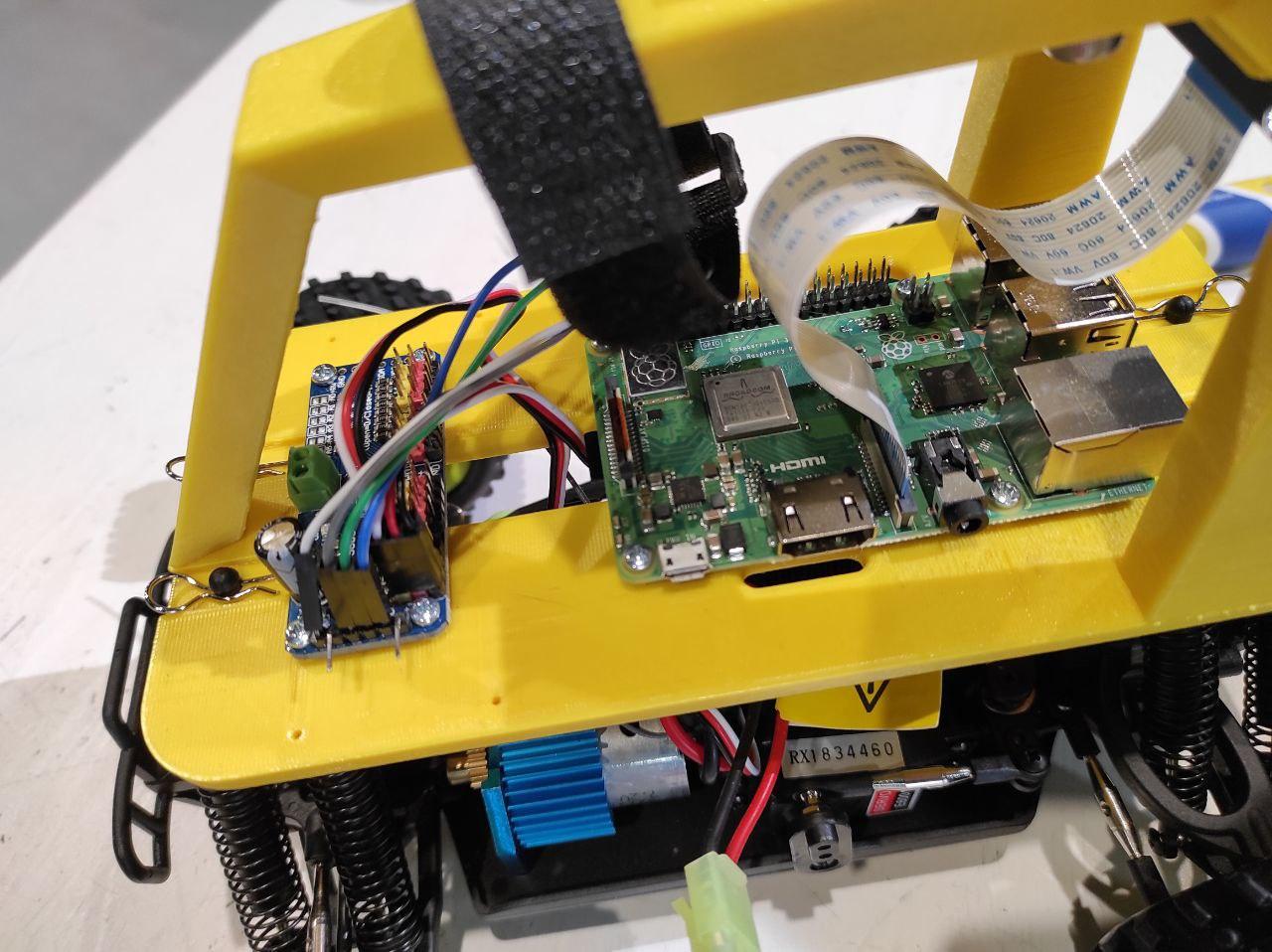

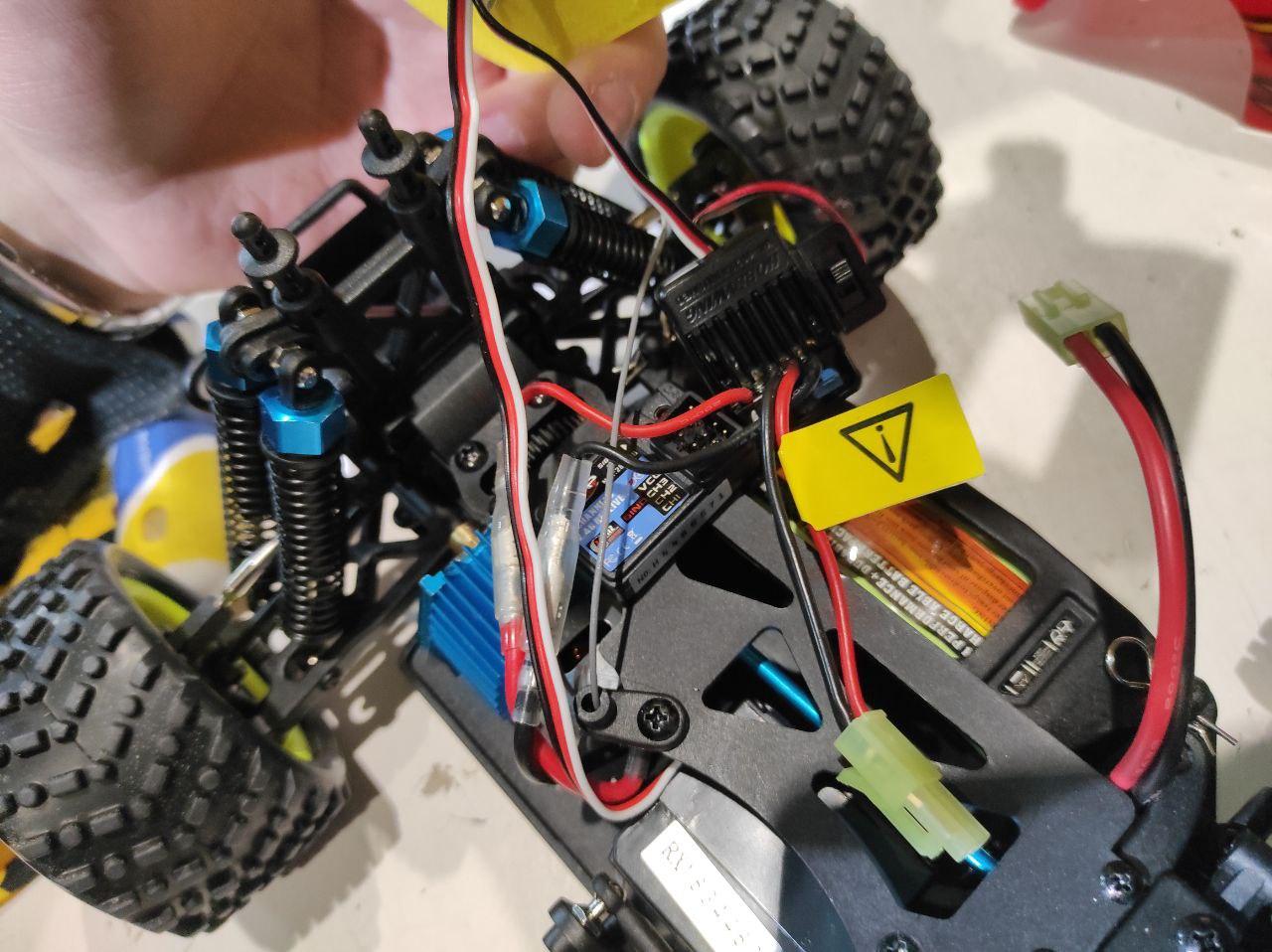

Donkeycar consists of a chassis carrying a camera with a wide-angle lens (170 degrees), a Raspberry Pi 3+, a servo control board and the software, and that is basically everything. But as it turned out later, with limited time and random hardware glitches, assembling even such a simple device can take so long that you run out of time.

Assembly

The competition began with disassembling the car and putting it back together. Credit to the organizers: instead of handing us an incomprehensible pile of parts to assemble from scratch, they gave us the chance to understand the device from a ready-made example. We saved a lot of time by photographing all the connections, and reassembled the car in 10 minutes.

Connecting to the car and checking that it works

After we assembled the car there was a pause, because we had to connect it to Wi-Fi before we could start calibrating the chassis. As it turned out, Wi-Fi would remain one of the biggest problems of working with the Raspberry; apparently we should have brought our own Wi-Fi with an antenna.

We decided not to sit idle and to connect over the Ethernet cable that, along with the rest of the junk, always lies around in my backpack. For some reason the car either had no DHCP server, or it did not work, or it was never supposed to be there at all, so we figured that Wireshark would easily pick up the Raspberry's IP address from its broadcast traffic once the cable was plugged in. And so it did, but after logging into the car we still spent quite a lot of time trying to get Wi-Fi working. In the end, all participants were sent a special file containing the config.

Calibrating the chassis and connecting the joystick

It took us about 35 minutes to connect the joystick: we read the docs and scanned Bluetooth, trying to pair the car with the joystick. The problem turned out to be that there were too many joysticks in the room and they paired at random with other teams' cars. It was great fun to discover that you were driving the chassis of some random car =)

The next step was to calibrate the steering and throttle, that is, the PWM values for turning and for gas.

These were among the most important parameters: the values had to correlate with the actual speed of the car so that the model could cope with the control.

Going on intuition, we tuned acceleration and steering so that the car would go fast enough but still be controllable.
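To make the calibration step more concrete, here is a minimal sketch of how a normalized control value in [-1, 1] can be mapped linearly to a PWM pulse value. The function `to_pwm` is hypothetical, the constant names merely follow the style of a Donkeycar config, and the numbers are made up for illustration; they are not the values we used on the car.

```python
# Illustrative sketch only: linear mapping from a normalized control value
# to a PWM pulse value. Names and numbers are assumptions, not our real config.

def to_pwm(value, low_pwm, mid_pwm, high_pwm):
    """Map value in [-1, 1] to a pulse: -1 -> low_pwm, 0 -> mid_pwm, 1 -> high_pwm."""
    value = max(-1.0, min(1.0, value))  # clamp out-of-range inputs
    if value >= 0:
        return round(mid_pwm + value * (high_pwm - mid_pwm))
    return round(mid_pwm + value * (mid_pwm - low_pwm))

# Hypothetical throttle calibration values.
THROTTLE_REVERSE_PWM = 220
THROTTLE_STOPPED_PWM = 370
THROTTLE_FORWARD_PWM = 500

# Half throttle forward with the calibration as intended.
half_throttle = to_pwm(0.5, THROTTLE_REVERSE_PWM, THROTTLE_STOPPED_PWM, THROTTLE_FORWARD_PWM)

# The same command with the forward and reverse values accidentally swapped:
# the pulse lands on the reverse side of the stopped value.
swapped = to_pwm(0.5, THROTTLE_FORWARD_PWM, THROTTLE_STOPPED_PWM, THROTTLE_REVERSE_PWM)

print(half_throttle, swapped)  # 435 295
```

The second call shows why this step is easy to get wrong under time pressure: mixing up the two end points silently turns "forward" into "backward".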

Only about 2 hours were left until the end of the event, including the teams' runs, so we urgently had to speed up. We ran off to record data, with the idea of capturing the most varied conditions the car could find itself in. We assumed that by the time the competition started, the lights would most likely be rearranged, foreign objects would appear next to the track, and so on.

We recorded about 18 thousand frames together with the throttle and steering values. Trying to get lots of people into the frame, we ran around the track, jumped over it, put out chairs, built bridges, moved the lights around at random and drove in the opposite direction.

We also added augmentations with albumentations, and tried to add as many of them as possible!

In this fork I shamelessly hardcoded heavy augmentations, with conversion from PIL and back. This also required rebuilding the environment on the car, which cost us time.

By the time the first model was trained, we already had the code for the second one. The guys brought new data from a neighboring track and ran off to check how the first model would drive.

The first model drove 3 laps with errors and flew off the track on the 4th. After that we lost another 20 minutes because we forgot to insert the SD card into the car.

The final model was trained on 19 thousand images with custom augmentation and data cleaning.

This is what the network itself looks like:

You can see there is room for maneuver: for a start you could at least drop in a batchnorm, but we decided to touch it as little as possible so as not to screw anything up.

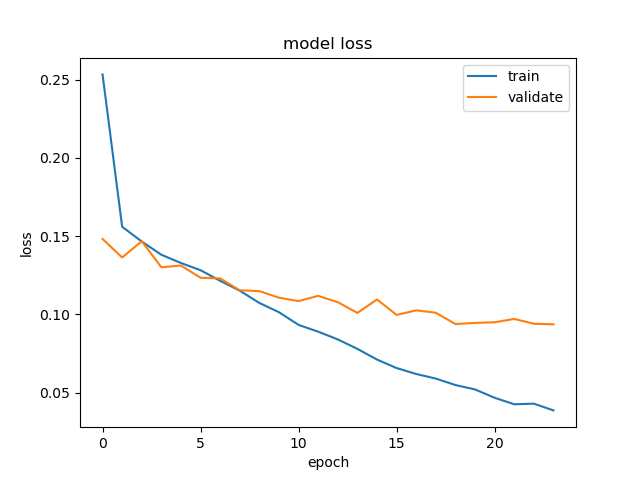

Below are the training curves of the first and second models, with best MSE losses of 0.093 and 0.086 respectively.

The second graph seems to look better!
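For readers who have not met the metric: MSE is the mean of the squared differences between what the model predicted and what was recorded while we drove. Below is a minimal sketch with made-up steering values; the helper `mse` and the data are purely illustrative, and whether the reported loss covers steering only or steering and throttle together is not something this example tries to reproduce.

```python
# Illustrative sketch of mean squared error on made-up steering values.

def mse(predicted, target):
    """Mean of squared differences between two equal-length sequences."""
    if len(predicted) != len(target) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(predicted)

# Hypothetical recorded steering values (from the joystick) and model outputs.
recorded_steering = [0.0, 0.5, -0.5, 1.0]
predicted_steering = [0.1, 0.4, -0.2, 0.7]

loss = mse(predicted_steering, recorded_steering)
print(round(loss, 3))  # 0.05
```

Because the errors are squared, a few large misses on sharp turns weigh more than many small wobbles on the straights.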

It is clear from the video that we calibrated the steering poorly and cleaned the dataset poorly, but it was enough for us.

Video from GoPro, which we recorded after the main launch:

The final

We were first to start and went out to the track, but trouble awaited us there: the Wi-Fi kept dropping and we were almost removed from the competition. And then, just as the start was about to be given, the car suddenly began to drive backwards. Apparently I mixed something up when calibrating the throttle.

But never mind: to the laughter of the whole audience it then went forward and held on for a respectable 8 or 9 laps around the track, weaving heavily, but still bringing us a well-deserved victory!

I try not to look into the frame.

Acknowledgments

Thanks to the ods.ai community, it is impossible to grow without it! Many thanks to my teammates: Valya Biryukova, Egor Urvanov (Urvanov), Roma Derbanosov (Yandex). We are looking forward to a video review from Viktor Rogulenko (FLESS).

PS: Special thanks to Valya Biryukova, who unfortunately had a temperature of 38.5 the day before the competition, but still helped a lot with the link.

Aurorai, llc
